Process Compose

Process Compose is a simple and flexible scheduler and orchestrator to manage non-containerized applications.

Why? Because sometimes you just don't want to deal with docker files, volume definitions, networks and docker registries.

Features:

- Processes execution (in parallel and/or serially)

- Processes dependencies and startup order

- Defining recovery policies

- Manual process [re]start

- Processes arguments bash or zsh style (or define your own shell)

- Per process and global environment variables

- Per process or global (single file) logs

- Health checks (liveness and readiness)

- Terminal User Interface (TUI) or CLI modes

- Forking (services or daemons) processes

- REST API (OpenAPI a.k.a Swagger)

- Logs caching

- Functions as both server and client

- Configurable shortcuts (see Wiki)

- Merge Configuration Files

- Namespaces

- Run Multiple Replicas of a Process 🔥 NEW!

It is heavily inspired by docker-compose, but without the need for containers. The configuration syntax tries to follow the docker-compose specifications, with a few minor additions and lots of subtractions.

Quick Start

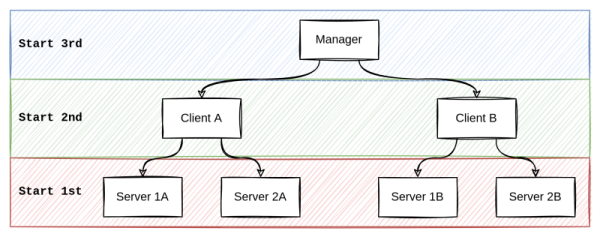

Imaginary system diagram:

process-compose.yaml definitions for the system above:

version: "0.5"

environment:
  - "GLOBAL_ENV_VAR=1"
log_location: /path/to/combined/output/logfile.log
log_level: debug

processes:
  Manager:
    command: "/path/to/manager"
    availability:
      restart: "always"
    depends_on:
      ClientA:
        condition: process_started
      ClientB:
        condition: process_started

  ClientA:
    command: "/path/to/ClientA"
    availability:
      restart: "always"
    depends_on:
      Server_1A:
        condition: process_started
      Server_2A:
        condition: process_started
    environment:
      - "LOCAL_ENV_VAR=1"

  ClientB:
    command: "/path/to/ClientB -some -arg"
    availability:
      restart: "always"
    depends_on:
      Server_1B:
        condition: process_started
      Server_2B:
        condition: process_started
    environment:
      - "LOCAL_ENV_VAR=2"

  Server_1A:
    command: "/path/to/Server_1A"
    availability:
      restart: "always"

  Server_2A:
    command: "/path/to/Server_2A"
    availability:
      restart: "always"

  Server_1B:
    command: "/path/to/Server_1B"
    availability:
      restart: "always"

  Server_2B:
    command: "/path/to/Server_2B"
    availability:
      restart: "always"

Finally, run process-compose in the process-compose.yaml directory. Or give it a direct path:

process-compose -f /path/to/process-compose.yaml

Installation

- Go to the releases, download the package for your OS, and copy the binary to somewhere on your PATH.

- If you have the Nix package manager installed with Flake support, just run:

# to use the latest binary release

nix run nixpkgs/master#process-compose -- --help

# or to compile from the latest source

nix run github:F1bonacc1/process-compose -- --help

To use process-compose declaratively configured in your project flake.nix, check out process-compose-flake.

Brew (macOS and Linux)

brew install f1bonacc1/tap/process-compose

Documentation

- See examples of workflows for best practices

- See below

Features

Launcher

Parallel

process1:
  command: "sleep 3"
process2:
  command: "sleep 3"

Serial

process1:
  command: "sleep 3"
  depends_on:
    process2:
      condition: process_completed_successfully # or "process_completed" if you don't care about errors
process2:
  command: "sleep 3"
  depends_on:
    process3:
      condition: process_completed_successfully # or "process_completed" if you don't care about errors

Multiple Replicas of a Process

You can run multiple replicas of a process by adding the processes.process_name.replicas parameter (default: 1):

processes:
  process_name:
    command: "sleep 2"
    log_location: ./log_file.{PC_REPLICA_NUM}.log # <- {PC_REPLICA_NUM} will be replaced with replica number. If more than one replica and PC_REPLICA_NUM is not specified, the replica number will be concatenated to the file end.
    replicas: 2 # <- NEW

To scale a process on the fly from the CLI:

process-compose process scale process_name 3

To scale a process on the fly from the TUI: press F2, either in the regular TUI or in Process Compose client mode (process-compose attach).

Note: Starting multiple processes that use the same port will fail. Please use the injected PC_REPLICA_NUM environment variable to increment the used port number.
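As a minimal sketch of the note above, the replica number can be added to a base port inside the command itself. This assumes the default bash backend (needed for the $((...)) arithmetic) and that the expression reaches the shell unmodified. The process name web, the python3 -m http.server command and the base port 8000 are illustrative choices, not part of Process Compose.

```yaml
processes:
  web:
    # Hypothetical server: each replica listens on 8000 plus its own replica number.
    # Assumption: bash evaluates the arithmetic expansion when the replica starts.
    command: "python3 -m http.server $((8000 + PC_REPLICA_NUM))"
    replicas: 2
```

If the process needs a more involved port scheme, the same arithmetic can live in a wrapper script that reads PC_REPLICA_NUM from its environment.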

Specify a working directory

process1:
  command: "ls -laF --color=always"
  working_dir: "/path/to/your/working/directory"

Make sure that you have the proper access permissions to the specified working_dir. If not, the command will fail with a permission denied error. The process status in TUI will be Error.

Define process dependencies

process2:
  depends_on:
    process3:
      condition: process_completed_successfully
    process4:
      condition: process_completed_successfully

There are 4 condition types that can be used in process dependencies:

- process_completed - is the type for waiting until a process has been completed (any exit code)

- process_completed_successfully - is the type for waiting until a process has been completed successfully (exit code 0)

- process_healthy - is the type for waiting until a process is healthy

- process_started - is the type for waiting until a process has started (default)
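To illustrate the process_healthy condition, here is a minimal sketch in which a client waits until a server passes its readiness probe (probes are described in the Health Checks section). The process names, commands and port 8081 are placeholders; only configuration keys that appear in this document are used.

```yaml
processes:
  server:
    command: "/path/to/server"      # placeholder command
    readiness_probe:
      http_get:
        host: 127.0.0.1
        scheme: http
        path: "/"
        port: 8081                  # assumed port of the placeholder server
      initial_delay_seconds: 2
      period_seconds: 5
  client:
    command: "/path/to/client"      # placeholder command
    depends_on:
      server:
        condition: process_healthy  # wait for the server to be reported healthy
```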

Run only specific processes

For testing and debugging purposes, especially when your process-compose.yaml file contains many processes, you might want to specify only a subset of processes to run. For example:

#process-compose.yaml
process1:
  command: "echo 'Hi from Process1'"
  depends_on:
    process2:
      condition: process_completed_successfully
process2:
  command: "echo 'Hi from Process2'"
process3:
  command: "echo 'Hi from Process3'"

process-compose up # will run all the processes - equal to 'process-compose'
#output:
#Hi from Process3
#Hi from Process2
#Hi from Process1

process-compose up process1 process3 # will run 'process1', 'process3' and all of their dependencies - 'process2'
#output:
#Hi from Process3
#Hi from Process2
#Hi from Process1

process-compose up process1 process3 --no-deps # will run 'process1', 'process3' without any dependencies
#output:
#Hi from Process3
#Hi from Process1

Termination Parameters

nginx:
  command: "docker run --rm --name nginx_test nginx"
  shutdown:
    command: "docker stop nginx_test"
    timeout_seconds: 10 # default 10
    signal: 15 # default 15, but only if the 'command' is not defined or empty
    parent_only: no # default no. If yes, only signal the running process instead of its whole process group

shutdown is optional and can be omitted. The default behavior in this case: SIGTERM is issued to the process group of the running process.

If only shutdown.signal is defined (valid values are 1..31), the running process group will be terminated with that signal.

If shutdown.parent_only is yes, the signal is only sent to the running process and not to the whole process group.

In case the shutdown.command is defined:

- The shutdown.command is executed with all the Environment Variables of the primary process

- Wait for shutdown.timeout_seconds for its completion (if not defined wait for 10 seconds)

- In case of timeout, the process group will receive the SIGKILL signal (irrespective of the shutdown.parent_only option).

Background (detached) Processes

nginx:
  command: "docker run -d --rm --name nginx_test nginx" # note the '-d' for detached mode
  is_daemon: true # this flag is required for background processes (default false)
  shutdown:
    command: "docker stop nginx_test"
    timeout_seconds: 10 # default 10
    signal: 15 # default 15, but only if command is not defined or empty

- For processes that start services / daemons in the background, please use the is_daemon flag set to true.

- In case a process is a daemon, it will be considered running until stopped.

- Daemon processes can only be stopped with the $PROCESSNAME.shutdown.command as in the example above.

Output Handling

- Show process name

- Different colors per process

- StdErr is printed in Red

TUI (Terminal User Interface)

- Review processes status

- Start processes (only completed or disabled)

- Stop processes

- Review logs

TUI is the default run mode, but it's possible to disable it:

./process-compose -t=false

Control the UI log buffer size:

log_level: info
log_length: 1200 #default: 1000
processes:
  process2:
    command: "ls -R /"

Note: Using too large a buffer will put a significant penalty on your CPU.

By default process-compose uses the standard ANSI colors mode to display logs. However, you can disable it for each process:

processes:
  process_name:
    command: "ls -R /"
    disable_ansi_colors: true #default false

Note: Log lines that are too long (above 2^16 bytes) can cause the log collector to hang.

Disabled Processes

Process execution can be disabled:

processes:
  process_name:
    command: "ls -R /"
    disabled: true #default false

Even if disabled, the process is still listed in the TUI and the REST client, and it can be started manually when needed.

Processes State Columns Sorting

Sorting is performed by pressing shift + the letter that appears in () next to the column title. Pressing the same combination again will reverse the sort order.

For example: To sort by process AGE(A) press shift+A

Logger

Per Process Log Collection

process2:
  log_location: ./pc.process2.log #if undefined or empty no logs will be saved

Captures StdOut and StdErr output

Merge into a single file (Unified Logging)

environment:
  - "ABC=42"
log_location: ./pc.global.log #if undefined or empty, no logs will be saved (if not defined per process)
processes:
  process2:
    command: "chmod 666 /path/to/file"

Process compose console log level

log_level: info # other options: "trace", "debug", "info", "warn", "error", "fatal", "panic"
processes:
  process2:
    command: "chmod 666 /path/to/file"

This setting controls the process-compose log level. The log level of each process should be defined inside the process itself. It is recommended to support this definition with an environment variable in process-compose.yaml.
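A minimal sketch of that recommendation follows. It assumes the application reads its own log level from an environment variable; the name APP_LOG_LEVEL and the command path are hypothetical and must match whatever the application actually supports.

```yaml
log_level: info                 # log level of process-compose itself
processes:
  process2:
    command: "/path/to/app"     # placeholder command
    environment:
      - "APP_LOG_LEVEL=debug"   # hypothetical variable, read by the application, not by process-compose
```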

Log Rotation

# unified log
version: "0.5"
log_level: info
log_location: /tmp/pc.log
log_configuration:
  rotation:
    max_size_mb: 1 # the max size in MB of the logfile before it's rolled
    max_age_days: 3 # the max age in days to keep a logfile
    max_backups: 3 # the max number of rolled files to keep
    compress: true # determines if the rotated log files should be compressed using gzip. The default is false

# process level logging (same syntax)
processes:
  someProc:
    command: "some command"
    log_configuration:
      rotation:
        max_size_mb: 1 # the max size in MB of the logfile before it's rolled
        max_age_days: 3 # the max age in days to keep a logfile
        max_backups: 3 # the max number of rolled files to keep
        compress: true # determines if the rotated log files should be compressed using gzip. The default is false

Logger Configuration

log_configuration:
  fields_order: ["time", "level", "message"] # order of logging fields. The default is time, level, message
  disable_json: true # output as plain text. The default is false
  timestamp_format: "06-01-02 15:04:05.000" # timestamp format. The default is RFC3339
  no_metadata: true # don't log process name and replica number
  add_timestamp: true # add timestamp to the logger. Default is false
  no_color: true # disable ANSI colors in the logger. Default is false

| Parameter Name | Description | Depends On | Default Value |
|---|---|---|---|
| fields_order | Order of the logging fields. The default is time, level, message. In case one of the fields is omitted, it will be missing in the log as well. | disable_json: true; add_timestamp: true for "time" | ["time", "level", "message"] |
| disable_json | Disables JSON logging format. Use Console Mode Format. | | false |
| timestamp_format | Sets the format of the logger timestamp. | add_timestamp: true | If disable_json: true: 3:04PM. If disable_json: false: "2006-01-02T15:04:05Z07:00" |
| no_metadata | Don't log the process name and replica number. | | false |
| add_timestamp | Add timestamp to the logger. Useful for processes without an internal logger. | | false |
| no_color | Disable ANSI colors in the log file. | disable_json: true | false |

Process Compose Internal Log

Default log location: /tmp/process-compose-$USER.log

Tip: It is recommended to add the following process configuration to your process-compose.yaml:

processes:
  pc_log:
    command: "tail -f -n100 process-compose-${USER}.log"
    working_dir: "/tmp"

This will allow you to spot any issues with the processes execution, without leaving the process-compose TUI.

Health Checks

Many applications running for long periods of time eventually transition to broken states, and cannot recover except by being restarted. Process Compose provides liveness and readiness probes to detect and remedy such situations.

Probes configuration and functionality are designed to work similarly to Kubernetes liveness and readiness probes.

Liveness Probe

nginx:
  command: "docker run -d --rm -p80:80 --name nginx_test nginx"
  is_daemon: true
  shutdown:
    command: "docker stop nginx_test"
    signal: 15
    timeout_seconds: 5
  liveness_probe:
    exec:
      command: "[ $(docker inspect -f '{{.State.Running}}' nginx_test) = 'true' ]"
      working_dir: /tmp # if not specified the process working dir will be used
    initial_delay_seconds: 5
    period_seconds: 2
    timeout_seconds: 5
    success_threshold: 1
    failure_threshold: 3

Readiness Probe

nginx:
  command: "docker run -d --rm -p80:80 --name nginx_test nginx"
  is_daemon: true
  shutdown:
    command: "docker stop nginx_test"
  readiness_probe:
    http_get:
      host: 127.0.0.1
      scheme: http
      path: "/"
      port: 80
    initial_delay_seconds: 5
    period_seconds: 10
    timeout_seconds: 5
    success_threshold: 1
    failure_threshold: 3

Each probe type (liveness_probe or readiness_probe) can be configured to use one of the 2 mutually exclusive modes:

- exec: Will run a configured command and, based on the exit code, decide if the process is in a correct state. 0 indicates success. Any other value indicates failure.

- http_get: For an HTTP probe, Process Compose sends an HTTP request to the specified path and port to perform the check. Response code 200 indicates success. Any other value indicates failure.

  - host: Host name to connect to.

  - scheme: Scheme to use for connecting to the host (HTTP or HTTPS). Defaults to HTTP.

  - path: Path to access on the HTTP server. Defaults to /.

  - port: Number of the port to access on the process. The number must be in the range 1 to 65535.

Configure Probes

Probes have a number of fields that you can use to control the behavior of liveness and readiness checks more precisely:

- initial_delay_seconds: Number of seconds after the process has started before liveness or readiness probes are initiated. Defaults to 0 seconds. The minimum value is 0.

- period_seconds: How often (in seconds) to perform the probe. Defaults to 10 seconds. The minimum value is 1.

- timeout_seconds: Number of seconds after which the probe times out. Defaults to 1 second. The minimum value is 1.

- success_threshold: Minimum consecutive successes for the probe to be considered successful after failing. Defaults to 1. Must be 1 for liveness and startup Probes. The minimum value is 1. Note: this value is not respected and was added as a placeholder for future implementation.

- failure_threshold: When a probe fails, Process Compose will try failure_threshold times before giving up. Giving up in case of a liveness probe means restarting the process. In case of a readiness probe, the process will be marked as not ready. Defaults to 3. The minimum value is 1.

Auto Restart if not Healthy

To ensure that the process is restarted (and not transitioned to a completed state) when a readiness check fails, please make sure to define the availability configuration. For background (is_daemon=true) processes, the restart policy should be always.
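As a sketch of this advice, the nginx example from the Liveness Probe section can be extended with an availability block. The probe values are copied from that example; adding restart: "always" is the only change, and the exact restart timing is not specified here.

```yaml
nginx:
  command: "docker run -d --rm -p80:80 --name nginx_test nginx"
  is_daemon: true
  availability:
    restart: "always"   # recommended policy for background (is_daemon) processes
  shutdown:
    command: "docker stop nginx_test"
  liveness_probe:
    exec:
      command: "[ $(docker inspect -f '{{.State.Running}}' nginx_test) = 'true' ]"
    period_seconds: 2
    failure_threshold: 3
```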

Auto Restart on Exit

process2:
  availability:
    restart: on_failure # other options: "exit_on_failure", "always", "no" (default)
    backoff_seconds: 2 # default: 1
    max_restarts: 5 # default: 0 (unlimited)

Terminate Process Compose on Failure

There are cases when you might want process-compose to terminate immediately when one of the processes exits with a non-zero exit code. This can be useful when you would like to perform "pre-flight" validation checks on the environment.

To achieve that, use the exit_on_failure restart policy. If defined, process-compose will gracefully shut down all the other running processes and exit with the same exit code as the failed process.

sanitycheck:
  command: "which go"
  availability:
    restart: "exit_on_failure"
other_proc:
  command: "go test ./..."
  depends_on:
    sanitycheck:
      condition: process_completed_successfully

Terminate Process Compose once given process ends

There are cases when you might want process-compose to terminate immediately when one of the processes exits (regardless of the exit code). For example when running tests that depend on other processes like databases etc. You might want the processes, on which the test process depends, to start first, then run the tests, and finally terminate all processes once the test process exits, reporting the code returned by the test process.

To achieve that, set availability.exit_on_end to true, and process-compose will gracefully shut down all the other running processes and exit with the same exit code as the given process.

tests:
  command: tests-run
  availability:
    # NOTE: `restart: exit_on_failure` is not needed since
    # exit_on_end implies it.
    exit_on_end: true
  depends_on:
    redis:
      condition: process_healthy
    postgres:
      condition: process_healthy
redis:
  command: redis-start
  readiness_probe:
    exec:
      command: redis-health-check
postgres:
  command: postgres-start
  readiness_probe:
    exec:
      command: postgres-health-check

💡 Setting restart: exit_on_failure together with exit_on_end: true is not needed, as the latter causes termination regardless of the exit code. However, it might sometimes be useful to combine exit_on_end with restart: on_failure and max_restarts in case you want the process to recover from failure and only cause termination on success.

💡 exit_on_end can be set on more than one process, for example when running multiple tasks in parallel and wishing to terminate as soon as any one finishes.
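The combination mentioned in the first tip could look like the sketch below. The tests-run command is the placeholder used above, and max_restarts: 3 is an arbitrary value chosen for illustration.

```yaml
tests:
  command: tests-run
  availability:
    restart: on_failure   # retry the process when it fails
    max_restarts: 3       # arbitrary retry limit for this sketch
    exit_on_end: true     # terminate process-compose once the process ends
```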

Environment Variables

Per Process

process2:
  environment:
    - "I_AM_LOCAL_EV=42"

Global

processes:
  process2:
    command: "chmod 666 /path/to/file"
    environment:
      - "I_AM_LOCAL_EV=42"
environment:
  - "I_AM_GLOBAL_EV=42"

Default environment variables:

PC_PROC_NAME - Defines the process name as defined in the process-compose.yaml file.

PC_REPLICA_NUM - Defines the process replica number. Useful for port collision avoidance for processes with multiple replicas.

REST API

A convenient Swagger API is provided: http://localhost:8080

Default port is 8080. Specify your own port:

process-compose -p 8080

Alternatively use PC_PORT_NUM environment variable:

PC_PORT_NUM=8080 process-compose

Client Mode

Process compose can also connect to itself as a client. Available commands:

Processes List

process-compose process list #lists available processes

Process Start

process-compose process start [PROCESS] #starts one of the available non running processes

Process Stop

process-compose process stop [PROCESS] #stops one of the running processes

Process Restart

process-compose process restart [PROCESS] #restarts one of the available processes

Restart will wait process.availability.backoff_seconds seconds between stop and start of the process. If not configured the default value is 1s.

By default, the client will try to use the default port 8080 and default address localhost to connect to the locally running instance of process-compose. You can provide different values:

process-compose -p PORT process -a ADDRESS list

Client in TUI Mode

For situations when process-compose was started in headless mode -t=false, another process-compose instance (client) can run in a fully remote TUI mode:

process-compose attach

The client can connect to a remote server, docker container, headless and TUI process-compose instances.

In remote mode, the Process Compose logo changes from 🔥 to ⚡ and the server hostname is shown instead of the local hostname.

Configuration

Support .env file

Override ${var} and $var from environment variables or .env values

Specify which configuration files to use

process-compose -f "path/to/process-compose-file.yaml"

Auto discover configuration files

The following discovery order is used: compose.yml, compose.yaml, process-compose.yml, process-compose.yaml. If multiple files are present the first one will be used.

Merge 2 or more configuration files with override values

process-compose -f "path/to/process-compose-file.yaml" -f "path/to/process-compose-override-file.yaml"

Using multiple process-compose files lets you customize a process-compose application for different environments or different workflows.

See the process-compose wiki for more information on Multiple Compose Files.
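As a rough sketch of an override setup, the two files below are shown as two YAML documents separated by ---; in practice each would be its own file passed with -f. The process name api and its command are placeholders, and the sketch assumes that scalar values from the later file replace those from the earlier one (the wiki documents the exact merge rules).

```yaml
# process-compose.yaml (base file, passed first)
log_level: info
processes:
  api:
    command: "/path/to/api"             # placeholder command
---
# process-compose.override.yaml (passed last, assumed to take precedence)
log_level: debug
processes:
  api:
    command: "/path/to/api --verbose"   # hypothetical flag, for illustration only
```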

Namespaces

Assigning namespaces to processes allows better grouping and sorting, especially in TUI:

processes:
  process1:
    command: "tail -f -n100 process-compose-${USER}.log"
    working_dir: "/tmp"
    namespace: debug # if not defined 'default' namespace is automatically assigned to each process

Note: By default, process-compose will start processes from all the configured namespaces. To start a subset of the configured namespaces (ns1, ns2, ns3):

process-compose -n ns1 -n ns3 # will start only ns1 and ns3. ns2 namespace won't run and won't be visible in the TUI

Multi-platform

Linux

The default backend is bash. You can define a different backend with a COMPOSE_SHELL environment variable.

Windows

The default backend is cmd. You can define a different backend with a COMPOSE_SHELL environment variable.

process1:
  command: "python -c print(str(40+2))"
  #note that the same command for bash/zsh would look like: "python -c 'print(str(40+2))'"

Using powershell backend had some funky behavior (like missing command1 && command2 functionality in older versions). If you need to run powershell scripts, use the following syntax:

process2:
  command: "powershell.exe ./test.ps1 arg1 arg2 argN"

macOS

The default backend is bash. You can define a different backend with a COMPOSE_SHELL environment variable.

Configurable Backend

For cases where your process compose requires a non-default or transferable backend definition, setting an environment variable won't do. For that, you can configure it directly in the process-compose.yaml file:

version: "0.5"
shell:
  shell_command: "python3"
  shell_argument: "-m"
processes:
  http:
    command: "server.py"

Note: please make sure that the shell.shell_command value is in your $PATH

How to Contribute

- Fork it

- Create your feature branch (git checkout -b my-new-feature)

- Commit your changes (git commit -am 'Add some feature')

- Push to the branch (git push origin my-new-feature)

- Create new Pull Request

English is not my native language, so PRs correcting grammar or spelling are welcome and appreciated.

Consider supporting the project ❤️

GitHub (preferred)

https://github.com/sponsors/F1bonacc1

Bitcoin

3QjRfBzwQASQfypATTwa6gxwUB65CX1jfX


Thank You!