Mirror of https://github.com/Sygil-Dev/sygil-webui.git (synced 2024-12-14 22:13:41 +03:00)

Merge branch 'dev' into dependabot/pip/dev/fairscale-0.4.12


commit 8bfd91bb4d

.idea/.gitignore (vendored, 11 lines changed)

```diff
@@ -1,11 +0,0 @@
-# Default ignored files
-/shelf/
-/workspace.xml
-# Editor-based HTTP Client requests
-/httpRequests/
-# Datasource local storage ignored files
-/dataSources/
-/dataSources.local.xml
-
-*.pyc
-.idea
```

```diff
@@ -34,7 +34,6 @@ maxMessageSize = 200
 enableWebsocketCompression = false

 [browser]
-serverAddress = "localhost"
 gatherUsageStats = false
 serverPort = 8501

```

README.md (134 lines changed)

```diff
@@ -1,41 +1,45 @@
 # <center>Web-based UI for Stable Diffusion</center>

-## Created by [sd-webui](https://github.com/sd-webui)
+## Created by [Sygil.Dev](https://github.com/sygil-dev)

-## [Visit sd-webui's Discord Server](https://discord.gg/gyXNe4NySY) [](https://discord.gg/gyXNe4NySY)
+## [Join us at Sygil.Dev's Discord Server](https://discord.gg/gyXNe4NySY) [](https://discord.gg/gyXNe4NySY)

 ## Installation instructions for:
-- **[Windows](https://sd-webui.github.io/stable-diffusion-webui/docs/1.windows-installation.html)**
-- **[Linux](https://sd-webui.github.io/stable-diffusion-webui/docs/2.linux-installation.html)**
+- **[Windows](https://sygil-dev.github.io/sygil-webui/docs/1.windows-installation.html)**
+- **[Linux](https://sygil-dev.github.io/sygil-webui/docs/2.linux-installation.html)**

 ### Want to ask a question or request a feature?

-Come to our [Discord Server](https://discord.gg/gyXNe4NySY) or use [Discussions](https://github.com/sd-webui/stable-diffusion-webui/discussions).
+Come to our [Discord Server](https://discord.gg/gyXNe4NySY) or use [Discussions](https://github.com/sygil-dev/sygil-webui/discussions).

 ## Documentation

-[Documentation is located here](https://sd-webui.github.io/stable-diffusion-webui/)
+[Documentation is located here](https://sygil-dev.github.io/sygil-webui/)

 ## Want to contribute?

 Check the [Contribution Guide](CONTRIBUTING.md)

-[sd-webui](https://github.com/sd-webui) is:
+[Sygil-Dev](https://github.com/Sygil-Dev) main devs:

 *  [hlky](https://github.com/hlky)
 * [ZeroCool940711](https://github.com/ZeroCool940711)
 * [codedealer](https://github.com/codedealer)

 ### Project Features:

-* Two great Web UI's to choose from: Streamlit or Gradio
-* No more manually typing parameters, now all you have to do is write your prompt and adjust sliders
 * Built-in image enhancers and upscalers, including GFPGAN and realESRGAN

+* Generator Preview: See your image as its being made

 * Run additional upscaling models on CPU to save VRAM
-* Textual inversion 🔥: [info](https://textual-inversion.github.io/) - requires enabling, see [here](https://github.com/hlky/sd-enable-textual-inversion), script works as usual without it enabled
-* Advanced img2img editor with Mask and crop capabilities
-* Mask painting 🖌️: Powerful tool for re-generating only specific parts of an image you want to change (currently Gradio only)
-* More diffusion samplers 🔥🔥: A great collection of samplers to use, including:
-- `k_euler` (Default)
+* Textual inversion: [Reaserch Paper](https://textual-inversion.github.io/)
+* K-Diffusion Samplers: A great collection of samplers to use, including:
+- `k_euler`
 - `k_lms`
 - `k_euler_a`
 - `k_dpm_2`
```

```diff
@@ -44,57 +48,78 @@ Check the [Contribution Guide](CONTRIBUTING.md)
 - `PLMS`
 - `DDIM`

-* Loopback ➿: Automatically feed the last generated sample back into img2img
-* Prompt Weighting 🏋️: Adjust the strength of different terms in your prompt
-* Selectable GPU usage with `--gpu <id>`
-* Memory Monitoring 🔥: Shows VRAM usage and generation time after outputting
-* Word Seeds 🔥: Use words instead of seed numbers
-* CFG: Classifier free guidance scale, a feature for fine-tuning your output
-* Automatic Launcher: Activate conda and run Stable Diffusion with a single command
-* Lighter on VRAM: 512x512 Text2Image & Image2Image tested working on 4GB
+* Loopback: Automatically feed the last generated sample back into img2img
+* Prompt Weighting & Negative Prompts: Gain more control over your creations
+* Selectable GPU usage from Settings tab
+* Word Seeds: Use words instead of seed numbers
+* Automated Launcher: Activate conda and run Stable Diffusion with a single command

+* Lighter on VRAM: 512x512 Text2Image & Image2Image tested working on 4GB (with *optimized* mode enabled in Settings)

 * Prompt validation: If your prompt is too long, you will get a warning in the text output field
-* Copy-paste generation parameters: A text output provides generation parameters in an easy to copy-paste form for easy sharing.
-* Correct seeds for batches: If you use a seed of 1000 to generate two batches of two images each, four generated images will have seeds: `1000, 1001, 1002, 1003`.
+* Sequential seeds for batches: If you use a seed of 1000 to generate two batches of two images each, four generated images will have seeds: `1000, 1001, 1002, 1003`.

 * Prompt matrix: Separate multiple prompts using the `|` character, and the system will produce an image for every combination of them.
-* Loopback for Image2Image: A checkbox for img2img allowing to automatically feed output image as input for the next batch. Equivalent to saving output image, and replacing input image with it.

+* [Gradio] Advanced img2img editor with Mask and crop capabilities

-# Stable Diffusion Web UI
-A fully-integrated and easy way to work with Stable Diffusion right from a browser window.
+* [Gradio] Mask painting 🖌️: Powerful tool for re-generating only specific parts of an image you want to change (currently Gradio only)
+# SD WebUI

+An easy way to work with Stable Diffusion right from your browser.

 ## Streamlit



 **Features:**
-- Clean UI with an easy to use design, with support for widescreen displays.
-- Dynamic live preview of your generations
-- Easily customizable presets right from the WebUI (Coming Soon!)
-- An integrated gallery to show the generations for a prompt or session (Coming soon!)
-- Better optimization VRAM usage optimization, less errors for bigger generations.
-- Text2Video - Generate video clips from text prompts right from the WEb UI (WIP)
-- Concepts Library - Run custom embeddings others have made via textual inversion.
-- Actively being developed with new features being added and planned - Stay Tuned!
-- Streamlit is now the new primary UI for the project moving forward.
-- *Currently in active development and still missing some of the features present in the Gradio Interface.*
+- Clean UI with an easy to use design, with support for widescreen displays
+- *Dynamic live preview* of your generations
+- Easily customizable defaults, right from the WebUI's Settings tab
+- An integrated gallery to show the generations for a prompt
+- *Optimized VRAM* usage for bigger generations or usage on lower end GPUs
+- *Text to Video:* Generate video clips from text prompts right from the WebUI (WIP)
+- Image to Text: Use [CLIP Interrogator](https://github.com/pharmapsychotic/clip-interrogator) to interrogate an image and get a prompt that you can use to generate a similar image using Stable Diffusion.
+- *Concepts Library:* Run custom embeddings others have made via textual inversion.
+- Textual Inversion training: Train your own embeddings on any photo you want and use it on your prompt.
+- **Currently in development: [Stable Horde](https://stablehorde.net/) integration; ImgLab, batch inputs, & mask editor from Gradio

+**Prompt Weights & Negative Prompts:**

+To give a token (tag recognized by the AI) a specific or increased weight (emphasis), add `:0.##` to the prompt, where `0.##` is a decimal that will specify the weight of all tokens before the colon.
+Ex: `cat:0.30, dog:0.70` or `guy riding a bicycle :0.7, incoming car :0.30`

+Negative prompts can be added by using `###` , after which any tokens will be seen as negative.
+Ex: `cat playing with string ### yarn` will negate `yarn` from the generated image.

+Negatives are a very powerful tool to get rid of contextually similar or related topics, but **be careful when adding them since the AI might see connections you can't**, and end up outputting gibberish

+**Tip:* Try using the same seed with different prompt configurations or weight values see how the AI understands them, it can lead to prompts that are more well-tuned and less prone to error.

 Please see the [Streamlit Documentation](docs/4.streamlit-interface.md) to learn more.

-## Gradio
+## Gradio [Legacy]



 **Features:**
-- Older UI design that is fully functional and feature complete.
+- Older UI that is functional and feature complete.
 - Has access to all upscaling models, including LSDR.
 - Dynamic prompt entry automatically changes your generation settings based on `--params` in a prompt.
 - Includes quick and easy ways to send generations to Image2Image or the Image Lab for upscaling.
-- *Note, the Gradio interface is no longer being actively developed and is only receiving bug fixes.*
+**Note: the Gradio interface is no longer being actively developed by Sygil.Dev and is only receiving bug fixes.**

 Please see the [Gradio Documentation](docs/5.gradio-interface.md) to learn more.


 ## Image Upscalers

 ---
```
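The new **Prompt Weights & Negative Prompts** text in README.md describes two pieces of syntax: a `:0.##` suffix that weights the text before it, and a `###` separator after which everything is treated as negative. The Python sketch below shows one way such a prompt could be split. It is an illustration only, not the webui's own parser; `parse_prompt` is a hypothetical name, and giving unweighted text a default weight of 1.0 is an assumption.

```python
import re
from typing import List, Tuple

# Matches "some text:<number>" lazily, so each weight applies only to the text
# since the previous weight marker (illustrative regex, not the webui's own).
_WEIGHTED = re.compile(r"(.*?):\s*(\d*\.?\d+)")


def parse_prompt(prompt: str, default_weight: float = 1.0) -> Tuple[List[Tuple[str, float]], str]:
    """Return ([(text, weight), ...], negative_text) for a prompt string."""
    # Everything after the first "###" is the negative prompt.
    positive, _, negative = prompt.partition("###")
    segments: List[Tuple[str, float]] = []
    end = 0
    for match in _WEIGHTED.finditer(positive):
        text = match.group(1).strip(" ,")
        if text:
            segments.append((text, float(match.group(2))))
        end = match.end()
    # Trailing text with no ":<number>" gets the (assumed) default weight.
    tail = positive[end:].strip(" ,")
    if tail:
        segments.append((tail, default_weight))
    return segments, negative.strip()


print(parse_prompt("guy riding a bicycle :0.7, incoming car :0.30"))
# ([('guy riding a bicycle', 0.7), ('incoming car', 0.3)], '')
print(parse_prompt("cat playing with string ### yarn"))
# ([('cat playing with string', 1.0)], 'yarn')
```

Splitting with `str.partition` means only the first `###` acts as the separator; any later `###` stays inside the negative text.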

```diff
@@ -106,8 +131,8 @@ Please see the [Gradio Documentation](docs/5.gradio-interface.md) to learn more.
 Lets you improve faces in pictures using the GFPGAN model. There is a checkbox in every tab to use GFPGAN at 100%, and also a separate tab that just allows you to use GFPGAN on any picture, with a slider that controls how strong the effect is.

 If you want to use GFPGAN to improve generated faces, you need to install it separately.
-Download [GFPGANv1.3.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth) and put it
-into the `/stable-diffusion-webui/models/gfpgan` directory.
+Download [GFPGANv1.4.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.4/GFPGANv1.4.pth) and put it
+into the `/sygil-webui/models/gfpgan` directory.

 ### RealESRGAN

```

```diff
@@ -117,20 +142,24 @@ Lets you double the resolution of generated images. There is a checkbox in every
 There is also a separate tab for using RealESRGAN on any picture.

 Download [RealESRGAN_x4plus.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth) and [RealESRGAN_x4plus_anime_6B.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth).
-Put them into the `stable-diffusion-webui/models/realesrgan` directory.
+Put them into the `sygil-webui/models/realesrgan` directory.

-### GoBig, LSDR, and GoLatent *(Currently Gradio Only)*
+### LSDR

+Download **LDSR** [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [model last.cpkt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1). Rename last.ckpt to model.ckpt and place both under `sygil-webui/models/ldsr/`

+### GoBig, and GoLatent *(Currently on the Gradio version Only)*

 More powerful upscalers that uses a seperate Latent Diffusion model to more cleanly upscale images.

-Download **LDSR** [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [ model last.cpkt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1). Rename last.ckpt to model.ckpt and place both under stable-diffusion-webui/models/ldsr/
+Please see the [Image Enhancers Documentation](docs/6.image_enhancers.md) to learn more.

-Please see the [Image Enhancers Documentation](docs/5.image_enhancers.md) to learn more.

 -----

-### *Original Information From The Stable Diffusion Repo*
+### *Original Information From The Stable Diffusion Repo:*

 # Stable Diffusion

 *Stable Diffusion was made possible thanks to a collaboration with [Stability AI](https://stability.ai/) and [Runway](https://runwayml.com/) and builds upon our previous work:*

 [**High-Resolution Image Synthesis with Latent Diffusion Models**](https://ommer-lab.com/research/latent-diffusion-models/)<br/>
```
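The upscaler sections ask for several model files to be downloaded by hand and placed in specific folders. Below is a standard-library Python sketch of the same steps, using the URLs and folders given in the new README text. It assumes the `/sygil-webui/...` paths are relative to the webui checkout and saves LDSR's `last.ckpt` directly as `model.ckpt`, which is the rename the README asks for. `MODEL_FILES`, `planned_downloads` and `download_models` are hypothetical names, not part of the repository, and the links are assumed to still serve the files.

```python
import urllib.request
from pathlib import Path
from typing import List, Tuple

# (download URL, path relative to the sygil-webui checkout), taken from the README text.
MODEL_FILES: List[Tuple[str, str]] = [
    ("https://github.com/TencentARC/GFPGAN/releases/download/v1.3.4/GFPGANv1.4.pth",
     "models/gfpgan/GFPGANv1.4.pth"),
    ("https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth",
     "models/realesrgan/RealESRGAN_x4plus.pth"),
    ("https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth",
     "models/realesrgan/RealESRGAN_x4plus_anime_6B.pth"),
    ("https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1",
     "models/ldsr/project.yaml"),
    # LDSR's last.ckpt is stored directly under the name the README asks for.
    ("https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1",
     "models/ldsr/model.ckpt"),
]


def planned_downloads(webui_root: str) -> List[Tuple[str, Path]]:
    """Pair each URL with its destination path under webui_root."""
    root = Path(webui_root)
    return [(url, root / rel) for url, rel in MODEL_FILES]


def download_models(webui_root: str) -> None:
    """Fetch every file that is not already present, creating folders as needed."""
    for url, dest in planned_downloads(webui_root):
        if dest.exists():
            continue  # keep files that were downloaded earlier
        dest.parent.mkdir(parents=True, exist_ok=True)
        urllib.request.urlretrieve(url, str(dest))
```

Calling `download_models("sygil-webui")` from the directory that contains the checkout would fetch only the missing files; `planned_downloads` lets you inspect the target paths without touching the network.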

```diff
@@ -144,7 +173,6 @@ Please see the [Image Enhancers Documentation](docs/5.image_enhancers.md) to lea

 which is available on [GitHub](https://github.com/CompVis/latent-diffusion). PDF at [arXiv](https://arxiv.org/abs/2112.10752). Please also visit our [Project page](https://ommer-lab.com/research/latent-diffusion-models/).


 [Stable Diffusion](#stable-diffusion-v1) is a latent text-to-image diffusion
 model.
 Thanks to a generous compute donation from [Stability AI](https://stability.ai/) and support from [LAION](https://laion.ai/), we were able to train a Latent Diffusion Model on 512x512 images from a subset of the [LAION-5B](https://laion.ai/blog/laion-5b/) database.
```

````diff
@@ -172,7 +200,6 @@ Thanks for open-sourcing!

 - The implementation of the transformer encoder is from [x-transformers](https://github.com/lucidrains/x-transformers) by [lucidrains](https://github.com/lucidrains?tab=repositories).


 ## BibTeX

 ```
````

````diff
@@ -184,7 +211,4 @@ Thanks for open-sourcing!
 archivePrefix={arXiv},
 primaryClass={cs.CV}
 }
-
 ```
-
-
````
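The renamed **Sequential seeds for batches** bullet in README.md gives a worked case: seed 1000 with two batches of two images yields seeds 1000, 1001, 1002 and 1003. A minimal Python sketch of that numbering, assuming image `i` of batch `b` receives `base_seed + b * batch_size + i`; `batch_seeds` is a hypothetical helper, not the webui's code.

```python
from typing import List


def batch_seeds(base_seed: int, n_batches: int, batch_size: int) -> List[List[int]]:
    """Give every image in every batch its own consecutive seed.

    Image i of batch b gets base_seed + b * batch_size + i, so no seed repeats
    across batches.
    """
    return [
        [base_seed + b * batch_size + i for i in range(batch_size)]
        for b in range(n_batches)
    ]


print(batch_seeds(1000, n_batches=2, batch_size=2))  # [[1000, 1001], [1002, 1003]]
```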

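The **Prompt matrix** bullet in README.md says that `|`-separated prompts produce an image for every combination, without spelling out the rule. The sketch below assumes the first segment is always kept and every subset of the remaining segments is appended to it, which gives 2^(n-1) prompts for n segments. The `", "` joiner and the name `prompt_matrix` are also assumptions, so treat this as an illustration rather than the webui's behavior.

```python
from itertools import combinations
from typing import List


def prompt_matrix(prompt: str) -> List[str]:
    """Expand "base|opt1|opt2" into the base plus every subset of the options."""
    base, *options = [part.strip() for part in prompt.split("|")]
    prompts = []
    # k = 0 keeps only the base; larger k adds k of the optional segments.
    for k in range(len(options) + 1):
        for chosen in combinations(options, k):
            prompts.append(", ".join((base,) + chosen))
    return prompts


for p in prompt_matrix("a busy city street|illustration|cinematic lighting"):
    print(p)
# a busy city street
# a busy city street, illustration
# a busy city street, cinematic lighting
# a busy city street, illustration, cinematic lighting
```

Under this rule, `a|b|c|d` expands to 8 prompts, so the number of generated images grows quickly with each added segment.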

Web_based_UI_for_Stable_Diffusion_colab.ipynb (Normal file, 554 lines changed)

@ -0,0 +1,554 @@

|

|||||||

|

{

|

||||||

|

"nbformat": 4,

|

||||||

|

"nbformat_minor": 0,

|

||||||

|

"metadata": {

|

||||||

|

"colab": {

|

||||||

|

"private_outputs": true,

|

||||||

|

"provenance": [],

|

||||||

|

"collapsed_sections": [

|

||||||

|

"5-Bx4AsEoPU-",

|

||||||

|

"xMWVQOg0G1Pj"

|

||||||

|

]

|

||||||

|

},

|

||||||

|

"kernelspec": {

|

||||||

|

"name": "python3",

|

||||||

|

"display_name": "Python 3"

|

||||||

|

},

|

||||||

|

"language_info": {

|

||||||

|

"name": "python"

|

||||||

|

},

|

||||||

|

"accelerator": "GPU"

|

||||||

|

},

|

||||||

|

"cells": [

|

||||||

|

{

|

||||||

|

"cell_type": "markdown",

|

||||||

|

"source": [

|

||||||

|

"[](https://colab.research.google.com/github/Sygil-Dev/sygil-webui/blob/dev/Web_based_UI_for_Stable_Diffusion_colab.ipynb)"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "S5RoIM-5IPZJ"

|

||||||

|

}

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "markdown",

|

||||||

|

"source": [

|

||||||

|

"# README"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "5-Bx4AsEoPU-"

|

||||||

|

}

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "markdown",

|

||||||

|

"source": [

|

||||||

|

"###<center>Web-based UI for Stable Diffusion</center>\n",

|

||||||

|

"\n",

|

||||||

|

"## Created by [Sygil-Dev](https://github.com/Sygil-Dev)\n",

|

||||||

|

"\n",

|

||||||

|

"## [Visit Sygil-Dev's Discord Server](https://discord.gg/gyXNe4NySY) [](https://discord.gg/gyXNe4NySY)\n",

|

||||||

|

"\n",

|

||||||

|

"## Installation instructions for:\n",

|

||||||

|

"\n",

|

||||||

|

"- **[Windows](https://sygil-dev.github.io/sygil-webui/docs/1.windows-installation.html)** \n",

|

||||||

|

"- **[Linux](https://sygil-dev.github.io/sygil-webui/docs/2.linux-installation.html)**\n",

|

||||||

|

"\n",

|

||||||

|

"### Want to ask a question or request a feature?\n",

|

||||||

|

"\n",

|

||||||

|

"Come to our [Discord Server](https://discord.gg/gyXNe4NySY) or use [Discussions](https://github.com/Sygil-Dev/sygil-webui/discussions).\n",

|

||||||

|

"\n",

|

||||||

|

"## Documentation\n",

|

||||||

|

"\n",

|

||||||

|

"[Documentation is located here](https://sygil-dev.github.io/sygil-webui/)\n",

|

||||||

|

"\n",

|

||||||

|

"## Want to contribute?\n",

|

||||||

|

"\n",

|

||||||

|

"Check the [Contribution Guide](CONTRIBUTING.md)\n",

|

||||||

|

"\n",

|

||||||

|

"[Sygil-Dev](https://github.com/Sygil-Dev) main devs:\n",

|

||||||

|

"\n",

|

||||||

|

"*  [hlky](https://github.com/hlky)\n",

|

||||||

|

"* [ZeroCool940711](https://github.com/ZeroCool940711)\n",

|

||||||

|

"* [codedealer](https://github.com/codedealer)\n",

|

||||||

|

"\n",

|

||||||

|

"### Project Features:\n",

|

||||||

|

"\n",

|

||||||

|

"* Two great Web UI's to choose from: Streamlit or Gradio\n",

|

||||||

|

"\n",

|

||||||

|

"* No more manually typing parameters, now all you have to do is write your prompt and adjust sliders\n",

|

||||||

|

"\n",

|

||||||

|

"* Built-in image enhancers and upscalers, including GFPGAN and realESRGAN\n",

|

||||||

|

"\n",

|

||||||

|

"* Run additional upscaling models on CPU to save VRAM\n",

|

||||||

|

"\n",

|

||||||

|

"* Textual inversion 🔥: [info](https://textual-inversion.github.io/) - requires enabling, see [here](https://github.com/hlky/sd-enable-textual-inversion), script works as usual without it enabled\n",

|

||||||

|

"\n",

|

||||||

|

"* Advanced img2img editor with Mask and crop capabilities\n",

|

||||||

|

"\n",

|

||||||

|

"* Mask painting 🖌️: Powerful tool for re-generating only specific parts of an image you want to change (currently Gradio only)\n",

|

||||||

|

"\n",

|

||||||

|

"* More diffusion samplers 🔥🔥: A great collection of samplers to use, including:\n",

|

||||||

|

" \n",

|

||||||

|

" - `k_euler` (Default)\n",

|

||||||

|

" - `k_lms`\n",

|

||||||

|

" - `k_euler_a`\n",

|

||||||

|

" - `k_dpm_2`\n",

|

||||||

|

" - `k_dpm_2_a`\n",

|

||||||

|

" - `k_heun`\n",

|

||||||

|

" - `PLMS`\n",

|

||||||

|

" - `DDIM`\n",

|

||||||

|

"\n",

|

||||||

|

"* Loopback ➿: Automatically feed the last generated sample back into img2img\n",

|

||||||

|

"\n",

|

||||||

|

"* Prompt Weighting 🏋️: Adjust the strength of different terms in your prompt\n",

|

||||||

|

"\n",

|

||||||

|

"* Selectable GPU usage with `--gpu <id>`\n",

|

||||||

|

"\n",

|

||||||

|

"* Memory Monitoring 🔥: Shows VRAM usage and generation time after outputting\n",

|

||||||

|

"\n",

|

||||||

|

"* Word Seeds 🔥: Use words instead of seed numbers\n",

|

||||||

|

"\n",

|

||||||

|

"* CFG: Classifier free guidance scale, a feature for fine-tuning your output\n",

|

||||||

|

"\n",

|

||||||

|

"* Automatic Launcher: Activate conda and run Stable Diffusion with a single command\n",

|

||||||

|

"\n",

|

||||||

|

"* Lighter on VRAM: 512x512 Text2Image & Image2Image tested working on 4GB\n",

|

||||||

|

"\n",

|

||||||

|

"* Prompt validation: If your prompt is too long, you will get a warning in the text output field\n",

|

||||||

|

"\n",

|

||||||

|

"* Copy-paste generation parameters: A text output provides generation parameters in an easy to copy-paste form for easy sharing.\n",

|

||||||

|

"\n",

|

||||||

|

"* Correct seeds for batches: If you use a seed of 1000 to generate two batches of two images each, four generated images will have seeds: `1000, 1001, 1002, 1003`.\n",

|

||||||

|

"\n",

|

||||||

|

"* Prompt matrix: Separate multiple prompts using the `|` character, and the system will produce an image for every combination of them.\n",

|

||||||

|

"\n",

|

||||||

|

"* Loopback for Image2Image: A checkbox for img2img allowing to automatically feed output image as input for the next batch. Equivalent to saving output image, and replacing input image with it.\n",

|

||||||

|

"\n",

|

||||||

|

"# Stable Diffusion Web UI\n",

|

||||||

|

"\n",

|

||||||

|

"A fully-integrated and easy way to work with Stable Diffusion right from a browser window.\n",

|

||||||

|

"\n",

|

||||||

|

"## Streamlit\n",

|

||||||

|

"\n",

|

||||||

|

"\n",

|

||||||

|

"\n",

|

||||||

|

"**Features:**\n",

|

||||||

|

"\n",

|

||||||

|

"- Clean UI with an easy to use design, with support for widescreen displays.\n",

|

||||||

|

"- Dynamic live preview of your generations\n",

|

||||||

|

"- Easily customizable presets right from the WebUI (Coming Soon!)\n",

|

||||||

|

"- An integrated gallery to show the generations for a prompt or session (Coming soon!)\n",

|

||||||

|

"- Better optimization VRAM usage optimization, less errors for bigger generations.\n",

|

||||||

|

"- Text2Video - Generate video clips from text prompts right from the WEb UI (WIP)\n",

|

||||||

|

"- Concepts Library - Run custom embeddings others have made via textual inversion.\n",

|

||||||

|

"- Actively being developed with new features being added and planned - Stay Tuned!\n",

|

||||||

|

"- Streamlit is now the new primary UI for the project moving forward.\n",

|

||||||

|

"- *Currently in active development and still missing some of the features present in the Gradio Interface.*\n",

|

||||||

|

"\n",

|

||||||

|

"Please see the [Streamlit Documentation](docs/4.streamlit-interface.md) to learn more.\n",

|

||||||

|

"\n",

|

||||||

|

"## Gradio\n",

|

||||||

|

"\n",

|

||||||

|

"\n",

|

||||||

|

"\n",

|

||||||

|

"**Features:**\n",

|

||||||

|

"\n",

|

||||||

|

"- Older UI design that is fully functional and feature complete.\n",

|

||||||

|

"- Has access to all upscaling models, including LSDR.\n",

|

||||||

|

"- Dynamic prompt entry automatically changes your generation settings based on `--params` in a prompt.\n",

|

||||||

|

"- Includes quick and easy ways to send generations to Image2Image or the Image Lab for upscaling.\n",

|

||||||

|

"- *Note, the Gradio interface is no longer being actively developed and is only receiving bug fixes.*\n",

|

||||||

|

"\n",

|

||||||

|

"Please see the [Gradio Documentation](docs/5.gradio-interface.md) to learn more.\n",

|

||||||

|

"\n",

|

||||||

|

"## Image Upscalers\n",

|

||||||

|

"\n",

|

||||||

|

"---\n",

|

||||||

|

"\n",

|

||||||

|

"### GFPGAN\n",

|

||||||

|

"\n",

|

||||||

|

"\n",

|

||||||

|

"\n",

|

||||||

|

"Lets you improve faces in pictures using the GFPGAN model. There is a checkbox in every tab to use GFPGAN at 100%, and also a separate tab that just allows you to use GFPGAN on any picture, with a slider that controls how strong the effect is.\n",

|

||||||

|

"\n",

|

||||||

|

"If you want to use GFPGAN to improve generated faces, you need to install it separately.\n",

|

||||||

|

"Download [GFPGANv1.4.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.4/GFPGANv1.4.pth) and put it\n",

|

||||||

|

"into the `/sygil-webui/models/gfpgan` directory. \n",

|

||||||

|

"\n",

|

||||||

|

"### RealESRGAN\n",

|

||||||

|

"\n",

|

||||||

|

"\n",

|

||||||

|

"\n",

|

||||||

|

"Lets you double the resolution of generated images. There is a checkbox in every tab to use RealESRGAN, and you can choose between the regular upscaler and the anime version.\n",

|

||||||

|

"There is also a separate tab for using RealESRGAN on any picture.\n",

|

||||||

|

"\n",

|

||||||

|

"Download [RealESRGAN_x4plus.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth) and [RealESRGAN_x4plus_anime_6B.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth).\n",

|

||||||

|

"Put them into the `sygil-webui/models/realesrgan` directory. \n",

|

||||||

|

"\n",

|

||||||

|

"\n",

|

||||||

|

"\n",

|

||||||

|

"### LSDR\n",

|

||||||

|

"\n",

|

||||||

|

"Download **LDSR** [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [model last.cpkt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1). Rename last.ckpt to model.ckpt and place both under `sygil-webui/models/ldsr/`\n",

|

||||||

|

"\n",

|

||||||

|

"### GoBig, and GoLatent *(Currently on the Gradio version Only)*\n",

|

||||||

|

"\n",

|

||||||

|

"More powerful upscalers that uses a seperate Latent Diffusion model to more cleanly upscale images.\n",

|

||||||

|

"\n",

|

||||||

|

"\n",

|

||||||

|

"\n",

|

||||||

|

"Please see the [Image Enhancers Documentation](docs/6.image_enhancers.md) to learn more.\n",

|

||||||

|

"\n",

|

||||||

|

"-----\n",

|

||||||

|

"\n",

|

||||||

|

"### *Original Information From The Stable Diffusion Repo*\n",

|

||||||

|

"\n",

|

||||||

|

"# Stable Diffusion\n",

|

||||||

|

"\n",

|

||||||

|

"*Stable Diffusion was made possible thanks to a collaboration with [Stability AI](https://stability.ai/) and [Runway](https://runwayml.com/) and builds upon our previous work:*\n",

|

||||||

|

"\n",

|

||||||

|

"[**High-Resolution Image Synthesis with Latent Diffusion Models**](https://ommer-lab.com/research/latent-diffusion-models/)<br/>\n",

|

||||||

|

"[Robin Rombach](https://github.com/rromb)\\*,\n",

|

||||||

|

"[Andreas Blattmann](https://github.com/ablattmann)\\*,\n",

|

||||||

|

"[Dominik Lorenz](https://github.com/qp-qp)\\,\n",

|

||||||

|

"[Patrick Esser](https://github.com/pesser),\n",

|

||||||

|

"[Björn Ommer](https://hci.iwr.uni-heidelberg.de/Staff/bommer)<br/>\n",

|

||||||

|

"\n",

|

||||||

|

"**CVPR '22 Oral**\n",

|

||||||

|

"\n",

|

||||||

|

"which is available on [GitHub](https://github.com/CompVis/latent-diffusion). PDF at [arXiv](https://arxiv.org/abs/2112.10752). Please also visit our [Project page](https://ommer-lab.com/research/latent-diffusion-models/).\n",

|

||||||

|

"\n",

|

||||||

|

"[Stable Diffusion](#stable-diffusion-v1) is a latent text-to-image diffusion\n",

|

||||||

|

"model.\n",

|

||||||

|

"Thanks to a generous compute donation from [Stability AI](https://stability.ai/) and support from [LAION](https://laion.ai/), we were able to train a Latent Diffusion Model on 512x512 images from a subset of the [LAION-5B](https://laion.ai/blog/laion-5b/) database. \n",

|

||||||

|

"Similar to Google's [Imagen](https://arxiv.org/abs/2205.11487), \n",

|

||||||

|

"this model uses a frozen CLIP ViT-L/14 text encoder to condition the model on text prompts.\n",

|

||||||

|

"With its 860M UNet and 123M text encoder, the model is relatively lightweight and runs on a GPU with at least 10GB VRAM.\n",

|

||||||

|

"See [this section](#stable-diffusion-v1) below and the [model card](https://huggingface.co/CompVis/stable-diffusion).\n",

|

||||||

|

"\n",

|

||||||

|

"## Stable Diffusion v1\n",

|

||||||

|

"\n",

|

||||||

|

"Stable Diffusion v1 refers to a specific configuration of the model\n",

|

||||||

|

"architecture that uses a downsampling-factor 8 autoencoder with an 860M UNet\n",

|

||||||

|

"and CLIP ViT-L/14 text encoder for the diffusion model. The model was pretrained on 256x256 images and \n",

|

||||||

|

"then finetuned on 512x512 images.\n",

|

||||||

|

"\n",

|

||||||

|

"*Note: Stable Diffusion v1 is a general text-to-image diffusion model and therefore mirrors biases and (mis-)conceptions that are present\n",

|

||||||

|

"in its training data. \n",

|

||||||

|

"Details on the training procedure and data, as well as the intended use of the model, can be found in the corresponding [model card](https://huggingface.co/CompVis/stable-diffusion).*\n",

|

||||||

|

"\n",

|

||||||

|

"## Comments\n",

|

||||||

|

"\n",

|

||||||

|

"- Our codebase for the diffusion models builds heavily on [OpenAI's ADM codebase](https://github.com/openai/guided-diffusion)\n",

|

||||||

|

" and [https://github.com/lucidrains/denoising-diffusion-pytorch](https://github.com/lucidrains/denoising-diffusion-pytorch). \n",

|

||||||

|

" Thanks for open-sourcing!\n",

|

||||||

|

"\n",

|

||||||

|

"- The implementation of the transformer encoder is from [x-transformers](https://github.com/lucidrains/x-transformers) by [lucidrains](https://github.com/lucidrains?tab=repositories). \n",

|

||||||

|

"\n",

|

||||||

|

"## BibTeX\n",

|

||||||

|

"\n",

|

||||||

|

"```\n",

|

||||||

|

"@misc{rombach2021highresolution,\n",

|

||||||

|

" title={High-Resolution Image Synthesis with Latent Diffusion Models}, \n",

|

||||||

|

" author={Robin Rombach and Andreas Blattmann and Dominik Lorenz and Patrick Esser and Björn Ommer},\n",

|

||||||

|

" year={2021},\n",

|

||||||

|

" eprint={2112.10752},\n",

|

||||||

|

" archivePrefix={arXiv},\n",

|

||||||

|

" primaryClass={cs.CV}\n",

|

||||||

|

"}\n",

|

||||||

|

"\n",

|

||||||

|

"```"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "z4kQYMPQn4d-"

|

||||||

|

}

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "markdown",

|

||||||

|

"source": [

|

||||||

|

"# Config options for Colab instance\n",

|

||||||

|

"> Before running, make sure the GPU backend is enabled (unless you plan on generating with Stable Horde).\n",

|

||||||

|

">> Runtime -> Change runtime type -> Hardware Accelerator -> GPU (Make sure to save)"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "iegma7yteERV"

|

||||||

|

}

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "code",

|

||||||

|

"source": [

|

||||||

|

"#@markdown WebUI repo (and branch)\n",

|

||||||

|

"repo_name = \"Sygil-Dev/sygil-webui\" #@param {type:\"string\"}\n",

|

||||||

|

"repo_branch = \"dev\" #@param {type:\"string\"}\n",

|

||||||

|

"\n",

|

||||||

|

"#@markdown Mount Google Drive\n",

|

||||||

|

"mount_google_drive = True #@param {type:\"boolean\"}\n",

|

||||||

|

"save_outputs_to_drive = True #@param {type:\"boolean\"}\n",

|

||||||

|

"#@markdown Folder in Google Drive to search for custom models\n",

|

||||||

|

"MODEL_DIR = \"\" #@param {type:\"string\"}\n",

|

||||||

|

"\n",

|

||||||

|

"#@markdown Enter auth token from Huggingface.co\n",

|

||||||

|

"#@markdown >(required for downloading the Stable Diffusion model)\n",

|

||||||

|

"HF_TOKEN = \"\" #@param {type:\"string\"}\n",

|

||||||

|

"\n",

|

||||||

|

"#@markdown Select which models to prefetch\n",

|

||||||

|

"STABLE_DIFFUSION = True #@param {type:\"boolean\"}\n",

|

||||||

|

"WAIFU_DIFFUSION = False #@param {type:\"boolean\"}\n",

|

||||||

|

"TRINART_SD = False #@param {type:\"boolean\"}\n",

|

||||||

|

"SD_WD_LD_TRINART_MERGED = False #@param {type:\"boolean\"}\n",

|

||||||

|

"GFPGAN = True #@param {type:\"boolean\"}\n",

|

||||||

|

"REALESRGAN = True #@param {type:\"boolean\"}\n",

|

||||||

|

"LDSR = True #@param {type:\"boolean\"}\n",

|

||||||

|

"BLIP_MODEL = False #@param {type:\"boolean\"}\n",

|

||||||

|

"\n"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "OXn96M9deVtF"

|

||||||

|

},

|

||||||

|

"execution_count": null,

|

||||||

|

"outputs": []

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "markdown",

|

||||||

|

"source": [

|

||||||

|

"# Setup\n",

|

||||||

|

"\n",

|

||||||

|

">The runtime will crash when installing conda. This is expected: the code deliberately forces a restart of the runtime.\n",

|

||||||

|

"\n",

|

||||||

|

">Just hit \"Run All\" again. 😑"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "IZjJSr-WPNxB"

|

||||||

|

}

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "code",

|

||||||

|

"metadata": {

|

||||||

|

"id": "eq0-E5mjSpmP"

|

||||||

|

},

|

||||||

|

"source": [

|

||||||

|

"#@title Make sure we have access to GPU backend\n",

|

||||||

|

"!nvidia-smi -L"

|

||||||

|

],

|

||||||

|

"execution_count": null,

|

||||||

|

"outputs": []

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "code",

|

||||||

|

"source": [

|

||||||

|

"#@title Install miniConda (mamba)\n",

|

||||||

|

"!pip install condacolab\n",

|

||||||

|

"import condacolab\n",

|

||||||

|

"condacolab.install_from_url(\"https://github.com/conda-forge/miniforge/releases/download/4.14.0-0/Mambaforge-4.14.0-0-Linux-x86_64.sh\")\n",

|

||||||

|

"\n",

|

||||||

|

"import condacolab\n",

|

||||||

|

"condacolab.check()\n",

|

||||||

|

"# The runtime will crash here! Don't panic, we planned for this, remember?"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "cDu33xkdJ5mD"

|

||||||

|

},

|

||||||

|

"execution_count": null,

|

||||||

|

"outputs": []

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "code",

|

||||||

|

"source": [

|

||||||

|

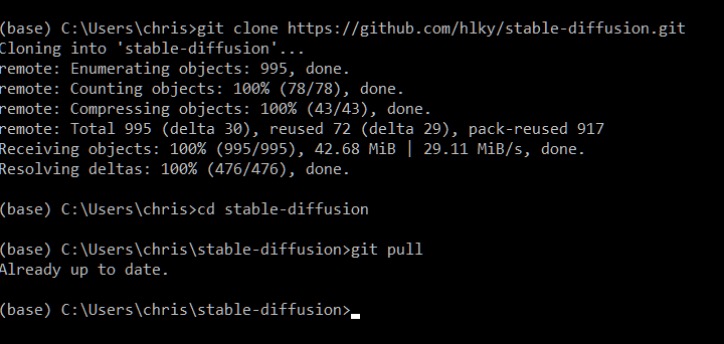

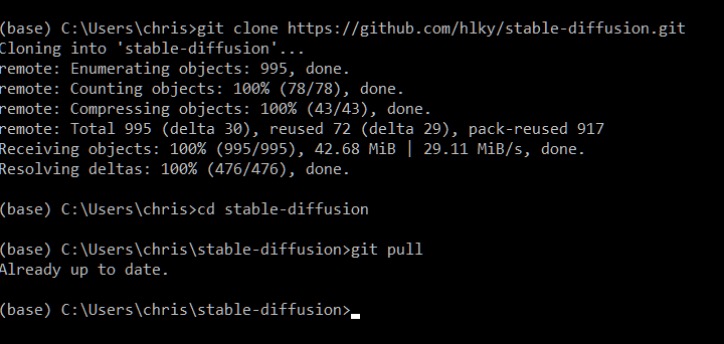

"#@title Clone webUI repo and download font\n",

|

||||||

|

"import os\n",

|

||||||

|

"REPO_URL = os.path.join('https://github.com', repo_name)\n",

|

||||||

|

"PATH_TO_REPO = os.path.join('/content', repo_name.split('/')[1])\n",

|

||||||

|

"!git clone {REPO_URL}\n",

|

||||||

|

"%cd {PATH_TO_REPO}\n",

|

||||||

|

"!git checkout {repo_branch}\n",

|

||||||

|

"!git pull\n",

|

||||||

|

"!wget -O arial.ttf https://github.com/matomo-org/travis-scripts/blob/master/fonts/Arial.ttf?raw=true"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "pZHGf03Vp305"

|

||||||

|

},

|

||||||

|

"execution_count": null,

|

||||||

|

"outputs": []

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "code",

|

||||||

|

"source": [

|

||||||

|

"#@title Install dependencies\n",

|

||||||

|

"!mamba install cudatoolkit=11.3 git numpy=1.22.3 pip=20.3 python=3.8.5 pytorch=1.11.0 scikit-image=0.19.2 torchvision=0.12.0 -y\n",

|

||||||

|

"!python --version\n",

|

||||||

|

"!pip install -r requirements.txt"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "dmN2igp5Yk3z"

|

||||||

|

},

|

||||||

|

"execution_count": null,

|

||||||

|

"outputs": []

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "code",

|

||||||

|

"source": [

|

||||||

|

"#@title Install localtunnel to open Google's ports\n",

|

||||||

|

"!npm install localtunnel"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "Nxaxfgo_F8Am"

|

||||||

|

},

|

||||||

|

"execution_count": null,

|

||||||

|

"outputs": []

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "code",

|

||||||

|

"source": [

|

||||||

|

"#@title Mount Google Drive (if selected)\n",

|

||||||

|

"if mount_google_drive:\n",

|

||||||

|

" # Mount google drive to store outputs.\n",

|

||||||

|

" from google.colab import drive\n",

|

||||||

|

" drive.mount('/content/drive/', force_remount=True)\n",

|

||||||

|

"\n",

|

||||||

|

"if save_outputs_to_drive:\n",

|

||||||

|

" # Symlink the repo's outputs folder to Google Drive\n",

|

||||||

|

" OUTPUT_PATH = os.path.join('/content/drive/MyDrive', repo_name.split('/')[1], 'outputs')\n",

|

||||||

|

" os.makedirs(OUTPUT_PATH, exist_ok=True)\n",

|

||||||

|

" os.symlink(OUTPUT_PATH, os.path.join(PATH_TO_REPO, 'outputs'), target_is_directory=True)\n"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "pcSWo9Zkzbsf"

|

||||||

|

},

|

||||||

|

"execution_count": null,

|

||||||

|

"outputs": []

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "code",

|

||||||

|

"source": [

|

||||||

|

"#@title Pre-fetch models\n",

|

||||||

|

"%cd {PATH_TO_REPO}\n",

|

||||||

|

"# make list of models we want to download\n",

|

||||||

|

"model_list = {\n",

|

||||||

|

" 'stable_diffusion': f'{STABLE_DIFFUSION}',\n",

|

||||||

|

" 'waifu_diffusion': f'{WAIFU_DIFFUSION}',\n",

|

||||||

|

" 'trinart_stable_diffusion': f'{TRINART_SD}',\n",

|

||||||

|

" 'sd_wd_ld_trinart_merged': f'{SD_WD_LD_TRINART_MERGED}',\n",

|

||||||

|

" 'gfpgan': f'{GFPGAN}',\n",

|

||||||

|

" 'realesrgan': f'{REALESRGAN}',\n",

|

||||||

|

" 'ldsr': f'{LDSR}',\n",

|

||||||

|

" 'blip_model': f'{BLIP_MODEL}'}\n",

|

||||||

|

"download_list = {k for (k,v) in model_list.items() if v == 'True'}\n",

|

||||||

|

"\n",

|

||||||

|

"# get model info (file name, download link, save location)\n",

|

||||||

|

"import yaml\n",

|

||||||

|

"from pprint import pprint\n",

|

||||||

|

"with open('configs/webui/webui_streamlit.yaml') as f:\n",

|

||||||

|

" dataMap = yaml.safe_load(f)\n",

|

||||||

|

"models = dataMap['model_manager']['models']\n",

|

||||||

|

"\n",

|

||||||

|

"# download helper copied from the model manager\n",

|

||||||

|

"import requests, time\n",

|

||||||

|

"from requests.auth import HTTPBasicAuth\n",

|

||||||

|

"\n",

|

||||||

|

"def download_file(file_name, file_path, file_url):\n",

|

||||||

|

" os.makedirs(file_path, exist_ok=True)\n",

|

||||||

|

" if os.path.exists(os.path.join(MODEL_DIR , file_name)):\n",

|

||||||

|

" print( file_name + \" found in Google Drive\")\n",

|

||||||

|

" print( \"Creating symlink...\")\n",

|

||||||

|

" os.symlink(os.path.join(MODEL_DIR , file_name), os.path.join(file_path, file_name))\n",

|

||||||

|

" elif not os.path.exists(os.path.join(file_path , file_name)):\n",

|

||||||

|

" print( \"Downloading \" + file_name + \"...\", end=\"\" )\n",

|

||||||

|

" token = None\n",

|

||||||

|

" if \"huggingface.co\" in file_url:\n",

|

||||||

|

" token = HTTPBasicAuth('token', HF_TOKEN)\n",

|

||||||

|

" try:\n",

|

||||||

|

" with requests.get(file_url, auth = token, stream=True) as r:\n",

|

||||||

|

" starttime = time.time()\n",

|

||||||

|

" r.raise_for_status()\n",

|

||||||

|

" with open(os.path.join(file_path, file_name), 'wb') as f:\n",

|

||||||

|

" for chunk in r.iter_content(chunk_size=8192):\n",

|

||||||

|

" f.write(chunk)\n",

|

||||||

|

" if time.time() - starttime > 2:\n",

|

||||||

|

" starttime = time.time()\n",

|

||||||

|

" print( \".\", end=\"\" )\n",

|

||||||

|

" print( \"done\" )\n",

|

||||||

|

" print( \" \" + file_name + \" downloaded to \\'\" + file_path + \"\\'\" )\n",

|

||||||

|

" except Exception as e:\n",

|

||||||

|

" print( \"Failed to download \" + file_name + \": \" + str(e) )\n",

|

||||||

|

" else:\n",

|

||||||

|

" print( file_name + \" already exists.\" )\n",

|

||||||

|

"\n",

|

||||||

|

"# download models in list\n",

|

||||||

|

"for model in download_list:\n",

|

||||||

|

" model_name = models[model]['model_name']\n",

|

||||||

|

" file_info = models[model]['files']\n",

|

||||||

|

" for file in file_info:\n",

|

||||||

|

" file_name = file_info[file]['file_name']\n",

|

||||||

|

" file_url = file_info[file]['download_link']\n",

|

||||||

|

" if 'save_location' in file_info[file]:\n",

|

||||||

|

" file_path = file_info[file]['save_location']\n",

|

||||||

|

" else: \n",

|

||||||

|

" file_path = models[model]['save_location']\n",

|

||||||

|

" download_file(file_name, file_path, file_url)\n",

|

||||||

|

"\n",

|

||||||

|

"# add custom models not in list\n",

|

||||||

|

"CUSTOM_MODEL_DIR = os.path.join(PATH_TO_REPO, 'models/custom')\n",

|

||||||

|

"if MODEL_DIR != \"\":\n",

|

||||||

|

" MODEL_DIR = os.path.join('/content/drive/MyDrive', MODEL_DIR)\n",

|

||||||

|

" if os.path.exists(MODEL_DIR):\n",

|

||||||

|

" custom_models = os.listdir(MODEL_DIR)\n",

|

||||||

|

" custom_models = [m for m in custom_models if os.path.isfile(MODEL_DIR + '/' + m)]\n",

|

||||||

|

" os.makedirs(CUSTOM_MODEL_DIR, exist_ok=True)\n",

|

||||||

|

" print( \"Custom model(s) found: \" )\n",

|

||||||

|

" for m in custom_models:\n",

|

||||||

|

" print( \" \" + m )\n",

|

||||||

|

" os.symlink(os.path.join(MODEL_DIR , m), os.path.join(CUSTOM_MODEL_DIR, m))\n",

|

||||||

|

"\n"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "vMdmh81J70yA"

|

||||||

|

},

|

||||||

|

"execution_count": null,

|

||||||

|

"outputs": []

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "markdown",

|

||||||

|

"source": [

|

||||||

|

"# Launch the web ui server\n",

|

||||||

|

"### (optional) JS to prevent idle timeout:\n",

|

||||||

|

"Press 'F12' or 'CTRL' + 'SHIFT' + 'I', or right-click on this page and choose Inspect. Then click the Console tab and paste in the following code:\n",

|

||||||

|

"```js\n",

|

||||||

|

"function ClickConnect(){\n",

|

||||||

|

"console.log(\"Working\");\n",

|

||||||

|

"document.querySelector(\"colab-toolbar-button#connect\").click()\n",

|

||||||

|

"}\n",

|

||||||

|

"setInterval(ClickConnect,60000)\n",

|

||||||

|

"```"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "pjIjiCuJysJI"

|

||||||

|

}

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "code",

|

||||||

|

"source": [

|

||||||

|

"#@title Press play on the music player to keep the tab alive (Uses only 13MB of data)\n",

|

||||||

|

"%%html\n",

|

||||||

|

"<b>Press play on the music player to keep the tab alive, then start your generation below (Uses only 13MB of data)</b><br/>\n",

|

||||||

|

"<audio src=\"https://henk.tech/colabkobold/silence.m4a\" controls>"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "-WknaU7uu_q6"

|

||||||

|

},

|

||||||

|

"execution_count": null,

|

||||||

|

"outputs": []

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "code",

|

||||||

|

"source": [

|

||||||

|

"#@title Run localtunnel and start the Streamlit server. Open /content/link.txt and 'Ctrl' + left-click the link inside it.\n",

|

||||||

|

"!npx localtunnel --port 8501 &>/content/link.txt &\n",

|

||||||

|

"!streamlit run scripts/webui_streamlit.py --theme.base dark --server.headless true 2>&1 | tee -a /content/log.txt"

|

||||||

|

],

|

||||||

|

"metadata": {

|

||||||

|

"id": "5whXm2nfSZ39"

|

||||||

|

},

|

||||||

|

"execution_count": null,

|

||||||

|

"outputs": []

|

||||||

|

}

|

||||||

|

]

|

||||||

|

}

|

||||||
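The pre-fetch cell above turns each boolean flag into a string with an f-string and then compares it to `'True'`, and it resolves every file's target folder from the `model_manager` block of `configs/webui/webui_streamlit.yaml`. The sketch below restates that resolution step as a pure function so it can be checked without network access. It is an illustration, not the notebook's code: `plan_downloads` and the trimmed `MODELS` dict are hypothetical, and the function expects `model_dir` to be an already-resolved path. (In the cell above, `MODEL_DIR` is only joined with `/content/drive/MyDrive` after the download loop has run, so `download_file` looks for Drive copies under the raw, unjoined value.)

```python
import os

# Hypothetical, trimmed-down copy of one model_manager entry from
# configs/webui/webui_streamlit.yaml; the real file lists more models.
MODELS = {
    "stable_diffusion": {
        "model_name": "Stable Diffusion v1.5",
        "save_location": "./models/ldm/stable-diffusion-v1",
        "files": {
            "model_ckpt": {
                "file_name": "Stable Diffusion v1.5.ckpt",
                "download_link": "https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.ckpt",
            },
        },
    },
}


def plan_downloads(models, selected, model_dir=""):
    """Return (action, file_name, file_path, file_url) tuples without any network access.

    action is "symlink" if the file already exists in model_dir (e.g. a Drive folder),
    "skip" if it is already at its save location, and "download" otherwise.
    """
    plan = []
    for key, wanted in selected.items():
        if not wanted:  # plain booleans, no round-trip through the string 'True'
            continue
        entry = models[key]
        for info in entry["files"].values():
            file_name = info["file_name"]
            # a per-file save_location takes precedence over the model-level one
            if "save_location" in info:
                file_path = info["save_location"]
            else:
                file_path = entry["save_location"]
            if model_dir and os.path.exists(os.path.join(model_dir, file_name)):
                action = "symlink"
            elif os.path.exists(os.path.join(file_path, file_name)):
                action = "skip"
            else:
                action = "download"
            plan.append((action, file_name, file_path, info["download_link"]))
    return plan


for step in plan_downloads(MODELS, {"stable_diffusion": True}):
    print(step)
```

Returning a plan instead of acting immediately keeps the Drive / local / download decision testable; the `requests` download and `os.symlink` calls would then consume the tuples.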

@ -1,6 +1,6 @@

|

|||||||

# This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

|

# This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

|

||||||

|

|

||||||

# Copyright 2022 sd-webui team.

|

# Copyright 2022 Sygil-Dev team.

|

||||||

# This program is free software: you can redistribute it and/or modify

|

# This program is free software: you can redistribute it and/or modify

|

||||||

# it under the terms of the GNU Affero General Public License as published by

|

# it under the terms of the GNU Affero General Public License as published by

|

||||||

# the Free Software Foundation, either version 3 of the License, or

|

# the Free Software Foundation, either version 3 of the License, or

|

||||||

|

|||||||

@ -1,6 +1,6 @@

|

|||||||

# This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

|

# This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

|

||||||

|

|

||||||

# Copyright 2022 sd-webui team.

|

# Copyright 2022 Sygil-Dev team.

|

||||||

# This program is free software: you can redistribute it and/or modify

|

# This program is free software: you can redistribute it and/or modify

|

||||||

# it under the terms of the GNU Affero General Public License as published by

|

# it under the terms of the GNU Affero General Public License as published by

|

||||||

# the Free Software Foundation, either version 3 of the License, or

|

# the Free Software Foundation, either version 3 of the License, or

|

||||||

@ -19,14 +19,16 @@

|

|||||||

# You may add overrides in a file named "userconfig_streamlit.yaml" in this folder, which can contain any subset

|

# You may add overrides in a file named "userconfig_streamlit.yaml" in this folder, which can contain any subset

|

||||||

# of the properties below.

|

# of the properties below.

|

||||||

general:

|

general:

|

||||||

|

version: 1.24.6

|

||||||

streamlit_telemetry: False

|

streamlit_telemetry: False

|

||||||

default_theme: dark

|

default_theme: dark

|

||||||

huggingface_token: ""

|

huggingface_token: ''

|

||||||

|

stable_horde_api: '0000000000'

|

||||||

gpu: 0

|

gpu: 0

|

||||||

outdir: outputs

|

outdir: outputs

|

||||||

default_model: "Stable Diffusion v1.4"

|

default_model: "Stable Diffusion v1.5"

|

||||||

default_model_config: "configs/stable-diffusion/v1-inference.yaml"

|

default_model_config: "configs/stable-diffusion/v1-inference.yaml"

|

||||||

default_model_path: "models/ldm/stable-diffusion-v1/model.ckpt"

|

default_model_path: "models/ldm/stable-diffusion-v1/Stable Diffusion v1.5.ckpt"

|

||||||

use_sd_concepts_library: True

|

use_sd_concepts_library: True

|

||||||

sd_concepts_library_folder: "models/custom/sd-concepts-library"

|

sd_concepts_library_folder: "models/custom/sd-concepts-library"

|

||||||

GFPGAN_dir: "./models/gfpgan"

|

GFPGAN_dir: "./models/gfpgan"

|

||||||
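The header comment says a `userconfig_streamlit.yaml` placed in the same folder may contain any subset of the properties below. One way to get that behaviour is a recursive merge in which nested mappings are combined key by key and every other value replaces the default. The webui appears to rely on OmegaConf for this (`OmegaConf.merge` treats mappings similarly), but that is an assumption here; the pure-Python `merge_config` below is a hypothetical helper that only illustrates the semantics on already-parsed dicts.

```python
import copy


def merge_config(defaults, overrides):
    """Recursively overlay `overrides` on `defaults` without mutating either.

    Nested mappings are merged key by key; any other value in `overrides`
    (scalars, lists) replaces the default outright.
    """
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


defaults = {
    "general": {"default_theme": "dark", "gpu": 0, "outdir": "outputs"},
    "txt2img": {"cfg_scale": {"value": 7.5, "min_value": 1.0, "step": 0.5}},
}
# e.g. the parsed contents of a userconfig_streamlit.yaml that changes only two values
user = {"general": {"gpu": 1}, "txt2img": {"cfg_scale": {"value": 9.0}}}

config = merge_config(defaults, user)
print(config["general"])               # {'default_theme': 'dark', 'gpu': 1, 'outdir': 'outputs'}
print(config["txt2img"]["cfg_scale"])  # {'value': 9.0, 'min_value': 1.0, 'step': 0.5}
```

Keys absent from the override file keep their defaults, which is what lets a user file stay small.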

@ -38,17 +40,19 @@ general:

|

|||||||

upscaling_method: "RealESRGAN"

|

upscaling_method: "RealESRGAN"

|

||||||

outdir_txt2img: outputs/txt2img

|

outdir_txt2img: outputs/txt2img

|

||||||

outdir_img2img: outputs/img2img

|

outdir_img2img: outputs/img2img

|

||||||

|

outdir_img2txt: outputs/img2txt

|

||||||

gfpgan_cpu: False

|

gfpgan_cpu: False

|

||||||

esrgan_cpu: False

|

esrgan_cpu: False

|

||||||

extra_models_cpu: False

|

extra_models_cpu: False

|

||||||

extra_models_gpu: False

|

extra_models_gpu: False

|

||||||

gfpgan_gpu: 0

|

gfpgan_gpu: 0

|

||||||

esrgan_gpu: 0

|

esrgan_gpu: 0

|

||||||

|

keep_all_models_loaded: False

|

||||||

save_metadata: True

|

save_metadata: True

|

||||||

save_format: "png"

|

save_format: "png"

|

||||||

skip_grid: False

|

skip_grid: False

|

||||||

skip_save: False

|

skip_save: False

|

||||||

grid_format: "jpg:95"

|

grid_quality: 95

|

||||||

n_rows: -1

|

n_rows: -1

|

||||||

no_verify_input: False

|

no_verify_input: False

|

||||||

no_half: False

|

no_half: False

|

||||||

@ -62,6 +66,13 @@ general:

|

|||||||

update_preview: True

|

update_preview: True

|

||||||

update_preview_frequency: 10

|

update_preview_frequency: 10

|

||||||

|

|

||||||

|

admin:

|

||||||

|

hide_server_setting: False

|

||||||

|

hide_browser_setting: False

|

||||||

|

|

||||||

|

debug:

|

||||||

|

enable_hydralit: False

|

||||||

|

|

||||||

txt2img:

|

txt2img:

|

||||||

prompt:

|

prompt:

|

||||||

width:

|

width:

|

||||||

@ -79,7 +90,6 @@ txt2img:

|

|||||||

cfg_scale:

|

cfg_scale:

|

||||||

value: 7.5

|

value: 7.5

|

||||||

min_value: 1.0

|

min_value: 1.0

|

||||||

max_value: 30.0

|

|

||||||

step: 0.5

|

step: 0.5

|

||||||

|

|

||||||

seed: ""

|

seed: ""

|

||||||

@ -126,8 +136,8 @@ txt2img:

|

|||||||

write_info_files: True

|

write_info_files: True

|

||||||

|

|

||||||

txt2vid:

|

txt2vid:

|

||||||

default_model: "CompVis/stable-diffusion-v1-4"

|

default_model: "runwayml/stable-diffusion-v1-5"

|

||||||

custom_models_list: ["CompVis/stable-diffusion-v1-4"]

|

custom_models_list: ["runwayml/stable-diffusion-v1-5", "CompVis/stable-diffusion-v1-4", "hakurei/waifu-diffusion"]

|

||||||

prompt:

|

prompt:

|

||||||

width:

|

width:

|

||||||

value: 512

|

value: 512

|

||||||

@ -144,7 +154,6 @@ txt2vid:

|

|||||||

cfg_scale:

|

cfg_scale:

|

||||||

value: 7.5

|

value: 7.5

|

||||||

min_value: 1.0

|

min_value: 1.0

|

||||||

max_value: 30.0

|

|

||||||

step: 0.5

|

step: 0.5

|

||||||

|

|

||||||

batch_count:

|

batch_count:

|

||||||

@ -175,9 +184,11 @@ txt2vid:

|

|||||||

normalize_prompt_weights: True

|

normalize_prompt_weights: True

|

||||||

save_individual_images: True

|

save_individual_images: True

|

||||||

save_video: True

|

save_video: True

|

||||||

|

save_video_on_stop: False

|

||||||

group_by_prompt: True

|

group_by_prompt: True

|

||||||

write_info_files: True

|

write_info_files: True

|

||||||

do_loop: False

|

do_loop: False

|

||||||

|

use_lerp_for_text: False

|

||||||

save_as_jpg: False

|

save_as_jpg: False

|

||||||

use_GFPGAN: False

|

use_GFPGAN: False

|

||||||

use_RealESRGAN: False

|

use_RealESRGAN: False

|

||||||

@ -193,20 +204,20 @@ txt2vid:

|

|||||||

|

|

||||||

beta_start:

|

beta_start:

|

||||||

value: 0.00085

|

value: 0.00085

|

||||||

min_value: 0.0001

|

min_value: 0.00010

|

||||||

max_value: 0.0300

|

max_value: 0.03000

|

||||||

step: 0.0001

|

step: 0.00010

|

||||||

format: "%.5f"

|

format: "%.5f"

|

||||||

|

|

||||||

beta_end:

|

beta_end:

|

||||||

value: 0.012

|

value: 0.01200

|

||||||

min_value: 0.0001

|

min_value: 0.00010

|

||||||

max_value: 0.0300

|

max_value: 0.03000

|

||||||

step: 0.0001

|

step: 0.00010

|

||||||

format: "%.5f"

|

format: "%.5f"

|

||||||

|

|

||||||

beta_scheduler_type: "scaled_linear"

|

beta_scheduler_type: "scaled_linear"

|

||||||

max_frames: 100

|

max_duration_in_seconds: 30

|

||||||

|

|

||||||

LDSR_config:

|

LDSR_config:

|

||||||

sampling_steps: 50

|

sampling_steps: 50

|

||||||
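Numeric options such as `beta_start` and `beta_end` are stored as slider specs: a default `value`, bounds, a `step`, and a printf-style `format`; this diff also drops `max_value` from several `cfg_scale` entries. The sketch below checks such a spec and renders its default the way a `%`-format string would. It assumes the fields feed a UI slider (Streamlit's `st.slider` accepts `min_value`, `max_value`, `value`, `step` and a printf-style `format`), and `check_slider` is a hypothetical helper, not part of the webui. It deliberately does not require `value` to sit on the step grid, because `beta_start` (0.00085 with `min_value` 0.00010 and `step` 0.00010) would fail such a check.

```python
import math


def check_slider(spec):
    """Validate a slider spec and return its default rendered with `format`.

    `max_value` is optional because several entries in this diff omit it;
    a missing bound is treated as unbounded.
    """
    value = spec["value"]
    low = spec.get("min_value", -math.inf)
    high = spec.get("max_value", math.inf)
    if not low <= value <= high:
        raise ValueError(f"default {value} lies outside [{low}, {high}]")
    if spec.get("step", 1) <= 0:
        raise ValueError("step must be positive")
    # "%s" falls back to Python's normal str() rendering when no format is given
    return spec.get("format", "%s") % value


beta_start = {"value": 0.00085, "min_value": 0.00010, "max_value": 0.03000,
              "step": 0.00010, "format": "%.5f"}
cfg_scale = {"value": 7.5, "min_value": 1.0, "step": 0.5}  # no max_value, as in this diff

print(check_slider(beta_start))  # 0.00085
print(check_slider(cfg_scale))   # 7.5
```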

@ -224,7 +235,8 @@ img2img:

|

|||||||

step: 0.01

|

step: 0.01

|

||||||

# 0: Keep masked area

|

# 0: Keep masked area

|

||||||

# 1: Regenerate only masked area

|

# 1: Regenerate only masked area

|

||||||

mask_mode: 0

|

mask_mode: 1

|

||||||

|

noise_mode: "Matched Noise"

|

||||||

mask_restore: False

|

mask_restore: False

|

||||||

# 0: Just resize

|

# 0: Just resize

|

||||||

# 1: Crop and resize

|

# 1: Crop and resize

|

||||||
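The comments on `mask_mode` describe two behaviours, and this diff switches the default from 0 (keep the masked area) to 1 (regenerate only the masked area). The sketch below is a conceptual illustration of what each mode means for the final pixels, written as a simple alpha composite over grayscale values in nested lists. `composite` is hypothetical, and the webui's real masking (which also involves options such as `noise_mode` and `mask_restore`) is not reproduced here.

```python
def composite(original, generated, mask, mask_mode):
    """Blend two same-sized grayscale images (lists of rows) using a 0..1 mask.

    mask_mode 0: keep the masked area from `original`, take the rest from `generated`.
    mask_mode 1: take only the masked area from `generated`, keep the rest of `original`.
    """
    if mask_mode not in (0, 1):
        raise ValueError("mask_mode must be 0 or 1")
    result = []
    for row_o, row_g, row_m in zip(original, generated, mask):
        row = []
        for o, g, m in zip(row_o, row_g, row_m):
            # pick which source fills the masked region and which fills the rest
            inside, outside = (o, g) if mask_mode == 0 else (g, o)
            row.append(m * inside + (1.0 - m) * outside)
        result.append(row)
    return result


original = [[0.0, 0.0], [0.0, 0.0]]
generated = [[1.0, 1.0], [1.0, 1.0]]
mask = [[1.0, 0.0], [0.0, 0.0]]  # only the top-left pixel is masked

print(composite(original, generated, mask, 1))  # [[1.0, 0.0], [0.0, 0.0]]
print(composite(original, generated, mask, 0))  # [[0.0, 1.0], [1.0, 1.0]]
```

Fractional mask values (a feathered brush edge, for example) blend the two sources instead of switching abruptly.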

@ -248,7 +260,6 @@ img2img:

|

|||||||

cfg_scale:

|

cfg_scale:

|

||||||

value: 7.5

|

value: 7.5

|

||||||

min_value: 1.0

|

min_value: 1.0

|

||||||

max_value: 30.0

|

|

||||||

step: 0.5

|

step: 0.5

|

||||||

|

|

||||||

batch_count:

|

batch_count:

|

||||||

@ -271,9 +282,8 @@ img2img:

|

|||||||

|

|

||||||

find_noise_steps:

|

find_noise_steps:

|

||||||

value: 100

|

value: 100

|

||||||

min_value: 0

|

min_value: 100

|

||||||

max_value: 500

|

step: 100

|

||||||

step: 10

|

|

||||||

|

|

||||||

LDSR_config:

|

LDSR_config:

|

||||||

sampling_steps: 50

|

sampling_steps: 50

|

||||||

@ -300,7 +310,7 @@ img2img:

|

|||||||

write_info_files: True

|

write_info_files: True

|

||||||

|

|

||||||

img2txt:

|

img2txt:

|

||||||

batch_size: 420

|

batch_size: 2000

|

||||||

blip_image_eval_size: 512

|

blip_image_eval_size: 512

|

||||||

keep_all_models_loaded: False

|

keep_all_models_loaded: False

|

||||||

|

|

||||||

@ -311,7 +321,7 @@ gfpgan:

|

|||||||

strength: 100

|

strength: 100

|

||||||

|

|

||||||

textual_inversion:

|

textual_inversion:

|

||||||

pretrained_model_name_or_path: "models/diffusers/stable-diffusion-v1-4"

|

pretrained_model_name_or_path: "models/diffusers/stable-diffusion-v1-5"

|

||||||

tokenizer_name: "models/clip-vit-large-patch14"

|

tokenizer_name: "models/clip-vit-large-patch14"

|

||||||

|

|

||||||

|

|

||||||

@ -321,12 +331,12 @@ daisi_app:

|

|||||||

model_manager:

|

model_manager:

|

||||||

models:

|

models:

|

||||||

stable_diffusion:

|

stable_diffusion:

|

||||||

model_name: "Stable Diffusion v1.4"

|

model_name: "Stable Diffusion v1.5"

|

||||||

save_location: "./models/ldm/stable-diffusion-v1"

|

save_location: "./models/ldm/stable-diffusion-v1"

|

||||||

files:

|

files:

|

||||||

model_ckpt:

|

model_ckpt:

|

||||||

file_name: "model.ckpt"

|

file_name: "Stable Diffusion v1.5.ckpt"

|

||||||

download_link: "https://www.googleapis.com/storage/v1/b/aai-blog-files/o/sd-v1-4.ckpt?alt=media"

|

download_link: "https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.ckpt"