mirror of

https://github.com/enso-org/enso.git

synced 2024-12-22 21:41:34 +03:00

Invoke all Enso benchmarks via JMH (#7101)

# Important Notes

#### The Plot

- there used to be two kinds of benchmarks: in Java and in Enso

- those in Java got quite a good treatment

- there even are results updated daily: https://enso-org.github.io/engine-benchmark-results/

- the benchmarks written in Enso used to be second-class citizens

#### The Revelation

This PR has the potential to fix it all!

- It designs a new [Bench API](88fd6fb988) ready for non-batch execution

- It allows _a single benchmark to execute in a dedicated JVM_

- It provides a simple way to wrap such an Enso benchmark as a Java benchmark

- thus the results of Enso and Java benchmarks are [now unified](https://github.com/enso-org/enso/pull/7101#discussion_r1257504440)

Long live _single benchmarking infrastructure for Java and Enso_!

This commit is contained in:

parent

162debb88c

commit

8e49255d92

113

build.sbt


@ -1770,6 +1770,119 @@ lazy val `distribution-manager` = project

  .dependsOn(pkg)
  .dependsOn(`logging-utils`)

lazy val `bench-processor` = (project in file("lib/scala/bench-processor"))
  .settings(
    frgaalJavaCompilerSetting,
    libraryDependencies ++= Seq(
      "jakarta.xml.bind" % "jakarta.xml.bind-api" % jaxbVersion,
      "com.sun.xml.bind" % "jaxb-impl" % jaxbVersion,
      "org.openjdk.jmh" % "jmh-core" % jmhVersion % "provided",
      "org.openjdk.jmh" % "jmh-generator-annprocess" % jmhVersion % "provided",
      "org.netbeans.api" % "org-openide-util-lookup" % netbeansApiVersion % "provided",
      "org.graalvm.sdk" % "graal-sdk" % graalMavenPackagesVersion % "provided",
      "junit" % "junit" % junitVersion % Test,
      "com.github.sbt" % "junit-interface" % junitIfVersion % Test,
      "org.graalvm.truffle" % "truffle-api" % graalMavenPackagesVersion % Test
    ),
    Compile / javacOptions := ((Compile / javacOptions).value ++
    // Only run ServiceProvider processor and ignore those defined in META-INF, thus
    // fixing incremental compilation setup
    Seq(
      "-processor",
      "org.netbeans.modules.openide.util.ServiceProviderProcessor"
    )),
    commands += WithDebugCommand.withDebug,
    (Test / fork) := true,
    (Test / parallelExecution) := false,
    (Test / javaOptions) ++= {
      val runtimeJars =
        (LocalProject("runtime") / Compile / fullClasspath).value
      val jarsStr = runtimeJars.map(_.data).mkString(File.pathSeparator)
      Seq(
        s"-Dtruffle.class.path.append=${jarsStr}"
      )
    }
  )
  .dependsOn(`polyglot-api`)
  .dependsOn(runtime)


lazy val `std-benchmarks` = (project in file("std-bits/benchmarks"))
  .settings(
    frgaalJavaCompilerSetting,
    libraryDependencies ++= jmh ++ Seq(
      "org.openjdk.jmh" % "jmh-core" % jmhVersion % Benchmark,
      "org.openjdk.jmh" % "jmh-generator-annprocess" % jmhVersion % Benchmark,
      "org.graalvm.sdk" % "graal-sdk" % graalMavenPackagesVersion % "provided",
      "org.graalvm.truffle" % "truffle-api" % graalMavenPackagesVersion % Benchmark
    ),
    commands += WithDebugCommand.withDebug,
    (Compile / logManager) :=
      sbt.internal.util.CustomLogManager.excludeMsg(
        "Could not determine source for class ",
        Level.Warn
      )
  )
  .configs(Benchmark)
  .settings(
    inConfig(Benchmark)(Defaults.testSettings)
  )
  .settings(
    (Benchmark / parallelExecution) := false,
    (Benchmark / run / fork) := true,
    (Benchmark / run / connectInput) := true,
    // Pass -Dtruffle.class.path.append to javac
    (Benchmark / compile / javacOptions) ++= {
      val runtimeClasspath =
        (LocalProject("runtime") / Compile / fullClasspath).value
      val runtimeInstrumentsClasspath =
        (LocalProject(
          "runtime-with-instruments"
        ) / Compile / fullClasspath).value
      val appendClasspath =
        (runtimeClasspath ++ runtimeInstrumentsClasspath)
          .map(_.data)
          .mkString(File.pathSeparator)
      Seq(
        s"-J-Dtruffle.class.path.append=$appendClasspath"
      )
    },
    (Benchmark / compile / javacOptions) ++= Seq(
      "-s",
      (Benchmark / sourceManaged).value.getAbsolutePath,
      "-Xlint:unchecked"
    ),
    (Benchmark / run / javaOptions) ++= {
      val runtimeClasspath =
        (LocalProject("runtime") / Compile / fullClasspath).value
      val runtimeInstrumentsClasspath =
        (LocalProject(
          "runtime-with-instruments"
        ) / Compile / fullClasspath).value
      val appendClasspath =
        (runtimeClasspath ++ runtimeInstrumentsClasspath)
          .map(_.data)
          .mkString(File.pathSeparator)
      Seq(
        s"-Dtruffle.class.path.append=$appendClasspath"
      )
    }
  )
  .settings(
    bench := (Benchmark / run).toTask("").tag(Exclusive).value,
    benchOnly := Def.inputTaskDyn {
      import complete.Parsers.spaceDelimited
      val name = spaceDelimited("<name>").parsed match {
        case List(name) => name
        case _ => throw new IllegalArgumentException("Expected one argument.")
      }
      Def.task {
        (Benchmark / run).toTask(" " + name).value
      }
    }.evaluated
  )
  .dependsOn(`bench-processor` % Benchmark)
  .dependsOn(runtime % Benchmark)

lazy val editions = project
  .in(file("lib/scala/editions"))
  .configs(Test)
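Reviewer note: the `javaOptions` and `javacOptions` blocks in this hunk all build the value of `truffle.class.path.append` the same way, by joining classpath entries with the platform path separator. The following is a minimal Java sketch of that joining step only. The class name `TruffleClassPathSketch`, the method `appendOption` and the jar file names are hypothetical; the real logic is the Scala code in `build.sbt`.

```java
import java.io.File;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper for illustration; not part of the commit.
final class TruffleClassPathSketch {

  /** Joins the entries with the platform path separator and prefixes the -D option. */
  static String appendOption(List<File> entries) {
    String joined = entries.stream()
        .map(File::getPath)
        .collect(Collectors.joining(File.pathSeparator));
    return "-Dtruffle.class.path.append=" + joined;
  }

  public static void main(String[] args) {
    // Made-up jar names standing in for the runtime classpath entries.
    List<File> entries =
        List.of(new File("runtime.jar"), new File("runtime-with-instruments.jar"));
    System.out.println(appendOption(entries));
  }
}
```

For the `javac` variant the hunk prefixes the same option with `-J`, which hands it to the JVM that runs the compiler and therefore the annotation processor.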

@ -387,6 +387,7 @@ the following flags:

  allow for manual analysis and discovery of optimisation failures.
- `--showCompilations`: Prints the truffle compilation trace information.
- `--printAssembly`: Prints the assembly output from the HotSpot JIT tier.
- `--debugger`: Launches the JVM with the remote debugger enabled.

For more information on this sbt command, please see
[WithDebugCommand.scala](../project/WithDebugCommand.scala).

@ -756,7 +757,8 @@ interface of the runner prints all server options when you execute it with

Below are options used by the Language Server:

- `--server`: Runs the Language Server
- `--root-id <uuid>`: Content root id.
- `--root-id <uuid>`: Content root id. The Language Server chooses one randomly,
  so any valid UUID can be passed.
- `--path <path>`: Path to the content root.
- `--interface <interface>`: Interface for processing all incoming connections.
  Default value is 127.0.0.1

@ -8,96 +8,14 @@ order: 0

# Enso Debugger

The Enso Debugger allows amongst other things, to execute arbitrary expressions
in a given execution context - this is used to implement a debugging REPL. The
REPL can be launched when triggering a breakpoint in the code.
This folder contains all documentation pertaining to the debugging facilities
used by Enso, broken up as follows:

This folder contains all documentation pertaining to the REPL and the debugger,
which is broken up as follows:

- [**The Enso Debugger Protocol:**](./protocol.md) The protocol for the Debugger

# Chrome Developer Tools Debugger

As a well written citizen of the [GraalVM](http://graalvm.org) project the Enso
language can be used with existing tools available for the overall platform. One
of them is
[Chrome Debugger](https://www.graalvm.org/22.1/tools/chrome-debugger/) and Enso
language is fully integrated with it. Launch the `bin/enso` executable with
additional `--inspect` option and debug your Enso programs in _Chrome Developer
Tools_.

```bash
enso$ ./built-distribution/enso-engine-*/enso-*/bin/enso --inspect --run ./test/Tests/src/Data/Numbers_Spec.enso
Debugger listening on ws://127.0.0.1:9229/Wugyrg9
For help, see: https://www.graalvm.org/tools/chrome-debugger
E.g. in Chrome open: devtools://devtools/bundled/js_app.html?ws=127.0.0.1:9229/Wugyrg9
```

Copy the printed URL into the Chrome browser and you should see:

Step in, step over, set breakpoints, watch values of the variables as well as
evaluate arbitrary expressions in the console. Note that as of December 2022,
with GraalVM 22.3.0, there is a well-known
[bug in Truffle](https://github.com/oracle/graal/issues/5513) that causes
`NullPointerException` when a host object gets into the chrome inspector. There
is a workaround for that, but it may not work in certain situations. Therefore,
if you encounter `NullPointerException` thrown from

```
at org.graalvm.truffle/com.oracle.truffle.polyglot.PolyglotContextImpl.getContext(PolyglotContextImpl.java:685)
```

simply ignore it. It will be handled within the debugger and should not affect
the rest of the environment.

# Debugging Enso and Java Code at Once

Enso libraries are written in a mixture of Enso code and Java libraries.
Debugging both sides (the Java as well as Enso code) is possible with a decent
IDE.

Get [NetBeans](http://netbeans.apache.org) version 13 or newer or
[VS Code with Apache Language Server extension](https://cwiki.apache.org/confluence/display/NETBEANS/Apache+NetBeans+Extension+for+Visual+Studio+Code)
and _start listening on port 5005_ with _Debug/Attach Debugger_ or by specifying
the following debug configuration in VSCode:

```json
{
  "name": "Listen to 5005",
  "type": "java+",
  "request": "attach",
  "listen": "true",
  "hostName": "localhost",
  "port": "5005"
}
```

Then it is just about executing the following sbt command, which builds the CLI
version of the engine, launches it in debug mode and passes all other arguments
to the started process:

```bash
sbt:enso> runEngineDistribution --debug --run ./test/Tests/src/Data/Numbers_Spec.enso
```

Alternatively you can pass in special JVM arguments when launching the
`bin/enso` launcher:

```bash
enso$ JAVA_OPTS=-agentlib:jdwp=transport=dt_socket,server=n,address=5005 ./built-distribution/enso-engine-*/enso-*/bin/enso --run ./test/Tests/src/Data/Numbers_Spec.enso
```


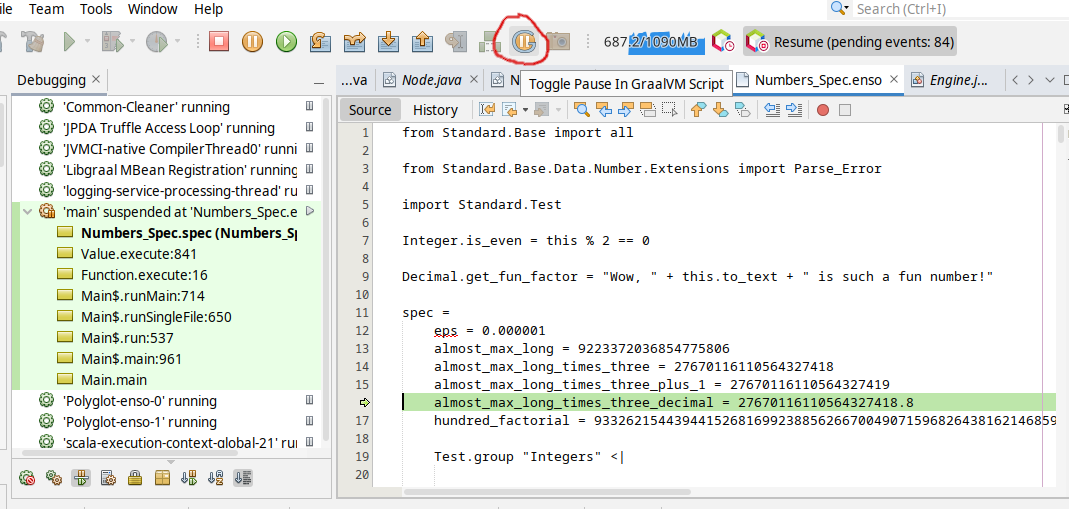

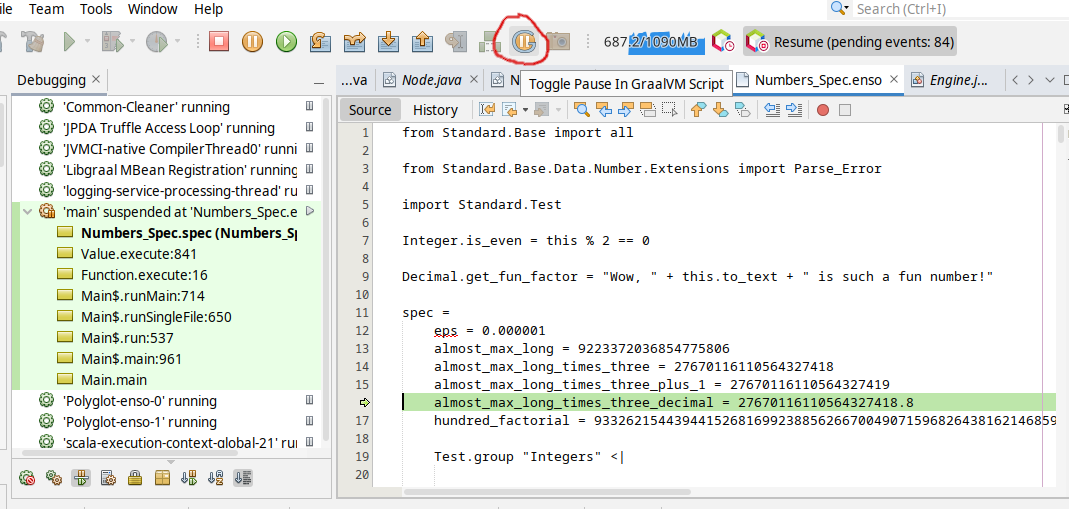

As soon as the debuggee connects and the Enso language starts - choose the
_Toggle Pause in GraalVM Script_ button in the toolbar:

and your execution shall stop on the next `.enso` line of code. This mode allows
debugging both the Enso code and the Java code. The stack trace shows a
mixture of Java and Enso stack frames by default. Right-clicking on the thread
allows one to switch to plain Java view (with many more stack frames) and back.
Analyzing low level details as well as Enso developer point of view shall be
simple with such a tool.
- [**The Enso Debugger Protocol:**](./protocol.md) The protocol for the REPL
  Debugger.
- [**Chrome devtools debugger:**](./chrome-devtools.md) A guide how to debug
  Enso code using Chrome devtools.
- [**Debugging Enso and Java code at once:**](./mixed-debugging.md) A
  step-by-step guide how to debug both Enso and Java code in a single debugger.
- [**Debugging Engine (Runtime) only Java code:**](./runtime-debugging.md) A
  guide how to debug the internal Engine Java code.

41

docs/debugger/chrome-devtools.md

Normal file


@ -0,0 +1,41 @@

# Chrome Developer Tools Debugger

As a well written citizen of the [GraalVM](http://graalvm.org) project the Enso
language can be used with existing tools available for the overall platform. One
of them is
[Chrome Debugger](https://www.graalvm.org/22.1/tools/chrome-debugger/) and Enso
language is fully integrated with it. Launch the `bin/enso` executable with
additional `--inspect` option and debug your Enso programs in _Chrome Developer
Tools_.

```bash
enso$ ./built-distribution/enso-engine-*/enso-*/bin/enso --inspect --run ./test/Tests/src/Data/Numbers_Spec.enso
Debugger listening on ws://127.0.0.1:9229/Wugyrg9
For help, see: https://www.graalvm.org/tools/chrome-debugger
E.g. in Chrome open: devtools://devtools/bundled/js_app.html?ws=127.0.0.1:9229/Wugyrg9
```

Copy the printed URL into the Chrome browser and you should see:

Step in, step over, set breakpoints, watch values of the variables as well as
evaluate arbitrary expressions in the console. Note that as of December 2022,
with GraalVM 22.3.0, there is a well-known
[bug in Truffle](https://github.com/oracle/graal/issues/5513) that causes
`NullPointerException` when a host object gets into the chrome inspector. There
is a workaround for that, but it may not work in certain situations. Therefore,
if you encounter `NullPointerException` thrown from

```
at org.graalvm.truffle/com.oracle.truffle.polyglot.PolyglotContextImpl.getContext(PolyglotContextImpl.java:685)
```

simply ignore it. It will be handled within the debugger and should not affect
the rest of the environment.

## Tips and tricks

- Use `env JAVA_OPTS=-Dpolyglot.inspect.Path=enso_debug` to make Chrome use a
  fixed URL. In this case the URL is
  `devtools://devtools/bundled/js_app.html?ws=127.0.0.1:9229/enso_debug`

48

docs/debugger/mixed-debugging.md

Normal file


@ -0,0 +1,48 @@

# Debugging Enso and Java Code at Once

Enso libraries are written in a mixture of Enso code and Java libraries.
Debugging both sides (the Java as well as Enso code) is possible with a decent
IDE.

Get [NetBeans](http://netbeans.apache.org) version 13 or newer or
[VS Code with Apache Language Server extension](https://cwiki.apache.org/confluence/display/NETBEANS/Apache+NetBeans+Extension+for+Visual+Studio+Code)
and _start listening on port 5005_ with _Debug/Attach Debugger_ or by specifying
the following debug configuration in VSCode:

```json
{
  "name": "Listen to 5005",
  "type": "java+",
  "request": "attach",
  "listen": "true",
  "hostName": "localhost",
  "port": "5005"
}
```

Then it is just about executing the following sbt command, which builds the CLI
version of the engine, launches it in debug mode and passes all other arguments
to the started process:

```bash
sbt:enso> runEngineDistribution --debug --run ./test/Tests/src/Data/Numbers_Spec.enso
```

Alternatively you can pass in special JVM arguments when launching the
`bin/enso` launcher:

```bash
enso$ JAVA_OPTS=-agentlib:jdwp=transport=dt_socket,server=n,address=5005 ./built-distribution/enso-engine-*/enso-*/bin/enso --run ./test/Tests/src/Data/Numbers_Spec.enso
```

As soon as the debuggee connects and the Enso language starts - choose the
_Toggle Pause in GraalVM Script_ button in the toolbar:

and your execution shall stop on the next `.enso` line of code. This mode allows
debugging both the Enso code and the Java code. The stack trace shows a
mixture of Java and Enso stack frames by default. Right-clicking on the thread
allows one to switch to plain Java view (with many more stack frames) and back.
Analyzing low level details as well as Enso developer point of view shall be
simple with such a tool.

69

docs/debugger/runtime-debugging.md

Normal file


@ -0,0 +1,69 @@

# Runtime (Engine) debugging

This section explains how to debug various parts of the Engine. By Engine, we
mean all the Java code located in the `runtime` SBT project, in `engine`
directory.

## Debugging source file evaluation

This subsection provides a guide how to debug a single Enso source file
evaluation. To evaluate a single source file, we use the _Engine distribution_
built with `buildEngineDistribution` command. Both of the following two options
start the JVM in a debug mode. After the JVM is started in a debug mode, simply
attach the debugger to the JVM process at the specified port.

The first option is to invoke the engine distribution from SBT shell with:

```sh
sbt:enso> runEngineDistribution --debug --run ./test/Tests/src/Data/Numbers_Spec.enso
```

The second option is to pass in special JVM arguments when launching the
`bin/enso` from the engine distribution:

```bash
enso$ JAVA_OPTS=-agentlib:jdwp=transport=dt_socket,server=n,address=5005 ./built-distribution/enso-engine-*/enso-*/bin/enso --run ./test/Tests/src/Data/Numbers_Spec.enso
```

### Tips and tricks

There is no simple mapping of the Enso source code to the engine's Java code, so
if you try to debug a specific expression, it might be a bit tricky. However,
the following steps should help you to skip all the irrelevant code and get to
the code you are interested in:

- To debug a method called `foo`, put a breakpoint in
  `org.enso.interpreter.node.ClosureRootNode#execute` with a condition on
  `this.name.contains("foo")`
- To debug a specific expression, put some _unique_ expression, like
  `Debug.eval "1+1"`, in front of it and put a breakpoint in a Truffle node
  corresponding to that unique expression, in this case that is
  `org.enso.interpreter.node.expression.builtin.debug.DebugEvalNode`.

## Debugging annotation processors

The Engine uses annotation processors to generate some of the Java code, e.g.,
the builtin methods with `org.enso.interpreter.dsl.MethodProcessor`, or JMH
benchmark sources with `org.enso.benchmarks.processor.BenchProcessor`.
Annotation processors are invoked by the Java compiler (`javac`), therefore, we
need special instructions to attach the debugger to them.

Let's debug `org.enso.interpreter.dsl.MethodProcessor` as an example. The
following are the commands invoked in the `sbt` shell:

- `project runtime`
- `clean`
  - Delete all the compiled class files along with all the generated sources by
    the annotation processor. This ensures that the annotation processor will be
    invoked.
- `set javacOptions += FrgaalJavaCompiler.debugArg`
  - This sets a special flag that is passed to the frgaal Java compiler, which
    in turn waits for the debugger to attach. Note that this setting is not
    persisted and will be reset once the project is reloaded.
- `compile`
  - Launches the Java compiler, which will wait for the debugger to attach. Put
    a breakpoint in some class of `org.enso.interpreter.dsl` package. Wait for
    the message in the console instructing to attach the debugger.
- To reset the `javacOptions` setting, either run
  `set javacOptions -= FrgaalJavaCompiler.debugArg`, or reload the project with
  `reload`.

@ -22,5 +22,7 @@ up as follows:

- [**Upgrading GraalVM:**](upgrading-graalvm.md) Description of steps that have
  to be performed by each developer when the project is upgraded to a new
  version of GraalVM.
- [**Benchmarks:**](benchmarks.md) Description of the benchmarking
  infrastructure used for measuring performance of the runtime.
- [**Logging**:](logging.md) Description of a unified and centralized logging
  infrastructure that should be used by all components.

102

docs/infrastructure/benchmarks.md

Normal file


@ -0,0 +1,102 @@

# Benchmarks

In this document, we describe the benchmark types used for the runtime - Engine
micro benchmarks in the section
[Engine JMH microbenchmarks](#engine-jmh-microbenchmarks) and standard library
benchmarks in the section
[Standard library benchmarks](#standard-library-benchmarks), and how and where
the results are stored and visualized in the section
[Visualization](#visualization).

To track the performance of the engine, we use
[JMH](https://openjdk.org/projects/code-tools/jmh/). There are two types of
benchmarks:

- [micro benchmarks](#engine-jmh-microbenchmarks) located directly in the
  `runtime` SBT project. These benchmarks are written in Java, and are used to
  measure the performance of specific parts of the engine.
- [standard library benchmarks](#standard-library-benchmarks) located in the
  `test/Benchmarks` Enso project. These benchmarks are entirely written in
  Enso, along with the harness code.

## Engine JMH microbenchmarks

These benchmarks are written in Java and are used to measure the performance of
specific parts of the engine. The sources are located in the `runtime` SBT
project, under `src/bench` source directory.

### Running the benchmarks

To run the benchmarks, use `bench` or `benchOnly` command - `bench` runs all the
benchmarks and `benchOnly` runs only one benchmark specified with the fully
qualified name. The parameters for these benchmarks are hard-coded inside the
JMH annotations in the source files. In order to change, e.g., the number of
measurement iterations, you need to modify the parameter to the `@Measurement`
annotation.

### Debugging the benchmarks

Currently, the best way to debug the benchmark is to set the `@Fork` annotation
to 0, and to run `withDebug` command like this:

```
withDebug --debugger benchOnly -- <fully qualified benchmark name>
```

## Standard library benchmarks

Unlike the Engine micro benchmarks, these benchmarks are written entirely in
Enso and located in the `test/Benchmarks` Enso project. There are two ways to
run these benchmarks:

- [Running standalone](#running-standalone)
- [Running via JMH launcher](#running-via-jmh-launcher)

### Running standalone

A single source file in the project may contain multiple benchmark definitions.
If the source file defines `main` method, we can evaluate it the same way as any
other Enso source file, for example via
`runEngineDistribution --in-project test/Benchmarks --run <source file>`. The
harness within the project is not meant for any sophisticated benchmarking, but
rather for quick local evaluation. See the `Bench.measure` method documentation
for more details. For a more sophisticated approach, run the benchmarks via the
JMH launcher.

### Running via JMH launcher

The JMH launcher is located in `std-bits/benchmarks` directory, as
`std-benchmarks` SBT project. It is a single Java class with a `main` method
that just delegates to the
[standard JMH launcher](https://github.com/openjdk/jmh/blob/master/jmh-core/src/main/java/org/openjdk/jmh/Main.java),
and therefore supports the same command line options as the standard launcher.
For the full options summary, either see the
[JMH source code](https://github.com/openjdk/jmh/blob/master/jmh-core/src/main/java/org/openjdk/jmh/runner/options/CommandLineOptions.java),
or run the launcher with `-h` option.

The `std-benchmarks` SBT project supports `bench` and `benchOnly` commands, that
work the same as in the `runtime` project, with the exception that the benchmark
name does not have to be specified as a fully qualified name, but as a regular
expression. To access the full flexibility of the JMH launcher, run it via
`Bench/run` - for example, to see the help message: `Bench/run -h`. For example,
you can run all the benchmarks that have "New_Vector" in their name with just 3
seconds for warmup iterations and 2 measurement iterations with
`Bench/run -w 3 -i 2 New_Vector`.

Whenever you add or delete any benchmarks from `test/Benchmarks` project, the
generated JMH sources need to be recompiled with `Bench/clean; Bench/compile`.
You do not need to recompile the `std-benchmarks` project if you only modify the
benchmark sources.

## Visualization

The benchmarks are invoked as a daily
[GitHub Action](https://github.com/enso-org/enso/actions/workflows/benchmark.yml),
that can be invoked manually on a specific branch as well. The results are kept
in the artifacts produced from the actions. In
`tools/performance/engine-benchmarks` directory, there is a simple Python script
for collecting and processing the results. See the
[README in that directory](../../tools/performance/engine-benchmarks/README.md)
for more information about how to run that script. This script is invoked
regularly on a private machine and the results are published in
[https://enso-org.github.io/engine-benchmark-results/](https://enso-org.github.io/engine-benchmark-results/).
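Reviewer note: the document says that `benchOnly` in `std-benchmarks` takes a regular expression rather than a fully qualified name, and that `New_Vector` selects every benchmark with that text in its name. Below is a minimal Java sketch of that selection, assuming the expression is applied with "find anywhere in the name" semantics, which is my understanding of how JMH include patterns behave. The class `BenchFilterSketch` and all benchmark names are hypothetical.

```java
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

// Hypothetical illustration of regex-based benchmark selection; not the JMH implementation.
final class BenchFilterSketch {

  /** Keeps every name in which the regular expression matches somewhere. */
  static List<String> select(String regex, List<String> benchmarkNames) {
    Pattern pattern = Pattern.compile(regex);
    return benchmarkNames.stream()
        .filter(name -> pattern.matcher(name).find())
        .collect(Collectors.toList());
  }

  public static void main(String[] args) {
    // Made-up benchmark names.
    List<String> names = List.of(
        "demo.Vector_Bench.New_Vector_Small",
        "demo.Vector_Bench.New_Vector_Large",
        "demo.Text_Bench.Concat");
    System.out.println(select("New_Vector", names));
  }
}
```

Under that assumption, a pattern such as `New_Vector` does not need anchors or wildcards to pick up benchmarks whose generated names carry a package or class prefix.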

@ -0,0 +1,10 @@

package org.enso.benchmarks;

/**
 * A configuration for a {@link BenchGroup benchmark group}.
 * Corresponds to {@code Bench_Options} in {@code distribution/lib/Standard/Test/0.0.0-dev/src/Bench.enso}
 */
public interface BenchConfig {
  int size();
  int iter();
}

@ -0,0 +1,13 @@

package org.enso.benchmarks;

import java.util.List;

/**
 * A group of benchmarks with its own name and configuration.
 * Corresponds to {@code Bench.Group} defined in {@code distribution/lib/Standard/Test/0.0.0-dev/src/Bench.enso}.
 */
public interface BenchGroup {
  String name();
  BenchConfig configuration();
  List<BenchSpec> specs();
}

@ -0,0 +1,12 @@

package org.enso.benchmarks;

import org.graalvm.polyglot.Value;

/**
 * Specification of a single benchmark.
 * Corresponds to {@code Bench.Spec} defined in {@code distribution/lib/Standard/Test/0.0.0-dev/src/Bench.enso}.
 */
public interface BenchSpec {
  String name();
  Value code();
}

@ -0,0 +1,11 @@

package org.enso.benchmarks;

import java.util.List;

/**
 * Wraps all the groups of benchmarks specified in a single module.
 * Corresponds to {@code Bench.All} defined in {@code distribution/lib/Standard/Test/0.0.0-dev/src/Bench.enso}.
 */
public interface BenchSuite {
  List<BenchGroup> groups();
}

@ -0,0 +1,50 @@

package org.enso.benchmarks;

import java.util.List;
import java.util.Optional;

/**
 * A {@link BenchSuite} with a qualified name of the module it is defined in.
 */
public final class ModuleBenchSuite {
  private final BenchSuite suite;
  private final String moduleQualifiedName;

  public ModuleBenchSuite(BenchSuite suite, String moduleQualifiedName) {
    this.suite = suite;
    this.moduleQualifiedName = moduleQualifiedName;
  }

  public List<BenchGroup> getGroups() {
    return suite.groups();
  }

  public String getModuleQualifiedName() {
    return moduleQualifiedName;
  }

  public BenchGroup findGroupByName(String groupName) {
    return suite
        .groups()
        .stream()
        .filter(grp -> grp.name().equals(groupName))
        .findFirst()
        .orElse(null);
  }

  public BenchSpec findSpecByName(String groupName, String specName) {
    Optional<BenchGroup> group = suite
        .groups()
        .stream()
        .filter(grp -> grp.name().equals(groupName))
        .findFirst();
    if (group.isPresent()) {
      return group.get().specs()
          .stream()
          .filter(spec -> spec.name().equals(specName))
          .findFirst()
          .orElseGet(() -> null);
    }
    return null;
  }
}
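Reviewer note: `ModuleBenchSuite.findSpecByName` first looks up the group and then the spec, returning `null` when either is missing. The sketch below shows the same two-level lookup expressed with `Optional.flatMap`, only as an illustration of the traversal. `Spec` and `Group` are simplified, hypothetical stand-ins for `BenchSpec` and `BenchGroup`; the real interfaces also carry a polyglot `Value` and a `BenchConfig`.

```java
import java.util.List;
import java.util.Optional;

// Hypothetical stand-ins; this is not a proposed change to the commit.
final class SpecLookupSketch {
  record Spec(String name) {}

  record Group(String name, List<Spec> specs) {}

  /** Finds the named group first, then the named spec inside it. */
  static Optional<Spec> findSpec(List<Group> groups, String groupName, String specName) {
    return groups.stream()
        .filter(grp -> grp.name().equals(groupName))
        .findFirst()
        .flatMap(grp -> grp.specs().stream()
            .filter(spec -> spec.name().equals(specName))
            .findFirst());
  }

  public static void main(String[] args) {
    // Made-up group and spec names.
    List<Group> groups = List.of(new Group("Vectors", List.of(new Spec("New_Vector"))));
    System.out.println(findSpec(groups, "Vectors", "New_Vector").isPresent());
    System.out.println(findSpec(groups, "Vectors", "Missing").isPresent());
  }
}
```

Returning `Optional` makes the "not found" case explicit for the caller, whereas the committed code returns `null`, which is equally workable for generated callers that check for it.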

@ -0,0 +1,54 @@

|

||||

package org.enso.benchmarks;

|

||||

|

||||

import java.io.File;

|

||||

import java.net.URISyntaxException;

|

||||

|

||||

/** Utility methods used by the benchmark classes from the generated code */

|

||||

public class Utils {

|

||||

|

||||

/**

|

||||

* Returns the path to the {@link org.enso.polyglot.RuntimeOptions#LANGUAGE_HOME_OVERRIDE language

|

||||

* home override directory}.

|

||||

*

|

||||

* <p>Note that the returned file may not exist.

|

||||

*

|

||||

* @return Non-null file pointing to the language home override directory.

|

||||

*/

|

||||

public static File findLanguageHomeOverride() {

|

||||

File ensoDir = findRepoRootDir();

|

||||

// Note that ensoHomeOverride does not have to exist, only its parent directory

|

||||

return ensoDir.toPath().resolve("distribution").resolve("component").toFile();

|

||||

}

|

||||

|

||||

/**

|

||||

* Returns the root directory of the Enso repository.

|

||||

*

|

||||

* @return Non-null file pointing to the root directory of the Enso repository.

|

||||

*/

|

||||

public static File findRepoRootDir() {

|

||||

File ensoDir;

|

||||

try {

|

||||

ensoDir = new File(Utils.class.getProtectionDomain().getCodeSource().getLocation().toURI());

|

||||

} catch (URISyntaxException e) {

|

||||

throw new IllegalStateException("Unreachable: ensoDir not found", e);

|

||||

}

|

||||

for (; ensoDir != null; ensoDir = ensoDir.getParentFile()) {

|

||||

if (ensoDir.getName().equals("enso")) {

|

||||

break;

|

||||

}

|

||||

}

|

||||

if (ensoDir == null || !ensoDir.exists() || !ensoDir.isDirectory() || !ensoDir.canRead()) {

|

||||

throw new IllegalStateException("Unreachable: ensoDir does not exist or is not readable");

|

||||

}

|

||||

return ensoDir;

|

||||

}

|

||||

|

||||

public static BenchSpec findSpecByName(BenchGroup group, String specName) {

|

||||

for (BenchSpec spec : group.specs()) {

|

||||

if (spec.name().equals(specName)) {

|

||||

return spec;

|

||||

}

|

||||

}

|

||||

return null;

|

||||

}

|

||||

}

|

||||
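`findRepoRootDir` walks up from the location of the loaded class until it reaches a directory named `enso`, so it only works when the checkout directory has exactly that name. A minimal sketch of that upward walk, isolated from the class-location lookup, is shown below. The `AncestorLookupSketch` class and the `findAncestorNamed` method are hypothetical names used for illustration.

```java
import java.io.File;
import java.util.Optional;

final class AncestorLookupSketch {
  private AncestorLookupSketch() {}

  /**
   * Walks from {@code start} towards the filesystem root and returns the first
   * directory entry whose name equals {@code dirName}. Only path names are
   * inspected; nothing is read from disk.
   */
  static Optional<File> findAncestorNamed(File start, String dirName) {
    for (File dir = start; dir != null; dir = dir.getParentFile()) {
      if (dir.getName().equals(dirName)) {
        return Optional.of(dir);
      }
    }
    return Optional.empty();
  }
}
```

Returning an empty `Optional` leaves it to the caller to decide whether a missing root is fatal, which is the decision `findRepoRootDir` makes by throwing `IllegalStateException`.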

@ -0,0 +1,319 @@

|

||||

package org.enso.benchmarks.processor;

|

||||

|

||||

import java.io.ByteArrayOutputStream;

|

||||

import java.io.File;

|

||||

import java.io.IOException;

|

||||

import java.io.Writer;

|

||||

import java.net.URISyntaxException;

|

||||

import java.util.List;

|

||||

import java.util.Set;

|

||||

import java.util.stream.Collectors;

|

||||

import javax.annotation.processing.AbstractProcessor;

|

||||

import javax.annotation.processing.FilerException;

|

||||

import javax.annotation.processing.Processor;

|

||||

import javax.annotation.processing.RoundEnvironment;

|

||||

import javax.annotation.processing.SupportedAnnotationTypes;

|

||||

import javax.lang.model.SourceVersion;

|

||||

import javax.lang.model.element.TypeElement;

|

||||

import javax.tools.Diagnostic.Kind;

|

||||

import org.enso.benchmarks.BenchGroup;

|

||||

import org.enso.benchmarks.BenchSpec;

|

||||

import org.enso.benchmarks.ModuleBenchSuite;

|

||||

import org.enso.polyglot.LanguageInfo;

|

||||

import org.enso.polyglot.MethodNames.TopScope;

|

||||

import org.enso.polyglot.RuntimeOptions;

|

||||

import org.graalvm.polyglot.Context;

|

||||

import org.graalvm.polyglot.PolyglotException;

|

||||

import org.graalvm.polyglot.Value;

|

||||

import org.graalvm.polyglot.io.IOAccess;

|

||||

import org.openide.util.lookup.ServiceProvider;

|

||||

|

||||

@SupportedAnnotationTypes("org.enso.benchmarks.processor.GenerateBenchSources")

|

||||

@ServiceProvider(service = Processor.class)

|

||||

public class BenchProcessor extends AbstractProcessor {

|

||||

|

||||

private final File ensoHomeOverride;

|

||||

private final File ensoDir;

|

||||

private File projectRootDir;

|

||||

private static final String generatedSourcesPackagePrefix = "org.enso.benchmarks.generated";

|

||||

private static final List<String> imports =

|

||||

List.of(

|

||||

"import java.nio.file.Paths;",

|

||||

"import java.io.ByteArrayOutputStream;",

|

||||

"import java.io.File;",

|

||||

"import java.util.List;",

|

||||

"import java.util.Objects;",

|

||||

"import org.openjdk.jmh.annotations.Benchmark;",

|

||||

"import org.openjdk.jmh.annotations.BenchmarkMode;",

|

||||

"import org.openjdk.jmh.annotations.Mode;",

|

||||

"import org.openjdk.jmh.annotations.Fork;",

|

||||

"import org.openjdk.jmh.annotations.Measurement;",

|

||||

"import org.openjdk.jmh.annotations.OutputTimeUnit;",

|

||||

"import org.openjdk.jmh.annotations.Setup;",

|

||||

"import org.openjdk.jmh.annotations.State;",

|

||||

"import org.openjdk.jmh.annotations.Scope;",

|

||||

"import org.openjdk.jmh.infra.BenchmarkParams;",

|

||||

"import org.openjdk.jmh.infra.Blackhole;",

|

||||

"import org.graalvm.polyglot.Context;",

|

||||

"import org.graalvm.polyglot.Value;",

|

||||

"import org.graalvm.polyglot.io.IOAccess;",

|

||||

"import org.enso.polyglot.LanguageInfo;",

|

||||

"import org.enso.polyglot.MethodNames;",

|

||||

"import org.enso.polyglot.RuntimeOptions;",

|

||||

"import org.enso.benchmarks.processor.SpecCollector;",

|

||||

"import org.enso.benchmarks.ModuleBenchSuite;",

|

||||

"import org.enso.benchmarks.BenchSpec;",

|

||||

"import org.enso.benchmarks.BenchGroup;",

|

||||

"import org.enso.benchmarks.Utils;");

|

||||

|

||||

public BenchProcessor() {

|

||||

File currentDir = null;

|

||||

try {

|

||||

currentDir =

|

||||

new File(

|

||||

BenchProcessor.class.getProtectionDomain().getCodeSource().getLocation().toURI());

|

||||

} catch (URISyntaxException e) {

|

||||

failWithMessage("ensoDir not found: " + e.getMessage());

|

||||

}

|

||||

for (; currentDir != null; currentDir = currentDir.getParentFile()) {

|

||||

if (currentDir.getName().equals("enso")) {

|

||||

break;

|

||||

}

|

||||

}

|

||||

if (currentDir == null) {

|

||||

failWithMessage("Unreachable: Could not find Enso root directory");

|

||||

}

|

||||

ensoDir = currentDir;

|

||||

|

||||

// Note that ensoHomeOverride does not have to exist, only its parent directory

|

||||

ensoHomeOverride = ensoDir.toPath().resolve("distribution").resolve("component").toFile();

|

||||

}

|

||||

|

||||

@Override

|

||||

public SourceVersion getSupportedSourceVersion() {

|

||||

return SourceVersion.latest();

|

||||

}

|

||||

|

||||

@Override

|

||||

public boolean process(Set<? extends TypeElement> annotations, RoundEnvironment roundEnv) {

|

||||

var elements = roundEnv.getElementsAnnotatedWith(GenerateBenchSources.class);

|

||||

for (var element : elements) {

|

||||

GenerateBenchSources annotation = element.getAnnotation(GenerateBenchSources.class);

|

||||

projectRootDir = new File(annotation.projectRootPath());

|

||||

if (!projectRootDir.exists() || !projectRootDir.isDirectory() || !projectRootDir.canRead()) {

|

||||

failWithMessage(

|

||||

"Project root dir '"

|

||||

+ projectRootDir.getAbsolutePath()

|

||||

+ "' specified in the annotation does not exist or is not readable");

|

||||

}

|

||||

try (var ctx =

|

||||

Context.newBuilder()

|

||||

.allowExperimentalOptions(true)

|

||||

.allowIO(IOAccess.ALL)

|

||||

.allowAllAccess(true)

|

||||

.logHandler(new ByteArrayOutputStream())

|

||||

.option(RuntimeOptions.PROJECT_ROOT, projectRootDir.getAbsolutePath())

|

||||

.option(RuntimeOptions.LANGUAGE_HOME_OVERRIDE, ensoHomeOverride.getAbsolutePath())

|

||||

.build()) {

|

||||

Value module = getModule(ctx, annotation.moduleName());

|

||||

assert module != null;

|

||||

List<ModuleBenchSuite> benchSuites =

|

||||

SpecCollector.collectBenchSpecsFromModule(module, annotation.variableName());

|

||||

for (ModuleBenchSuite benchSuite : benchSuites) {

|

||||

for (BenchGroup group : benchSuite.getGroups()) {

|

||||

generateClassForGroup(

|

||||

group, benchSuite.getModuleQualifiedName(), annotation.variableName());

|

||||

}

|

||||

}

|

||||

return true;

|

||||

} catch (Exception e) {

|

||||

failWithMessage("Uncaught exception in " + getClass().getName() + ": " + e.getMessage());

|

||||

return false;

|

||||

}

|

||||

}

|

||||

return true;

|

||||

}

|

||||

|

||||

private Value getModule(Context ctx, String moduleName) {

|

||||

try {

|

||||

return ctx.getBindings(LanguageInfo.ID).invokeMember(TopScope.GET_MODULE, moduleName);

|

||||

} catch (PolyglotException e) {

|

||||

failWithMessage("Cannot get module '" + moduleName + "': " + e.getMessage());

|

||||

return null;

|

||||

}

|

||||

}

|

||||

|

||||

private void generateClassForGroup(BenchGroup group, String moduleQualifiedName, String varName) {

|

||||

String fullClassName = createGroupClassName(group);

|

||||

try (Writer srcFileWriter =

|

||||

processingEnv.getFiler().createSourceFile(fullClassName).openWriter()) {

|

||||

generateClassForGroup(srcFileWriter, moduleQualifiedName, varName, group);

|

||||

} catch (IOException e) {

|

||||

if (!isResourceAlreadyExistsException(e)) {

|

||||

failWithMessage(

|

||||

"Failed to generate source file for group '" + group.name() + "': " + e.getMessage());

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

/**

|

||||

* Returns true iff the given exception was thrown because the file already exists. There

|

||||

* is no better way to check this.

|

||||

*

|

||||

* @param e Exception to check.

|

||||

* @return true iff the given exception was thrown because the file already exists.

|

||||

*/

|

||||

private static boolean isResourceAlreadyExistsException(IOException e) {

|

||||

List<String> messages =

|

||||

List.of(

|

||||

"Source file already created",

|

||||

"Resource already created",

|

||||

"Attempt to recreate a file");

|

||||

return e instanceof FilerException

|

||||

&& e.getMessage() != null
&& messages.stream().anyMatch(msg -> e.getMessage().contains(msg));

|

||||

}

|

||||

|

||||

private void generateClassForGroup(

|

||||

Writer javaSrcFileWriter, String moduleQualifiedName, String varName, BenchGroup group)

|

||||

throws IOException {

|

||||

String groupFullClassName = createGroupClassName(group);

|

||||

String className = groupFullClassName.substring(groupFullClassName.lastIndexOf('.') + 1);

|

||||

List<BenchSpec> specs = group.specs();

|

||||

List<String> specJavaNames =

|

||||

specs.stream().map(spec -> normalize(spec.name())).collect(Collectors.toUnmodifiableList());

|

||||

|

||||

javaSrcFileWriter.append("package " + generatedSourcesPackagePrefix + ";\n");

|

||||

javaSrcFileWriter.append("\n");

|

||||

javaSrcFileWriter.append(String.join("\n", imports));

|

||||

javaSrcFileWriter.append("\n");

|

||||

javaSrcFileWriter.append("\n");

|

||||

javaSrcFileWriter.append("/**\n");

|

||||

javaSrcFileWriter.append(" * Generated from:\n");

|

||||

javaSrcFileWriter.append(" * - Module: " + moduleQualifiedName + "\n");

|

||||

javaSrcFileWriter.append(" * - Group: \"" + group.name() + "\"\n");

|

||||

javaSrcFileWriter.append(" * Generated by {@link " + getClass().getName() + "}.\n");

|

||||

javaSrcFileWriter.append(" */\n");

|

||||

javaSrcFileWriter.append("@BenchmarkMode(Mode.AverageTime)\n");

|

||||

javaSrcFileWriter.append("@Fork(1)\n");

|

||||

javaSrcFileWriter.append("@State(Scope.Benchmark)\n");

|

||||

javaSrcFileWriter.append("public class " + className + " {\n");

|

||||

javaSrcFileWriter.append(" private Value groupInputArg;\n");

|

||||

for (var specJavaName : specJavaNames) {

|

||||

javaSrcFileWriter.append(" private Value benchFunc_" + specJavaName + ";\n");

|

||||

}

|

||||

javaSrcFileWriter.append(" \n");

|

||||

javaSrcFileWriter.append(" @Setup\n");

|

||||

javaSrcFileWriter.append(" public void setup(BenchmarkParams params) throws Exception {\n");

|

||||

javaSrcFileWriter

|

||||

.append(" File projectRootDir = Utils.findRepoRootDir().toPath().resolve(\"")

|

||||

.append(projectRootDir.toString())

|

||||

.append("\").toFile();\n");

|

||||

javaSrcFileWriter.append(

|

||||

" if (projectRootDir == null || !projectRootDir.exists() || !projectRootDir.canRead()) {\n");

|

||||

javaSrcFileWriter.append(

|

||||

" throw new IllegalStateException(\"Project root directory does not exist or cannot be read: \" + Objects.toString(projectRootDir));\n");

|

||||

javaSrcFileWriter.append(" }\n");

|

||||

javaSrcFileWriter.append(" File languageHomeOverride = Utils.findLanguageHomeOverride();\n");

|

||||

javaSrcFileWriter.append(" var ctx = Context.newBuilder()\n");

|

||||

javaSrcFileWriter.append(" .allowExperimentalOptions(true)\n");

|

||||

javaSrcFileWriter.append(" .allowIO(IOAccess.ALL)\n");

|

||||

javaSrcFileWriter.append(" .allowAllAccess(true)\n");

|

||||

javaSrcFileWriter.append(" .logHandler(new ByteArrayOutputStream())\n");

|

||||

javaSrcFileWriter.append(" .option(\n");

|

||||

javaSrcFileWriter.append(" RuntimeOptions.LANGUAGE_HOME_OVERRIDE,\n");

|

||||

javaSrcFileWriter.append(" languageHomeOverride.getAbsolutePath()\n");

|

||||

javaSrcFileWriter.append(" )\n");

|

||||

javaSrcFileWriter.append(" .option(\n");

|

||||

javaSrcFileWriter.append(" RuntimeOptions.PROJECT_ROOT,\n");

|

||||

javaSrcFileWriter.append(" projectRootDir.getAbsolutePath()\n");

|

||||

javaSrcFileWriter.append(" )\n");

|

||||

javaSrcFileWriter.append(" .build();\n");

|

||||

javaSrcFileWriter.append(" \n");

|

||||

javaSrcFileWriter.append(" Value bindings = ctx.getBindings(LanguageInfo.ID);\n");

|

||||

javaSrcFileWriter.append(

|

||||

" Value module = bindings.invokeMember(MethodNames.TopScope.GET_MODULE, \""

|

||||

+ moduleQualifiedName

|

||||

+ "\");\n");

|

||||

javaSrcFileWriter.append(

|

||||

" BenchGroup group = SpecCollector.collectBenchGroupFromModule(module, \""

|

||||

+ group.name()

|

||||

+ "\", \""

|

||||

+ varName

|

||||

+ "\");\n");

|

||||

javaSrcFileWriter.append(" \n");

|

||||

for (int i = 0; i < specs.size(); i++) {

|

||||

var specJavaName = specJavaNames.get(i);

|

||||

var specName = specs.get(i).name();

|

||||

javaSrcFileWriter.append(

|

||||

" BenchSpec benchSpec_"

|

||||

+ specJavaName

|

||||

+ " = Utils.findSpecByName(group, \""

|

||||

+ specName

|

||||

+ "\");\n");

|

||||

javaSrcFileWriter.append(

|

||||

" this.benchFunc_" + specJavaName + " = benchSpec_" + specJavaName + ".code();\n");

|

||||

}

|

||||

javaSrcFileWriter.append(" \n");

|

||||

javaSrcFileWriter.append(" this.groupInputArg = Value.asValue(null);\n");

|

||||

javaSrcFileWriter.append(" } \n"); // end of setup method

|

||||

javaSrcFileWriter.append(" \n");

|

||||

|

||||

// Benchmark methods

|

||||

for (var specJavaName : specJavaNames) {

|

||||

javaSrcFileWriter.append(" \n");

|

||||

javaSrcFileWriter.append(" @Benchmark\n");

|

||||

javaSrcFileWriter.append(" public void " + specJavaName + "(Blackhole blackhole) {\n");

|

||||

javaSrcFileWriter.append(

|

||||

" Value result = this.benchFunc_" + specJavaName + ".execute(this.groupInputArg);\n");

|

||||

javaSrcFileWriter.append(" blackhole.consume(result);\n");

|

||||

javaSrcFileWriter.append(" }\n"); // end of benchmark method

|

||||

}

|

||||

|

||||

javaSrcFileWriter.append("}\n"); // end of class className

|

||||

}

|

||||

|

||||

/**

|

||||

* Returns the fully qualified Java class name for a benchmark group.

|

||||

*

|

||||

* @param group Group whose name will be converted to a Java class name.

|

||||

* @return Fully qualified name of the class generated for the group.

|

||||

*/

|

||||

private static String createGroupClassName(BenchGroup group) {

|

||||

var groupPkgName = normalize(group.name());

|

||||

return generatedSourcesPackagePrefix + "." + groupPkgName;

|

||||

}

|

||||

|

||||

private static boolean isValidChar(char c) {

|

||||

return Character.isAlphabetic(c) || Character.isDigit(c) || c == '_';

|

||||

}

|

||||

|

||||

/**

|

||||

* Converts the given text to a valid Java identifier.

|

||||

*

|

||||

* @param name Text to convert.

|

||||

* @return Valid Java identifier, non-null.

|

||||

*/

|

||||

private static String normalize(String name) {

|

||||

var normalizedNameSb = new StringBuilder();

|

||||

for (char c : name.toCharArray()) {

|

||||

if (isValidChar(c)) {

|

||||

normalizedNameSb.append(c);

|

||||

} else if (Character.isWhitespace(c) && (peekLastChar(normalizedNameSb) != '_')) {

|

||||

normalizedNameSb.append('_');

|

||||

}

|

||||

}

|

||||

return normalizedNameSb.toString();

|

||||

}

|

||||

|

||||

private static char peekLastChar(StringBuilder sb) {

|

||||

if (!sb.isEmpty()) {

|

||||

return sb.charAt(sb.length() - 1);

|

||||

} else {

|

||||

return 0;

|

||||

}

|

||||

}

|

||||

|

||||

private void failWithMessage(String msg) {

|

||||

processingEnv.getMessager().printMessage(Kind.ERROR, msg);

|

||||

}

|

||||

}

|

||||
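To make the name mangling in `normalize` easier to follow, here is a standalone copy of its logic. It keeps letters, digits and underscores, collapses each run of whitespace into a single underscore, and drops everything else. The `NormalizeSketch` class and the `endsWithUnderscore` helper are hypothetical names used for illustration only.

```java
final class NormalizeSketch {
  private NormalizeSketch() {}

  /** Mirrors the processor's normalize: keep [letter|digit|_], whitespace becomes '_'. */
  static String normalize(String name) {
    StringBuilder sb = new StringBuilder();
    for (char c : name.toCharArray()) {
      if (Character.isAlphabetic(c) || Character.isDigit(c) || c == '_') {
        sb.append(c);
      } else if (Character.isWhitespace(c) && !endsWithUnderscore(sb)) {
        // Whitespace is replaced by a single underscore, never two in a row.
        sb.append('_');
      }
      // Any other character (punctuation, operators) is silently dropped.
    }
    return sb.toString();
  }

  private static boolean endsWithUnderscore(StringBuilder sb) {
    return sb.length() > 0 && sb.charAt(sb.length() - 1) == '_';
  }
}
```

For example, `normalize("Vector Map 10")` gives `Vector_Map_10` and `normalize("sum (fast)")` gives `sum_fast`. A name that starts with a digit, such as `"10 items"`, becomes `10_items`, which Java does not accept as an identifier, so such group or spec names would need an extra prefix.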

@ -0,0 +1,32 @@

|

||||

package org.enso.benchmarks.processor;

|

||||

|

||||

import java.lang.annotation.ElementType;

|

||||

import java.lang.annotation.Retention;

|

||||

import java.lang.annotation.RetentionPolicy;

|

||||

import java.lang.annotation.Target;

|

||||

|

||||

/**

|

||||

* Use this annotation to force the {@link BenchProcessor} to generate JMH sources

|

||||

* for all the collected Enso benchmarks. The location of the benchmarks is encoded

|

||||

* by the {@code projectRootPath}, {@code moduleName} and {@code variableName} parameters.

|

||||

*/

|

||||

@Retention(RetentionPolicy.CLASS)

|

||||

@Target(ElementType.TYPE)

|

||||

public @interface GenerateBenchSources {

|

||||

|

||||

/**

|

||||

* Path to the project root directory. Relative to the Enso repository root.

|

||||

*/

|

||||

String projectRootPath();

|

||||

|

||||

/**

|

||||

* Fully qualified name of the module within the project that defines all the benchmark {@link org.enso.benchmarks.BenchSuite suites}.

|

||||

* For example {@code local.Benchmarks.Main}.

|

||||

*/

|

||||

String moduleName();

|

||||

|

||||

/**

|

||||

* Name of the variable that holds a list of all the benchmark {@link org.enso.benchmarks.BenchSuite suites}.

|

||||

*/

|

||||

String variableName() default "all_benchmarks";

|

||||

}

|

||||

@ -0,0 +1,60 @@

|

||||

package org.enso.benchmarks.processor;

|

||||

|

||||

import java.util.ArrayList;

|

||||

import java.util.List;

|

||||

import org.enso.benchmarks.BenchGroup;

|

||||

import org.enso.benchmarks.BenchSuite;

|

||||

import org.enso.benchmarks.ModuleBenchSuite;

|

||||

import org.enso.polyglot.MethodNames.Module;

|

||||

import org.graalvm.polyglot.Value;

|

||||

|

||||

/**

|

||||

* Collect benchmark specifications from Enso source files.

|

||||

*/

|

||||

public class SpecCollector {

|

||||

private SpecCollector() {}

|

||||

|

||||

/**

|

||||

* Collects all the bench specifications from the given module in a variable with the given name.

|

||||

* @param varName Name of the variable that holds all the collected bench suites.

|

||||

* @return Empty list if no such variable exists, or if it is not a vector.

|

||||

*/

|

||||

public static List<ModuleBenchSuite> collectBenchSpecsFromModule(Value module, String varName) {

|

||||

Value moduleType = module.invokeMember(Module.GET_ASSOCIATED_TYPE);

|

||||

Value allSuitesVar = module.invokeMember(Module.GET_METHOD, moduleType, varName);

|

||||

String moduleQualifiedName = module.invokeMember(Module.GET_NAME).asString();

|

||||

if (!allSuitesVar.isNull()) {

|

||||

Value suitesValue = module.invokeMember(Module.EVAL_EXPRESSION, varName);

|

||||

if (!suitesValue.hasArrayElements()) {

|

||||

return List.of();

|

||||

}

|

||||

List<ModuleBenchSuite> suites = new ArrayList<>();

|

||||

for (long i = 0; i < suitesValue.getArraySize(); i++) {

|

||||

Value suite = suitesValue.getArrayElement(i);

|

||||

BenchSuite benchSuite = suite.as(BenchSuite.class);

|

||||

suites.add(

|

||||

new ModuleBenchSuite(benchSuite, moduleQualifiedName)

|

||||

);

|

||||

}

|

||||

return suites;

|

||||

}

|

||||

return List.of();

|

||||

}

|

||||

|

||||

/**

|

||||

* Finds the benchmark group with the given name among the suites held by the given variable of the module.

|

||||

* @param groupName Name of the benchmark group

|

||||

* @param varName Name of the variable that holds all the collected bench suites.

|

||||

* @return null if no such group exists.

|

||||

*/

|

||||

public static BenchGroup collectBenchGroupFromModule(Value module, String groupName, String varName) {

|

||||

var specs = collectBenchSpecsFromModule(module, varName);

|

||||

for (ModuleBenchSuite suite : specs) {

|

||||

BenchGroup group = suite.findGroupByName(groupName);

|

||||

if (group != null) {

|

||||

return group;

|

||||

}

|

||||

}

|

||||

return null;

|

||||

}

|

||||

}

|

||||

@ -0,0 +1,116 @@

|

||||

package org.enso.benchmarks.runner;

|

||||

|

||||

import jakarta.xml.bind.JAXBException;

|

||||

import java.io.File;

|

||||

import java.io.IOException;

|

||||

import java.util.ArrayList;

|

||||

import java.util.Collection;

|

||||

import java.util.List;

|

||||

import java.util.stream.Collectors;

|

||||

import org.openjdk.jmh.results.RunResult;

|

||||

import org.openjdk.jmh.runner.BenchmarkList;

|

||||

import org.openjdk.jmh.runner.BenchmarkListEntry;

|

||||

import org.openjdk.jmh.runner.Runner;

|

||||

import org.openjdk.jmh.runner.RunnerException;

|

||||

import org.openjdk.jmh.runner.options.CommandLineOptionException;

|

||||

import org.openjdk.jmh.runner.options.CommandLineOptions;

|

||||

import org.openjdk.jmh.runner.options.OptionsBuilder;

|

||||

|

||||

public class BenchRunner {

|

||||

public static final File REPORT_FILE = new File("./bench-report.xml");

|

||||

|

||||

/** @return A list of qualified names of all benchmarks visible to JMH. */

|

||||

public List<String> getAvailable() {

|

||||

return BenchmarkList.defaultList().getAll(null, new ArrayList<>()).stream()

|

||||

.map(BenchmarkListEntry::getUsername)

|

||||

.collect(Collectors.toList());

|

||||

}

|

||||

|

||||

public static void run(String[] args) throws RunnerException {

|

||||

CommandLineOptions cmdOpts = null;

|

||||

try {

|

||||

cmdOpts = new CommandLineOptions(args);

|

||||

} catch (CommandLineOptionException e) {

|

||||

System.err.println("Error parsing command line args:");

|

||||

System.err.println(" " + e.getMessage());

|

||||

System.exit(1);

|

||||

}

|

||||

|

||||

if (Boolean.getBoolean("bench.compileOnly")) {

|

||||

// Do not report results from `compileOnly` mode

|

||||

runCompileOnly(cmdOpts.getIncludes());

|

||||

} else {

|

||||

Runner jmhRunner = new Runner(cmdOpts);

|

||||

|

||||

if (cmdOpts.shouldHelp()) {

|

||||

System.err.println("Enso benchmark runner: A modified JMH runner for Enso benchmarks.");

|

||||

try {

|

||||

cmdOpts.showHelp();

|

||||

} catch (IOException e) {

|

||||

throw new IllegalStateException("Unreachable", e);

|

||||

}

|

||||

System.exit(0);

|

||||

}

|

||||

|

||||

if (cmdOpts.shouldList()) {

|

||||

jmhRunner.list();

|

||||

System.exit(0);

|

||||

}

|

||||

|

||||

Collection<RunResult> results;

|

||||

results = jmhRunner.run();

|

||||

|

||||

for (RunResult result : results) {

|

||||

try {

|

||||

reportResult(result.getParams().getBenchmark(), result);

|

||||

} catch (JAXBException e) {

|

||||

throw new IllegalStateException("Benchmark result report writing failed", e);

|

||||

}

|

||||

}

|

||||

System.out.println("Benchmark results reported into " + REPORT_FILE.getAbsolutePath());

|

||||

}

|

||||

}

|

||||

|

||||

private static Collection<RunResult> runCompileOnly(List<String> includes) throws RunnerException {

|

||||

System.out.println("Running benchmarks " + includes + " in compileOnly mode");

|

||||

var optsBuilder = new OptionsBuilder()

|

||||

.measurementIterations(1)

|

||||

.warmupIterations(0)

|

||||

.forks(0);

|

||||

includes.forEach(optsBuilder::include);

|

||||

var opts = optsBuilder.build();

|

||||

var runner = new Runner(opts);

|

||||

return runner.run();

|

||||

}

|

||||

|

||||

public static BenchmarkItem runSingle(String label) throws RunnerException, JAXBException {

|

||||

String includeRegex = "^" + label + "$";

|

||||

if (Boolean.getBoolean("bench.compileOnly")) {

|

||||

var results = runCompileOnly(List.of(includeRegex));

|

||||

var firstResult = results.iterator().next();

|

||||

return reportResult(label, firstResult);

|

||||

} else {

|

||||

var opts = new OptionsBuilder()

|

||||

.jvmArgsAppend("-Xss16M", "-Dpolyglot.engine.MultiTier=false")

|

||||

.include(includeRegex)

|

||||

.build();

|

||||

RunResult benchmarksResult = new Runner(opts).runSingle();

|

||||

return reportResult(label, benchmarksResult);

|

||||

}

|

||||

}

|

||||

|

||||

private static BenchmarkItem reportResult(String label, RunResult result) throws JAXBException {

|

||||

Report report;

|

||||

if (REPORT_FILE.exists()) {

|

||||

report = Report.readFromFile(REPORT_FILE);

|

||||

} else {

|

||||

report = new Report();

|

||||

}

|

||||

|

||||

BenchmarkItem benchItem =

|

||||

new BenchmarkResultProcessor().processResult(label, report, result);

|

||||

|

||||

Report.writeToFile(report, REPORT_FILE);

|

||||

return benchItem;

|

||||

}

|

||||

}

|

||||
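`runSingle` builds its include pattern as `"^" + label + "$"`, so any regular-expression metacharacters in the label (for instance the dots of a qualified name) are interpreted as pattern syntax. The sketch below uses only `java.util.regex` to show the difference between the unquoted form and a variant wrapped in `Pattern.quote`. The `IncludeRegexSketch` class and its methods are hypothetical and not part of the runner.

```java
import java.util.regex.Pattern;

final class IncludeRegexSketch {
  private IncludeRegexSketch() {}

  /** Anchored pattern as built in runSingle: the label is used as raw regex. */
  static String anchored(String label) {
    return "^" + label + "$";
  }

  /** Anchored pattern that treats the label as a literal string. */
  static String anchoredQuoted(String label) {
    return "^" + Pattern.quote(label) + "$";
  }

  /** Returns true if the regex is found in the candidate benchmark name. */
  static boolean matches(String regex, String candidate) {
    return Pattern.compile(regex).matcher(candidate).find();
  }
}
```

Both patterns match the exact label. Only the unquoted one also matches a name in which each `.` is replaced by some other character, because `.` matches any character in a regex.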

@ -0,0 +1,35 @@

|

||||

package org.enso.benchmarks.runner;

|

||||

|

||||

import org.openjdk.jmh.results.Result;

|

||||

|

||||

/** Convenience class for clients to compare historic results with the last JMH run. */

|

||||

public class BenchmarkItem {

|

||||

private final Result result;

|

||||

private final ReportItem previousResults;

|

||||

|

||||

public BenchmarkItem(Result result, ReportItem previousResults) {

|

||||

this.result = result;

|

||||

this.previousResults = previousResults;

|

||||

}

|

||||

|

||||

public Result getResult() {

|

||||

return result;

|

||||

}

|

||||

|

||||

public ReportItem getPreviousResults() {

|

||||

return previousResults;

|

||||

}

|

||||

|

||||

/** @return Best historic score for the given benchmark (including current run). */

|

||||

public double getBestScore() {

|

||||

return previousResults.getBestScore().orElse(result.getScore());

|

||||

}

|

||||

|

||||

public double getScore() {

|

||||

return result.getScore();

|

||||

}

|

||||

|

||||

public String getLabel() {

|

||||

return result.getLabel();

|

||||

}

|

||||

}

|

||||

@ -0,0 +1,21 @@

|

||||

package org.enso.benchmarks.runner;

|

||||

|

||||

import org.openjdk.jmh.results.Result;

|

||||

import org.openjdk.jmh.results.RunResult;

|

||||

|

||||

public class BenchmarkResultProcessor {

|

||||

/**

|

||||

* Matches the new result with historic results from the report and updates the report.

|

||||

*

|

||||

* @param label The name by which this result should be referred to in the report.

|

||||

* @param report Historic runs report.

|

||||

* @param result Fresh JMH benchmark result.

|

||||

* @return The fresh result paired with the historic results recorded under the same label.

|

||||

*/

|

||||

public BenchmarkItem processResult(String label, Report report, RunResult result) {

|

||||

Result primary = result.getPrimaryResult();

|

||||

ReportItem item = report.findOrCreateByLabel(label);

|

||||

item.addScore(primary.getScore());

|

||||

return new BenchmarkItem(primary, item);

|

||||

}

|

||||

}

|

||||

@ -0,0 +1,98 @@

|

||||

package org.enso.benchmarks.runner;

|