Turns out that #8923 isn't enough to support debugging of `Vector_Spec.enso` when root of Enso repository is opened as a folder/workspace. To allow debugging of `Vector_Spec.enso` two changes are needed. One is provided in this PR, the other one will be integrated as https://github.com/apache/netbeans/pull/7105

- Closes #9363

- Cleans up the Cloud mock as it got a bit messy. It still implements the bare minimum to be able to test basic secret and auth handling logic 'offline' (added very simple path resolution, only handling the minimum set of cases for the tests to work).

- Adds first implementation of caching Cloud replies.

- Currently only caching the `Enso_User.current`. This is a simple one to cache because we do not expect it to ever change, so it can be safely cached for a long period of time (I chose 2h to make it still refresh from time to time while not being noticeable).

- We may try using this for caching other values in future PRs.
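The caching idea above can be sketched in a few lines. This is only an illustration in Python, not the Enso implementation: `TtlCache`, `fetch_current_user` and the injected `clock` are hypothetical names, and only the two-hour lifetime comes from the description.

```python
import time
from typing import Callable, Generic, Optional, TypeVar

T = TypeVar("T")


class TtlCache(Generic[T]):
    """Caches a single value and refetches it once it is older than `ttl_seconds`."""

    def __init__(self, fetch: Callable[[], T], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._value: Optional[T] = None
        self._fetched_at: Optional[float] = None

    def get(self) -> T:
        now = self._clock()
        expired = self._fetched_at is None or now - self._fetched_at >= self._ttl
        if expired:
            self._value = self._fetch()
            self._fetched_at = now
        return self._value


# Hypothetical stand-in for the Cloud request behind `Enso_User.current`.
calls = []


def fetch_current_user() -> dict:
    calls.append(1)
    return {"name": "example-user"}


# A fake clock keeps the example deterministic.
fake_now = [0.0]
cache = TtlCache(fetch_current_user, ttl_seconds=2 * 60 * 60, clock=lambda: fake_now[0])

cache.get()                # first call reaches the (fake) Cloud
cache.get()                # served from the cache
fake_now[0] = 2 * 60 * 60  # two hours later the entry has expired
cache.get()                # fetched again
print(len(calls))          # 2
```

Injecting the clock is a choice made for this sketch so that expiry can be tested without waiting.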

This PR bumps the FlatBuffers version used by the backend to `24.3.25` (the latest version as of now).

Since the newer FlatBuffers releases come with prebuilt binaries for all platforms we target, we can simplify the build process by simply downloading the required `flatc` binary from the official FlatBuffers GitHub release page. This allows us to remove the dependency on `conda`, which was the only reliable way to get the outdated `flatc`.

The `conda` setup has been removed from the CI steps and the relevant code has been removed from the build script.

The FlatBuffers version is no longer hard-coded in the Rust build script; it is inferred from the `build.sbt` definition (similar to GraalVM).

# Important Notes

This does not affect the GUI binary protocol implementation.

While I initially wanted to update it, it turned out to be fairly non-trivial.

As there are multiple issues with the generated TS code, it was significantly refactored by hand and it is impossible to automatically update it. Work to address this problem is left as [a future task](https://github.com/enso-org/enso/issues/9658).

As the FlatBuffers binary protocol is guaranteed to be compatible between versions (unlike the generated sources), there should be no adverse effects from bumping `flatc` only on the backend side.

- Closes #9289

- Ensures that we can refer through `Enso_File` to files that do not _yet_ exist - preparing us for implementing the Write functionalities for `Enso_File` (#9291).

Removes a large number of Rust crates that we no longer need but that added significant install, build and testing time to the Rust parser.

Most significantly, removed `enso-web` and `enso-shapely`, and got rid of many no longer necessary `#![feature]`s. Moved two still used proc-macros from shapely to prelude. The last remaining usage of `web-sys` is within the logger (`console.log`), but we may actually want to keep that one.

This PR updates the Rust toolchain to recent nightly.

Most of the changes are related to fixing newly added warnings and adjusting the feature flags. Also the formatter changed its behavior slightly, causing some whitespace changes.

Other points:

* Changed debug level of the `buildscript` profile to `lint-tables-only` — this should improve the build times and space usage somewhat.

* Moved lint configuration to the workspace `Cargo.toml` definition. Adjusted the formatter appropriately.

* Removed auto-generated IntelliJ run configurations, as they are not useful anymore.

* Added to our codebase a few trivial nightly stdlib functions that had been removed from the standard library.

* Bumped many dependencies but still not all:

* `clap` bump encountered https://github.com/clap-rs/clap/issues/5407 — for now the warnings were silenced by the lint config.

* `octocrab` - our fork diverged too far from the original and needs more refactoring.

* `derivative` — is unmaintained and has no updated version, despite introducing warnings in the generated code. There is no direct replacement.

* Initial connection to Snowflake via an account, username and password.

* Fix databases and schemas in Snowflake.

Add warehouses.

* Add warehouse.

Update schema dropdowns.

* Add ability to set warehouse and pass at connect.

* Fix for NPE in license review

* scalafmt

* Separate Snowflake from Database.

* Scala fmt.

* Legal Review

* Avoid using ARROW for snowflake.

* Tidy up Entity_Naming_Properties.

* Fix for separating Entity_Naming_Properties.

* Allow some tweaking of Postgres dialect to allow snowflake to use as well.

* Working on reading Date, Time and Date Times.

* Changelog.

* Java format.

* Make Snowflake Time and TimeStamp stuff work.

Move some responsibilities to Type_Mapping.

* fix

* Update distribution/lib/Standard/Database/0.0.0-dev/src/Connection/Connection.enso

Co-authored-by: Radosław Waśko <radoslaw.wasko@enso.org>

* PR comments.

* Last refactor for PR.

* Fix.

---------

Co-authored-by: Radosław Waśko <radoslaw.wasko@enso.org>

Co-authored-by: mergify[bot] <37929162+mergify[bot]@users.noreply.github.com>

- Closes #9300

- The Enso libraries are now capable of refreshing the access token themselves, so there are no more problems if the token expires during a long-running workflow.

- Adds `get_optional_field` sibling to `get_required_field` for more unified parsing of JSON responses from the Cloud.

- Adds `expected_type` that checks the type of extracted fields. This way, if the response is malformed we get a nice Enso Cloud error telling us what is wrong with the payload instead of a `Type_Error` later down the line.

- Fixes `Test.expect_panic_with` to actually catch only panics. Before it used to also handle dataflow errors - but these have `.should_fail_with` instead. We should distinguish these scenarios.
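To illustrate the field helpers described above, here is a minimal Python sketch of the same idea. The names `get_required_field`, `get_optional_field` and `expected_type` mirror the description; the `CloudResponseError` class, the `if_missing` parameter and the error wording are assumptions made for this example and do not reflect the actual Enso signatures.

```python
from typing import Any, Optional


class CloudResponseError(Exception):
    """Hypothetical error type standing in for an Enso Cloud error."""


def _check_type(key: str, value: Any, expected_type: Optional[type]) -> Any:
    # Fail early with a message that names the offending field.
    if expected_type is not None and not isinstance(value, expected_type):
        raise CloudResponseError(
            f"Field `{key}` should be {expected_type.__name__}, "
            f"but got {type(value).__name__}."
        )
    return value


def get_required_field(payload: dict, key: str,
                       expected_type: Optional[type] = None) -> Any:
    if key not in payload:
        raise CloudResponseError(f"Missing required field `{key}`.")
    return _check_type(key, payload[key], expected_type)


def get_optional_field(payload: dict, key: str,
                       expected_type: Optional[type] = None,
                       if_missing: Any = None) -> Any:
    if key not in payload or payload[key] is None:
        return if_missing
    return _check_type(key, payload[key], expected_type)


# A made-up, malformed response: `expires_in` should be a number.
payload = {"id": "user-1", "expires_in": "soon"}
user_id = get_required_field(payload, "id", str)
nickname = get_optional_field(payload, "nickname", str)
try:
    get_required_field(payload, "expires_in", int)
    error_message = None
except CloudResponseError as error:
    error_message = str(error)
print(user_id, nickname, error_message)
```

The point of checking at extraction time is that the failure names the field and the payload problem, instead of surfacing as an unrelated type error further down the line.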

One can now once more create CSV files from benchmark results with something like:

```
./bench_download.py -v -s stdlib --since 2024-01-01 --create-csv
```

The generated CSV is ready to be read by the Enso IDE.

# Important Notes

- Fix `--create-csv` functionality of the `bench_download.py` script.

- Remove an outdated Enso project from `tools/performance/engine_benchmarks/Engine_Benchs`

- This is now done by book clubs.

- Adds the Excel format as one of the formats supported when creating a data link.

- The data link can choose to read the file as a workbook, or read a sheet or range from it as a table, like `Excel_Format`.

- Also updated Delimited format dialog to allow customizing the quote style.

After some recent changes, the HTTP server helper would no longer stop when Ctrl-C was issued. The semaphore was being used incorrectly: it was released on the same thread that was supposed to acquire it. The acquire never returned because it was waiting for the release, so the release could never happen, leaving the main thread permanently deadlocked.
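The corrected pattern can be shown with a small Python sketch (the helper itself is not written in Python, and all names here are hypothetical): the waiting thread only acquires, and a different thread, playing the role of the Ctrl-C handler, performs the release.

```python
import threading

# Starts with zero permits, so `acquire` blocks until another thread releases.
stop_signal = threading.Semaphore(0)
events = []


def request_shutdown() -> None:
    """Runs on a different thread than the waiter (for example a Ctrl-C handler)."""
    events.append("shutdown requested")
    stop_signal.release()


def serve_until_stopped(timeout_seconds: float) -> bool:
    """Blocks the calling thread until `request_shutdown` is called elsewhere."""
    stopped = stop_signal.acquire(timeout=timeout_seconds)
    events.append("server stopped" if stopped else "timed out")
    return stopped


# The broken pattern placed `release()` after `acquire()` on the same thread,
# which made the release unreachable. Here a timer thread triggers the release.
timer = threading.Timer(0.05, request_shutdown)
timer.start()
result = serve_until_stopped(timeout_seconds=5.0)
timer.join()
print(result, events)
```

The timeout on `acquire` exists only to keep the example from hanging if it is modified incorrectly.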

- Closes #9120

- Reorders CI steps to do the license check last (to avoid it preventing tests from running which are more important than the license check)

- Tries to reword the warnings to be clearer

- Adds some CSS to the report to more clearly indicate which elements can be clicked.

`Bump` library uses parser combinators behind the scenes which are known to be good at expressing grammars but are not performance-oriented.

This change drops the dependency in favour of an existing Java implementation. `jsemver` implements the full specification, which is probably overkill in our case, but it proved to be an almost drop-in replacement for the previous library.

Closes #8692

# Important Notes

Performance improvements:

- roughly 50ms faster than the previous approach (from 80ms to 20-40ms)

I don't see any time spent in the new implementation during startup so it could be potentially aggressively inlined.

Furthermore, we could use a facade and offer our own stripped-down version of semver.

There are two projects transitively required by `runtime`, that have akka dependencies:

- `downloader`

- `connected-lock-manager`

This PR replaces the `akka-http` dependency in `downloader` by HttpClient from JDK, and splits `connected-lock-manager` into two projects such that there are no akka classes in `runtime.jar`.

# Important Notes

- Simplify the `downloader` project - remove akka.

- Add HTTP tests to the `downloader` project that uses our `http-test-helper` that is normally used for stdlib tests.

- It required a few tweaks so that we can embed that server in a unit test.

- Split `connected-lock-manager` project into two projects - remove akka from `runtime`.

- **Native image build fixes and quality of life improvements:**

- Output of `native-image` is captured 743e167aa4

- The output will no longer be intertwined with the output from other commands on the CI.

- Arguments to the `native-image` are passed via an argument file, not via command line - ba0a69de6e

- This resolves an issue on Windows with "Command line too long", for example in https://github.com/enso-org/enso/actions/runs/7934447148/job/21665456738?pr=8953#step:8:2269
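The argument-file approach can be sketched as follows. This is a Python illustration rather than the build code from this PR: it assumes that `native-image` accepts the `@file` syntax used by the JDK launchers, that arguments contain no whitespace, and the file name and jar paths are made up.

```python
import os
import tempfile
from typing import List


def build_native_image_command(args: List[str], workdir: str) -> List[str]:
    """Writes `args` to a file and returns a short command that references it."""
    arg_file = os.path.join(workdir, "native-image-args.txt")
    with open(arg_file, "w", encoding="utf-8") as f:
        for arg in args:
            # One argument per line; this sketch assumes no whitespace in arguments.
            f.write(arg + "\n")
    return ["native-image", "@" + arg_file]


with tempfile.TemporaryDirectory() as tmp:
    # A long classpath is the typical reason for hitting the command line limit.
    classpath = os.pathsep.join(f"lib/dep-{i}.jar" for i in range(500))
    long_args = ["-cp", classpath, "--no-fallback"]
    command = build_native_image_command(long_args, tmp)
    with open(command[1][1:], encoding="utf-8") as f:
        written = f.read().splitlines()

print(len(" ".join(long_args)), len(command))
```

The resulting command always has two elements regardless of how long the classpath grows, which is what avoids the "Command line too long" failure.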

Updates Google_Api version for authentication and adds Google Analytics reporting api and run_google_report method.

This is an initial method for proof of concept, with further design changes to follow.

# Important Notes

Updates google-api-client to v 2.2.0 from 1.35.2

Adds google-analytics-data v 0.44.0

Let's _untie_ the [VSCode Enso extension](https://marketplace.visualstudio.com/items?itemName=Enso.enso4vscode) from `sbt` commands. Let's **open any Enso file in the editor** and then use _F5_ or _Ctrl-F5_ to execute it. Let the user choose which `bin/enso` script to use for execution completely skipping the need for `sbt`.

- ✅Linting fixes and groups.

- ✅Add `File.from that:Text` and use `File` conversions instead of taking both `File` and `Text` and calling `File.new`.

- ✅Align Unix Epoch with the UTC timezone and add converting from long value to `Date_Time` using it.

- ❌Add simple first logging API allowing writing to log messages from Enso.

- ✅Fix minor style issue where a test type had an empty constructor.

- ❌Added a `long` based array builder.

- Added `File_By_Line` to read a file line by line.

- Added "fast" JSON parser based off Jackson.

- ✅Altered range `to_vector` to be a proxy Vector.

- ✅Added `at` and `get` to `Database.Column`.

- ✅Added `get` to `Table.Column`.

- ✅Added ability to expand `Vector`, `Array`, `Range`, `Date_Range` to columns.

- ✅Altered so `expand_to_column` default column name will be the same as the input column (i.e. no `Value` suffix).

- ✅Added ability to expand `Map`, `JS_Object` and `Jackson_Object` to rows with two columns coming out (and extra key column).

- ✅ Fixed bug where an integer index could not be used to expand to rows.

- Closes #8723

- Adds some missing features that were needed to make this work:

- `Enso_File.create_directory` and `Enso_File.delete`, and basic tests for it

- Changes how `Enso_Secret.list` is obtained - using a different Cloud endpoint allows us to implement the desired logic, the default endpoint was giving us _all_ secrets which was not what we wanted here.

- Implements `Enso_Secret.update` and tests for it

# Important Notes

Notes describing any problems with the current Cloud API:

https://docs.google.com/document/d/1x8RUt3KkwyhlxGux7XUGfOdtFSAZV3fI9lSSqQ3XsXk/edit

Apparently, everything that was needed to make this feature work has already been implemented, although a few features needed workarounds on Enso side to work properly.

Recently a classpath misconfiguration appeared in `engine/runtime` project:

The problem was caused by the `XyzTest.java` files being incorrectly assigned to the `engine/runtime/src/main/java` source root and its classpath (which obviously doesn't contain JUnit). This PR restricts the `srcCp` to `inputDir`, when it is known. This properly assigns the `XyzTest.java` files to the `engine/runtime/src/test/java` source root. The IGV as well as VSCode support seems to recognize the unit test classpath properly now.

- Closes #8555

- Refactors the file format detection logic, compacting lots of repetitive logic for HTTP handling into helper functions.

- Some updates to CODEOWNERS.

- After [suggestion](https://github.com/enso-org/enso/pull/8497#discussion_r1429543815) from @JaroslavTulach I have tried reimplementing the URL encoding using just `URLEncode` builtin util. I will see if this does not complicate other followup improvements, but most likely all should work so we should be able to get rid of the unnecessary bloat.

- Closes #8354

- Extends `simple-httpbin` with a simple mock of the Cloud API (currently it checks the token and serves the `/users` endpoint).

- Renames `simple-httpbin` to `http-test-helper`.

- Closes #8352

- ~~Proposed fix for #8493~~

- The temporary fix is deemed not viable. I will try to figure out a workaround and leave fixing #8493 to the engine team.

- Closes #8111 by making sure that all Excel workbooks are read using a backing file (which should be more memory efficient).

- If the workbook is being opened from an input stream, that stream is materialized to a `Temporary_File`.

- Adds tests fetching Table formats from HTTP.

- Extends `simple-httpbin` with ability to serve files for our tests.

- Ensures that the `Infer` option on `Excel` format also works with streams, if content-type metadata is available (e.g. from HTTP headers).

- Implements a `Temporary_File` facility that can be used to create a temporary file that is deleted once all references to the `Temporary_File` instance are GCed.
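The lifetime rule of `Temporary_File` (the file disappears once nothing references the instance any more) can be illustrated with a Python analogue built on `weakref.finalize`. This is a sketch of the concept only; the class, the `materialize` helper and the file prefix are hypothetical and unrelated to the Enso implementation.

```python
import gc
import io
import os
import tempfile
import weakref


def _delete_if_exists(path: str) -> None:
    if os.path.exists(path):
        os.remove(path)


class TemporaryFile:
    """Owns a file on disk that is removed when the owner is garbage collected."""

    def __init__(self, data: bytes) -> None:
        fd, self.path = tempfile.mkstemp(prefix="enso-sketch-")
        with os.fdopen(fd, "wb") as f:
            f.write(data)
        # The callback receives only the path; capturing `self` would keep
        # the object alive forever and the file would never be deleted.
        self._finalizer = weakref.finalize(self, _delete_if_exists, self.path)


def materialize(stream) -> TemporaryFile:
    """Copies an input stream to disk so it can be opened as a backing file."""
    return TemporaryFile(stream.read())


tmp = materialize(io.BytesIO(b"workbook bytes"))
path = tmp.path
existed_while_referenced = os.path.exists(path)

del tmp       # drop the last reference
gc.collect()  # make collection explicit for runtimes without reference counting
exists_after_collection = os.path.exists(path)
print(existed_while_referenced, exists_after_collection)
```

Tying deletion to garbage collection means callers do not need an explicit cleanup step, at the cost of not knowing exactly when the file is removed.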

Adds these JAR modules to the `component` directory inside Engine distribution:

- `graal-language-23.1.0`

- `org.bouncycastle.*` - these need to be added for graalpy language

# Important Notes

- Remove `org.bouncycastle.*` packages from `runtime.jar` fat jar.

- Make sure that the `./run` script preinstalls GraalPy standalone distribution before starting engine tests

- Note that using `python -m venv` is only possible from standalone distribution, we cannot distribute `graalpython-launcher`.

- Make sure that installation of `numpy` and its polyglot execution example works.

- Convert `Text` to `TruffleString` before passing to GraalPy - 8ee9a2816f

Upgrade to GraalVM JDK 21.

```
> java -version
openjdk version "21" 2023-09-19
OpenJDK Runtime Environment GraalVM CE 21+35.1 (build 21+35-jvmci-23.1-b15)
OpenJDK 64-Bit Server VM GraalVM CE 21+35.1 (build 21+35-jvmci-23.1-b15, mixed mode, sharing)
```

With SDKMan, download with `sdk install java 21-graalce`.

# Important Notes

- After this PR, one can theoretically run enso with any JRE with version at least 21.

- Removed `sbt bootstrap` hack and all the other build time related hacks related to the handling of GraalVM distribution.

- `project-manager` remains backward compatible - it can open older engines with runtimes. New engines no longer require a separate runtime to be downloaded.

- sbt does not support compilation of `module-info.java` files in mixed projects - https://github.com/sbt/sbt/issues/3368

- Which means that we can have `module-info.java` files only for Java-only projects.

- Anyway, we need just a single `module-info.class` in the resulting `runtime.jar` fat jar.

- `runtime.jar` is assembled in `runtime-with-instruments` with a custom merge strategy (`sbt-assembly` plugin). Caching is disabled for custom merge strategies, which means that re-assembly of `runtime.jar` will be more frequent.

- Engine distribution contains multiple JAR archives (modules) in `component` directory, along with `runner/runner.jar` that is hidden inside a nested directory.

- The new entry point to the engine runner is [EngineRunnerBootLoader](https://github.com/enso-org/enso/pull/7991/files#diff-9ab172d0566c18456472aeb95c4345f47e2db3965e77e29c11694d3a9333a2aa) that contains a custom ClassLoader - to make sure that everything that does not have to be loaded from a module is loaded from `runner.jar`, which is not a module.

- The new command line for launching the engine runner is in [distribution/bin/enso](https://github.com/enso-org/enso/pull/7991/files#diff-0b66983403b2c329febc7381cd23d45871d4d555ce98dd040d4d1e879c8f3725)

- [Newest version of Frgaal](https://repo1.maven.org/maven2/org/frgaal/compiler/20.0.1/) (20.0.1) does not recognize `--source 21` option, only `--source 20`.

The change upgrades the `directory-watcher` library, hoping that it will fix the problem reported in #7695 (there have been a number of bug fixes in the macOS listener since then).

Once upgraded, tests in `WatcherAdapterSpec` started failing because the logic that attempted to ensure the proper initialization order in the test using a semaphore was wrong. The watcher is now started using `watchAsync`, which only returns the future when the watcher successfully registers for paths. Ideally the authors of the library would make the registration bit public

(3218d68a84/core/src/main/java/io/methvin/watcher/DirectoryWatcher.java (L229C7-L229C20)) but it is the best we can do so far.

Had to adapt to the new API in PathWatcher as well, ensuring the right order of initialization.

Should fix #7695.

Having modest-size files in a project would lead to a timeout when the project was first initialized. This became apparent when testing delivered `.enso-project` files with some data files. Some digging turned up a bug in JGit

(https://bugs.eclipse.org/bugs/show_bug.cgi?id=494323) which meant that adding such files was really slow. The implemented fix is not on by default but even with `--renormalization` turned off I did not see improvement.

In the end it didn't make sense to add `data` directory to our version control, or any other files than those in `src` or some meta files in `.enso`. Not including such files eliminates first-use initialization problems.

# Important Notes

To test, pick an existing Enso project with some data files in it (> 100MB) and remove the `.enso/.vcs` directory. Previously it would time out on the first try (and work in successive runs). Now it works even on the first try.

The crash:

```
[org.enso.languageserver.requesthandler.vcs.InitVcsHandler] Initialize project request [Number(2)] for [f9a7cd0d-529c-4e1d-a4fa-9dfe2ed79008] failed with: null.
java.util.concurrent.TimeoutException: null
    at org.enso.languageserver.effect.ZioExec$.<clinit>(Exec.scala:134)
    at org.enso.languageserver.effect.ZioExec.$anonfun$exec$3(Exec.scala:60)
    at org.enso.languageserver.effect.ZioExec.$anonfun$exec$3$adapted(Exec.scala:60)
    at zio.ZIO.$anonfun$foldCause$4(ZIO.scala:683)
    at zio.internal.FiberRuntime.runLoop(FiberRuntime.scala:904)
    at zio.internal.FiberRuntime.evaluateEffect(FiberRuntime.scala:381)
    at zio.internal.FiberRuntime.evaluateMessageWhileSuspended(FiberRuntime.scala:504)
    at zio.internal.FiberRuntime.drainQueueOnCurrentThread(FiberRuntime.scala:220)
    at zio.internal.FiberRuntime.run(FiberRuntime.scala:139)
    at akka.dispatch.TaskInvocation.run(AbstractDispatcher.scala:49)
    at java.base/java.util.concurrent.ForkJoinTask$RunnableExecuteAction.exec(ForkJoinTask.java:1395)
    at java.base/java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:373)
    at java.base/java.util.concurrent.ForkJoinPool$WorkQueue.topLevelExec(ForkJoinPool.java:1182)
```

* Reduce extra output in compilation and tests

I couldn't stand the amount of extra output that we got when compiling

a clean project and when executing regular tests. We should strive to

keep output clean and not print anything additional to stdout/stderr.

* Getting rid of explicit setup by service loading

In order for SLF4J to use service loading correctly we had to upgrade to

latest slf4j. Unfortunately `TestLogProvider` which essentially

delegates to `logback` provider will lead to spurious ambiguous warnings

on multiple providers. In order to dictate which one to use and

therefore eliminate the warnings we can use the `slf4j.provider` env

var, which is only available in slf4j 2.x.

Now, there is no need to explicitly call `LoggerSetup.get().setup()` as

that is being called during service setup.

* legal review

* linter

* Ensure ConsoleHandler uses the default level

ConsoleHandler's constructor uses `Level.INFO` which is unnecessary for

tests.

* report warnings

* Enable log-to-file configuration

PR #7825 enabled parallel logging to a file with a much more

fine-grained log level by default.

However, logging at `TRACE` level on Windows appears to be still

problematic.

This PR reduced the default log level to file from `TRACE` to `DEBUG`

and allows to control it via an environment variable if one wishes to

change the verbosity without making code changes.

* PR comments

* Always log verbose to a file

The change adds an option by default to always log to a file with

verbose log level.

The implementation is a bit tricky because in the most common use-case

we have to always log in verbose mode to a socket and only later apply

the desired log levels. Previously socket appender would respect the

desired log level already before forwarding the log.

If by default we log to a file, verbose mode is simply ignored and does

not override user settings.

To test run `project-manager` with `ENSO_LOGSERVER_APPENDER=console` env

variable. That will output to the console with the default `INFO` level

and `TRACE` log level for the file.

* add docs

* changelog

* Address some PR requests

1. Log INFO level to CONSOLE by default

2. Change runner's default log level from ERROR to WARN

Took a while to figure out why the correct log level wasn't being passed

to the language server, therefore ignoring the (desired) verbose logs

from the log file.

* linter

* 3rd party uses log4j for logging

Getting rid of the warning by adding a log4j over slf4j bridge:

```
ERROR StatusLogger Log4j2 could not find a logging implementation. Please add log4j-core to the classpath. Using SimpleLogger to log to the console...
```

* legal review update

* Make sure tests use test resources

Having `application.conf` in `src/main/resources` and `test/resources`

does not guarantee that in Tests we will pick up the latter. Instead, by

default it seems to do some kind of merge of different configurations,

which is far from desired.

* Ensure native launcher test log to console only

Logging to console and (temporary) files is problematic for Windows.

The CI also revealed a problem with the native configuration because it

was not possible to modify the launcher via env variables as everything

was initialized during build time.

* Adapt to method changes

* Potentially deal with Windows failures

# Important Notes

- Binary LS endpoint is not yet handled.

- The parsing of the provided source is not entirely correct, as each line (including imports) is treated as a node. The usage of the actual Enso AST for nodes is not yet implemented.

- Modifications to the graph state are not yet synchronized back to the language server.

This change replaces Enso's custom logger with an existing, mostly off-the-shelf logging implementation. The change attempts to provide a 1:1 replacement for the existing solution while requiring only minimal logic for the initialization.

Loggers are configured completely via the `logging-server` section in the `application.conf` HOCON file; all initial logback configuration has been removed. This opens up a lot of interesting opportunities because we can benefit from all the well-maintained slf4j implementations without being tied to them in terms of functionality.

Most important differences have been outlined in `docs/infrastructure/logging.md`.

# Important Notes

Addresses:

- #7253

- #6739

New rules to recognize `"""` and `'''` at the end of line and color everything that's inside nested block of text as string. It properly _stops the string literal when nested block ends_.

# Important Notes

The [text mate grammar rules](https://macromates.com/manual/en/language_grammars) properly _end_ the text block when the block ends. For example in:

```ruby
main =
    x = """
        a text
    y
```

`a text` is properly recognized as string.
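The rule can be restated as a small Python function, with the nested indentation of the example written out explicitly. This is not the TextMate grammar itself, only a sketch of the behaviour it aims for, and it makes a simplifying assumption: blank lines following an opener are treated as part of the block.

```python
from typing import List

OPENERS = ('"""', "'''")


def indent_of(line: str) -> int:
    return len(line) - len(line.lstrip(" "))


def text_block_lines(source: str) -> List[int]:
    """Returns 0-based indices of lines that belong to a text block body."""
    result = []
    block_indent = None  # indentation of the line that opened the current block
    for i, line in enumerate(source.splitlines()):
        if block_indent is not None:
            if not line.strip() or indent_of(line) > block_indent:
                result.append(i)
                continue
            block_indent = None  # dedent: the nested block has ended
        if line.rstrip().endswith(OPENERS):
            block_indent = indent_of(line)
    return result


example = 'main =\n    x = """\n        a text\n    y\n'
print(text_block_lines(example))  # [2]
```

Only line 2 (`a text`) is reported as string content; `y` sits at the indentation of the opening line and therefore ends the block.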

The added benchmark is a basis for a performance investigation.

We compare the performance of the same operation run in Java vs Enso to see what is the overhead and try to get the Enso operations closer to the pure-Java performance.

- Previous GraalVM update: https://github.com/enso-org/enso/pull/6750

Removed warnings:

- Remove deprecated `ConditionProfile.createCountingProfile()`.

- Add `@Shared` to some `@Cached` parameters (Truffle now emits warnings about potential `@Share` usage).

- Specialization method names should not start with execute

- Add limit attribute to some specialization methods

- Add `@NeverDefault` for some cached initializer expressions

- Add `@Idempotent` or `@NonIdempotent` where appropriate

BigInteger and potential Node inlining are tracked in follow-up issues.

# Important Notes

For `SDKMan` users:

```
sdk install java 17.0.7-graalce
sdk use java 17.0.7-graalce
```

For other users - download link can be found at https://github.com/graalvm/graalvm-ce-builds/releases/tag/jdk-17.0.7

Release notes: https://www.graalvm.org/release-notes/JDK_17/

R component was dropped from the release 23.0.0, only `python` is available to install via `gu install python`.

Engine benchmark downloader tool [bench_download.py](https://github.com/enso-org/enso/blob/develop/tools/performance/engine-benchmarks/bench_download.py) can now plot multiple branches in the same charts. The tooltips on the charts, displayed when you hover over some data point, are now broken for some branches. I have no idea why, it might be a technical limitation of the Google charts library. Nevertheless, I have also extended the *selection info* section, that is displayed under every chart, where one can see all the important information, once you click on some data point.

Options added:

- `--branches` specifies list of branches for which all the benchmark data points will be in the plots. The default is `develop` only.

- `--labels` that can limit the number of generated charts.

This PR also **deprecates** the `--compare` option. There is no reason to keep that option around since we can now plot all the branches in the same charts.

An example for plotting benchmarks for PR #7009 with

```
python bench_download.py -v --since 2023-07-01 --until 2023-07-11 --branches develop wip/jtulach/ArgumentConversion
```

is:

# Important Notes

- Deprecate `--compare` option

- Add `--labels` option

- Add `--branches` option

- Add type detection for `Mixed` columns when calling column functions.

- Excel uses column name for missing headers.

- Add aliases for parse functions on text.

- Adjust `Date`, `Time_Of_Day` and `Date_Time` parse functions to not take `Nothing` anymore and provide dropdowns.

- Removed built-in parses.

- All support Locale.

- Add support for missing day or year for parsing a Date.

- All will trim values automatically.

- Added ability to list AWS profiles.

- Added ability to list S3 buckets.

- Workaround for Table.aggregate so default item added works.

Add diagnosis for unresolved symbols in `from ... import sym1, sym2, ...` statements.

- Adds a new compiler pass, `ImportSymbolAnalysis`, that checks these statements and iterates through the symbols and checks if all the symbols can be resolved.

- Works with `BindingsMap` metadata.

- Add `ImportExportTest` that creates various modules with various imports/exports and checks their generated `BindingsMap`.
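The check performed by the pass can be approximated in Python as follows. This is a conceptual sketch only: the real pass works on the compiler IR and `BindingsMap` metadata, while here a plain dictionary of exported names stands in for that metadata, only the explicit-list form of the statement is handled, and the module and symbol names are invented.

```python
import re
from typing import Dict, List, Set

# Matches only the explicit-list form `from <module> import a, b, c`.
IMPORT_RE = re.compile(r"^from\s+(\S+)\s+import\s+(.+)$")


def unresolved_symbols(statement: str, exported: Dict[str, Set[str]]) -> List[str]:
    """Returns the imported symbols that the target module does not export."""
    match = IMPORT_RE.match(statement.strip())
    if match is None:
        return []  # not an import form handled by this sketch
    module, names = match.group(1), match.group(2)
    symbols = [name.strip() for name in names.split(",") if name.strip()]
    available = exported.get(module, set())
    return [symbol for symbol in symbols if symbol not in available]


# Hypothetical stand-in for the metadata of already compiled modules.
exported = {"My_Project.Shapes": {"Circle", "Square"}}

missing = unresolved_symbols("from My_Project.Shapes import Circle, Triangle", exported)
print(missing)  # ['Triangle']
```

Each name returned here corresponds to one diagnostic that would be reported for the import statement.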

---------

Co-authored-by: mergify[bot] <37929162+mergify[bot]@users.noreply.github.com>

Co-authored-by: Jaroslav Tulach <jaroslav.tulach@enso.org>

`Number.nan` can now be used as a key in `Map`. To make that possible, this PR implements support for [JavaScript's Same Value Zero Equality](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Equality_comparisons_and_sameness#same-value-zero_equality).

# Important Notes

- For NaN, it holds that `Meta.is_same_object Number.nan Number.nan`, and `Number.nan != Number.nan` - inspired by JS spec.

- `Meta.is_same_object x y` implies `Any.== x y`, except for `Number.nan`.
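The effect of Same Value Zero equality on map keys can be demonstrated in Python, where NaN is also unequal to itself. The sketch below is an analogy, not the Enso implementation: `SameValueZeroMap` and `_normalize` are hypothetical names, and the approach (mapping every NaN to one shared sentinel key) is just one simple way to get the behaviour.

```python
import math
from typing import Any

_NAN_KEY = object()  # single stand-in used for every NaN value


def _normalize(key: Any) -> Any:
    if isinstance(key, float) and math.isnan(key):
        return _NAN_KEY
    return key


class SameValueZeroMap:
    """A map in which all NaN keys are the same key, and 0.0 equals -0.0."""

    def __init__(self) -> None:
        self._entries = {}

    def insert(self, key: Any, value: Any) -> None:
        self._entries[_normalize(key)] = value

    def get(self, key: Any, default: Any = None) -> Any:
        return self._entries.get(_normalize(key), default)

    def __len__(self) -> int:
        return len(self._entries)


nan_a = float("nan")
nan_b = float("inf") - float("inf")  # a NaN produced by a computation

m = SameValueZeroMap()
m.insert(nan_a, "first")
m.insert(nan_b, "second")  # overwrites: both keys are NaN
m.insert(0.0, "zero")

print(nan_a == nan_b, len(m), m.get(float("nan")), m.get(-0.0))
```

Ordinary equality still reports the two NaN values as different, while the map treats them as one key, which mirrors the distinction between `==` and `Meta.is_same_object` described above.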

* Update type ascriptions in some operators in Any

* Add @GenerateUncached to AnyToTextNode.

Will be used in another node with @GenerateUncached.

* Add tests for "sort handles incomparable types"

* Vector.sort handles incomparable types

* Implement sort handling for different comparators

* Comparison operators in Any do not throw Type_Error

* Fix some issues in Ordering_Spec

* Remove the remaining comparison operator overrides for numbers.

* Consolidate all sorting functionality into a single builtin node.

* Fix warnings attachment in sort

* PrimitiveValuesComparator handles other types than primitives

* Fix byFunc calling

* on function can be called from the builtin

* Fix build of native image

* Update changelog

* Add VectorSortTest

* Builtin method should not throw DataflowError.

If yes, the message is discarded (a bug?)

* TypeOfNode may not return only Type

* UnresolvedSymbol is not supported as `on` argument to Vector.sort_builtin

* Fix docs

* Fix bigint spec in LessThanNode

* Small fixes

* Small fixes

* Nothings and Nans are sorted at the end of default comparator group.

But not at the whole end of the resulting vector.

* Fix checking of `by` parameter - now accepts functions with default arguments.

* Fix changelog formatting

* Fix imports in DebuggingEnsoTest

* Remove Array.sort_builtin

* Add comparison operators to micro-distribution

* Remove Array.sort_builtin

* Replace Incomparable_Values by Type_Error in some tests

* Add on_incomparable argument to Vector.sort_builtin

* Fix after merge - Array.sort delegates to Vector.sort

* Add more tests for problem_behavior on Vector.sort

* SortVectorNode throws only Incomparable_Values.

* Delete Collections helper class

* Add test for expected failure for custom incomparable values

* Cosmetics.

* Fix test expecting different comparators warning

* isNothing is checked via interop

* Remove TruffleLogger from SortVectorNode

* Small review refactorings

* Revert "Remove the remaining comparison operator overrides for numbers."

This reverts commit 0df66b1080.

* Improve bench_download.py tool's `--compare` functionality.

- Output table is sorted by benchmark labels.

- Do not fail when there are different benchmark labels in both runs.

* Wrap potential interop values with `HostValueToEnsoNode`

* Use alter function in Vector_Spec

* Update docs

* Invalid comparison throws Incomparable_Values rather than Type_Error

* Number comparison builtin methods return Nothing in case of incomparables

close #6080

Changelog

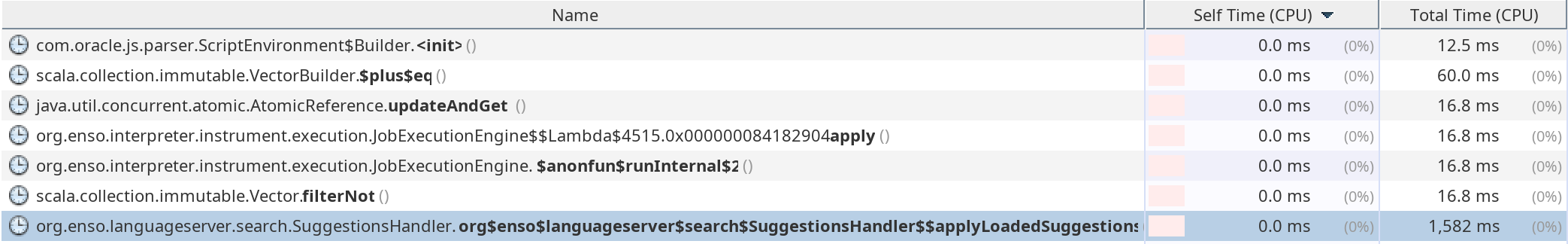

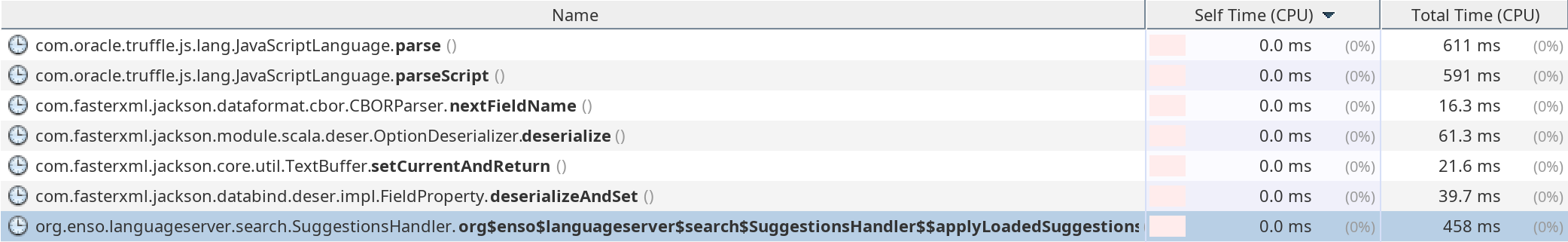

- add: implement `SuggestionsRepo.insertAll` as a batch SQL insert

- update: `search/getSuggestionsDatabase` returns empty suggestions. Currently, the method is only used at startup and returns the empty response anyway because the libs are not loaded at that point.

- update: serialize only global (defined in the module scope) suggestions during the distribution building. There's no sense in storing the local library suggestions.

- update: sqlite dependency

- remove: unused methods from `SuggestionsRepo`

- remove: Arguments table
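For readers unfamiliar with the difference between row-by-row and batch inserts, here is a minimal Python sketch using the standard `sqlite3` module. It does not reflect the actual `SuggestionsRepo` code; the table layout, the `insert_all` signature and the sample rows are assumptions made for the example.

```python
import sqlite3
from typing import Iterable, Tuple

Suggestion = Tuple[str, str, str]  # (module, name, kind): a hypothetical schema


def insert_all(connection: sqlite3.Connection,
               suggestions: Iterable[Suggestion]) -> int:
    """Inserts all rows in one transaction using a single prepared statement."""
    rows = list(suggestions)
    with connection:  # commits on success, rolls back on error
        connection.executemany(
            "INSERT INTO suggestions (module, name, kind) VALUES (?, ?, ?)", rows
        )
    return len(rows)


connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE suggestions (module TEXT, name TEXT, kind TEXT)")

inserted = insert_all(connection, [
    ("Standard.Base.Data.Vector", "Vector", "type"),
    ("Standard.Base.Data.Vector", "sort", "method"),
    ("Standard.Base.Data.Map", "Map", "type"),
])
stored = connection.execute("SELECT COUNT(*) FROM suggestions").fetchone()[0]
print(inserted, stored)
```

Grouping the rows into one statement and one transaction avoids paying the per-statement and per-commit overhead for every suggestion.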

# Important Notes

Speeds up libraries loading by ~1 second.

Add `--compare <bench-run-id-1> <bench-run-id-2>` option to `bench_download.py` script, that prints the difference of benchmark scores for two benchmark runs from GH as a table.
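The comparison itself amounts to a per-label subtraction. The following Python sketch shows one way to compute and print such a table; it is not the code from `bench_download.py`, the function names and sample scores are invented, and restricting the output to labels present in both runs is a choice made for this example.

```python
from typing import Dict, List, Tuple

Row = Tuple[str, float, float, float]  # (label, score 1, score 2, difference)


def compare_runs(first: Dict[str, float], second: Dict[str, float]) -> List[Row]:
    """Computes score differences for labels present in both runs, sorted by label."""
    rows = []
    for label in sorted(first.keys() & second.keys()):
        a, b = first[label], second[label]
        rows.append((label, a, b, b - a))
    return rows


def format_table(rows: List[Row]) -> str:
    lines = [f"{'label':<30} {'run 1':>10} {'run 2':>10} {'diff':>10}"]
    for label, a, b, diff in rows:
        lines.append(f"{label:<30} {a:>10.2f} {b:>10.2f} {diff:>+10.2f}")
    return "\n".join(lines)


# Made-up scores keyed by benchmark label.
run_1 = {"vector.sort": 2.0, "map.insert": 1.0, "only.in.first": 5.0}
run_2 = {"map.insert": 1.5, "vector.sort": 1.0}

rows = compare_runs(run_1, run_2)
print(format_table(rows))
```

Labels that appear in only one of the runs are skipped here rather than treated as an error.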

# Important Notes

I tried to add an option to compare two or more branches and visualize them in the graphs, but gave that up after some struggling.

Implement new Enso documentation parser; remove old Scala Enso parser.

Performance: Total time parsing documentation is now ~2ms.

# Important Notes

- Doc parsing is now done only in the frontend.

- Some engine tests had never been switched to the new parser. We should investigate tests that don't pass after the switch: #5894.

- The option to run the old searcher has been removed, as it is obsolete and was already broken before this (see #5909).

- Some interfaces used only by the old searcher have been removed.

Fixing Node.js dependencies, as:

```bash
enso/tools/enso4igv$ mvn clean install -Pvsix
```

was broken. Making sure the VSIX build is part of the _actions workflow_.

# Important Notes

To reproduce/verify remove `enso/tools/enso4igv/node_modules` first and try to build.

Automating the assembly of the engine and its execution into a single task. If you are modifying standard libraries, engine sources, or Enso tests, you can launch `sbt` and then just run:

```
sbt:enso> runEngineDistribution --run test/Tests/src/Data/Maybe_Spec.enso
[info] Engine package created at built-distribution/enso-engine-0.0.0-dev-linux-amd64/enso-0.0.0-dev
[info] Executing built-distribution/enso-engine-...-dev/bin/enso --run test/Tests/src/Data/Maybe_Spec.enso
Maybe: [5/5, 30ms]
- should have a None variant [14ms]
- should have a Some variant [5ms]
- should provide the `maybe` function [4ms]
- should provide `is_some` [2ms]
- should provide `is_none` [3ms]
5 tests succeeded.
0 tests failed.
0 tests skipped.
```

The [runEngineDistribution](3a581f29ee/docs/CONTRIBUTING.md (running-enso)) `sbt` input task makes sure all your sources are properly compiled and only then executes your Enso source. Everything is ready at a single press of Enter.

# Important Notes

To debug in Chrome DevTools, just add `--inspect`:

```
sbt:enso> runEngineDistribution --inspect --run test/Tests/src/Data/Maybe_Spec.enso
E.g. in Chrome open: devtools://devtools/bundled/js_app.html?ws=127.0.0.1:9229/7JsgjXlntK8
```

Everything gets built and one can just attach the Enso debugger.

This PR aims to fix current issues with the cloud IDE.

1. Backend image:

* bumping the system version to avoid glibc version mismatch on parser;

* explicitly installing required GraalVM components;

2. Frontend upload:

* update to follow the new file naming;

* uploading the whole shaders subtree.

The new jgit integration tries to create a config file at `~/.config/jgit/config`. We currently create the default `ensodev` user with an explicit `-M, --no-create-home`, so that directory cannot be created. Since there was no strong reasoning behind that choice, this commit changes it to fix the problems with initialising jgit.

Error that this PR fixes:

```
Jan 30 11:21:47 ip-172-31-0-83.eu-west-1.compute.internal enso_runtime[3853]: [error] [2023-01-30T11:21:47.185Z] [org.eclipse.jgit.util.FS] Cannot save config file 'FileBasedConfig[/home/ensodev/.config/jgit/config]'
Jan 30 11:21:47 ip-172-31-0-83.eu-west-1.compute.internal enso_runtime[3853]: java.io.IOException: Some(Creating directories for /home/ensodev/.config/jgit failed)
Jan 30 11:21:47 ip-172-31-0-83.eu-west-1.compute.internal enso_runtime[3853]: at org.eclipse.jgit.util.FileUtils.mkdirs(FileUtils.java:413)
Jan 30 11:21:47 ip-172-31-0-83.eu-west-1.compute.internal enso_runtime[3853]: at org.eclipse.jgit.internal.storage.file.LockFile.lock(LockFile.java:140)
Jan 30 11:21:47 ip-172-31-0-83.eu-west-1.compute.internal enso_runtime[3853]: at org.eclipse.jgit.storage.file.FileBasedConfig.save(FileBasedConfig.java:184)
Jan 30 11:21:47 ip-172-31-0-83.eu-west-1.compute.internal enso_runtime[3853]: at org.eclipse.jgit.util.FS$FileStoreAttributes.saveToConfig(FS.java:761)
Jan 30 11:21:47 ip-172-31-0-83.eu-west-1.compute.internal enso_runtime[3853]: at org.eclipse.jgit.util.FS$FileStoreAttributes.lambda$5(FS.java:443)
Jan 30 11:21:47 ip-172-31-0-83.eu-west-1.compute.internal enso_runtime[3853]: at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)
Jan 30 11:21:47 ip-172-31-0-83.eu-west-1.compute.internal enso_runtime[3853]: at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)
Jan 30 11:21:47 ip-172-31-0-83.eu-west-1.compute.internal enso_runtime[3853]: at java.base/java.lang.Thread.run(Thread.java:829)
```

In order to investigate the `engine/language-server` project, I need to be able to open its sources in IGV and NetBeans.

# Important Notes

By adding the same kind of Java source (this time `package-info.java`) and compiling it with our Frgaal compiler, the necessary `.enso-sources*` files are generated for `engine/language-server`. The `enso4igv` plugin can then open them and properly understand their compile settings.

In addition, this PR enhances the _"logical view"_ presentation of the project by including all source roots found under `src/*/*`.
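
To make the `src/*/*` pattern concrete, here is a small Python sketch that lists the directories such a pattern would match in a project folder. It only illustrates the glob; the plugin itself is not written in Python, and `find_source_roots` is a hypothetical helper name.

```python
from pathlib import Path
from typing import List


def find_source_roots(project_dir: Path) -> List[Path]:
    """List directories matching src/*/* under project_dir, sorted for stable output."""
    return sorted(p for p in project_dir.glob("src/*/*") if p.is_dir())
```

For a project containing `src/main/java` and `src/test/scala`, both directories would be returned, while plain files directly under `src/main` would be skipped.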

Many engine sources are written in Scala. IGV doesn't have any support for Scala by default. This PR adds syntax coloring and debugging support for our `.scala` files.