This option prints a final test report for each project at the end of `sbt> run`. That way, when running the task in aggregate mode, we have a summary at the end, rather than somewhere in the large output of the individual subprojects.

Updated the SQLite, PostgreSQL and Redshift drivers.

# Important Notes

Updated the API for Redshift and confirmed that it can connect without the ini file workaround.

This PR merges the existing variants of `LiteralNode` (`Integer`, `BigInteger`, `Decimal`, `Text`) into a single `LiteralNode`. It adds a `PatchableLiteralNode` variant (with a non-`final` `value` field) and uses `Node.replace` to modify the AST to be patchable. With this change one can remove the `UnwindHelper` workaround, as `IdExecutionInstrument` now sees _patched_ return values without any tricks.
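
The replace-then-patch idea can be sketched in plain Java. This is only an illustration: `Expr`, `Literal`, `PatchableLiteral` and `Block` are simplified stand-ins invented for this example, not the actual Truffle or Enso classes, and `replace` only mimics the spirit of Truffle's `Node.replace`.

```java
// Simplified stand-ins for AST nodes; every name here is hypothetical.
abstract class Expr {
  Block parent;
  int slot;

  abstract Object execute();

  // Swap this node for another one in the parent's child slot.
  <T extends Expr> T replace(T other) {
    parent.set(slot, other);
    return other;
  }
}

class Literal extends Expr {
  private final Object value; // immutable: the common case

  Literal(Object value) { this.value = value; }

  Object execute() { return value; }
}

class PatchableLiteral extends Expr {
  private Object value; // deliberately not final, so it can be patched

  PatchableLiteral(Object value) { this.value = value; }

  void patch(Object newValue) { this.value = newValue; }

  Object execute() { return value; }
}

class Block extends Expr {
  private final Expr[] children;

  Block(Expr... children) {
    this.children = children;
    for (int i = 0; i < children.length; i++) set(i, children[i]);
  }

  void set(int i, Expr e) { children[i] = e; e.parent = this; e.slot = i; }

  Expr child(int i) { return children[i]; }

  // Executes all children and returns the value of the last one.
  Object execute() {
    Object last = null;
    for (Expr c : children) last = c.execute();
    return last;
  }
}
```

Because the patched value is returned by the node itself, anything observing the node's return value sees the patched result without extra machinery.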

This change makes sure that the Runtime configuration of `runtime` is listed as a dependency of `runtime-with-instruments`. That way `buildEngineDistribution`, which indirectly depends on `runtime-with-instruments`/assembly, triggers compilation for std-bits, if necessary.

# Important Notes

Minor adjustments for a problem introduced in https://github.com/enso-org/enso/pull/3509

New plan to [fix the `sbt` build](https://www.pivotaltracker.com/n/projects/2539304/stories/182209126) and its annoying:

```
log.error(
  "Truffle Instrumentation is not up to date, " +
  "which will lead to runtime errors\n" +
  "Fixes have been applied to ensure consistent Instrumentation state, " +
  "but compilation has to be triggered again.\n" +
  "Please re-run the previous command.\n" +
  "(If this for some reason fails, " +
  s"please do a clean build of the $projectName project)"
)
```

Since it is hard to fix `sbt` incremental compilation, let's restructure our project sources so that each `@TruffleInstrument` and `@TruffleLanguage` registration is in its own compilation unit. Each such unit is either compiled or not compiled as a batch. That will eliminate the `sbt` incremental compilation issues without addressing them in `sbt` itself.

fa2cf6a33ec4a5b2e3370e1b22c2b5f712286a75 is the first step: it introduces `IdExecutionService` and moves all of the `IdExecutionInstrument` API up to that interface. The rest of the `runtime` project then depends only on `IdExecutionService`. This refactoring allows us to move `IdExecutionInstrument` out of the `runtime` project into an independent compilation unit.
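
The shape of such a refactoring might look like the sketch below. All names and signatures (`ExecutionTracingService`, `bind`, `RecordingTracingInstrument`, `CoreRuntime`) are hypothetical and chosen only for illustration; they are not the real `IdExecutionService` API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical interface that the core module compiles against.
interface ExecutionTracingService {
  // Registers a callback invoked with the id of each executed expression.
  AutoCloseable bind(Consumer<String> onExecuted);
}

// Hypothetical implementation; it would live in its own compilation unit,
// next to the instrument registration.
class RecordingTracingInstrument implements ExecutionTracingService {
  private final List<Consumer<String>> listeners = new ArrayList<>();

  @Override
  public AutoCloseable bind(Consumer<String> onExecuted) {
    listeners.add(onExecuted);
    return () -> listeners.remove(onExecuted);
  }

  // Notifies a snapshot of the listeners, so unbinding during a callback is safe.
  void fire(String id) {
    List.copyOf(listeners).forEach(l -> l.accept(id));
  }
}

// Core code sees only the interface, never the instrument class.
class CoreRuntime {
  private final ExecutionTracingService service;
  final List<String> seen = new ArrayList<>();

  CoreRuntime(ExecutionTracingService service) { this.service = service; }

  AutoCloseable startTracing() { return service.bind(seen::add); }
}
```

With this split, recompiling the instrument does not touch the core sources, and the core sources never reference the instrument class directly.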

Auto-generate all builtin methods for builtin `File` type from method signatures.

Similarly, for `ManagedResource` and `Warning`.

Additionally, support for specializations for overloaded and non-overloaded methods is added.

Coverage can be tracked by the number of hard-coded builtin classes that are now deleted.

## Important notes

Notice how `type File` now lacks the `prim_file` field and we were able to get rid of all of those propagating method calls without writing a single builtin node class.

Similarly `ManagedResource` and `Warning` are now builtins and `Prim_Warnings` stub is now gone.

Drop the `Core` implementation (replacement for IR), as it (sadly) looks increasingly unlikely that this effort will be continued. It also relies heavily on implicits, which adds to compilation time (~1 sec from `clean`).

Related to https://www.pivotaltracker.com/story/show/182359029

This change introduces a custom LogManager for console that allows for

excluding certain log messages. The primary reason for introducing

such LogManager/Appender is to stop issuing hundreds of pointless

warnings coming from the analyzing compiler (wrapper around javac) for

classes that are being generated by annotation processors.

The output looks like this:

```
[info] Cannot install GraalVM MBean due to Failed to load org.graalvm.nativebridge.jni.JNIExceptionWrapperEntryPoints
[info] compiling 129 Scala sources and 395 Java sources to /home/hubert/work/repos/enso/enso/engine/runtime/target/scala-2.13/classes ...
[warn] Unexpected javac output: warning: File for type 'org.enso.interpreter.runtime.type.ConstantsGen' created in the last round will not be subject to annotation processing.
[warn] 1 warning.
[info] [Use -Dgraal.LogFile=<path> to redirect Graal log output to a file.]
[info] Cannot install GraalVM MBean due to Failed to load org.graalvm.nativebridge.jni.JNIExceptionWrapperEntryPoints
[info] foojavac Filer
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.number.decimal.CeilMethodGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.resource.TakeNodeGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.error.ThrowErrorMethodGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.number.smallInteger.MultiplyMethodGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.warning.GetWarningsNodeGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.number.smallInteger.BitAndMethodGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.error.ErrorToTextNodeGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.warning.GetValueMethodGen
[warn] Could not determine source for class org.enso.interpreter.runtime.callable.atom.AtomGen$MethodDispatchLibraryExports$Cached
....
```

The output now has over 500 of those and there will be more. Many more (generated by our own and Truffle's processors).

There is no way to tell SBT that those are OK. One could potentially

think of splitting compilation into 3 stages (Java processors, Java and

Scala) but that will already complicate the non-trivial build definition

and we may still end up with the initial problem.

This is a fix to make it possible to get reasonable feedback from

compilation without scrolling through multiple screens *every single time*.

Also fixed a spurious warning in javac processor complaining about

creating files in the last round.
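
The filtering idea itself is simple. The sketch below shows it with `java.util.logging` rather than with the logging stack that sbt uses, so it is an analogue of the approach, not the code from this change. The class name `ExcludingFilter` is made up for the example.

```java
import java.util.List;
import java.util.logging.Filter;
import java.util.logging.Level;
import java.util.logging.LogRecord;

// Drops every record whose message starts with one of the excluded prefixes.
class ExcludingFilter implements Filter {
  private final List<String> excludedPrefixes;

  ExcludingFilter(List<String> excludedPrefixes) {
    this.excludedPrefixes = List.copyOf(excludedPrefixes);
  }

  @Override
  public boolean isLoggable(LogRecord record) {
    String message = record.getMessage();
    if (message == null) {
      return true; // nothing to match against, let it through
    }
    for (String prefix : excludedPrefixes) {
      if (message.startsWith(prefix)) {
        return false; // known noise, suppress it
      }
    }
    return true;
  }
}
```

Such a filter could be attached with `handler.setFilter(new ExcludingFilter(List.of("Could not determine source for class")))`, which leaves all other warnings visible.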

Related to https://www.pivotaltracker.com/story/show/182138198

`interpreter-dsl` should only attempt to run explicitly specified

processors. That way, even if the generated

`META-INF/services/javax.annotation.processing.Processor` is present,

it does not attempt to apply those processors on itself.

This change makes errors related to

```
[warn] Unexpected javac output: error: Bad service configuration file, or
exception thrown while constructing Processor object:
javax.annotation.processing.Processor: Provider org.enso.interpreter.dsl....
```

a thing of the past. This was super annoying when switching branches and required either cleaning the project or removing the file by hand.

Related to https://www.pivotaltracker.com/story/show/182297597

@radeusgd discovered that no formatting was being applied to std-bits projects.

This was caused by the fact that `enso` project didn't aggregate them. Compilation and

packaging still worked because one relied on the output of some tasks but

```

sbt> javafmtAll

```

didn't apply it to `std-bits`.

# Important Notes

Apart from `build.sbt` no manual changes were made.

This is the 2nd part of DSL improvements that allow us to generate a lot of

builtins-related boilerplate code.

- [x] generate multiple method nodes for methods/constructors with varargs

- [x] expanded processing to allow for `@Builtin` to be added to classes and generate `@BuiltinType` classes

- [x] generate code that wraps exceptions to panic via the `wrapException` annotation element (see `@Builtin.WrapException`)

Also rewrote @Builtin annotations to be more structured and introduced some nesting, such as

@Builtin.Method or @Builtin.WrapException.

This is part of incremental work and a follow up on https://github.com/enso-org/enso/pull/3444.

# Important Notes

Notice the number of boilerplate classes removed to see the impact.

For now only applied to `Array` but should be applicable to other types.

Before, when running Enso with `-ea`, some assertions were broken and the interpreter would not start.

This PR fixes two very minor bugs that were the cause of this - now we can successfully run Enso with `-ea`, to test that any assertions in Truffle or in our own libraries are indeed satisfied.
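
As a reminder of how `-ea` behaves, here is a small self-contained Java sketch (the class name `AssertionCheck` is made up for this example) that detects whether assertions are active in the current JVM:

```java
class AssertionCheck {
  // Returns true only when assertions are enabled for this class (e.g. via -ea).
  static boolean assertionsEnabled() {
    boolean enabled = false;
    // The assignment is evaluated only when assertions are switched on.
    assert enabled = true;
    return enabled;
  }

  public static void main(String[] args) {
    System.out.println("assertions enabled: " + assertionsEnabled());
  }
}
```

Running it with `java -ea AssertionCheck` and without `-ea` shows the difference; without the flag, `assert` statements are skipped entirely, which is why broken assertions can go unnoticed.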

Additionally, this PR adds a setting to SBT that ensures that IntelliJ uses the right language level (Java 17) for our projects.

A low-hanging fruit where we can automate the generation of many

@BuiltinMethod nodes simply from the runtime's method signatures.

This change introduces another annotation, @Builtin, to distinguish from

@BuiltinType and @BuiltinMethod processing. @Builtin processing will

always be the first stage of processing and its output will be fed to

the latter.

Note that the return type of Array.length() is changed from `int` to

`long` because we probably don't want to add a ton of specializations

for the former (see comparator nodes for details) and it is fine to cast

it in a small number of places.
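
The trade-off can be illustrated with a minimal Java sketch. `ArrayLike` is a hypothetical stand-in, not the runtime's `Array` class: the length is reported as `long`, and the narrowing cast is confined to the one place that indexes the backing array.

```java
class ArrayLike {
  private final Object[] items;

  ArrayLike(Object... items) { this.items = items; }

  // Reported as long, so callers deal with a single integral type.
  long length() { return items.length; }

  // Narrow back to int only where the backing array is indexed;
  // Math.toIntExact throws ArithmeticException instead of silently truncating.
  Object at(long index) { return items[Math.toIntExact(index)]; }
}
```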

Progress is visible in the number of deleted hardcoded classes.

This is an incremental step towards #181499077.

# Important Notes

This process does not attempt to cover all cases. Not yet, at least.

We only handle simple methods and constructors (see removed `Array` boilerplate methods).

Auxiliary sbt commands for building individual

stdlib packages.

The commands check that the engine distribution was built at least once, and copy only the package files that actually need updating.

So far added:

- `buildStdLibBase`

- `buildStdLibDatabase`

- `buildStdLibTable`

- `buildStdLibImage`

- `buildStdLibGoogle_Api`
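
The "check first, copy only what is needed" logic can be sketched in Java as follows. This is not the sbt implementation; `StdLibSync`, `syncIfNeeded` and the up-to-date check based on modification times are assumptions made for illustration.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.stream.Stream;

class StdLibSync {
  // Copies files from src to dst when they are missing or older in dst.
  // Returns the number of files copied. Fails if the distribution is absent.
  static int syncIfNeeded(Path distributionRoot, Path src, Path dst) throws IOException {
    if (!Files.isDirectory(distributionRoot)) {
      throw new IllegalStateException("Engine distribution not built yet: " + distributionRoot);
    }
    int copied = 0;
    try (Stream<Path> walk = Files.walk(src)) {
      for (Path source : (Iterable<Path>) walk::iterator) {
        Path target = dst.resolve(src.relativize(source).toString());
        if (Files.isDirectory(source)) {
          Files.createDirectories(target);
          continue;
        }
        boolean stale = Files.notExists(target)
            || Files.getLastModifiedTime(source).compareTo(Files.getLastModifiedTime(target)) > 0;
        if (stale) {
          Files.createDirectories(target.getParent());
          // COPY_ATTRIBUTES keeps the timestamp, so the next run sees the file as fresh.
          Files.copy(source, target,
              StandardCopyOption.REPLACE_EXISTING, StandardCopyOption.COPY_ATTRIBUTES);
          copied++;
        }
      }
    }
    return copied;
  }
}
```

A second invocation with unchanged sources copies nothing, which is the property that makes rebuilding a single stdlib package cheap.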

Related to [#182014385](https://www.pivotaltracker.com/story/show/182014385)

In order to analyse why the `runner.jar` is slow to start, let's _"self sample"_ it using the [sampler library](https://bits.netbeans.org/dev/javadoc/org-netbeans-modules-sampler/org/netbeans/modules/sampler/Sampler.html). As soon as the `Main.main` is launched, the sampling starts and once the server is up, it writes its data into `/tmp/language-server.npss`.

Open `/tmp/language-server.npss` with [VisualVM](https://visualvm.github.io). A copy should already be available as `bin/jvisualvm` in your GraalVM installation, and a GraalVM is needed to run Enso anyway.

#### Changelog

- add: the `MethodsSampler` that gathers information in `.npss` format

- add: `--profiling` flag that enables the sampler

- add: language server processes the updates in batches

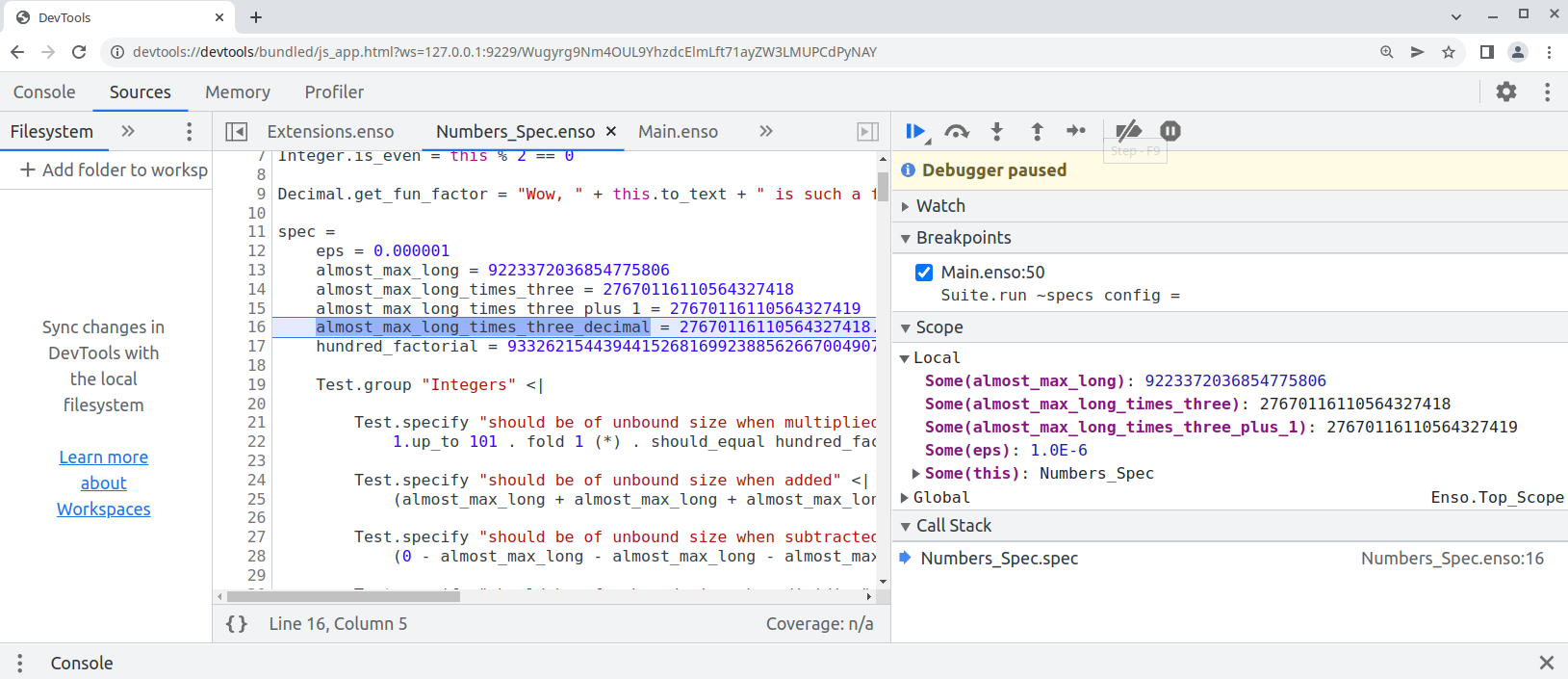

Finally, this pull request proposes an `--inspect` option to allow [debugging of `.enso`](e948f2535f/docs/debugger/README.md) in Chrome Developer Tools:

```bash
enso$ ./built-distribution/enso-engine-0.0.0-dev-linux-amd64/enso-0.0.0-dev/bin/enso --inspect --run ./test/Tests/src/Data/Numbers_Spec.enso
Debugger listening on ws://127.0.0.1:9229/Wugyrg9Nm4OUL9YhzdcElmLft71ayZW3LMUPCdPyNAY
For help, see: https://www.graalvm.org/tools/chrome-debugger
E.g. in Chrome open: devtools://devtools/bundled/js_app.html?ws=127.0.0.1:9229/Wugyrg9Nm4OUL9YhzdcElmLft71ayZW3LMUPCdPyNAY
```

Copy the printed URL into the Chrome browser and you should see:

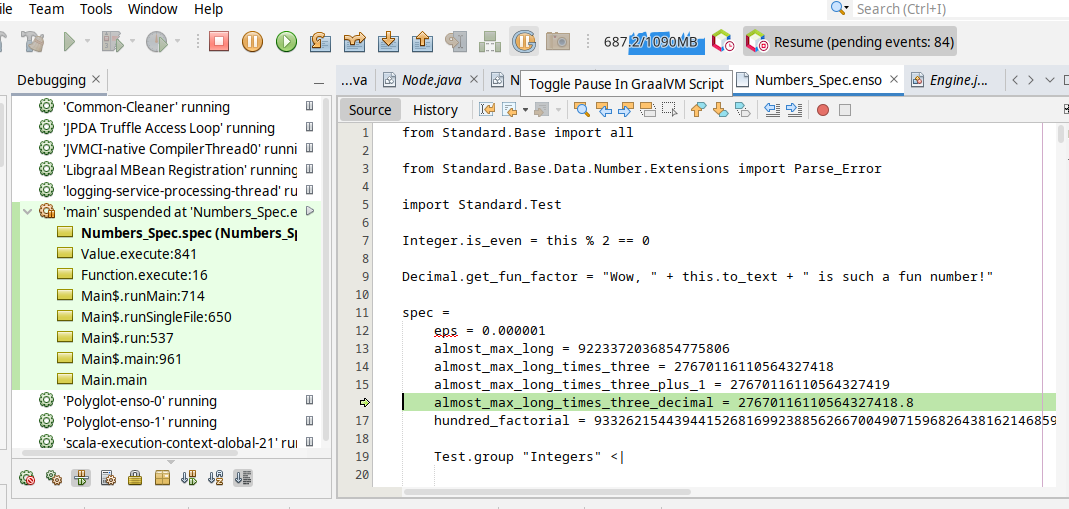

One can also debug the `.enso` files in NetBeans or [VS Code with Apache Language Server extension](https://cwiki.apache.org/confluence/display/NETBEANS/Apache+NetBeans+Extension+for+Visual+Studio+Code); just pass in special JVM arguments:

```bash
enso$ JAVA_OPTS=-agentlib:jdwp=transport=dt_socket,server=y,address=8000 ./built-distribution/enso-engine-0.0.0-dev-linux-amd64/enso-0.0.0-dev/bin/enso --run ./test/Tests/src/Data/Numbers_Spec.enso
Listening for transport dt_socket at address: 8000
```

and then _Debug/Attach Debugger_. Once connected, choose the _Toggle Pause in GraalVM Script_ button in the toolbar (the "G" button) and your execution will stop on the next `.enso` line of code. This mode allows debugging both the Enso code and the Java code.

Originally started as an attempt to write tests in Java:

* test written in Java

* support for JUnit in `build.sbt`

* compile Java with `-g` - so it can be debugged

* Implementation of `StatementNode` - only gets created when `materialize` request gets to `BlockNode`

This PR replaces hard-coded `@Builtin_Method` and `@Builtin_Type` nodes in Builtins with an automated solution that a) collects metadata from such annotations, b) generates `BuiltinTypes`, and c) registers builtin methods with the corresponding constructors.

The main differences are:

1) The owner of the builtin method does not necessarily have to be a builtin type

2) You can now mix regular methods and builtin ones in stdlib

3) No need to keep track of builtin methods and types in various places and register them by hand (a source of many typos and omissions, as was found while working on this PR)
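
The collect-then-register flow can be sketched with runtime reflection. Note that this is a simplification: the PR uses compile-time annotation processors that write metadata files, whereas the sketch reads annotations at run time. `BuiltinMethodInfo`, `ArrayLengthNode` and `BuiltinRegistry` are hypothetical names used only here.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical annotation carrying the metadata needed for registration.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface BuiltinMethodInfo {
  String type();
  String name();
}

// Hypothetical builtin node; the annotation is the single source of truth.
@BuiltinMethodInfo(type = "Array", name = "length")
class ArrayLengthNode {
  long execute(Object[] self) { return self.length; }
}

class BuiltinRegistry {
  private final Map<String, Class<?>> methods = new HashMap<>();

  // Registers node classes under "Type.name" keys taken from their annotations.
  void register(Class<?>... nodeClasses) {
    for (Class<?> nodeClass : nodeClasses) {
      BuiltinMethodInfo info = nodeClass.getAnnotation(BuiltinMethodInfo.class);
      if (info == null) {
        throw new IllegalArgumentException("Not a builtin node: " + nodeClass.getName());
      }
      methods.put(info.type() + "." + info.name(), nodeClass);
    }
  }

  // Looks up a previously registered builtin; empty when none is known.
  Optional<Class<?>> lookup(String type, String name) {
    return Optional.ofNullable(methods.get(type + "." + name));
  }
}
```

Because the key is derived from the annotation, there is no separate hand-maintained list that can drift out of sync with the node classes.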

Related to #181497846

Benchmarks also execute within the margin of error.

### Important Notes

The PR got a bit large over time as I was moving various builtin types and finding various corner cases.

Most of the changes however are rather simple c&p from Builtins.enso to the corresponding stdlib module.

Here is the list of the most crucial updates:

- `engine/runtime/src/main/java/org/enso/interpreter/runtime/builtin/Builtins.java` - the core of the changes. We no longer register individual builtin constructors and their methods by hand. Instead, the information about those is read from 2 metadata files generated by annotation processors. When a builtin method is encountered in stdlib, we do not ignore the method. Instead we look it up in the list of registered functions (see `getBuiltinFunction` and `IrToTruffle`)

- `engine/runtime/src/main/java/org/enso/interpreter/runtime/callable/atom/AtomConstructor.java` now records whether it corresponds to a builtin type or not.

- `engine/runtime/src/main/scala/org/enso/compiler/codegen/RuntimeStubsGenerator.scala` - when runtime stubs generator encounters a builtin type, based on the @Builtin_Type annotation, it looks up an existing constructor for it and registers it in the provided scope, rather than creating a new one. The scope of the constructor is also changed to the one coming from stdlib, while ensuring that synthetic methods (for fields) also get assigned correctly

- `engine/runtime/src/main/scala/org/enso/compiler/codegen/IrToTruffle.scala` - when a builtin method is encountered in stdlib we don't generate a new function node for it, instead we look it up in the list of registered builtin methods. Note that Integer and Number present a bit of a challenge because they list a whole bunch of methods that don't have a corresponding method (instead delegating to small/big integer implementations).

During the translation new atom constructors get initialized but we don't want to do it for builtins which have gone through the process earlier, hence the exception

- `lib/scala/interpreter-dsl/src/main/java/org/enso/interpreter/dsl/MethodProcessor.java` - @Builtin_Method processor not only generates the actual code for nodes but also collects and writes the info about them (name, class, params) to a metadata file that is read during builtins initialization

- `lib/scala/interpreter-dsl/src/main/java/org/enso/interpreter/dsl/MethodProcessor.java` - @Builtin_Method processor no longer generates only (root) nodes but also collects and writes the info about them (name, class, params) to a metadata file that is read during builtins initialization

- `lib/scala/interpreter-dsl/src/main/java/org/enso/interpreter/dsl/TypeProcessor.java` - Similar to MethodProcessor but handles @Builtin_Type annotations. It doesn't, **yet**, generate any builtin objects. It also collects the names, as present in stdlib, if any, so that we can generate the names automatically (see generated `types/ConstantsGen.java`)

- `engine/runtime/src/main/java/org/enso/interpreter/node/expression/builtin` - various classes annotated with @BuiltinType to ensure that the atom constructor is always properly registered for the builtin. Note that in order to support type fields in those, the annotation takes an optional `params` parameter (comma separated).

- `engine/runtime/src/bench/scala/org/enso/interpreter/bench/fixtures/semantic/AtomFixtures.scala` - drop manual creation of test list which seemed to be a relic of the old design

* Initial integration with Frgaal in sbt

Half-working since it chokes on generated classes from annotation

processor.

* Replace AutoService with ServiceProvider

For reasons unknown AutoService would fail to initialize and fail to

generate required builtin method classes.

Hidden error message is not particularly revealing on the reason for

that:

```

[error] error: Bad service configuration file, or exception thrown while constructing Processor object: javax.annotation.processing.Processor: Provider com.google.auto.service.processor.AutoServiceProcessor could not be instantiated

```

The sample record is only there to demonstrate that we can now use newer Java features.

* Cleanup + fix benchmark compilation

Bench requires jmh classes which are not available because we obviously

had to limit `java.base` modules to get Frgaal to work nicely.

For now, we default to good ol' javac for Benchmarks.

Limiting Frgaal to runtime for now, if it plays nicely, we can expand it

to other projects.

* Update CHANGELOG

* Remove dummy record class

* Update licenses

* New line

* PR review

* Update legal review

Co-authored-by: Radosław Waśko <radoslaw.wasko@enso.org>

- Add parser & handler in IDE for `executionContext/visualisationEvaluationFailed` message from Engine (fixes a developer console error "Failed to decode a notification: unknown variant `executionContext/visualisationEvaluationFailed`"). The contents of the error message will now be properly deserialized and printed to Dev Console with appropriate details.

- Fix a bug in an Enso code snippet used internally by the IDE for preprocessing error visualizations. The snippet was using double-quote escaping in double-quote delimited strings, which is not currently supported. This lack of processing is actually a bug in the Engine, and it was reported to the Engine team, but changing the strings to single-quoted also makes the snippet more readable, so it sounds like a win anyway.

- A test is also added to the Engine CI, verifying that the snippet compiles & works correctly, to protect against similar regressions in the future.

Related: #2815