The publish, deploy, and release process is annoying enough at the moment that I avoid doing it frequently. Let's automate most of it to reduce the friction

Follow up on this PR #435.

Currently, it took roughly 22 seconds to load my 1.3GB file. After inspecting the profiler, there's a large chunk of time spending in Frame.getOrInsert. I figure we can reduce the number of invocations by half. It reduces the load time to roughly 18 seconds.I also tested with a smaller file (~350MB), and it show similar gains, about 15-20%

Currently, importing files generated by linux perf tool whose some blocks exceed V8 strings limit can crash the application. This issue is similar to the one in #385.

This PR fixes it by changing parseEvents to work directly with lines instead of chunking lines into blocks first.

Fixes#433

The current behavior of splitLines is to eagerly split all the lines and return an array of strings.

This PR improves this by returning an iterator instead, which will emit lines. This lets callers decide how to best use the splitLines function (i.e. lazily enumerate over lines)

Relates to #433

The previous behavior was to use the StartLine of a function as the line number to show in speedscope. However, the Line object has more precise line information, and we should only fallback to StartLine if we don't have this more detailed information.

Looking at the [documentation for the pprof proto](https://github.com/google/pprof/tree/main/proto#general-structure-of-a-profile), this is more how it intends to interpret line information:

> location: A unique place in the program, commonly mapped to a single instruction address. It has a unique nonzero id, to be referenced from the samples. It contains source information in the form of lines, and a mapping id that points to a binary.

> function: A program function as defined in the program source. It has a unique nonzero id, referenced from the location lines. It contains a human-readable name for the function (eg a C++ demangled name), a system name (eg a C++ mangled name), the name of the corresponding source file, and other function attributes.

Here is a sample profile that had line-level info on the Line object of the profile:

Before:

<img width="449" alt="Screen Shot 2022-11-03 at 11 17 11 AM" src="https://user-images.githubusercontent.com/618615/199760730-712daa70-6cfb-4e90-b037-b571809c26d9.png">

After:

<img width="449" alt="Screen Shot 2022-11-03 at 11 17 22 AM" src="https://user-images.githubusercontent.com/618615/199760777-1c0d5581-7b29-42b7-b642-6035f7d25405.png">

This attempts to improve the quality of the on-CPU profiles stackprof provides. Rather than weighing samples by their timestamp deltas, which, in our opinion, are only valid in wall-clock mode, this weighs callchains by:

```

S = number of samples

P = sample period in nanoseconds

W = S * P

```

The difference after this change is quite substantial, specially in profiles that previously were showing up with heavy IO frames:

* Total profile weight is almost down by 90%, which actually makes sense for an on-CPU profile if the app is relatively idle

* Certain callchains that blocked in syscalls / IO are now much lower weight. This was what I was expecting to find.

Here is an example of the latter point.

In delta mode, we see an io select taking a long time, it is a significant portion of the profile:

<img width="1100" alt="236936508-709bee01-d616-4246-ba74-ab004331dcd3" src="https://github.com/dalehamel/speedscope/assets/4398256/39140f1e-50a9-4f33-8a61-ec98b6273fd4">

But in period scaling mode, it is only a couple of sample periods ultimately:

<img width="206" alt="236936693-9d44304e-a1c2-4906-b3c8-50e19e6f9f27" src="https://github.com/dalehamel/speedscope/assets/4398256/7d19077f-ef25-4d79-980b-cfa1775d928d">

Sometimes, stackprof frames don't get generated with a `name` in the frame.

I think it's probably worth tracking down why that is, but in the mean

time, speedscope simply crashes with a method call on `undefined`. The

crash is bad because it only shows up in the console--there's no visible

message saying that speedscope failed to parse and load a profile.

For more information, see #378

This fixes the crash by simply skipping the logic in demangle if the name

field isn't present on a frame. That's probably a fine tradeoff? Because in

this case, stackprof is generating ruby frames, which means that C++ name

demangling won't apply.

I have tested this by running the scripts/prepare-test-installation.sh

script and verifying that `bin/cli.js` can now successfully load the

included profile. Before these changes, I verified that speedscope failed

with the behavior mentioned in #378.

I've also included a snapshot test case, but it seems that the Jest test

harness only tests the parsing, not the rendering (correct me if I'm wrong).

So I haven't actually been able to create an automated test that would catch

a regression. Please let me know if there's a better way to have written this

test.

I've staged the commits on this branch so that the second commit (dcb9840)

showcases the minimal diff to a stackprof file that reproduces the bug. That is,

rather than look at the thousands of new lines in the stackprof profile, you can

view the second commit to see the salient part of the file.

Fixes#415

* Interprets pprof's defaultSampleType as an index into the string table [as documented in the proto](0414c2f617/src/import/profile.proto (L85)), not as an index into the sample types repeated-field. This allows parsing to succeed when the string-table index is not a valid sampleTypes index, which is common on allocation profiles.

* Update the pprof snapshot. This is necessarily because we were previously interpreting an empty defaultSampleType as truthy-but-zero when long.js is present, ie: the first sample-type. But Speedscope-in-the-browser doesn't seem to include long.js, so our tests were disagreeing with in-browser behavior. With this PR, that should be fixed.

This fixes a number of bugs with callgrind import. Dealing with this file format is a big pain because the documentation on https://www.valgrind.org/docs/manual/cl-format.html doesn't contain enough examples to disambiguate some of the behaviour, and because there's a fundamental impedance mismatch between call-trees and call-graphs.

In any case, after this PR, the behavior of callgrind file import is much better.

The file provided in #414 now imports correctly and, as far as I can tell, displays the same weights as what I see in KCacheGrind.

Some of the key changes:

- Implementing subposition compression. This was just a TODO in the code that was never implemented

- Fixing a misinterpretation of how `fe` and `fi` were intended to be used. Previously, I was using it to change the filename of a symbol, meaning that an `fi` or an `fe` line in the middle of a block describing costs for an `fn` would split a node in the call-graph into multiple nodes causing all manners of problems

- Fixing a bug where `cfn` was persisting beyond a single call, also resulting in call graph nodes being split when they shouldn't be

Fixes#414

The file format uses by Chrome Devtools performance tab periodically changes. It uses the Chrome trace event format (https://docs.google.com/document/d/1CvAClvFfyA5R-PhYUmn5OOQtYMH4h6I0nSsKchNAySU/preview).

This format, however, has two different types: one is `TraceEvent[]`, the other is `{traceEvents: traceEvent[]}`. The importer for non-Chrome devtools profiles already handled this, but the one for Chrome Devtools didn't because Chrome < 114 never used it. It seems like they changed the file format. This PR addresses that change.

Fixes#420

In #385, I introduced a dependency on the `uint8array-json-parser` npm package, but used a fork because of a typescript error. This was resolved in evanw/uint8array-json-parser#1 and published as part of `uint8array-json-parser@0.0.2`. Let's use the upstream.

This also conveniently fixes a new typechecking error that was preventing deployment. The error looked like this:

```

src/import/utils.ts(2,26): error TS2306: File '\''/Users/jlfwong/code/speedscope/node_modules/uint8array-json-parser/uint8array-json-parser.ts'\'' is not a module.'

```

After updating to the upstream, the problem is fixed.

In #297, I re-used the fuzzy matching logic I implemented in #282 for profile selection. Based on feedback from several people in #352, this is surprising behavior.

Upon reflection, this should have been obvious -- I hijacked the Ctrl/Cmd+F browser behaviour, so I should try to replicate the expected behaviour there as closely as possible. Given more patience, I also would've done some user research :)

This PR updates this logic to try to more closely match browser behaviour. This means case-insensitive, exact-substring matching.

I've left the fuzzy matching alone for profile selection since that doesn't attempt to mimic browser behaviour.

The non-fuzzy matching feels slightly odd to me given the filtering behaviour on the sandwich view, but I think consistency across this find UI is important.

Here are the before & after results when searching for the string "ca" in the example profile.

|Before|After|

|-|-|

|<img width="1791" alt="image" src="https://user-images.githubusercontent.com/150329/197232741-6d1d7a8a-8b8c-4a4f-98e3-2c043fd7efd5.png">|<img width="1789" alt="image" src="https://user-images.githubusercontent.com/150329/197232694-82697b68-ca15-49e7-887b-2606646ee5e9.png">|

Fixes#352

Supersedes #403

Unlike the Time Profiler, the CPU Profiler in Instruments use `cycles` for units instead of `ms`:

<img width="872" src="https://user-images.githubusercontent.com/406394/175755999-289cb7c0-f29a-44b1-b00e-b55ef17ee303.png">

Currently Speedscope fails to import the data with the following error in the console:

```

Failed to load format Error: Unrecognized units Gc

```

This PR adds support for `cycles` as a unit to the Instruments deep copy importer as well as `Kc`, `Mc`, and `Gc`, which I'm assuming are increasing in multiples of 1000. Hopefully I've added support for this correctly and this PR is helpful.

This changes the HoverTip placement logic to use measurements from the actual DOM node rather than basing everything on the maximum sizes.

This avoids some counter-intuitive behaviour, most importantly situations where the label would overflow off the left side of the screen for no obvious reason.

Fixes#394Fixes#256

This PR attempts to support stackprof's object mode which tracks the number of allocated objects. This differs from the other modes (cpu and wall) by taking samples every time a Ruby object is allocated using Ruby's [`NEWOBJ` tracepoint](df24b85953/ext/stackprof/stackprof.c (L198-L199)).

When importing an object mode profile into speedscope today it still works but what you see is a profile using time units. The profile will only have samples for when an object was allocated which means even if time is reported, the profile is not really meaningful when looking at time.

To address this I've done three things when `mode` is `object`:

+ adjusted the total size of the `StackListProfileBuilder` to use the number of samples (since each sample is one allocation)

+ adjusted the weight of each sample to be `nSamples` (which I believe is always `1` but I'm not positive)

+ do not set the value formatter to a time formatter

Here's what it looks like before and after my changes (note the units and weight of samples):

wall (before) | object (before) | object (after)

-- | -- | --

<img width="1624" alt="Screen Shot 2022-05-11 at 4 51 31 PM" src="https://user-images.githubusercontent.com/898172/167945635-2401ca73-4de7-4559-b884-cf8947ca9738.png"> | <img width="1624" alt="Screen Shot 2022-05-11 at 4 51 34 PM" src="https://user-images.githubusercontent.com/898172/167945641-ef302a60-730b-4afd-8e44-5f02e54b3cb7.png"> | <img width="1624" alt="Screen Shot 2022-05-11 at 4 51 42 PM" src="https://user-images.githubusercontent.com/898172/167945643-5611b267-f8b2-4227-a2bf-7145c4030aa2.png">

<details>

<summary>Test code</summary>

```ruby

require 'stackprof'

require 'json'

def do_test

5.times do

make_a_word

end

end

def make_a_word

('a'..'z').to_a.shuffle.map(&:upcase).join

end

StackProf.start(mode: :object, interval: 1, raw: true)

do_test

StackProf.stop

File.write('tmp/object_profile.json', JSON.generate(StackProf.results))

StackProf.start(mode: :wall, interval: 1, raw: true)

do_test

StackProf.stop

File.write('tmp/wall_profile.json', JSON.generate(StackProf.results))

```

</details>

Fixes#312

This turns out not to be very deep: you have to pass an optional second parameter to the [`URL` constructor](https://developer.mozilla.org/en-US/docs/Web/API/URL/URL) to resolve relative URLs.

```

> new URL('/path/to/file#hashcode').pathnaem

VM252:1 Uncaught TypeError: Failed to construct 'URL': Invalid URL

at <anonymous>:1:1

(anonymous) @ VM252:1

> new URL('/path/to/file#hashcode', 'http://example.com/').pathname

"/path/to/file"

```

Browsers have a limit on how big you can make strings. In Chrome on a 64 bit machine, this is around 512MB, which explains why in #340, a 600MB file fails to load.

To work around this issue, we avoid making strings this large.

To do so, we need two core changes:

1. Instead of sending large strings as the import mechanism to different file format importers, we introduce a new `TextFileContent` interface which exposes methods to get the lines in the file or the JSON representation. In the case of line splitting, we assume that no single line exceeds the 512MB limit.

2. We introduce a dependency on https://github.com/evanw/uint8array-json-parser to allow us to parse JSON files contained in `Uint8Array` objects

To ensure that this code doesn't code rot without introducing 600MB test files or test file generation into the repository, we also re-run a small set of tests with a mocked maximum string size of 100 bytes. You can see that the chunked string representation code is getting executed via test coverage.

Fixes#340

Ingest files containing collapsed stacks and tolerate invalid lines,

like FlameGraph does.

Some files might contain lines starting with a # to add comments to the

collected samples. Speedscope should still attempt to parse these files

as collapsed stacks and only keep the samples that it can find. Only

fail if there are no samples reported.

This is an experiment in replacing redux entirely with a tiny library I wrote for global application state management.

Redux has been okay, but all of the redux actions in speedscope are setters, which always made me think there must be a simpler way. This is an attempt to find that simpler way.

See `src/lib/atom.ts` for the library.

Implements import from the [callgrind format](https://www.valgrind.org/docs/manual/cl-format.html).

This comes with a big caveat that the call graph information contained with callgrind formatted files don't uniquely define a flamegraph, so the generated flamegraph is a best-effort guess. Here's the comment from the top of the main file for the callgrind importer with an examplataion:

```

// https://www.valgrind.org/docs/manual/cl-format.html

//

// Larger example files can be found by searching on github:

// https://github.com/search?q=cfn%3D&type=code

//

// Converting callgrind files into flamegraphs is challenging because callgrind

// formatted profiles contain call graphs with weighted nodes and edges, and

// such a weighted call graph does not uniquely define a flamegraph.

//

// Consider a program that looks like this:

//

// // example.js

// function backup(read) {

// if (read) {

// read()

// } else {

// write()

// }

// }

//

// function start() {

// backup(true)

// }

//

// function end() {

// backup(false)

// }

//

// start()

// end()

//

// Profiling this program might result in a profile that looks like the

// following flame graph defined in Brendan Gregg's plaintext format:

//

// start;backup;read 4

// end;backup;write 4

//

// When we convert this execution into a call-graph, we get the following:

//

// +------------------+ +---------------+

// | start (self: 0) | | end (self: 0) |

// +------------------+ +---------------|

// \ /

// (total: 4) \ / (total: 4)

// v v

// +------------------+

// | backup (self: 0) |

// +------------------+

// / \

// (total: 4) / \ (total: 4)

// v v

// +----------------+ +-----------------+

// | read (self: 4) | | write (self: 4) |

// +----------------+ +-----------------+

//

// In the process of the conversion, we've lost information about the ratio of

// time spent in read v.s. write in the start call v.s. the end call. The

// following flame graph would yield the exact same call-graph, and therefore

// the exact sample call-grind formatted profile:

//

// start;backup;read 3

// start;backup;write 1

// end;backup;read 1

// end;backup;write 3

//

// This is unfortunate, since it means we can't produce a flamegraph that isn't

// potentially lying about the what the actual execution behavior was. To

// produce a flamegraph at all from the call graph representation, we have to

// decide how much weight each sub-call should have. Given that we know the

// total weight of each node, we'll make the incorrect assumption that every

// invocation of a function will have the average distribution of costs among

// the sub-function invocations. In the example given, this means we assume that

// every invocation of backup() is assumed to spend half its time in read() and

// half its time in write().

//

// So the flamegraph we'll produce from the given call-graph will actually be:

//

// start;backup;read 2

// start;backup;write 2

// end;backup;read 2

// end;backup;write 2

//

// A particularly bad consequence is that the resulting flamegraph will suggest

// that there was at some point a call stack that looked like

// strat;backup;write, even though that never happened in the real program

// execution.

```

Fixes#18

The trace event format has a very unfortunate combination of requirements in order to give a best-effort interpretation of a given trace file:

1. Events may be recorded out-of-order by timestamp

2. Events with the *same* timestamp should be processed in the order they were provided in the file. Mostly.

The first requirement is written explicitly [in the spec](https://docs.google.com/document/d/1CvAClvFfyA5R-PhYUmn5OOQtYMH4h6I0nSsKchNAySU/preview).

> The events do not have to be in timestamp-sorted order.

The second one isn't explicitly written, but it's implicitly true because otherwise the interpretation of a file is ambiguous. For example, the following file has all events with the same `ts` field, but re-ordering the fields changes the interpretation.

```

[

{ "pid": 0, "tid": 0, "ph": "X", "ts": 0, "dur": 20, "name": "alpha" },

{ "pid": 0, "tid": 0, "ph": "X", "ts": 0, "dur": 20, "name": "beta" }

}

```

If we allowed arbitrary reordering, it would be ambiguous whether the alpha frame should be nested inside of the beta frame or vice versa. Since traces are interpreted as call trees, it's not okay to just arbitrarily choose.

So you might next guess that a reasonable approach would be to do a [stable sort](https://wiki.c2.com/?StableSort) by "ts", then process the events one-by-one. This almost works, except for two additional problems. The first problem is that in some situations this would still yield invalid results.

```

[

{"pid": 0, "tid": 0, "ph": "B", "name": "alpha", "ts": 0},

{"pid": 0, "tid": 0, "ph": "B", "name": "beta", "ts": 0},

{"pid": 0, "tid": 0, "ph": "E", "name": "alpha", "ts": 1},

{"pid": 0, "tid": 0, "ph": "E", "name": "beta", "ts": 1}

]

```

If we were to follow this rule, we would try to execute the `"E"` for alpha before the `"E"` for beta, even though beta is on the top of the stack. So in *that* case, we actually need to execute the `"E"` for beta first, otherwise the resulting profile is incorrect.

The other problem with this approach of using the stable sort order is the question of how to deal with `"X"` events. speedscope translates `"X"` events into a `"B"` and `"E"` event pair. But where should it put the `"E"` event? Your first guess might be "at the index where the `"X"` events occur in the file". This runs into trouble in cases like this:

```

[

{ "pid": 0, "tid": 0, "ph": "X", "ts": 9, "dur": 1, "name": "beta" },

{ "pid": 0, "tid": 0, "ph": "X", "ts": 9, "dur": 2, "name": "gamma" },

]

```

The most natural translation of this would be to convert it into the following `"B"` and `"E"` events:

```

[

{ "pid": 0, "tid": 0, "ph": "B", "ts": 9, "name": "beta" },

{ "pid": 0, "tid": 0, "ph": "E", "ts": 10, "name": "beta" },

{ "pid": 0, "tid": 0, "ph": "B", "ts": 9, "name": "gamma" },

{ "pid": 0, "tid": 0, "ph": "E", "ts": 11, "name": "gamma" },

]

```

Which, after a stable sort turns into this:

```

[

{ "pid": 0, "tid": 0, "ph": "B", "ts": 9, "name": "beta" },

{ "pid": 0, "tid": 0, "ph": "B", "ts": 9, "name": "gamma" },

{ "pid": 0, "tid": 0, "ph": "E", "ts": 10, "name": "beta" },

{ "pid": 0, "tid": 0, "ph": "E", "ts": 11, "name": "gamma" },

]

```

Notice that we again have a problem where we open "beta" before "gamma", but we need to close "beta" first because it ends first!

Ultimately, I couldn't figure out any sort order that would allow me to predict ahead-of-time what order to process the events in. So instead, I create two event queues: one for `"B"` events, and one for `"E"` events, and then try to be clever about how I merge them together.

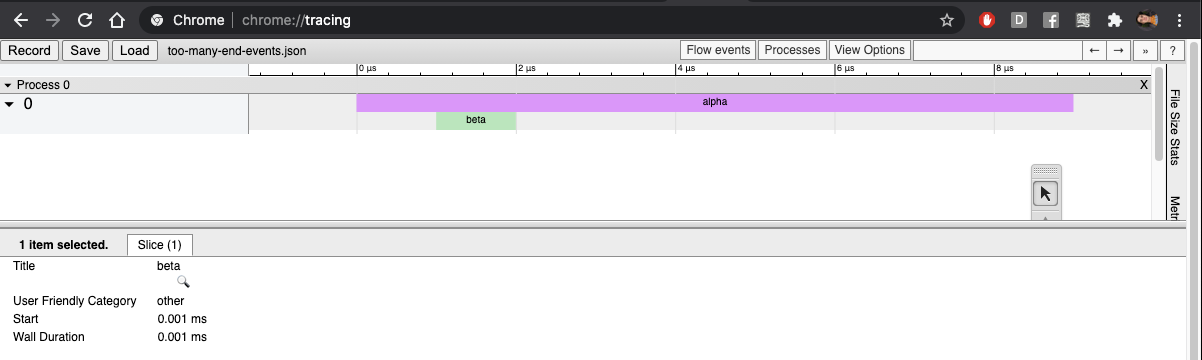

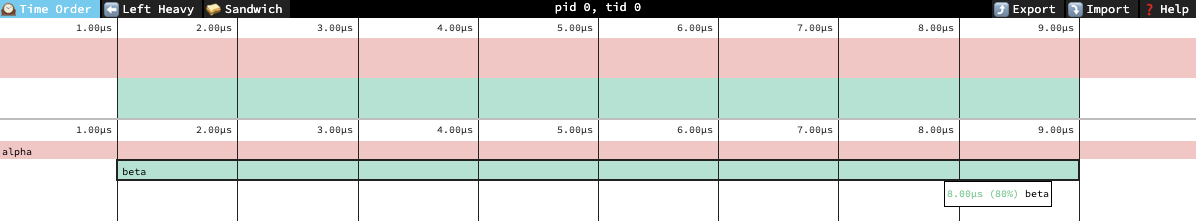

AFAICT, chrome://tracing does not sort events before processing them, which is kind of baffling. But chrome://tracing also has really bizarre behaviour for things like this where the resulting flamegraph isn't even a valid tree (there are overlapping ranges):

```

[

{ "pid": 0, "tid": 0, "ph": "X", "ts": 0, "dur": 10, "name": "alpha" },

{ "pid": 0, "tid": 0, "ph": "X", "ts": 5, "dur": 10, "name": "beta" }

}

```

So I'm going to call this "good enough" for now.

Fixes#223Fixes#320

In #273, I changed `CallTreeProfileBuilder.leaveFrame` to fail hard when you request to leave a frame different from the one at the top of the stack. It turns out we were intentionally doing this for trace event imports, because `args` are part of the frame key, and we want to allow profiles to be imported where the `"B"` and `"E"` events have differing `args` field.

This PR fixes the import code to permissively allow the `"args"` field to not match between the `"B"` and `"E"` fields.

**A note on intentional differences between speedscope and chrome://tracing**

`chrome://tracing` will close whichever frame is at the top when it gets an `"E"` event, regardless of whether the name or the args match. speedscope will ignore the event entirely if the `"name"` field doesn't match, but will warn but still close the frame if the `"name"`s match but the `"args"` don't.

```

[

{"pid": 0, "tid": 0, "ph": "B", "name": "alpha", "ts": 0},

{"pid": 0, "tid": 0, "ph": "B", "name": "beta", "ts": 1},

{"pid": 0, "tid": 0, "ph": "E", "name": "gamma", "ts": 2},

{"pid": 0, "tid": 0, "ph": "E", "name": "beta", "ts": 9},

{"pid": 0, "tid": 0, "ph": "E", "name": "alpha", "ts": 10}

]

```

### speedscope

```

warning: ts=2: Request to end "gamma" when "beta" was on the top of the stack. Doing nothing instead.

```

### chrome://tracing