mirror of

https://github.com/qnikst/ghc-timings-report.git

synced 2024-10-03 23:39:35 +03:00

commit

32ce03a582

178

Main.hs

178

Main.hs

@ -1,146 +1,48 @@

|

||||

{-# LANGUAGE TransformListComp #-}

|

||||

module Main where

|

||||

|

||||

import Options.Applicative

|

||||

|

||||

import qualified Options.Applicative as Options

|

||||

|

||||

import Control.Monad

|

||||

import Control.Monad.Trans.Resource

|

||||

import qualified Data.ByteString.Lazy as BSL

|

||||

import qualified Data.Binary.Builder as Builder

|

||||

import qualified Data.ByteString as B

|

||||

import Data.Conduit

|

||||

import Data.Conduit.Combinators as CL

|

||||

import Data.Conduit.List

|

||||

import Data.Set as Set

|

||||

import Data.Csv as Csv

|

||||

import Data.Csv.Builder as Csv

|

||||

import Data.Foldable

|

||||

import Data.Functor

|

||||

import Data.Function

|

||||

import Data.Traversable

|

||||

import qualified Data.Map as Map

|

||||

import qualified Data.Text.Encoding as T

|

||||

import qualified Data.Text.IO as T

|

||||

import qualified Data.Text as T

|

||||

import Data.Aeson

|

||||

import Data.Either

|

||||

import Data.List

|

||||

import Data.Maybe as M

|

||||

import GhcBuildPhase

|

||||

import GhcFile

|

||||

import GHC.Exts

|

||||

import qualified Data.Vector as V

|

||||

import Report

|

||||

import System.Directory(copyFile, createDirectoryIfMissing)

|

||||

import System.Environment

|

||||

import System.FilePath

|

||||

import TextShow

|

||||

import Text.Blaze (Markup)

|

||||

import Text.Blaze.Renderer.Utf8 (renderMarkupToByteStringIO)

|

||||

import Prelude hiding (mapM_, print)

|

||||

import qualified Prelude

|

||||

import Compare

|

||||

import Generate

|

||||

|

||||

main :: IO ()

|

||||

main = do

|

||||

[dir] <- getArgs

|

||||

createDirectoryIfMissing True "./tmp"

|

||||

|

||||

files <- findDumpTimings dir

|

||||

|

||||

let ( files_failed,

|

||||

files_parsed)

|

||||

= partitionEithers $ files <&> \srcFilePath ->

|

||||

case stripPrefix (splitDirectories dir) (splitDirectories srcFilePath) of

|

||||

Nothing -> Left srcFilePath

|

||||

Just x -> case x of

|

||||

("build" : hostOs : ghcVersion : packageName : componentType : subComponent : "build" : modulePath) ->

|

||||

Right GhcFile{..}

|

||||

("build" : hostOs : ghcVersion : packageName : componentType : subComponent : "noopt" : "build" : modulePath) ->

|

||||

Right GhcFile{..}

|

||||

("build" : hostOs : ghcVersion : packageName : "build" : modulePath) ->

|

||||

let componentType = ""

|

||||

subComponent = ""

|

||||

in Right GhcFile{..}

|

||||

("build" : hostOs : ghcVersion : packageName : "noopt" : "build" : modulePath) ->

|

||||

let componentType = ""

|

||||

subComponent = ""

|

||||

in Right GhcFile{..}

|

||||

("dist": hostOs : _cabalVersion : "build": modulePath) ->

|

||||

-- FIXME: should be retrieved from stack somehow

|

||||

let ghcVersion = "<GHC version>"

|

||||

packageName = "<Package name>"

|

||||

componentType = ""

|

||||

subComponent = ""

|

||||

in Right GhcFile{..}

|

||||

_ -> Left srcFilePath

|

||||

|

||||

unless (Prelude.null files_failed) $ do

|

||||

Prelude.putStrLn "Warning, some files failed to be parsed"

|

||||

Prelude.print files_failed

|

||||

|

||||

|

||||

-- Output all files in json form for later analysis.

|

||||

results <- for files_parsed $ \f -> do

|

||||

steps <- fmap parsePhases $ T.readFile (rebuildFilePath f)

|

||||

encodeFile (output </> rebuildPlainPath f <.> "json") steps

|

||||

let bs = encodeDefaultOrderedByName steps

|

||||

BSL.writeFile (output </> rebuildPlainPath f <.> "csv") bs

|

||||

mkHtmlFile (output </> rebuildPlainPath f <.> "html")

|

||||

$ Report.moduleTable f steps

|

||||

pure (f, steps)

|

||||

|

||||

let stats_by_package = Map.fromListWith (<>)

|

||||

[ (packageName, Map.singleton GhcFile{..} steps)

|

||||

| (GhcFile{..}, steps) <- results

|

||||

]

|

||||

-- FIXME: put this file back later

|

||||

-- encodeFile (output </> "stats_by_package" <.> "json") stats_by_package

|

||||

for_ (Map.toList stats_by_package) $ \(package, stat) -> do

|

||||

let headers = Set.toList $ Set.fromList

|

||||

[ phaseName

|

||||

| (_, steps) <- Map.toList stat

|

||||

, Phase{..} <- steps

|

||||

]

|

||||

let rows = [ ( GhcFile{..}

|

||||

, total

|

||||

, Prelude.map (\n -> Map.lookup n by_phase) headers)

|

||||

| (GhcFile{..}, steps) <- Map.toList stat

|

||||

, let total = Prelude.sum [phaseTime | Phase{..} <- steps]

|

||||

, let by_phase = Map.fromListWith (+)

|

||||

[ (phaseName, phaseTime)

|

||||

| Phase{..} <- steps

|

||||

]

|

||||

, then sortWith by (Down total)

|

||||

]

|

||||

mkHtmlFile ("./tmp/" <> package <> ".html")

|

||||

$ Report.packageTable package headers rows

|

||||

let bs = Csv.encodeHeader (V.fromList ("module": "total": Prelude.map T.encodeUtf8 headers))

|

||||

<> mconcat (Prelude.map Csv.encodeRecord

|

||||

[ Prelude.map T.encodeUtf8 $ T.pack (joinPath modulePath):(showt total):Prelude.map showt cols

|

||||

| (GhcFile{..}, total, cols) <- rows

|

||||

])

|

||||

BSL.writeFile (output </> package <.> "csv")

|

||||

$ Builder.toLazyByteString bs

|

||||

-- Prelude.print byPackage

|

||||

-- Report.

|

||||

mkHtmlFile "./tmp/index.html"

|

||||

$ Report.index $ Map.keys stats_by_package

|

||||

copyFile "files/main.css" "./tmp/main.css" -- TODO use data files

|

||||

main = join $ execParser (info (helper <*> opts) idm)

|

||||

where

|

||||

output = "./tmp"

|

||||

opts = subparser $

|

||||

command "generate" (info (helper <*> generate) (progDesc "Generate timings report"))

|

||||

<> command "compare" (info (helper <*> compareReports) (progDesc "Compare two reports"))

|

||||

|

||||

-- | Find all files that are related to the dump timings.

|

||||

--

|

||||

-- XXX: this method is not effective enough as it eagerly builds a list of FilePath

|

||||

findDumpTimings :: String -> IO [FilePath]

|

||||

findDumpTimings input = do

|

||||

runResourceT $ runConduit $ sourceDirectoryDeep False input

|

||||

.| CL.filter (\x -> x `endsWith` ".dump-timings")

|

||||

.| consume

|

||||

where

|

||||

endsWith x y = (reverse y) `isPrefixOf` (reverse x)

|

||||

-- | Gather arguments for report generation.

|

||||

generate :: Options.Parser (IO ())

|

||||

generate = runGenerate

|

||||

<$> strOption

|

||||

( short 'i'

|

||||

<> long "input"

|

||||

<> metavar "PROJECT_DIR"

|

||||

<> help "Haskell project directory"

|

||||

)

|

||||

|

||||

mkHtmlFile :: FilePath -> Markup -> IO ()

|

||||

mkHtmlFile fn markup = do

|

||||

B.writeFile fn "" -- TODO: properly cleanup the file

|

||||

renderMarkupToByteStringIO

|

||||

(B.appendFile fn) -- TODO: keep handle opened instead of reopening each time.

|

||||

markup

|

||||

-- | Gather arguments for comparing reports.

|

||||

compareReports :: Options.Parser (IO ())

|

||||

compareReports = runCompare

|

||||

<$> strOption

|

||||

( short 'b'

|

||||

<> long "before"

|

||||

<> metavar "REPORT_DIR"

|

||||

<> help "Directory with generated timings report from the previous build"

|

||||

)

|

||||

<*> strOption

|

||||

( short 'a'

|

||||

<> long "after"

|

||||

<> metavar "REPORT_DIR"

|

||||

<> help "Directory with generated timings report from the last build"

|

||||

)

|

||||

<*> strOption

|

||||

( short 'o'

|

||||

<> long "output"

|

||||

<> metavar "OUTPUT_DIR"

|

||||

<> help "Directory in which to store the output"

|

||||

)

|

||||

|

||||

@ -30,11 +30,11 @@ cabal v2-build

|

||||

```

|

||||

|

||||

At this point I don't suggest you to install the tool because

|

||||

at such an early stage it will likely require manual configuration

|

||||

at such an early stage it will likely require manual configuration

|

||||

a lot.

|

||||

|

||||

3. Configure your project in order to generate timing files:

|

||||

|

||||

|

||||

```bash

|

||||

cabal v2-configure --ghc-options=-ddump-timings --ghc-options=-ddump-to-file

|

||||

```

|

||||

@ -45,7 +45,7 @@ to store those reports to files.

|

||||

4. Running:

|

||||

|

||||

```bash

|

||||

cabal v2-run ghc-timings /Users/qnikst/workspace/another-project/dist-newstyle

|

||||

cabal v2-run ghc-timings generate -i /Users/qnikst/workspace/another-project/dist-newstyle

|

||||

```

|

||||

|

||||

In the `tmp` folder you'll get all the reports.

|

||||

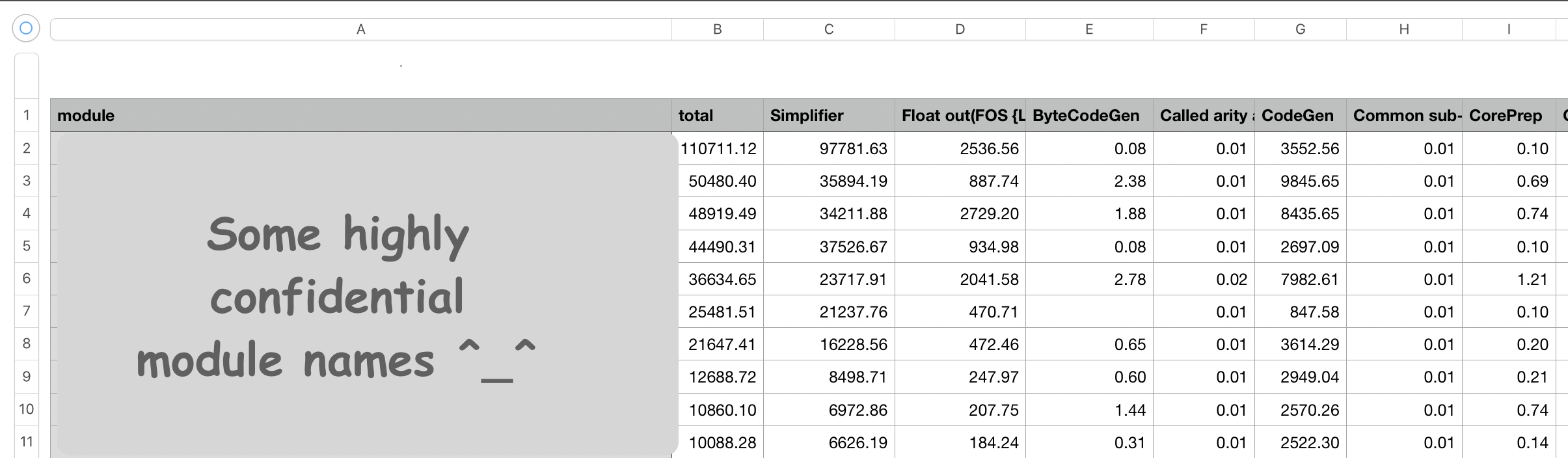

@ -84,6 +84,21 @@ package that works with tables like numbers, you'll see something like this:

|

||||

|

||||

|

||||

|

||||

# Compare

|

||||

|

||||

**Prerequisites**: you need to have `gnuplot` executable on your `$PATH` to use it.

|

||||

|

||||

With following `compare` command you may actually compare results of two different builds.

|

||||

|

||||

```bash

|

||||

cabal v2-run ghc-timings compare -b ./before -a ./after -o output_dir

|

||||

```

|

||||

|

||||

It will produce a bunch of plots with package/project module names and `.svg` extension and one more `package.svg` with a summary.

|

||||

|

||||

On the plot you can observe comparison between build times from two build runs per each phase (in `<module>.svg`) or per each module (in `package.svg`).

|

||||

|

||||

|

||||

# Project ideology.

|

||||

|

||||

Here I want to share a bits of how I work on this project and it's not usual one for me.

|

||||

|

||||

23

files/module.template.gnuplot

Normal file

23

files/module.template.gnuplot

Normal file

@ -0,0 +1,23 @@

|

||||

set datafile separator "\t"

|

||||

set terminal svg

|

||||

set output '<OUTPUT_FILE>'

|

||||

set datafile missing ""

|

||||

|

||||

set title "<TITLE>"

|

||||

set ylabel "ms"

|

||||

|

||||

set yrange [10:*]

|

||||

|

||||

set xtics rotate by 45 offset 0,-1 font ",9"

|

||||

|

||||

set boxwidth 0.3

|

||||

set style fill solid 1.000000 border -1

|

||||

set bmargin 3

|

||||

set pointsize 2

|

||||

set tics scale 0.0

|

||||

set grid y

|

||||

set style data lines

|

||||

plot '<INPUT_FILE>' using ($0-0.2):3 index 0 with boxes title "before", \

|

||||

'' using ($0+0.2):4 index 0 with boxes title "after", \

|

||||

'' using ($0):xtic(1) lw 0 notitle

|

||||

|

||||

22

files/package.template.gnuplot

Normal file

22

files/package.template.gnuplot

Normal file

@ -0,0 +1,22 @@

|

||||

set datafile separator "\t"

|

||||

set terminal svg size 8000,4000

|

||||

set output '<OUTPUT_FILE>'

|

||||

set datafile missing ""

|

||||

|

||||

set xlabel "ms"

|

||||

|

||||

set yrange [-1:*]

|

||||

|

||||

set xrange [10:*]

|

||||

|

||||

set boxwidth 0.3

|

||||

set style fill solid # solid color boxes

|

||||

# unset key # turn off all titles

|

||||

|

||||

myBoxWidth = 0.4

|

||||

set offsets 0,0,0.5-myBoxWidth/2.,0.5

|

||||

|

||||

plot '<INPUT_FILE>' using (2):0:0:2:($0-myBoxWidth/2.):($0+myBoxWidth/2.):($0+1) index 0 with boxxyerror title "before", \

|

||||

'' using ($3+0.2):0:0:3:($0+0.5-myBoxWidth/2.):($0+0.5+myBoxWidth/2.):($0+1) index 0 with boxxyerror title "after", \

|

||||

'' using 2:0:ytic(1) lw 0 ps 0 notitle

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

cabal-version: >=1.10

|

||||

cabal-version: 2.0

|

||||

synopsis: Get statistical report about how long files were compiled.

|

||||

description: Simple package that can gather information about compilation

|

||||

time for later analysis.

|

||||

@ -12,12 +12,18 @@ maintainer: alexander.vershilov@gmail.com

|

||||

-- category:

|

||||

build-type: Simple

|

||||

extra-source-files: CHANGELOG.md

|

||||

data-files: files/*.gnuplot

|

||||

files/*.css

|

||||

|

||||

executable ghc-timings

|

||||

main-is: Main.hs

|

||||

other-modules: GhcFile

|

||||

autogen-modules: Paths_ghc_timings

|

||||

other-modules: Compare

|

||||

GhcFile

|

||||

GhcBuildPhase

|

||||

Generate

|

||||

Report

|

||||

Paths_ghc_timings

|

||||

hs-source-dirs: src

|

||||

.

|

||||

default-extensions: DerivingStrategies

|

||||

@ -43,8 +49,11 @@ executable ghc-timings

|

||||

directory,

|

||||

resourcet,

|

||||

filepath,

|

||||

optparse-applicative,

|

||||

process,

|

||||

text,

|

||||

text-show,

|

||||

these,

|

||||

vector

|

||||

ghc-options: -Wall -Werror

|

||||

default-language: Haskell2010

|

||||

|

||||

391

src/Compare.hs

Normal file

391

src/Compare.hs

Normal file

@ -0,0 +1,391 @@

|

||||

{-# LANGUAGE BlockArguments #-}

|

||||

{-# LANGUAGE TypeApplications #-}

|

||||

{-# LANGUAGE NamedFieldPuns #-}

|

||||

{-# LANGUAGE RankNTypes #-}

|

||||

{-# LANGUAGE PatternSynonyms #-}

|

||||

module Compare

|

||||

( runCompare

|

||||

) where

|

||||

|

||||

import Control.Exception

|

||||

import Control.Monad

|

||||

import Data.Char (ord)

|

||||

import Data.Csv as Csv

|

||||

import Data.Csv.Builder as Csv

|

||||

import Data.Foldable

|

||||

import Data.Function

|

||||

import Data.Functor

|

||||

import Data.List

|

||||

import Data.Maybe as M

|

||||

import Data.These

|

||||

import Data.These.Combinators

|

||||

import Data.Traversable

|

||||

import Prelude hiding (mapM_, print)

|

||||

import System.Directory

|

||||

import System.Environment

|

||||

import System.FilePath

|

||||

import System.Process

|

||||

import System.IO.Unsafe

|

||||

|

||||

|

||||

import qualified Data.Binary.Builder as Builder

|

||||

import qualified Data.ByteString.Lazy as BSL

|

||||

import qualified Data.List as List

|

||||

import qualified Data.Map.Merge.Strict as Map

|

||||

import qualified Data.Map.Strict as Map

|

||||

import qualified Data.Text as T

|

||||

import qualified Data.Text.IO as T

|

||||

import qualified Data.Vector as V

|

||||

import qualified Prelude

|

||||

|

||||

import GhcBuildPhase

|

||||

import Paths_ghc_timings (getDataFileName)

|

||||

|

||||

pattern MODULE_TEMPLATE_FILENAME :: FilePath

|

||||

pattern MODULE_TEMPLATE_FILENAME = "module.template.gnuplot"

|

||||

|

||||

pattern PACKAGE_TEMPLATE_FILENAME :: FilePath

|

||||

pattern PACKAGE_TEMPLATE_FILENAME = "package.template.gnuplot"

|

||||

|

||||

pattern DUMP_TIMINGS_PATTERN :: FilePath

|

||||

pattern DUMP_TIMINGS_PATTERN = ".dump-timings.csv"

|

||||

|

||||

pattern INPUT_FILE, OUTPUT_FILE, TITLE :: T.Text

|

||||

pattern INPUT_FILE = "<INPUT_FILE>"

|

||||

pattern OUTPUT_FILE = "<OUTPUT_FILE>"

|

||||

pattern TITLE = "<TITLE>"

|

||||

|

||||

pattern GNUPLOT_CMD :: String

|

||||

pattern GNUPLOT_CMD = "gnuplot"

|

||||

|

||||

pattern DEBUG_ENV :: String

|

||||

pattern DEBUG_ENV = "DEBUG"

|

||||

|

||||

-- | A helper function that allows instead of writing comments emit an output.

|

||||

--

|

||||

-- Actually it's just a less generic Debug.Trace.traceM.

|

||||

explain :: String -> IO ()

|

||||

{-# NOINLINE explain #-}

|

||||

explain = unsafePerformIO $ do

|

||||

lookupEnv DEBUG_ENV >>= \case

|

||||

Nothing -> pure \_ -> pure ()

|

||||

Just{} -> pure \s -> putStrLn $ "Debug: " <> s

|

||||

|

||||

runCompare :: FilePath -> FilePath -> FilePath -> IO ()

|

||||

runCompare beforeDir afterDir out = do

|

||||

explain "ensure output directory created"

|

||||

createDirectoryIfMissing True out

|

||||

explain "ensure both report directories exist"

|

||||

checkDirectoriesExistence beforeDir afterDir

|

||||

explain "read CSV file paths from both directories"

|

||||

explain "combine requried data from both reports"

|

||||

mergedModulesData <- prepareData

|

||||

explain "and make a plot for all compilation stages for all modules"

|

||||

explain "and make one more report for a whole package/project"

|

||||

visualizeData mergedModulesData

|

||||

where

|

||||

prepareData :: IO (V.Vector ModuleReportRow)

|

||||

prepareData = do

|

||||

dirContentsBefore <- fixPaths beforeDir =<< filterCSV <$> listDirectory beforeDir

|

||||

dirContentsAfter <- fixPaths afterDir =<< filterCSV <$> listDirectory afterDir

|

||||

modulesDataBefore <- getModulesDataFromReport dirContentsBefore

|

||||

modulesDataAfter <- getModulesDataFromReport dirContentsAfter

|

||||

let mergedModulesData = mergeModulesReports modulesDataBefore modulesDataAfter

|

||||

pure mergedModulesData

|

||||

visualizeData :: V.Vector ModuleReportRow -> IO ()

|

||||

visualizeData mergedModulesData =

|

||||

withCurrentDirectory out $

|

||||

withMaterializedFile MODULE_TEMPLATE_FILENAME $

|

||||

withMaterializedFile PACKAGE_TEMPLATE_FILENAME $ do

|

||||

renderModules mergedModulesData

|

||||

renderPackage mergedModulesData

|

||||

|

||||

|

||||

-- ** Helpers for `compare`

|

||||

|

||||

-- | Check if required directores does exist.

|

||||

checkDirectoriesExistence :: FilePath -> FilePath -> IO ()

|

||||

checkDirectoriesExistence before after = traverse_ check [before, after] where

|

||||

check x = do

|

||||

exists <- doesDirectoryExist x

|

||||

when (not exists) $ error $ "Directory not exist: " <> x

|

||||

|

||||

-- | Filters .dump-timings files.

|

||||

filterCSV :: [FilePath] -> [FilePath]

|

||||

filterCSV = Prelude.filter ((== DUMP_TIMINGS_PATTERN) . takeExtensions)

|

||||

|

||||

-- | Makes path an absolute one

|

||||

fixPaths :: FilePath -> [FilePath] -> IO [FilePath]

|

||||

fixPaths dir = traverse (makeAbsolute . (dir </>))

|

||||

|

||||

getModulesDataFromReport :: [FilePath] -> IO (Map.Map T.Text (V.Vector Phase))

|

||||

getModulesDataFromReport xs = Map.fromList . M.catMaybes <$> for xs \file ->

|

||||

BSL.readFile file <&> decodeByName >>= \case

|

||||

Left err -> do

|

||||

putStrLn $ "getModulesDataFromReport : " <> show err

|

||||

pure Nothing

|

||||

Right (_headers, result)

|

||||

| V.null result -> pure Nothing

|

||||

| otherwise -> pure $ Just (phaseModule $ V.head result, result)

|

||||

|

||||

mergeModulesReports

|

||||

:: Map.Map (T.Text) (V.Vector Phase) -- ^ An old report

|

||||

-> Map.Map (T.Text) (V.Vector Phase) -- ^ A new port

|

||||

-> V.Vector ModuleReportRow

|

||||

mergeModulesReports modulesBefore modulesAfter = moduleReport where

|

||||

moduleReport :: V.Vector ModuleReportRow

|

||||

moduleReport = V.map makeModuleReport moduleDiffs

|

||||

moduleDiffs :: V.Vector ModuleDiff

|

||||

moduleDiffs = gatherModuleDiffs modulesBefore modulesAfter

|

||||

|

||||

|

||||

-- | Gather info for building a diff.

|

||||

--

|

||||

gatherModuleDiffs

|

||||

:: Map.Map T.Text (V.Vector Phase)

|

||||

-> Map.Map T.Text (V.Vector Phase)

|

||||

-> V.Vector ModuleDiff

|

||||

gatherModuleDiffs before after = V.fromList

|

||||

$ zipWith

|

||||

(\moduleDataIndex (moduleDataModuleName, moduleDataData) -> ModuleData{..})

|

||||

[0..]

|

||||

(Map.toList moduleMap)

|

||||

where

|

||||

moduleMap = Map.merge

|

||||

(Map.mapMissing $ \_key b -> makeBefore b) -- exists only in old datum

|

||||

(Map.mapMissing $ \_key a -> makeAfter a) -- exists only in new datum

|

||||

(Map.zipWithMatched $ \_key a b -> makeBoth b a) -- exists in both data

|

||||

before

|

||||

after

|

||||

|

||||

makeModuleReport :: ModuleDiff -> ModuleReportRow

|

||||

makeModuleReport ModuleData{..} = ModuleData

|

||||

{ moduleDataData = transform moduleDataData

|

||||

, ..

|

||||

}

|

||||

where

|

||||

transform :: Diff (V.Vector Phase) -> V.Vector PhaseReport

|

||||

transform = \case

|

||||

This b -> V.map (makePhaseReportWith makeBefore) (V.indexed b)

|

||||

That a -> V.map (makePhaseReportWith makeAfter) (V.indexed a)

|

||||

These b a -> V.fromList $ List.reverse

|

||||

$ buildWholeSequence (V.toList b) (V.toList a)

|

||||

|

||||

makePhaseReportWith make (ix, Phase{..}) = PhaseReport

|

||||

{ phaseReportPhaseName = phaseName

|

||||

, phaseReportIndex = ix

|

||||

, phaseDiffTime = make phaseTime

|

||||

}

|

||||

|

||||

buildWholeSequence bs as

|

||||

= runBuilder bs as $ optimize $ List.reverse (goFwd [] bs as)

|

||||

where

|

||||

goFwd result [] [] = result

|

||||

goFwd result [] ys = TakeSecond Forward (List.length ys) : result

|

||||

goFwd result xs [] = TakeFirst Forward (List.length xs) : result

|

||||

goFwd result (x : xs) (y : ys) =

|

||||

if phaseName x == phaseName y

|

||||

then goFwd (TakeBoth Forward 1 : result) xs ys

|

||||

else goBack result (xs, ys) (List.reverse xs) (List.reverse ys)

|

||||

|

||||

goBack result _prev [] [] = result

|

||||

goBack result _prev [] ys = TakeSecond Backward (List.length ys) : result

|

||||

goBack result _prev xs [] = TakeFirst Forward (List.length xs) : result

|

||||

goBack result prev@(oldX, oldY) (x : xs) (y : ys) =

|

||||

if phaseName x == phaseName y

|

||||

then goBack (TakeBoth Backward 1 : result) (initBothEnds prev) xs ys

|

||||

else goDeeper result oldX oldY

|

||||

|

||||

initBothEnds ([], []) = ([], [])

|

||||

initBothEnds ([], ys) = ([], List.init ys)

|

||||

initBothEnds (xs, []) = (List.init xs, [])

|

||||

initBothEnds (xs, ys) = (List.init xs, List.init ys)

|

||||

|

||||

goDeeper result [] [] = result

|

||||

goDeeper result [] ys = goFwd result [] ys

|

||||

goDeeper result xs [] = goFwd result xs []

|

||||

goDeeper result xs ys = if List.length xs <= List.length ys

|

||||

then let SubsequenceData{..} =

|

||||

goFindSubsequences (emptySubsequenceData @Phase) xs ys

|

||||

in goFwd (TakeBoth Forward commonSubsequenceLength : TakeFirst Forward prefixLength : result) restOfFirst restOfSecond

|

||||

else let SubsequenceData{..} = goFindSubsequences emptySubsequenceData ys xs

|

||||

in goFwd (TakeBoth Forward commonSubsequenceLength : TakeSecond Forward prefixLength : result) restOfSecond restOfFirst

|

||||

|

||||

goFindSubsequences prev [] ys = prev { restOfSecond = ys }

|

||||

goFindSubsequences prev xs [] = prev { restOfFirst = xs }

|

||||

goFindSubsequences prev xs ys =

|

||||

let msubsequence = listToMaybe

|

||||

$ List.filter ((== (fmap phaseName xs)) . fmap phaseName . snd)

|

||||

$ subsequencesWithLength (List.length xs) ys

|

||||

new = prev { prefixLength = prefixLength prev + 1 }

|

||||

in case msubsequence of

|

||||

Nothing -> goFindSubsequences new xs ys

|

||||

Just (ix, subseq) ->

|

||||

prev { prefixLength = prefixLength prev + 1

|

||||

, commonSubsequenceLength = List.length subseq

|

||||

, restOfFirst = []

|

||||

, restOfSecond = List.drop (List.length subseq + ix) ys

|

||||

}

|

||||

subsequencesWithLength :: Int -> [a] -> [(Int, [a])]

|

||||

subsequencesWithLength l = snd . f (0, [])

|

||||

where

|

||||

f res [] = res

|

||||

f (ix, prev) listElems

|

||||

= f (ix + 1, (ix, List.take l listElems) : prev) (List.drop 1 listElems)

|

||||

|

||||

optimize = id -- TODO: improve

|

||||

|

||||

runBuilder xs' ys' actions = go [] (withIndices xs') (withIndices ys') actions

|

||||

where

|

||||

withIndices = zip [(0 :: Int) ..]

|

||||

go res _ _ [] = res

|

||||

go res xs ys (listAction : listActions) =

|

||||

let (newXs, newYs, newRes) = case listAction of

|

||||

TakeFirst Forward l ->

|

||||

(List.drop l xs, ys, (mkBefore <$> List.take l xs) <> res)

|

||||

TakeSecond Forward l ->

|

||||

(xs, List.drop l ys, (mkAfter <$> List.take l ys) <> res)

|

||||

TakeBoth Forward l ->

|

||||

(List.drop l xs, List.drop l ys, (mkBoth <$> List.zip (List.take l xs) (List.take l ys)) <> res)

|

||||

TakeFirst Backward l ->

|

||||

(dropEnd l xs, ys, res <> (mkBefore <$> takeEnd l xs))

|

||||

TakeSecond Backward l ->

|

||||

(xs, dropEnd l ys, res <> (mkAfter <$> takeEnd l ys))

|

||||

TakeBoth Backward l ->

|

||||

(dropEnd l xs, dropEnd l ys, res <> (mkBoth <$> List.zip (takeEnd l xs) (takeEnd l ys)))

|

||||

mkBefore (ix, Phase{..}) = PhaseReport

|

||||

{ phaseReportPhaseName = phaseName

|

||||

, phaseReportIndex = ix

|

||||

, phaseDiffTime = makeBefore phaseTime

|

||||

}

|

||||

mkAfter (ix, Phase{..}) = PhaseReport

|

||||

{ phaseReportPhaseName = phaseName

|

||||

, phaseReportIndex = ix

|

||||

, phaseDiffTime = makeAfter phaseTime

|

||||

}

|

||||

mkBoth ((ixb, b), (ixa, a)) = PhaseReport

|

||||

{ phaseReportPhaseName = phaseName b

|

||||

, phaseReportIndex = min ixb ixa

|

||||

, phaseDiffTime = makeBoth (phaseTime b) (phaseTime a)

|

||||

}

|

||||

dropEnd l = List.reverse . List.drop l . List.reverse

|

||||

takeEnd l = List.reverse . List.take l . List.reverse

|

||||

in go newRes newXs newYs listActions

|

||||

|

||||

data PhaseReport = PhaseReport

|

||||

{ phaseReportPhaseName :: T.Text

|

||||

, phaseReportIndex :: Int

|

||||

, phaseDiffTime :: Diff Double

|

||||

} deriving Show

|

||||

|

||||

data ModuleData a = ModuleData

|

||||

{ moduleDataIndex :: Int

|

||||

, moduleDataModuleName :: T.Text

|

||||

, moduleDataData :: a

|

||||

} deriving Show

|

||||

|

||||

|

||||

type Diff a = These a a

|

||||

|

||||

makeAfter, makeBefore :: a -> Diff a

|

||||

makeBefore a = This a

|

||||

makeAfter a = That a

|

||||

|

||||

makeBoth :: a -> a -> Diff a

|

||||

makeBoth b a = These b a

|

||||

|

||||

type ModuleDiff = ModuleData (Diff (V.Vector Phase))

|

||||

|

||||

type ModuleReportRow = ModuleData (V.Vector PhaseReport)

|

||||

|

||||

-- ** Parser Helpers

|

||||

|

||||

data Direction = Forward | Backward

|

||||

deriving (Eq, Show, Ord)

|

||||

|

||||

data SequenceAction

|

||||

= TakeFirst Direction Int

|

||||

| TakeSecond Direction Int

|

||||

| TakeBoth Direction Int

|

||||

deriving (Eq, Show, Ord)

|

||||

|

||||

data SubsequenceData a = SubsequenceData

|

||||

{ prefixLength :: Int

|

||||

, commonSubsequenceLength :: Int

|

||||

, restOfFirst :: [a]

|

||||

, restOfSecond :: [a]

|

||||

}

|

||||

|

||||

emptySubsequenceData :: forall a. SubsequenceData a

|

||||

emptySubsequenceData = SubsequenceData

|

||||

{ prefixLength = 0

|

||||

, commonSubsequenceLength = 0

|

||||

, restOfFirst = []

|

||||

, restOfSecond = []

|

||||

}

|

||||

|

||||

renderModules, renderPackage :: V.Vector ModuleReportRow -> IO ()

|

||||

renderModules rows = do

|

||||

let output = renderRow <$> V.toList rows

|

||||

Prelude.mapM_ buildPlot output

|

||||

where

|

||||

opts = Csv.defaultEncodeOptions { Csv.encDelimiter = fromIntegral (ord '\t') }

|

||||

renderRow row =

|

||||

let content = mconcat

|

||||

(Prelude.map (Csv.encodeRecordWith opts)

|

||||

[ ( phaseReportPhaseName pr

|

||||

, moduleDataModuleName row

|

||||

, fromMaybe 0.0 $ justHere $ phaseDiffTime pr

|

||||

, fromMaybe 0.0 $ justThere $ phaseDiffTime pr

|

||||

)

|

||||

| pr <- V.toList (moduleDataData row)

|

||||

])

|

||||

in (moduleDataModuleName row, content)

|

||||

buildPlot (moduleName, bs) = do

|

||||

plotSettingsTemplate <- T.readFile MODULE_TEMPLATE_FILENAME

|

||||

let plotSettingsFile = T.unpack moduleName <.> "gnuplot"

|

||||

outputFile = T.unpack moduleName <.> "svg"

|

||||

plotDataFile = T.unpack moduleName <.> "dat"

|

||||

plotSettingsContents

|

||||

= T.replace INPUT_FILE (T.pack plotDataFile)

|

||||

$ T.replace TITLE moduleName

|

||||

$ T.replace OUTPUT_FILE (T.pack outputFile)

|

||||

plotSettingsTemplate

|

||||

T.writeFile plotSettingsFile plotSettingsContents

|

||||

BSL.writeFile plotDataFile $ Builder.toLazyByteString bs

|

||||

createPlot plotSettingsFile

|

||||

removeFile plotSettingsFile

|

||||

createPlot plotFile = callProcess GNUPLOT_CMD [plotFile]

|

||||

|

||||

renderPackage rows = do

|

||||

let output = renderRow <$> V.toList rows

|

||||

buildPlot "package.svg" output

|

||||

where

|

||||

opts = Csv.defaultEncodeOptions { Csv.encDelimiter = fromIntegral (ord '\t') }

|

||||

renderRow row = Csv.encodeRecordWith opts

|

||||

(moduleDataModuleName row, totalBefore row, totalAfter row)

|

||||

totalBefore row = sum $ catThere $ fmap phaseDiffTime . V.toList $ moduleDataData $ row

|

||||

totalAfter row = sum $ catThere $ fmap phaseDiffTime . V.toList $ moduleDataData $ row

|

||||

buildPlot outputFile bs = do

|

||||

plotSettingsTemplate <- T.readFile PACKAGE_TEMPLATE_FILENAME

|

||||

let plotDataFile = "package.dat"

|

||||

plotSettingsFile = "package.gnuplot"

|

||||

plotSettingsContents

|

||||

= T.replace INPUT_FILE (T.pack plotDataFile)

|

||||

$ T.replace OUTPUT_FILE outputFile

|

||||

plotSettingsTemplate

|

||||

T.writeFile plotSettingsFile plotSettingsContents

|

||||

BSL.writeFile plotDataFile $ Builder.toLazyByteString $ mconcat bs

|

||||

createPlot plotSettingsFile

|

||||

createPlot plotFile = callProcess GNUPLOT_CMD [plotFile]

|

||||

|

||||

-- | Temporary materialize a file in the given directory. File is

|

||||

-- removed when the function exits.

|

||||

withMaterializedFile

|

||||

:: FilePath -- ^ File name

|

||||

-> IO a

|

||||

-> IO a

|

||||

withMaterializedFile name f =

|

||||

getDataFileName name >>= \fp ->

|

||||

bracket_ (copyFile fp name) (removeFile name) f

|

||||

|

||||

139

src/Generate.hs

Normal file

139

src/Generate.hs

Normal file

@ -0,0 +1,139 @@

|

||||

{-# LANGUAGE TransformListComp #-}

|

||||

module Generate where

|

||||

|

||||

import Control.Monad

|

||||

import Control.Monad.Trans.Resource

|

||||

import Data.Aeson

|

||||

import Data.Conduit

|

||||

import Data.Conduit.Combinators as CL

|

||||

import Data.Conduit.List

|

||||

import Data.Csv as Csv

|

||||

import Data.Csv.Builder as Csv

|

||||

import Data.Either

|

||||

import Data.Foldable

|

||||

import Data.Functor

|

||||

import Data.List

|

||||

import Data.Set as Set

|

||||

import Data.Traversable

|

||||

import GHC.Exts

|

||||

import System.Directory

|

||||

import System.FilePath

|

||||

import Text.Blaze (Markup)

|

||||

import Text.Blaze.Renderer.Utf8 (renderMarkupToByteStringIO)

|

||||

import TextShow

|

||||

|

||||

import qualified Data.ByteString as B

|

||||

import qualified Data.ByteString.Lazy as BSL

|

||||

import qualified Data.Binary.Builder as Builder

|

||||

import qualified Data.Map.Strict as Map

|

||||

import qualified Data.Text.Encoding as T

|

||||

import qualified Data.Text.IO as T

|

||||

import qualified Data.Text as T

|

||||

import qualified Data.Vector as V

|

||||

|

||||

import GhcBuildPhase

|

||||

import GhcFile

|

||||

import Report

|

||||

|

||||

runGenerate :: FilePath -> IO ()

|

||||

runGenerate dir = do

|

||||

createDirectoryIfMissing True output

|

||||

|

||||

files <- findDumpTimings dir

|

||||

|

||||

let ( files_failed,

|

||||

files_parsed)

|

||||

= partitionEithers $ files <&> \srcFilePath ->

|

||||

case stripPrefix dir srcFilePath of

|

||||

Nothing -> Left srcFilePath

|

||||

Just x -> case splitDirectories x of

|

||||

("/" : "build" : hostOs : ghcVersion : packageName : componentType : subComponent : "build" : modulePath) ->

|

||||

Right GhcFile{..}

|

||||

("/" : "build" : hostOs : ghcVersion : packageName : "build" : modulePath) ->

|

||||

let componentType = ""

|

||||

subComponent = ""

|

||||

in Right GhcFile{..}

|

||||

("/": "dist": hostOs : _cabalVersion : "build": modulePath) ->

|

||||

-- FIXME: should be retrieved from stack somehow

|

||||

let ghcVersion = "<GHC version>"

|

||||

packageName = "<Package name>"

|

||||

componentType = ""

|

||||

subComponent = ""

|

||||

in Right GhcFile{..}

|

||||

_ -> Left srcFilePath

|

||||

|

||||

unless (Prelude.null files_failed) $ do

|

||||

Prelude.putStrLn "Warning, some files are failed to be parsed"

|

||||

Prelude.print files_failed

|

||||

|

||||

|

||||

-- Output all files in json form for later analysis.

|

||||

results <- for files_parsed $ \f -> do

|

||||

steps <- fmap parsePhases $ T.readFile (rebuildFilePath f)

|

||||

encodeFile (output </> rebuildPlainPath f <.> "json") steps

|

||||

let bs = encodeDefaultOrderedByName steps

|

||||

BSL.writeFile (output </> rebuildPlainPath f <.> "csv") bs

|

||||

mkHtmlFile (output </> rebuildPlainPath f <.> "html")

|

||||

$ Report.moduleTable f steps

|

||||

pure (f, steps)

|

||||

|

||||

let stats_by_package = Map.fromListWith (<>)

|

||||

[ (packageName, Map.singleton GhcFile{..} steps)

|

||||

| (GhcFile{..}, steps) <- results

|

||||

]

|

||||

-- FIXME: put this file back later

|

||||

-- encodeFile (output </> "stats_by_package" <.> "json") stats_by_package

|

||||

for_ (Map.toList stats_by_package) $ \(package, stat) -> do

|

||||

let headers = Set.toList $ Set.fromList

|

||||

[ phaseName

|

||||

| (_, steps) <- Map.toList stat

|

||||

, Phase{..} <- steps

|

||||

]

|

||||

let rows = [ ( GhcFile{..}

|

||||

, total

|

||||

, Prelude.map (\n -> Map.lookup n by_phase) headers)

|

||||

| (GhcFile{..}, steps) <- Map.toList stat

|

||||

, let total = Prelude.sum [phaseTime | Phase{..} <- steps]

|

||||

, let by_phase = Map.fromListWith (+)

|

||||

[ (phaseName, phaseTime)

|

||||

| Phase{..} <- steps

|

||||

]

|

||||

, then sortWith by (Down total)

|

||||

]

|

||||

mkHtmlFile (output <> "/" <> package <> ".html")

|

||||

$ Report.packageTable package headers rows

|

||||

let bs = Csv.encodeHeader (V.fromList ("module": "total": Prelude.map T.encodeUtf8 headers))

|

||||

<> mconcat (Prelude.map Csv.encodeRecord

|

||||

[ Prelude.map T.encodeUtf8 $ T.pack (joinPath modulePath):(showt total):Prelude.map showt cols

|

||||

| (GhcFile{..}, total, cols) <- rows

|

||||

])

|

||||

BSL.writeFile (output </> package <.> "csv")

|

||||

$ Builder.toLazyByteString bs

|

||||

-- Prelude.print byPackage

|

||||

-- Report.

|

||||

mkHtmlFile (output <> "/index.html")

|

||||

$ Report.index $ Map.keys stats_by_package

|

||||

copyFile "files/main.css" (output <> "/main.css") -- TODO use data files

|

||||

where

|

||||

output :: IsString a => a

|

||||

output = "./tmp"

|

||||

|

||||

-- ** Helpers

|

||||

|

||||

-- | Find all files that are related to the dump timings.

|

||||

--

|

||||

-- XXX: this method is not effective enough as it eagerly builds a list of FilePath

|

||||

findDumpTimings :: String -> IO [FilePath]

|

||||

findDumpTimings input = do

|

||||

runResourceT $ runConduit $ sourceDirectoryDeep False input

|

||||

.| CL.filter (\x -> x `endsWith` ".dump-timings")

|

||||

.| consume

|

||||

where

|

||||

endsWith x y = (reverse y) `isPrefixOf` (reverse x)

|

||||

|

||||

mkHtmlFile :: FilePath -> Markup -> IO ()

|

||||

mkHtmlFile fn markup = do

|

||||

B.writeFile fn "" -- TODO: properly cleanup the file

|

||||

renderMarkupToByteStringIO

|

||||

(B.appendFile fn) -- TODO: keep handle opened instead of reopening each time.

|

||||

markup

|

||||

@ -21,9 +21,9 @@ data Phase = Phase

|

||||

, phaseAlloc :: Int

|

||||

, phaseTime :: Double

|

||||

}

|

||||

deriving stock (Generic)

|

||||

deriving stock (Generic, Eq, Show)

|

||||

deriving anyclass (ToJSON, FromJSON)

|

||||

deriving anyclass (ToNamedRecord, DefaultOrdered)

|

||||

deriving anyclass (ToNamedRecord, DefaultOrdered, FromNamedRecord)

|

||||

deriving TextShow via (FromGeneric Phase)

|

||||

|

||||

-- | Parse .ghc-timings file timings file and get list of phases.

|

||||

|

||||

Loading…

Reference in New Issue

Block a user