Mirror of https://github.com/sd-webui/stable-diffusion-webui.git

Synced 2024-12-14 23:02:00 +03:00

Merge branch 'dev' into master


commit 25cc2af82b

@@ -34,7 +34,6 @@ maxMessageSize = 200
enableWebsocketCompression = false

[browser]
serverAddress = "localhost"
gatherUsageStats = false
serverPort = 8501

29 README.md

@@ -6,16 +6,16 @@

## Installation instructions for:

- **[Windows](https://sd-webui.github.io/stable-diffusion-webui/docs/1.windows-installation.html)**
- **[Linux](https://sd-webui.github.io/stable-diffusion-webui/docs/2.linux-installation.html)**
- **[Windows](https://sygil-dev.github.io/sygil-webui/docs/1.windows-installation.html)**
- **[Linux](https://sygil-dev.github.io/sygil-webui/docs/2.linux-installation.html)**

### Want to ask a question or request a feature?

Come to our [Discord Server](https://discord.gg/gyXNe4NySY) or use [Discussions](https://github.com/sygil-dev/stable-diffusion-webui/discussions).
Come to our [Discord Server](https://discord.gg/gyXNe4NySY) or use [Discussions](https://github.com/sygil-dev/sygil-webui/discussions).

## Documentation

[Documentation is located here](https://sd-webui.github.io/stable-diffusion-webui/)
[Documentation is located here](https://sygil-dev.github.io/sygil-webui/)

## Want to contribute?

@@ -30,7 +30,9 @@ Check the [Contribution Guide](CONTRIBUTING.md)
### Project Features:

* Built-in image enhancers and upscalers, including GFPGAN and realESRGAN

* Generator Preview: See your image as its being made

* Run additional upscaling models on CPU to save VRAM

* Textual inversion: [Reaserch Paper](https://textual-inversion.github.io/)

@@ -64,7 +66,7 @@ Check the [Contribution Guide](CONTRIBUTING.md)

* Prompt matrix: Separate multiple prompts using the `|` character, and the system will produce an image for every combination of them.

* [Gradio] Advanced img2img editor with Mask and crop capabilities
* [Gradio] Advanced img2img editor with Mask and crop capabilities

* [Gradio] Mask painting 🖌️: Powerful tool for re-generating only specific parts of an image you want to change (currently Gradio only)
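The prompt matrix bullet describes splitting a prompt on `|` and generating every combination. A minimal sketch of one reading of "every combination", assuming the first segment is always kept and each later segment is either included or left out, so `n` segments yield `2 ** (n - 1)` prompts. The name `prompt_matrix` and the `", "` joiner are illustrative choices, not the project's code.

```python
from itertools import combinations
from typing import List


def prompt_matrix(prompt: str) -> List[str]:
    """Expand 'base|opt1|opt2' into the base prompt plus every subset of the options.

    Assumption: the first segment is always kept; each later segment is toggled.
    """
    parts = [part.strip() for part in prompt.split("|")]
    base, options = parts[0], parts[1:]
    prompts = []
    # Enumerate subsets by size, so the plain base prompt comes first.
    for size in range(len(options) + 1):
        for subset in combinations(options, size):
            prompts.append(", ".join([base, *subset]))
    return prompts


for p in prompt_matrix("a busy city street in a modern city|illustration|cinematic lighting"):
    print(p)
```

With two optional segments this produces four prompts: the base alone, the base with each option, and the base with both.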

@@ -83,9 +85,11 @@ An easy way to work with Stable Diffusion right from your browser.
- Easily customizable defaults, right from the WebUI's Settings tab
- An integrated gallery to show the generations for a prompt
- *Optimized VRAM* usage for bigger generations or usage on lower end GPUs
- *Text2Video:* Generate video clips from text prompts right from the WebUI (WIP)
- *Text to Video:* Generate video clips from text prompts right from the WebUI (WIP)
- Image to Text: Use [CLIP Interrogator](https://github.com/pharmapsychotic/clip-interrogator) to interrogate an image and get a prompt that you can use to generate a similar image using Stable Diffusion.
- *Concepts Library:* Run custom embeddings others have made via textual inversion.
- **Currently in development: [Stable Hord](https://stablehorde.net/) integration; ImgLab, batch inputs, & mask editor from Gradio
- Textual Inversion training: Train your own embeddings on any photo you want and use it on your prompt.
- **Currently in development: [Stable Horde](https://stablehorde.net/) integration; ImgLab, batch inputs, & mask editor from Gradio

**Prompt Weights & Negative Prompts:**

@@ -128,7 +132,7 @@ Lets you improve faces in pictures using the GFPGAN model. There is a checkbox i

If you want to use GFPGAN to improve generated faces, you need to install it separately.
Download [GFPGANv1.4.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.4/GFPGANv1.4.pth) and put it
into the `/stable-diffusion-webui/models/gfpgan` directory.
into the `/sygil-webui/models/gfpgan` directory.

### RealESRGAN
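The GFPGAN instructions amount to a manual download followed by a copy into `models/gfpgan`. A minimal sketch that does both with the Python standard library, assuming the path is relative to the cloned repository root (the README writes it with a leading `/`) and that the file keeps the name from the release URL. `fetch_gfpgan` is a hypothetical helper, not part of sygil-webui.

```python
from pathlib import Path
from urllib.request import urlretrieve

# URL copied from the README; the release tag may differ in later versions.
GFPGAN_URL = "https://github.com/TencentARC/GFPGAN/releases/download/v1.3.4/GFPGANv1.4.pth"


def fetch_gfpgan(webui_root: str, url: str = GFPGAN_URL) -> Path:
    """Download the GFPGAN weights into <webui_root>/models/gfpgan unless already present."""
    target_dir = Path(webui_root) / "models" / "gfpgan"
    target_dir.mkdir(parents=True, exist_ok=True)
    # Keep the file name from the URL, e.g. GFPGANv1.4.pth.
    target = target_dir / url.rsplit("/", 1)[-1]
    if not target.is_file():
        urlretrieve(url, str(target))  # network access required
    return target
```

Called as `fetch_gfpgan("sygil-webui")` from the folder that contains the clone, it skips the download when the weights are already in place.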

@@ -138,20 +142,16 @@ Lets you double the resolution of generated images. There is a checkbox in every
There is also a separate tab for using RealESRGAN on any picture.

Download [RealESRGAN_x4plus.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth) and [RealESRGAN_x4plus_anime_6B.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth).
Put them into the `stable-diffusion-webui/models/realesrgan` directory.

Put them into the `sygil-webui/models/realesrgan` directory.

### LSDR

Download **LDSR** [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [model last.cpkt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1). Rename last.ckpt to model.ckpt and place both under `stable-diffusion-webui/models/ldsr/`
Download **LDSR** [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [model last.cpkt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1). Rename last.ckpt to model.ckpt and place both under `sygil-webui/models/ldsr/`

### GoBig, and GoLatent *(Currently on the Gradio version Only)*

More powerful upscalers that uses a seperate Latent Diffusion model to more cleanly upscale images.

Please see the [Image Enhancers Documentation](docs/6.image_enhancers.md) to learn more.

-----
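The RealESRGAN and LDSR instructions reduce to "these files, with these names, in these folders", with LDSR adding a rename from `last.ckpt` to `model.ckpt`. A minimal sketch of that placement plus a check for anything still missing, assuming the LDSR downloads were saved as `project.yaml` and `last.ckpt` in one folder and that paths are relative to the `sygil-webui` checkout. `install_ldsr`, `missing_weights` and `EXPECTED` are hypothetical names.

```python
import shutil
from pathlib import Path
from typing import Dict, List

# Folder -> file names, taken from the RealESRGAN and LDSR instructions.
EXPECTED: Dict[str, List[str]] = {
    "models/realesrgan": ["RealESRGAN_x4plus.pth", "RealESRGAN_x4plus_anime_6B.pth"],
    "models/ldsr": ["project.yaml", "model.ckpt"],
}


def install_ldsr(download_dir: str, webui_root: str) -> Path:
    """Copy project.yaml and last.ckpt (renamed to model.ckpt) into models/ldsr."""
    src, dest = Path(download_dir), Path(webui_root) / "models" / "ldsr"
    dest.mkdir(parents=True, exist_ok=True)
    shutil.copy2(src / "project.yaml", dest / "project.yaml")
    # The checkpoint is downloaded as last.ckpt but must be named model.ckpt.
    shutil.copy2(src / "last.ckpt", dest / "model.ckpt")
    return dest


def missing_weights(webui_root: str) -> List[str]:
    """List expected files that are not present yet, as paths relative to webui_root."""
    root = Path(webui_root)
    return [
        f"{folder}/{name}"
        for folder, names in EXPECTED.items()
        for name in names
        if not (root / folder / name).is_file()
    ]
```

Running `missing_weights("sygil-webui")` after the downloads gives a quick list of what still needs to be fetched or renamed.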

@@ -211,5 +211,4 @@ Details on the training procedure and data, as well as the intended use of the m
archivePrefix={arXiv},
primaryClass={cs.CV}
}

```

@@ -23,7 +23,7 @@
{
"cell_type": "markdown",
"source": [
"[](https://colab.research.google.com/github/sd-webui/stable-diffusion-webui/blob/dev/Web_based_UI_for_Stable_Diffusion_colab.ipynb)"
"[](https://colab.research.google.com/github/Sygil-Dev/sygil-webui/blob/dev/Web_based_UI_for_Stable_Diffusion_colab.ipynb)"
],
"metadata": {
"id": "S5RoIM-5IPZJ"

@@ -43,28 +43,28 @@
"source": [
"###<center>Web-based UI for Stable Diffusion</center>\n",
"\n",
"## Created by [sd-webui](https://github.com/sd-webui)\n",
"## Created by [Sygil-Dev](https://github.com/Sygil-Dev)\n",
"\n",
"## [Visit sd-webui's Discord Server](https://discord.gg/gyXNe4NySY) [](https://discord.gg/gyXNe4NySY)\n",
"## [Visit Sygil-Dev's Discord Server](https://discord.gg/gyXNe4NySY) [](https://discord.gg/gyXNe4NySY)\n",
"\n",
"## Installation instructions for:\n",
"\n",
"- **[Windows](https://sd-webui.github.io/stable-diffusion-webui/docs/1.windows-installation.html)** \n",
"- **[Linux](https://sd-webui.github.io/stable-diffusion-webui/docs/2.linux-installation.html)**\n",
"- **[Windows](https://sygil-dev.github.io/sygil-webui/docs/1.windows-installation.html)** \n",
"- **[Linux](https://sygil-dev.github.io/sygil-webui/docs/2.linux-installation.html)**\n",
"\n",
"### Want to ask a question or request a feature?\n",
"\n",
"Come to our [Discord Server](https://discord.gg/gyXNe4NySY) or use [Discussions](https://github.com/sd-webui/stable-diffusion-webui/discussions).\n",
"Come to our [Discord Server](https://discord.gg/gyXNe4NySY) or use [Discussions](https://github.com/Sygil-Dev/sygil-webui/discussions).\n",
"\n",
"## Documentation\n",
"\n",
"[Documentation is located here](https://sd-webui.github.io/stable-diffusion-webui/)\n",
"[Documentation is located here](https://sygil-dev.github.io/sygil-webui/)\n",
"\n",
"## Want to contribute?\n",
"\n",
"Check the [Contribution Guide](CONTRIBUTING.md)\n",
"\n",
"[sd-webui](https://github.com/sd-webui) main devs:\n",
"[Sygil-Dev](https://github.com/Sygil-Dev) main devs:\n",
"\n",
"*  [hlky](https://github.com/hlky)\n",
"* [ZeroCool940711](https://github.com/ZeroCool940711)\n",

@@ -172,7 +172,7 @@
"\n",
"If you want to use GFPGAN to improve generated faces, you need to install it separately.\n",
"Download [GFPGANv1.4.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.4/GFPGANv1.4.pth) and put it\n",
"into the `/stable-diffusion-webui/models/gfpgan` directory. \n",
"into the `/sygil-webui/models/gfpgan` directory. \n",
"\n",
"### RealESRGAN\n",
"\n",

@@ -182,13 +182,13 @@
"There is also a separate tab for using RealESRGAN on any picture.\n",
"\n",
"Download [RealESRGAN_x4plus.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth) and [RealESRGAN_x4plus_anime_6B.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth).\n",
"Put them into the `stable-diffusion-webui/models/realesrgan` directory. \n",
"Put them into the `sygil-webui/models/realesrgan` directory. \n",
"\n",
"\n",
"\n",
"### LSDR\n",
"\n",
"Download **LDSR** [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [model last.cpkt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1). Rename last.ckpt to model.ckpt and place both under `stable-diffusion-webui/models/ldsr/`\n",
"Download **LDSR** [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [model last.cpkt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1). Rename last.ckpt to model.ckpt and place both under `sygil-webui/models/ldsr/`\n",
"\n",
"### GoBig, and GoLatent *(Currently on the Gradio version Only)*\n",
"\n",

@@ -265,7 +265,7 @@
{
"cell_type": "markdown",
"source": [
"# Utils"
"# Setup"
],
"metadata": {
"id": "IZjJSr-WPNxB"

@@ -274,113 +274,14 @@
{
"cell_type": "code",
"metadata": {
"id": "yKFE49BHaWTb",
"cellView": "form"
},
"source": [
"#@title <-- Press play on the music player to keep the tab alive, then you can continue with everything below (Uses only 13MB of data)\n",
"%%html\n",
"<b>Press play on the music player to keep the tab alive, then start your generation below (Uses only 13MB of data)</b><br/>\n",
"<audio src=\"https://henk.tech/colabkobold/silence.m4a\" controls>"
],
"execution_count": null,
"outputs": []
},
{
"cell_type": "markdown",
"metadata": {
"id": "-o8F1NCNTK2u"
},
"source": [
"JS to prevent idle timeout:\n",
"\n",
"Press F12 OR CTRL + SHIFT + I OR right click on this website -> inspect.\n",
"Then click on the console tab and paste in the following code.\n",
"\n",
"```javascript\n",
"function ClickConnect(){\n",
"console.log(\"Working\");\n",
"document.querySelector(\"colab-toolbar-button#connect\").click()\n",
"}\n",
"setInterval(ClickConnect,60000)\n",
"```"
]
},
{
"cell_type": "code",
"metadata": {
"cellView": "form",
"id": "eq0-E5mjSpmP"
},
"source": [
"#@markdown #**Check GPU type**\n",
"#@markdown ### Factory reset runtime if you don't have the desired GPU.\n",
"\n",
"#@markdown ---\n",
"\n",
"\n",
"\n",
"\n",
"#@markdown V100 = Excellent (*Available only for Colab Pro users*)\n",
"\n",
"#@markdown P100 = Very Good\n",
"\n",
"#@markdown T4 = Good (*preferred*)\n",
"\n",
"#@markdown K80 = Meh\n",
"\n",
"#@markdown P4 = (*Not Recommended*) \n",
"\n",
"#@markdown ---\n",
"\n",
"!nvidia-smi -L"
],
"execution_count": null,
"outputs": []
},
{
"cell_type": "markdown",
"source": [
"# Clone the repository and install dependencies."
],
"metadata": {
"id": "WcZH9VE6JOCd"
}
},
{
"cell_type": "code",
"execution_count": null,
"metadata": {
"id": "NG3JxFE6IreU"
},
"outputs": [],
"source": [
"!git clone https://github.com/sd-webui/stable-diffusion-webui.git"
]
},
{
"cell_type": "code",
"source": [
"%cd /content/stable-diffusion-webui/"
],
"metadata": {
"id": "pZHGf03Vp305"
},
"execution_count": null,
"outputs": []
},
{
"cell_type": "code",
"source": [
"!git checkout dev\n",
"!git pull"
],
"metadata": {
"id": "__8TYN2_jfga"
},
"execution_count": null,
"outputs": []
},
{
"cell_type": "code",
"source": [

@@ -402,10 +303,14 @@
{
"cell_type": "code",
"source": [
"!python --version"
"!git clone https://github.com/Sygil-Dev/sygil-webui.git\n",
"%cd /content/sygil-webui/\n",
"!git checkout dev\n",
"!git pull\n",
"!wget -O arial.ttf https://github.com/matomo-org/travis-scripts/blob/master/fonts/Arial.ttf?raw=true"
],
"metadata": {
"id": "xd_2zFWSfNCB"
"id": "pZHGf03Vp305"
},
"execution_count": null,
"outputs": []

@@ -424,32 +329,12 @@
{
"cell_type": "code",
"source": [
"%cd /content/stable-diffusion-webui/"
],
"metadata": {
"id": "vXX0OaR8KyLQ"
},
"execution_count": null,
"outputs": []
},
{
"cell_type": "code",
"source": [
"#@title Install dependencies.\n",
"!python --version\n",
"!pip install -r requirements.txt"
],
"metadata": {
"id": "REEG0zJtRC8w"
},
"execution_count": null,
"outputs": []
},
{
"cell_type": "code",
"source": [
"%cd /content/stable-diffusion-webui/"
],
"metadata": {
"id": "Kp1PjqxPijZ1"
"id": "vXX0OaR8KyLQ"
},
"execution_count": null,
"outputs": []

@@ -468,15 +353,39 @@
{
"cell_type": "markdown",
"source": [
"# Huggingface Token"
"#Launch the WebUI"
],
"metadata": {
"id": "RnlaaLAVGYal"
"id": "csi6cj6gQZmC"
}
},
{
"cell_type": "code",
"source": [
"#@title Mount Google Drive\n",
"import os\n",
"mount_google_drive = True #@param {type:\"boolean\"}\n",
"save_outputs_to_drive = True #@param {type:\"boolean\"}\n",
"\n",
"if mount_google_drive:\n",
" # Mount google drive to store your outputs.\n",
" from google.colab import drive\n",
" drive.mount('/content/drive/', force_remount=True)\n",
"\n",
"if save_outputs_to_drive:\n",
" os.makedirs(\"/content/drive/MyDrive/sygil-webui/outputs\", exist_ok=True)\n",
" os.symlink(\"/content/drive/MyDrive/sygil-webui/outputs\", \"/content/sygil-webui/outputs\", target_is_directory=True)\n"
],
"metadata": {
"id": "pcSWo9Zkzbsf"
},
"execution_count": null,
"outputs": []
},
{
"cell_type": "code",
"source": [
"#@title Enter Huggingface token\n",
"!git config --global credential.helper store\n",
"!huggingface-cli login"
],

@@ -486,36 +395,16 @@
"execution_count": null,
"outputs": []
},
{
"cell_type": "markdown",
"source": [
"# Google drive config"
],
"metadata": {
"id": "xMWVQOg0G1Pj"
}
},
{
"cell_type": "code",
"source": [
"import os, shutil\n",
"mount_google_drive = True #@param {type:\"boolean\"}\n",
"save_outputs_to_drive = True #@param {type:\"boolean\"}\n",
"#save_model_to_drive = True #@param {type:\"boolean\"}\n",
"\n",
"if mount_google_drive:\n",
" # Mount google drive to store your outputs.\n",
" from google.colab import drive\n",
" drive.mount('/content/drive/', force_remount=True)\n",
"\n",
"if save_outputs_to_drive:\n",
" os.makedirs(\"/content/drive/MyDrive/stable-diffusion-webui/outputs\", exist_ok=True)\n",
" #os.makedirs(\"/content/stable-diffusion-webui/outputs\", exist_ok=True)\n",
" os.symlink(\"/content/drive/MyDrive/stable-diffusion-webui/outputs\", \"/content/stable-diffusion-webui/outputs\", target_is_directory=True)\n"
"#@title <-- Press play on the music player to keep the tab alive (Uses only 13MB of data)\n",
"%%html\n",
"<b>Press play on the music player to keep the tab alive, then start your generation below (Uses only 13MB of data)</b><br/>\n",
"<audio src=\"https://henk.tech/colabkobold/silence.m4a\" controls>"
],
"metadata": {
"cellView": "form",
"id": "pcSWo9Zkzbsf"
"id": "-WknaU7uu_q6"
},
"execution_count": null,
"outputs": []
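Both the old and the new Google Drive cells call `os.symlink` unconditionally, and `os.symlink` raises `FileExistsError` when the destination already exists, for example when the cell is run a second time in the same session. A minimal re-runnable sketch, assuming the same two paths as the new cell. `link_outputs` is a hypothetical helper; it refuses to delete a non-empty local folder rather than guess what to do with its contents.

```python
import os


def link_outputs(drive_dir: str, local_dir: str) -> None:
    """Make local_dir a symlink to drive_dir; safe to run more than once."""
    os.makedirs(drive_dir, exist_ok=True)
    if os.path.islink(local_dir):
        if os.path.realpath(local_dir) == os.path.realpath(drive_dir):
            return  # already linked by an earlier run
        os.unlink(local_dir)  # a link that points somewhere else
    elif os.path.isdir(local_dir):
        if os.listdir(local_dir):
            # Do not silently discard existing images.
            raise RuntimeError(f"{local_dir} is not empty; move its files to Drive first")
        os.rmdir(local_dir)  # an empty folder can be replaced by the link
    os.symlink(drive_dir, local_dir, target_is_directory=True)


# Paths from the new "Mount Google Drive" cell:
# link_outputs("/content/drive/MyDrive/sygil-webui/outputs", "/content/sygil-webui/outputs")
```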

@@ -523,39 +412,26 @@
{
"cell_type": "markdown",
"source": [
"#Launch the WebUI"
],
"metadata": {
"id": "csi6cj6gQZmC"
}
},
{
"cell_type": "code",
"source": [
"!streamlit run scripts/webui_streamlit.py --theme.base dark --server.headless True &>/content/logs.txt &"
],
"metadata": {
"id": "SN7C9-dyRlkM"
},
"execution_count": null,
"outputs": []
},
{
"cell_type": "markdown",
"source": [
"## Expose the port 8501\n",
"Then just click in the `url` showed.\n",
"JS to prevent idle timeout:\n",
"\n",
"A `log.txt`file will be created."
"Press F12 OR CTRL + SHIFT + I OR right click on this website -> inspect. Then click on the console tab and paste in the following code.\n",
"\n",
"function ClickConnect(){\n",
"console.log(\"Working\");\n",
"document.querySelector(\"colab-toolbar-button#connect\").click()\n",
"}\n",
"setInterval(ClickConnect,60000)"
],
"metadata": {
"id": "h_KW9juhOCuH"
"id": "pjIjiCuJysJI"
}
},
{
"cell_type": "code",
"source": [
"!npx localtunnel --port 8501"
"#@title Open port 8501 and start Streamlit server. Open link in 'link.txt' file in file pane on left.\n",
"!npx localtunnel --port 8501 &>/content/link.txt &\n",
"!streamlit run scripts/webui_streamlit.py --theme.base dark --server.headless true 2>&1 | tee -a /content/log.txt"
],
"metadata": {
"id": "5whXm2nfSZ39"
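The new launch cell redirects localtunnel's output into `/content/link.txt` and asks you to open the link from that file. A minimal sketch that pulls the first `http(s)` URL out of that file, assuming localtunnel prints its public address somewhere in plain text; the exact wording may vary between versions, so the regex does not depend on it. `tunnel_url` and `read_tunnel_url` are hypothetical helpers.

```python
import re
from pathlib import Path
from typing import Optional

# Matches the first http(s) URL; does not rely on localtunnel's exact wording.
URL_RE = re.compile(r"https?://\S+")


def tunnel_url(text: str) -> Optional[str]:
    """Return the first http(s) URL in localtunnel's output, or None."""
    match = URL_RE.search(text)
    return match.group(0) if match else None


def read_tunnel_url(path: str = "/content/link.txt") -> Optional[str]:
    """Read the file the launch cell writes; returns None until it exists."""
    link_file = Path(path)
    if not link_file.is_file():
        return None
    return tunnel_url(link_file.read_text(errors="replace"))
```

Because localtunnel runs in the background, the file may be empty for a few seconds after the cell starts; calling `read_tunnel_url()` again later is enough.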

@@ -1,6 +1,6 @@
# This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).
# This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

# Copyright 2022 sd-webui team.
# Copyright 2022 Sygil-Dev team.
# This program is free software: you can redistribute it and/or modify
# it under the terms of the GNU Affero General Public License as published by
# the Free Software Foundation, either version 3 of the License, or

@@ -1,6 +1,6 @@
# This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).
# This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

# Copyright 2022 sd-webui team.
# Copyright 2022 Sygil-Dev team.
# This program is free software: you can redistribute it and/or modify
# it under the terms of the GNU Affero General Public License as published by
# the Free Software Foundation, either version 3 of the License, or

@@ -19,7 +19,7 @@
# You may add overrides in a file named "userconfig_streamlit.yaml" in this folder, which can contain any subset
# of the properties below.
general:
version: 1.20.0
version: 1.24.6
streamlit_telemetry: False
default_theme: dark
huggingface_token: ''
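The config header says `userconfig_streamlit.yaml` may contain any subset of the properties. One common way to apply such a partial file is a recursive merge: nested sections are merged key by key, and any other value replaces the default. A minimal sketch on plain dicts, assuming both files have already been parsed (for example with PyYAML's `yaml.safe_load`); the project's own loader may merge differently, and `merge_overrides` is a hypothetical name.

```python
import copy


def merge_overrides(defaults: dict, overrides: dict) -> dict:
    """Return a copy of `defaults` with `overrides` applied recursively.

    Nested mappings are merged key by key, so an override file only needs the
    keys it changes; any other value type replaces the default outright.
    """
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_overrides(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Illustrative values shaped like the `general:` section in this hunk.
defaults = {"general": {"version": "1.24.6", "default_theme": "dark", "huggingface_token": ""}}
user = {"general": {"default_theme": "light"}}
print(merge_overrides(defaults, user))
```

The deep copies keep the parsed defaults untouched, so the same defaults can be reused if the user file is reloaded.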

@@ -51,7 +51,7 @@ general:
save_format: "png"
skip_grid: False
skip_save: False
grid_format: "jpg:95"
grid_quality: 95
n_rows: -1
no_verify_input: False
no_half: False

@@ -212,7 +212,7 @@ txt2vid:
format: "%.5f"

beta_scheduler_type: "scaled_linear"
max_frames: 100
max_duration_in_seconds: 30

LDSR_config:
sampling_steps: 50

@@ -304,7 +304,7 @@ img2img:
write_info_files: True

img2txt:
batch_size: 420
batch_size: 2000
blip_image_eval_size: 512
keep_all_models_loaded: False

@@ -378,13 +378,21 @@ model_manager:
file_name: "trinart.ckpt"
download_link: "https://huggingface.co/naclbit/trinart_stable_diffusion_v2/resolve/main/trinart2_step95000.ckpt"

sd_wd_ld_trinart_merged:
model_name: "SD1.5-WD1.3-LD-Trinart-Merged"
save_location: "./models/custom"
files:
sd_wd_ld_trinart_merged:
file_name: "SD1.5-WD1.3-LD-Trinart-Merged.ckpt"
download_link: "https://huggingface.co/ZeroCool94/sd1.5-wd1.3-ld-trinart-merged/resolve/main/SD1.5-WD1.3-LD-Trinart-Merged.ckpt"

stable_diffusion_concept_library:
model_name: "Stable Diffusion Concept Library"
save_location: "./models/custom/sd-concepts-library/"
files:
concept_library:
file_name: ""
download_link: "https://github.com/sd-webui/sd-concepts-library"
download_link: "https://github.com/Sygil-Dev/sd-concepts-library"

blip_model:
model_name: "Blip Model"
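Each `model_manager` entry in this hunk pairs a `save_location` with one or more `files`, each carrying a `file_name` and a `download_link`. A minimal sketch that flattens entries of that shape into (link, destination) pairs, assuming the nesting visible here (entry, then `files`, then per-file keys) and treating an empty `file_name`, as in the concept library entry, as meaning the link is a repository rather than a single file. `download_targets` is hypothetical and only computes paths; it downloads nothing.

```python
from pathlib import PurePosixPath
from typing import Dict, List, Tuple


def download_targets(models: Dict[str, dict]) -> List[Tuple[str, str]]:
    """Flatten model_manager-style entries into (download_link, destination) pairs."""
    targets = []
    for entry in models.values():
        base = PurePosixPath(entry.get("save_location", "."))
        for file_info in entry.get("files", {}).values():
            name = file_info.get("file_name", "")
            # Empty file_name: assume the link is a repository to place under base.
            dest = base / name if name else base
            targets.append((file_info["download_link"], str(dest)))
    return targets


# Example entries copied from the new side of this hunk.
MODELS = {
    "sd_wd_ld_trinart_merged": {
        "model_name": "SD1.5-WD1.3-LD-Trinart-Merged",
        "save_location": "./models/custom",
        "files": {
            "sd_wd_ld_trinart_merged": {
                "file_name": "SD1.5-WD1.3-LD-Trinart-Merged.ckpt",
                "download_link": "https://huggingface.co/ZeroCool94/sd1.5-wd1.3-ld-trinart-merged/resolve/main/SD1.5-WD1.3-LD-Trinart-Merged.ckpt",
            }
        },
    },
    "stable_diffusion_concept_library": {
        "model_name": "Stable Diffusion Concept Library",
        "save_location": "./models/custom/sd-concepts-library/",
        "files": {
            "concept_library": {
                "file_name": "",
                "download_link": "https://github.com/Sygil-Dev/sd-concepts-library",
            }
        },
    },
}

for link, dest in download_targets(MODELS):
    print(dest, "<-", link)
```

`PurePosixPath` normalises `./models/custom` to `models/custom`, so destinations are printed relative to the WebUI folder.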

File diff suppressed because it is too large

File diff suppressed because it is too large

File diff suppressed because it is too large

102247 data/img2txt/flavors.txt

File diff suppressed because it is too large

160 data/img2txt/subreddits.txt (Normal file)

@@ -0,0 +1,160 @@
/r/ImaginaryAetherpunk
/r/ImaginaryAgriculture
/r/ImaginaryAirships
/r/ImaginaryAliens
/r/ImaginaryAngels
/r/ImaginaryAnimals
/r/ImaginaryArchers
/r/ImaginaryArchitecture
/r/ImaginaryArmor
/r/ImaginaryArtisans
/r/ImaginaryAssassins
/r/ImaginaryAstronauts
/r/ImaginaryAsylums
/r/ImaginaryAutumnscapes
/r/ImaginaryAviation
/r/ImaginaryAzeroth
/r/ImaginaryBattlefields
/r/ImaginaryBeasts
/r/ImaginaryBehemoths
/r/ImaginaryBodyscapes
/r/ImaginaryBooks
/r/ImaginaryCanyons
/r/ImaginaryCarnage
/r/ImaginaryCastles
/r/ImaginaryCaves
/r/ImaginaryCentaurs
/r/ImaginaryCharacters
/r/ImaginaryCityscapes
/r/ImaginaryClerics
/r/ImaginaryCowboys
/r/ImaginaryCrawlers
/r/ImaginaryCultists
/r/ImaginaryCybernetics
/r/ImaginaryCyberpunk
/r/ImaginaryDarkSouls
/r/ImaginaryDemons
/r/ImaginaryDerelicts
/r/ImaginaryDeserts
/r/ImaginaryDieselpunk
/r/ImaginaryDinosaurs
/r/ImaginaryDragons
/r/ImaginaryDruids
/r/ImaginaryDwarves
/r/ImaginaryDwellings
/r/ImaginaryElementals
/r/ImaginaryElves
/r/ImaginaryExplosions
/r/ImaginaryFactories
/r/ImaginaryFaeries
/r/ImaginaryFallout
/r/ImaginaryFamilies
/r/ImaginaryFashion
/r/ImaginaryFood
/r/ImaginaryForests
/r/ImaginaryFutureWar
/r/ImaginaryFuturism
/r/ImaginaryGardens
/r/ImaginaryGatherings
/r/ImaginaryGiants
/r/ImaginaryGlaciers
/r/ImaginaryGnomes
/r/ImaginaryGoblins
/r/ImaginaryHellscapes
/r/ImaginaryHistory
/r/ImaginaryHorrors
/r/ImaginaryHumans
/r/ImaginaryHybrids
/r/ImaginaryIcons
/r/ImaginaryImmortals
/r/ImaginaryInteriors
/r/ImaginaryIslands
/r/ImaginaryJedi
/r/ImaginaryKanto
/r/ImaginaryKnights
/r/ImaginaryLakes
/r/ImaginaryLandscapes
/r/ImaginaryLesbians
/r/ImaginaryLeviathans
/r/ImaginaryLovers
/r/ImaginaryMarvel
/r/ImaginaryMeIRL
/r/ImaginaryMechs
/r/ImaginaryMen
/r/ImaginaryMerchants
/r/ImaginaryMerfolk
/r/ImaginaryMiddleEarth
/r/ImaginaryMindscapes
/r/ImaginaryMonsterBoys
/r/ImaginaryMonsterGirls
/r/ImaginaryMonsters
/r/ImaginaryMonuments
/r/ImaginaryMountains
/r/ImaginaryMovies
/r/ImaginaryMythology
/r/ImaginaryNatives
/r/ImaginaryNecronomicon
/r/ImaginaryNightscapes
/r/ImaginaryNinjas
/r/ImaginaryNobles
/r/ImaginaryNomads
/r/ImaginaryOrcs
/r/ImaginaryPathways
/r/ImaginaryPirates
/r/ImaginaryPolice
/r/ImaginaryPolitics
/r/ImaginaryPortals
/r/ImaginaryPrisons
/r/ImaginaryPropaganda
/r/ImaginaryRivers
/r/ImaginaryRobotics
/r/ImaginaryRuins
/r/ImaginaryScholars
/r/ImaginaryScience
/r/ImaginarySeascapes
/r/ImaginarySkyscapes
/r/ImaginarySlavery
/r/ImaginarySoldiers
/r/ImaginarySpirits
/r/ImaginarySports
/r/ImaginarySpringscapes
/r/ImaginaryStarscapes
/r/ImaginaryStarships
/r/ImaginaryStatues
/r/ImaginarySteampunk
/r/ImaginarySummerscapes
/r/ImaginarySwamps
/r/ImaginaryTamriel
/r/ImaginaryTaverns
/r/ImaginaryTechnology
/r/ImaginaryTemples
/r/ImaginaryTowers
/r/ImaginaryTrees
/r/ImaginaryTrolls
/r/ImaginaryUndead
/r/ImaginaryUnicorns
/r/ImaginaryVampires
/r/ImaginaryVehicles
/r/ImaginaryVessels
/r/ImaginaryVikings
/r/ImaginaryVillages
/r/ImaginaryVolcanoes
/r/ImaginaryWTF
/r/ImaginaryWalls
/r/ImaginaryWarhammer
/r/ImaginaryWarriors
/r/ImaginaryWarships
/r/ImaginaryWastelands
/r/ImaginaryWaterfalls
/r/ImaginaryWaterscapes
/r/ImaginaryWeaponry
/r/ImaginaryWeather
/r/ImaginaryWerewolves
/r/ImaginaryWesteros
/r/ImaginaryWildlands
/r/ImaginaryWinterscapes
/r/ImaginaryWitcher
/r/ImaginaryWitches
/r/ImaginaryWizards
/r/ImaginaryWorldEaters
/r/ImaginaryWorlds

1936 data/img2txt/tags.txt (Normal file)

File diff suppressed because it is too large

63 data/img2txt/techniques.txt (Normal file)

@@ -0,0 +1,63 @@
Fine Art
Diagrammatic
Geometric
Architectural
Analytic
3D
Anamorphic
Pencil
Color Pencil
Charcoal
Graphite
Chalk
Pen
Ink
Crayon
Pastel
Sand
Beach Art
Rangoli
Mehndi
Flower
Food Art
Tattoo
Digital
Pixel
Embroidery
Line
Pointillism
Single Color
Stippling
Contour
Hatching
Scumbling
Scribble
Geometric Portait
Triangulation
Caricature
Photorealism
Photo realistic
Doodling
Wordtoons
Cartoon
Anime
Manga
Graffiti
Typography
Calligraphy
Mosaic
Figurative
Anatomy
Life
Still life
Portrait
Landscape
Perspective
Funny
Surreal
Wall Mural
Street
Realistic
Photo Realistic
Hyper Realistic
Doodle
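The `data/img2txt` lists added by this commit (flavors, subreddits, tags, techniques) read like vocabularies for the Image to Text feature. CLIP Interrogator builds a prompt by scoring candidate phrases against an image with CLIP and keeping the closest matches. A minimal sketch of the loading and ranking steps, assuming the CLIP embeddings have already been computed elsewhere (plain lists of floats stand in for them here). `load_labels`, `cosine` and `top_labels` are hypothetical helpers, not the project's API. Deduplication is exact and case-sensitive, so `Photo realistic` and `Photo Realistic` in `techniques.txt` both survive.

```python
import math
from pathlib import Path
from typing import Dict, List, Sequence, Tuple


def load_labels(path: str) -> List[str]:
    """Read one label per line, skipping blank lines and exact duplicates (order kept)."""
    seen, labels = set(), []
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        label = line.strip()
        if label and label not in seen:
            seen.add(label)
            labels.append(label)
    return labels


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_labels(
    image_emb: Sequence[float], label_embs: Dict[str, Sequence[float]], k: int = 3
) -> List[Tuple[str, float]]:
    """Rank labels by similarity to the image embedding and keep the best k."""
    scored = [(label, cosine(image_emb, emb)) for label, emb in label_embs.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]
```

In a real pipeline the label embeddings would be computed once per list and cached, since files like `flavors.txt` are large.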

@@ -1,10 +1,12 @@
---

title: Windows Installation
---
<!--
This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

Copyright 2022 sd-webui team.
<!--
This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

Copyright 2022 Sygil-Dev team.
This program is free software: you can redistribute it and/or modify
it under the terms of the GNU Affero General Public License as published by
the Free Software Foundation, either version 3 of the License, or

@@ -20,6 +22,7 @@ along with this program. If not, see <http://www.gnu.org/licenses/>.
-->

# Initial Setup

> This is a windows guide. [To install on Linux, see this page.](2.linux-installation.md)

## Pre requisites

@ -30,19 +33,18 @@ along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

|

||||

|

||||

|

||||

|

||||

* Download Miniconda3:

|

||||

[https://repo.anaconda.com/miniconda/Miniconda3-latest-Windows-x86_64.exe](https://repo.anaconda.com/miniconda/Miniconda3-latest-Windows-x86_64.exe) Get this installed so that you have access to the Miniconda3 Prompt Console.

|

||||

[https://repo.anaconda.com/miniconda/Miniconda3-latest-Windows-x86_64.exe](https://repo.anaconda.com/miniconda/Miniconda3-latest-Windows-x86_64.exe) Get this installed so that you have access to the Miniconda3 Prompt Console.

|

||||

|

||||

* Open Miniconda3 Prompt from your start menu after it has been installed

|

||||

|

||||

* _(Optional)_ Create a new text file in your root directory `/stable-diffusion-webui/custom-conda-path.txt` that contains the path to your relevant Miniconda3, for example `C:\Users\<username>\miniconda3` (replace `<username>` with your own username). This is required if you have more than 1 miniconda installation or are using custom installation location.

|

||||

* _(Optional)_ Create a new text file in your root directory `/sygil-webui/custom-conda-path.txt` that contains the path to your relevant Miniconda3, for example `C:\Users\<username>\miniconda3` (replace `<username>` with your own username). This is required if you have more than 1 miniconda installation or are using custom installation location.

|

||||

|

||||

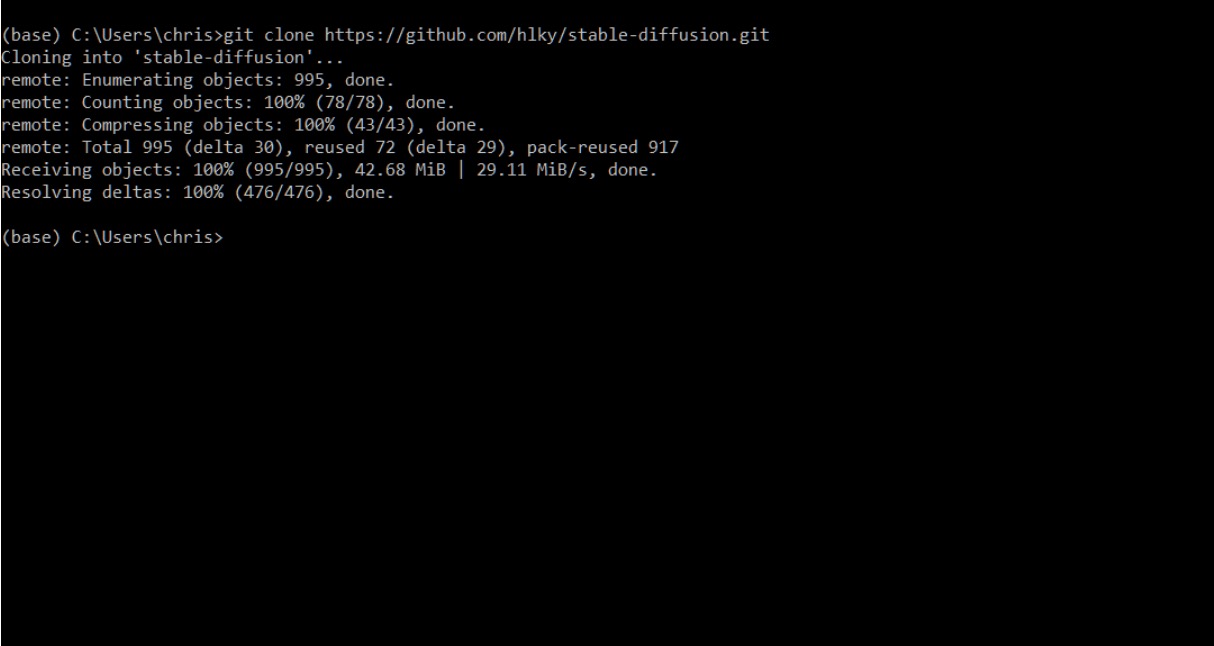

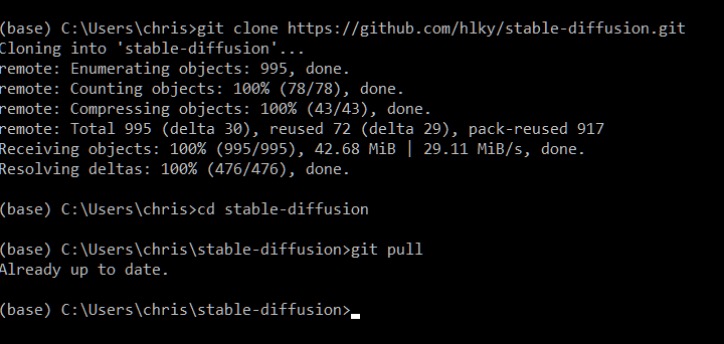

## Cloning the repo

|

||||

|

||||

Type `git clone https://github.com/sd-webui/stable-diffusion-webui.git` into the prompt.

|

||||

Type `git clone https://github.com/Sygil-Dev/sygil-webui.git` into the prompt.

|

||||

|

||||

This will create the `stable-diffusion-webui` directory in your Windows user folder.

|

||||

This will create the `sygil-webui` directory in your Windows user folder.

|

||||

|

||||

|

||||

---

|

||||

@ -51,35 +53,30 @@ Once a repo has been cloned, updating it is as easy as typing `git pull` inside

* Next you are going to want to create a Hugging Face account: [https://huggingface.co/](https://huggingface.co/)

* After you have signed up, and are signed in go to this link and click on Authorize: [https://huggingface.co/CompVis/stable-diffusion-v-1-4-original](https://huggingface.co/CompVis/stable-diffusion-v-1-4-original)

* After you have authorized your account, go to this link to download the model weights for version 1.4 of the model, future versions will be released in the same way, and updating them will be a similar process :

[https://huggingface.co/CompVis/stable-diffusion-v-1-4-original/resolve/main/sd-v1-4.ckpt](https://huggingface.co/CompVis/stable-diffusion-v-1-4-original/resolve/main/sd-v1-4.ckpt)

* Download the model into this directory: `C:\Users\<username>\stable-diffusion-webui\models\ldm\stable-diffusion-v1`

[https://huggingface.co/CompVis/stable-diffusion-v-1-4-original/resolve/main/sd-v1-4.ckpt](https://huggingface.co/CompVis/stable-diffusion-v-1-4-original/resolve/main/sd-v1-4.ckpt)

* Download the model into this directory: `C:\Users\<username>\sygil-webui\models\ldm\stable-diffusion-v1`

* Rename `sd-v1-4.ckpt` to `model.ckpt` once it is inside the stable-diffusion-v1 folder.

* Since we are already in our sygil-webui folder in Miniconda, our next step is to create the environment Stable Diffusion needs to work.

* Since we are already in our stable-diffusion-webui folder in Miniconda, our next step is to create the environment Stable Diffusion needs to work.

* _(Optional)_ If you already have an environment set up for an installation of Stable Diffusion named ldm open up the `environment.yaml` file in `\stable-diffusion-webui\` change the environment name inside of it from `ldm` to `ldo`

* _(Optional)_ If you already have an environment set up for an installation of Stable Diffusion named ldm open up the `environment.yaml` file in `\sygil-webui\` change the environment name inside of it from `ldm` to `ldo`

---

## First run

* `webui.cmd` at the root folder (`\stable-diffusion-webui\`) is your main script that you'll always run. It has the functions to automatically do the followings:

* Create conda env

* Install and update requirements

* Run the relauncher and webui.py script for gradio UI options

* `webui.cmd` at the root folder (`\sygil-webui\`) is your main script that you'll always run. It has the functions to automatically do the followings:

* Create conda env

* Install and update requirements

* Run the relauncher and webui.py script for gradio UI options

* Run `webui.cmd` by double clicking the file.

@ -95,34 +92,36 @@ Once a repo has been cloned, updating it is as easy as typing `git pull` inside

* You should be able to see progress in your `webui.cmd` window. The [http://localhost:7860/](http://localhost:7860/) will be automatically updated to show the final image once progress reach 100%

* Images created with the web interface will be saved to `\stable-diffusion-webui\outputs\` in their respective folders alongside `.yaml` text files with all of the details of your prompts for easy referencing later. Images will also be saved with their seed and numbered so that they can be cross referenced with their `.yaml` files easily.

* Images created with the web interface will be saved to `\sygil-webui\outputs\` in their respective folders alongside `.yaml` text files with all of the details of your prompts for easy referencing later. Images will also be saved with their seed and numbered so that they can be cross referenced with their `.yaml` files easily.

---

### Optional additional models

There are three more models that we need to download in order to get the most out of the functionality offered by sd-webui.

There are three more models that we need to download in order to get the most out of the functionality offered by Sygil-Dev.

> The models are placed inside `src` folder. If you don't have `src` folder inside your root directory it means that you haven't installed the dependencies for your environment yet. [Follow this step](#first-run) before proceeding.

### GFPGAN

1. If you want to use GFPGAN to improve generated faces, you need to install it separately.

1. Download [GFPGANv1.3.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth) and [GFPGANv1.4.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.4/GFPGANv1.4.pth) and put it into the `/stable-diffusion-webui/models/gfpgan` directory.

1. If you want to use GFPGAN to improve generated faces, you need to install it separately.

2. Download [GFPGANv1.3.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth) and [GFPGANv1.4.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.4/GFPGANv1.4.pth) and put it into the `/sygil-webui/models/gfpgan` directory.

### RealESRGAN

1. Download [RealESRGAN_x4plus.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth) and [RealESRGAN_x4plus_anime_6B.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth).

1. Put them into the `stable-diffusion-webui/models/realesrgan` directory.

2. Put them into the `sygil-webui/models/realesrgan` directory.

### LDSR

1. Detailed instructions [here](https://github.com/Hafiidz/latent-diffusion). Brief instruction as follows.

1. Git clone [Hafiidz/latent-diffusion](https://github.com/Hafiidz/latent-diffusion) into your `/stable-diffusion-webui/src/` folder.

1. Run `/stable-diffusion-webui/models/ldsr/download_model.bat` to automatically download and rename the models.

1. Wait until it is done and you can confirm by confirming two new files in `stable-diffusion-webui/models/ldsr/`

1. _(Optional)_ If there are no files there, you can manually download **LDSR** [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [model last.cpkt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1).

1. Rename last.ckpt to model.ckpt and place both under `stable-diffusion-webui/models/ldsr/`.

1. Refer to [here](https://github.com/sd-webui/stable-diffusion-webui/issues/488) for any issue.

1. Detailed instructions [here](https://github.com/Hafiidz/latent-diffusion). Brief instruction as follows.

2. Git clone [Hafiidz/latent-diffusion](https://github.com/Hafiidz/latent-diffusion) into your `/sygil-webui/src/` folder.

3. Run `/sygil-webui/models/ldsr/download_model.bat` to automatically download and rename the models.

4. Wait until it is done and you can confirm by confirming two new files in `sygil-webui/models/ldsr/`

5. _(Optional)_ If there are no files there, you can manually download **LDSR** [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [model last.cpkt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1).

6. Rename last.ckpt to model.ckpt and place both under `sygil-webui/models/ldsr/`.

7. Refer to [here](https://github.com/Sygil-Dev/sygil-webui/issues/488) for any issue.

# Credits

> Modified by [Hafiidz](https://github.com/Hafiidz) with helps from sd-webui discord and team.

> Modified by [Hafiidz](https://github.com/Hafiidz) with helps from Sygil-Dev discord and team.

@ -2,9 +2,9 @@

title: Linux Installation

---

<!--

This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

Copyright 2022 sd-webui team.

Copyright 2022 Sygil-Dev team.

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU Affero General Public License as published by

the Free Software Foundation, either version 3 of the License, or

@ -42,9 +42,9 @@ along with this program. If not, see <http://www.gnu.org/licenses/>.

**Step 3:** Make the script executable by opening the directory in your Terminal and typing `chmod +x linux-sd.sh`, or whatever you named this file as.

**Step 4:** Run the script with `./linux-sd.sh`, it will begin by cloning the [WebUI Github Repo](https://github.com/sd-webui/stable-diffusion-webui) to the directory the script is located in. This folder will be named `stable-diffusion-webui`.

**Step 4:** Run the script with `./linux-sd.sh`, it will begin by cloning the [WebUI Github Repo](https://github.com/Sygil-Dev/sygil-webui) to the directory the script is located in. This folder will be named `sygil-webui`.

**Step 5:** The script will pause and ask that you move/copy the downloaded 1.4 AI models to the `stable-diffusion-webui` folder. Press Enter once you have done so to continue.

**Step 5:** The script will pause and ask that you move/copy the downloaded 1.4 AI models to the `sygil-webui` folder. Press Enter once you have done so to continue.

**If you are running low on storage space, you can just move the 1.4 AI models file directly to this directory, it will not be deleted, simply moved and renamed. However my personal suggestion is to just **copy** it to the repo folder, in case you desire to delete and rebuild your Stable Diffusion build again.**

@ -76,7 +76,7 @@ The user will have the ability to set these to yes or no using the menu choices.

- Uses An Older Interface Style

- Will Not Receive Major Updates

**Step 9:** If everything has gone successfully, either a new browser window will open with the Streamlit version, or you should see `Running on local URL: http://localhost:7860/` in your Terminal if you launched the Gradio Interface version. Generated images will be located in the `outputs` directory inside of `stable-diffusion-webui`. Enjoy the definitive Stable Diffusion WebUI experience on Linux! :)

**Step 9:** If everything has gone successfully, either a new browser window will open with the Streamlit version, or you should see `Running on local URL: http://localhost:7860/` in your Terminal if you launched the Gradio Interface version. Generated images will be located in the `outputs` directory inside of `sygil-webui`. Enjoy the definitive Stable Diffusion WebUI experience on Linux! :)

## Ultimate Stable Diffusion Customizations

@ -87,7 +87,7 @@ If the user chooses to Customize their setup, then they will be presented with t

- Update the Stable Diffusion WebUI fork from the GitHub Repo

- Customize the launch arguments for Gradio Interface version of Stable Diffusion (See Above)

### Refer back to the original [WebUI Github Repo](https://github.com/sd-webui/stable-diffusion-webui) for useful tips and links to other resources that can improve your Stable Diffusion experience

### Refer back to the original [WebUI Github Repo](https://github.com/Sygil-Dev/sygil-webui) for useful tips and links to other resources that can improve your Stable Diffusion experience

## Planned Additions

- Investigate ways to handle Anaconda automatic installation on a user's system.

@ -2,7 +2,7 @@

title: Running Stable Diffusion WebUI Using Docker

---

<!--

This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

Copyright 2022 sd-webui team.

This program is free software: you can redistribute it and/or modify

@ -69,7 +69,7 @@ Additional Requirements:

Other Notes:

* "Optional" packages commonly used with Stable Diffusion WebUI workflows such as, RealESRGAN, GFPGAN, will be installed by default.

* An older version of running Stable Diffusion WebUI using Docker exists here: https://github.com/sd-webui/stable-diffusion-webui/discussions/922

* An older version of running Stable Diffusion WebUI using Docker exists here: https://github.com/Sygil-Dev/sygil-webui/discussions/922

### But what about AMD?

There is tentative support for AMD GPUs through docker which can be enabled via `docker-compose.amd.yml`,

@ -91,7 +91,7 @@ in your `.profile` or through a tool like `direnv`

### Clone Repository

* Clone this repository to your host machine:

* `git clone https://github.com/sd-webui/stable-diffusion-webui.git`

* `git clone https://github.com/Sygil-Dev/sygil-webui.git`

* If you plan to use Docker Compose to run the image in a container (most users), create an `.env_docker` file using the example file:

* `cp .env_docker.example .env_docker`

* Edit `.env_docker` using the text editor of your choice.

@ -105,7 +105,7 @@ The default `docker-compose.yml` file will create a Docker container instance n

* Create an instance of the Stable Diffusion WebUI image as a Docker container:

* `docker compose up`

* During the first run, the container image will be build containing all of the dependencies necessary to run Stable Diffusion. This build process will take several minutes to complete

* After the image build has completed, you will have a docker image for running the Stable Diffusion WebUI tagged `stable-diffusion-webui:dev`

* After the image build has completed, you will have a docker image for running the Stable Diffusion WebUI tagged `sygil-webui:dev`

(Optional) Daemon mode:

* You can start the container in "daemon" mode by applying the `-d` option: `docker compose up -d`. This will run the server in the background so you can close your console window without losing your work.

@ -160,9 +160,9 @@ You will need to re-download all associated model files/weights used by Stable D

* `docker exec -it st-webui /bin/bash`

* `docker compose exec stable-diffusion bash`

* To start a container using the Stable Diffusion WebUI Docker image without Docker Compose, you can do so with the following command:

* `docker run --rm -it --entrypoint /bin/bash stable-diffusion-webui:dev`

* `docker run --rm -it --entrypoint /bin/bash sygil-webui:dev`

* To start a container, with mapped ports, GPU resource access, and a local directory bound as a container volume, you can do so with the following command:

* `docker run --rm -it -p 8501:8501 -p 7860:7860 --gpus all -v $(pwd):/sd --entrypoint /bin/bash stable-diffusion-webui:dev`

* `docker run --rm -it -p 8501:8501 -p 7860:7860 --gpus all -v $(pwd):/sd --entrypoint /bin/bash sygil-webui:dev`

---

@ -2,9 +2,9 @@

title: Streamlit Web UI Interface

---

<!--

This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

Copyright 2022 sd-webui team.

Copyright 2022 Sygil-Dev team.

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU Affero General Public License as published by

the Free Software Foundation, either version 3 of the License, or

@ -94,7 +94,7 @@ Streamlit Image2Image allows for you to take an image, be it generated by Stable

The Concept Library allows for the easy usage of custom textual inversion models. These models may be loaded into `models/custom/sd-concepts-library` and will appear in the Concepts Library in Streamlit. To use one of these custom models in a prompt, either copy it using the button on the model, or type `<model-name>` in the prompt where you wish to use it.

Please see the [Concepts Library](https://github.com/sd-webui/stable-diffusion-webui/blob/master/docs/7.concepts-library.md) section to learn more about how to use these tools.

Please see the [Concepts Library](https://github.com/Sygil-Dev/sygil-webui/blob/master/docs/7.concepts-library.md) section to learn more about how to use these tools.

## Textual Inversion

---

@ -2,9 +2,9 @@

title: Gradio Web UI Interface

---

<!--

This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

Copyright 2022 sd-webui team.

Copyright 2022 Sygil-Dev team.

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU Affero General Public License as published by

the Free Software Foundation, either version 3 of the License, or

@ -2,9 +2,9 @@

title: Upscalers

---

<!--

This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

Copyright 2022 sd-webui team.

Copyright 2022 Sygil-Dev team.

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU Affero General Public License as published by

the Free Software Foundation, either version 3 of the License, or

@ -32,7 +32,7 @@ GFPGAN is designed to help restore faces in Stable Diffusion outputs. If you hav

If you want to use GFPGAN to improve generated faces, you need to download the models for it seperately if you are on Windows or doing so manually on Linux.

Download [GFPGANv1.3.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth) and put it

into the `/stable-diffusion-webui/models/gfpgan` directory after you have setup the conda environment for the first time.

into the `/sygil-webui/models/gfpgan` directory after you have setup the conda environment for the first time.

## RealESRGAN

---

@ -42,7 +42,7 @@ RealESRGAN is a 4x upscaler built into both versions of the Web UI interface. It

If you want to use RealESRGAN to upscale your images, you need to download the models for it seperately if you are on Windows or doing so manually on Linux.

Download [RealESRGAN_x4plus.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth) and [RealESRGAN_x4plus_anime_6B.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth).

Put them into the `stable-diffusion-webui/models/realesrgan` directory after you have setup the conda environment for the first time.

Put them into the `sygil-webui/models/realesrgan` directory after you have setup the conda environment for the first time.

## GoBig (Gradio only currently)

---

@ -57,7 +57,7 @@ To use GoBig, you will need to download the RealESRGAN models as directed above.

LSDR is a 4X upscaler with high VRAM usage that uses a Latent Diffusion model to upscale the image. This will accentuate the details of an image, but won't change the composition. This might introduce sharpening, but it is great for textures or compositions with plenty of details. However, it is slower and will use more VRAM.

If you want to use LSDR to upscale your images, you need to download the models for it seperately if you are on Windows or doing so manually on Linux.

Download the LDSR [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [ model last.cpkt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1). Rename `last.ckpt` to `model.ckpt` and place both in the `stable-diffusion-webui/models/ldsr` directory after you have setup the conda environment for the first time.

Download the LDSR [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [ model last.cpkt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1). Rename `last.ckpt` to `model.ckpt` and place both in the `sygil-webui/models/ldsr` directory after you have setup the conda environment for the first time.

## GoLatent (Gradio only currently)

---

@ -1,7 +1,7 @@

<!--

This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

Copyright 2022 sd-webui team.

Copyright 2022 Sygil-Dev team.

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU Affero General Public License as published by

the Free Software Foundation, either version 3 of the License, or

@ -2,9 +2,9 @@

title: Custom models

---

<!--

This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

Copyright 2022 sd-webui team.

Copyright 2022 Sygil-Dev team.

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU Affero General Public License as published by

the Free Software Foundation, either version 3 of the License, or

@ -1,7 +1,7 @@

#!/bin/bash

# This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

# This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

# Copyright 2022 sd-webui team.

# Copyright 2022 Sygil-Dev team.

# This program is free software: you can redistribute it and/or modify

# it under the terms of the GNU Affero General Public License as published by

# the Free Software Foundation, either version 3 of the License, or

@ -111,7 +111,7 @@ if [[ -e "${MODEL_DIR}/sd-concepts-library" ]]; then

else

# concept library does not exist, clone

cd ${MODEL_DIR}

git clone https://github.com/sd-webui/sd-concepts-library.git

git clone https://github.com/Sygil-Dev/sd-concepts-library.git

fi

# create directory and link concepts library

mkdir -p ${SCRIPT_DIR}/models/custom

@ -1,7 +1,7 @@

name: ldm

# This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

# This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

# Copyright 2022 sd-webui team.

# Copyright 2022 Sygil-Dev team.

# This program is free software: you can redistribute it and/or modify

# it under the terms of the GNU Affero General Public License as published by

# the Free Software Foundation, either version 3 of the License, or

@ -1,7 +1,7 @@

/*

This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

Copyright 2022 sd-webui team.

Copyright 2022 Sygil-Dev team.

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU Affero General Public License as published by

the Free Software Foundation, either version 3 of the License, or

@ -1,7 +1,7 @@

/*

This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

Copyright 2022 sd-webui team.

Copyright 2022 Sygil-Dev team.

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU Affero General Public License as published by

the Free Software Foundation, either version 3 of the License, or

@ -1,7 +1,7 @@

/*

This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

Copyright 2022 sd-webui team.

Copyright 2022 Sygil-Dev team.

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU Affero General Public License as published by

the Free Software Foundation, either version 3 of the License, or

@ -1,6 +1,6 @@

# This file is part of stable-diffusion-webui (https://github.com/sd-webui/stable-diffusion-webui/).

# This file is part of sygil-webui (https://github.com/Sygil-Dev/sygil-webui/).

# Copyright 2022 sd-webui team.