[pre-commit.ci] auto fixes from pre-commit.com hooks

for more information, see https://pre-commit.ci

@ -1,3 +1,3 @@

|

||||

outputs/

|

||||

src/

|

||||

configs/webui/userconfig_streamlit.yaml

|

||||

configs/webui/userconfig_streamlit.yaml

|

||||

|

||||

2

.gitattributes

vendored

@ -1,4 +1,4 @@

|

||||

* text=auto

|

||||

*.{cmd,[cC][mM][dD]} text eol=crlf

|

||||

*.{bat,[bB][aA][tT]} text eol=crlf

|

||||

*.sh text eol=lf

|

||||

*.sh text eol=lf

|

||||

|

||||

6

.github/ISSUE_TEMPLATE/bug_report.yml

vendored

@ -40,7 +40,7 @@ body:

|

||||

- type: dropdown

|

||||

id: os

|

||||

attributes:

|

||||

label: Where are you running the webui?

|

||||

label: Where are you running the webui?

|

||||

multiple: true

|

||||

options:

|

||||

- Windows

|

||||

@ -52,7 +52,7 @@ body:

|

||||

attributes:

|

||||

label: Custom settings

|

||||

description: If you are running the webui with specific settings, please paste them here for reference (like --nitro)

|

||||

render: shell

|

||||

render: shell

|

||||

- type: textarea

|

||||

id: logs

|

||||

attributes:

|

||||

@ -66,4 +66,4 @@ body:

|

||||

description: By submitting this issue, you agree to follow our [Code of Conduct](https://docs.github.com/en/site-policy/github-terms/github-community-code-of-conduct)

|

||||

options:

|

||||

- label: I agree to follow this project's Code of Conduct

|

||||

required: true

|

||||

required: true

|

||||

|

||||

2

.github/PULL_REQUEST_TEMPLATE.md

vendored

@ -13,4 +13,4 @@ Closes: # (issue)

|

||||

- [ ] I have changed the base branch to `dev`

|

||||

- [ ] I have performed a self-review of my own code

|

||||

- [ ] I have commented my code in hard-to-understand areas

|

||||

- [ ] I have made corresponding changes to the documentation

|

||||

- [ ] I have made corresponding changes to the documentation

|

||||

|

||||

2

.github/workflows/deploy.yml

vendored

@ -37,4 +37,4 @@ jobs:

|

||||

# The GH actions bot is used by default if you didn't specify the two fields.

|

||||

# You can swap them out with your own user credentials.

|

||||

user_name: github-actions[bot]

|

||||

user_email: 41898282+github-actions[bot]@users.noreply.github.com

|

||||

user_email: 41898282+github-actions[bot]@users.noreply.github.com

|

||||

|

||||

2

.github/workflows/test-deploy.yml

vendored

@ -21,4 +21,4 @@ jobs:

|

||||

- name: Install dependencies

|

||||

run: yarn install

|

||||

- name: Test build website

|

||||

run: yarn build

|

||||

run: yarn build

|

||||

|

||||

28

README.md

@ -6,7 +6,7 @@

|

||||

|

||||

## Installation instructions for:

|

||||

|

||||

- **[Windows](https://sygil-dev.github.io/sygil-webui/docs/Installation/windows-installation)**

|

||||

- **[Windows](https://sygil-dev.github.io/sygil-webui/docs/Installation/windows-installation)**

|

||||

- **[Linux](https://sygil-dev.github.io/sygil-webui/docs/Installation/linux-installation)**

|

||||

|

||||

### Want to ask a question or request a feature?

|

||||

@ -34,10 +34,10 @@ Check the [Contribution Guide](CONTRIBUTING.md)

|

||||

|

||||

* Run additional upscaling models on CPU to save VRAM

|

||||

|

||||

* Textual inversion: [Research Paper](https://textual-inversion.github.io/)

|

||||

* Textual inversion: [Research Paper](https://textual-inversion.github.io/)

|

||||

|

||||

* K-Diffusion Samplers: A great collection of samplers to use, including:

|

||||

|

||||

|

||||

- `k_euler`

|

||||

- `k_lms`

|

||||

- `k_euler_a`

|

||||

@ -95,8 +95,8 @@ An easy way to work with Stable Diffusion right from your browser.

|

||||

To give a token (tag recognized by the AI) a specific or increased weight (emphasis), add `:0.##` to the prompt, where `0.##` is a decimal that will specify the weight of all tokens before the colon.

|

||||

Ex: `cat:0.30, dog:0.70` or `guy riding a bicycle :0.7, incoming car :0.30`

|

||||

|

||||

Negative prompts can be added by using `###` , after which any tokens will be seen as negative.

|

||||

Ex: `cat playing with string ### yarn` will negate `yarn` from the generated image.

|

||||

Negative prompts can be added by using `###` , after which any tokens will be seen as negative.

|

||||

Ex: `cat playing with string ### yarn` will negate `yarn` from the generated image.

|

||||

|

||||

Negatives are a very powerful tool to get rid of contextually similar or related topics, but **be careful when adding them since the AI might see connections you can't**, and end up outputting gibberish
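The weighting and negative-prompt syntax described above can be illustrated with a small parser. This is a minimal sketch, not the webui's own implementation: the function name `split_prompt`, the comma as the separator between weighted parts, and the default weight of 1.0 for parts without a `:0.##` suffix are all assumptions made for illustration.

```python
from typing import List, Tuple


def split_prompt(prompt: str) -> Tuple[List[Tuple[str, float]], str]:
    """Split a prompt into weighted positive parts and a negative part.

    Hypothetical helper: everything after "###" is treated as the negative
    prompt, and each comma-separated positive part may end in ":<weight>".
    """
    positive, _, negative = prompt.partition("###")
    parts: List[Tuple[str, float]] = []
    for chunk in positive.split(","):
        chunk = chunk.strip()
        if not chunk:
            continue
        text, sep, weight = chunk.rpartition(":")
        if sep:
            try:
                parts.append((text.strip(), float(weight)))
                continue
            except ValueError:
                pass  # the text after the colon is not a number; keep the chunk whole
        parts.append((chunk, 1.0))  # assumed default weight
    return parts, negative.strip()
```

For example, `split_prompt("cat:0.30, dog:0.70")` yields the two weighted parts and an empty negative prompt, while `split_prompt("cat playing with string ### yarn")` yields one unweighted part and `"yarn"` as the negative prompt.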

|

||||

|

||||

@ -131,7 +131,7 @@ Lets you improve faces in pictures using the GFPGAN model. There is a checkbox i

|

||||

|

||||

If you want to use GFPGAN to improve generated faces, you need to install it separately.

|

||||

Download [GFPGANv1.4.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.4/GFPGANv1.4.pth) and put it

|

||||

into the `/sygil-webui/models/gfpgan` directory.

|

||||

into the `/sygil-webui/models/gfpgan` directory.

|

||||

|

||||

### RealESRGAN

|

||||

|

||||

@ -141,7 +141,7 @@ Lets you double the resolution of generated images. There is a checkbox in every

|

||||

There is also a separate tab for using RealESRGAN on any picture.

|

||||

|

||||

Download [RealESRGAN_x4plus.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth) and [RealESRGAN_x4plus_anime_6B.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth).

|

||||

Put them into the `sygil-webui/models/realesrgan` directory.

|

||||

Put them into the `sygil-webui/models/realesrgan` directory.

|

||||

|

||||

### LDSR

|

||||

|

||||

@ -174,8 +174,8 @@ which is available on [GitHub](https://github.com/CompVis/latent-diffusion). PDF

|

||||

|

||||

[Stable Diffusion](#stable-diffusion-v1) is a latent text-to-image diffusion

|

||||

model.

|

||||

Thanks to a generous compute donation from [Stability AI](https://stability.ai/) and support from [LAION](https://laion.ai/), we were able to train a Latent Diffusion Model on 512x512 images from a subset of the [LAION-5B](https://laion.ai/blog/laion-5b/) database.

|

||||

Similar to Google's [Imagen](https://arxiv.org/abs/2205.11487),

|

||||

Thanks to a generous compute donation from [Stability AI](https://stability.ai/) and support from [LAION](https://laion.ai/), we were able to train a Latent Diffusion Model on 512x512 images from a subset of the [LAION-5B](https://laion.ai/blog/laion-5b/) database.

|

||||

Similar to Google's [Imagen](https://arxiv.org/abs/2205.11487),

|

||||

this model uses a frozen CLIP ViT-L/14 text encoder to condition the model on text prompts.

|

||||

With its 860M UNet and 123M text encoder, the model is relatively lightweight and runs on a GPU with at least 10GB VRAM.

|

||||

See [this section](#stable-diffusion-v1) below and the [model card](https://huggingface.co/CompVis/stable-diffusion).

|

||||

@ -184,26 +184,26 @@ See [this section](#stable-diffusion-v1) below and the [model card](https://hugg

|

||||

|

||||

Stable Diffusion v1 refers to a specific configuration of the model

|

||||

architecture that uses a downsampling-factor 8 autoencoder with an 860M UNet

|

||||

and CLIP ViT-L/14 text encoder for the diffusion model. The model was pretrained on 256x256 images and

|

||||

and CLIP ViT-L/14 text encoder for the diffusion model. The model was pretrained on 256x256 images and

|

||||

then finetuned on 512x512 images.

|

||||

|

||||

*Note: Stable Diffusion v1 is a general text-to-image diffusion model and therefore mirrors biases and (mis-)conceptions that are present

|

||||

in its training data.

|

||||

in its training data.

|

||||

Details on the training procedure and data, as well as the intended use of the model can be found in the corresponding [model card](https://huggingface.co/CompVis/stable-diffusion).

|

||||

|

||||

## Comments

|

||||

|

||||

- Our code base for the diffusion models builds heavily on [OpenAI's ADM codebase](https://github.com/openai/guided-diffusion)

|

||||

and [https://github.com/lucidrains/denoising-diffusion-pytorch](https://github.com/lucidrains/denoising-diffusion-pytorch).

|

||||

and [https://github.com/lucidrains/denoising-diffusion-pytorch](https://github.com/lucidrains/denoising-diffusion-pytorch).

|

||||

Thanks for open-sourcing!

|

||||

|

||||

- The implementation of the transformer encoder is from [x-transformers](https://github.com/lucidrains/x-transformers) by [lucidrains](https://github.com/lucidrains?tab=repositories).

|

||||

- The implementation of the transformer encoder is from [x-transformers](https://github.com/lucidrains/x-transformers) by [lucidrains](https://github.com/lucidrains?tab=repositories).

|

||||

|

||||

## BibTeX

|

||||

|

||||

```

|

||||

@misc{rombach2021highresolution,

|

||||

title={High-Resolution Image Synthesis with Latent Diffusion Models},

|

||||

title={High-Resolution Image Synthesis with Latent Diffusion Models},

|

||||

author={Robin Rombach and Andreas Blattmann and Dominik Lorenz and Patrick Esser and Björn Ommer},

|

||||

year={2021},

|

||||

eprint={2112.10752},

|

||||

|

||||

@ -21,7 +21,7 @@ This model card focuses on the model associated with the Stable Diffusion model,

|

||||

|

||||

# Uses

|

||||

|

||||

## Direct Use

|

||||

## Direct Use

|

||||

The model is intended for research purposes only. Possible research areas and

|

||||

tasks include

|

||||

|

||||

@ -68,11 +68,11 @@ Using the model to generate content that is cruel to individuals is a misuse of

|

||||

considerations.

|

||||

|

||||

### Bias

|

||||

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.

|

||||

Stable Diffusion v1 was trained on subsets of [LAION-2B(en)](https://laion.ai/blog/laion-5b/),

|

||||

which consists of images that are primarily limited to English descriptions.

|

||||

Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for.

|

||||

This affects the overall output of the model, as white and western cultures are often set as the default. Further, the

|

||||

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.

|

||||

Stable Diffusion v1 was trained on subsets of [LAION-2B(en)](https://laion.ai/blog/laion-5b/),

|

||||

which consists of images that are primarily limited to English descriptions.

|

||||

Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for.

|

||||

This affects the overall output of the model, as white and western cultures are often set as the default. Further, the

|

||||

ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts.

|

||||

|

||||

|

||||

@ -84,7 +84,7 @@ The model developers used the following dataset for training the model:

|

||||

- LAION-2B (en) and subsets thereof (see next section)

|

||||

|

||||

**Training Procedure**

|

||||

Stable Diffusion v1 is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. During training,

|

||||

Stable Diffusion v1 is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. During training,

|

||||

|

||||

- Images are encoded through an encoder, which turns images into latent representations. The autoencoder uses a relative downsampling factor of 8 and maps images of shape H x W x 3 to latents of shape H/f x W/f x 4

|

||||

- Text prompts are encoded through a ViT-L/14 text-encoder.
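As a quick check of the shape arithmetic above, a downsampling factor of f = 8 maps a 512x512x3 image to a 64x64x4 latent. The helper below is a hypothetical illustration; the name `latent_shape` and its parameters are not taken from the model code, and the divisibility check is an added assumption.

```python
def latent_shape(height: int, width: int, factor: int = 8, latent_channels: int = 4):
    """Return the (H/f, W/f, C) latent shape for an H x W x 3 input image."""
    if height % factor or width % factor:
        # Assumption: inputs are expected to be multiples of the factor.
        raise ValueError("height and width must be divisible by the downsampling factor")
    return (height // factor, width // factor, latent_channels)


print(latent_shape(512, 512))  # (64, 64, 4)
```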

|

||||

@ -108,12 +108,12 @@ filtered to images with an original size `>= 512x512`, estimated aesthetics scor

|

||||

- **Batch:** 32 x 8 x 2 x 4 = 2048

|

||||

- **Learning rate:** warmup to 0.0001 for 10,000 steps and then kept constant
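The learning-rate schedule listed above can be sketched as a function of the step count. The source only says the rate is warmed up to 0.0001 over 10,000 steps and then kept constant; the linear shape of the ramp and the name `learning_rate` are assumptions.

```python
def learning_rate(step: int, peak_lr: float = 1e-4, warmup_steps: int = 10_000) -> float:
    """Linear warmup (assumed) to peak_lr, then constant."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    return peak_lr


# The batch-size product quoted in the list above.
assert 32 * 8 * 2 * 4 == 2048
```

Under these assumptions the rate is halfway to its peak at step 5,000 and stays at 0.0001 from step 10,000 onward.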

|

||||

|

||||

## Evaluation Results

|

||||

## Evaluation Results

|

||||

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0,

|

||||

5.0, 6.0, 7.0, 8.0) and 50 PLMS sampling

|

||||

steps show the relative improvements of the checkpoints:

|

||||

|

||||

|

||||

|

||||

|

||||

Evaluated using 50 PLMS steps and 10000 random prompts from the COCO2017 validation set, evaluated at 512x512 resolution. Not optimized for FID scores.

|

||||

## Environmental Impact

|

||||

@ -137,4 +137,3 @@ Based on that information, we estimate the following CO2 emissions using the [Ma

|

||||

}

|

||||

|

||||

*This model card was written by: Robin Rombach and Patrick Esser and is based on the [DALL-E Mini model card](https://huggingface.co/dalle-mini/dalle-mini).*

|

||||

|

||||

|

||||

@ -582,4 +582,4 @@

|

||||

"outputs": []

|

||||

}

|

||||

]

|

||||

}

|

||||

}

|

||||

|

||||

@ -23,7 +23,7 @@ Hopefully demand will be high, we want to train **hundreds** of new concepts!

|

||||

|

||||

# What does `most inventive use` mean?

|

||||

|

||||

Whatever you want it to mean! Be creative! Experiment!

|

||||

Whatever you want it to mean! Be creative! Experiment!

|

||||

|

||||

There are several categories we will look at:

|

||||

|

||||

@ -33,7 +33,7 @@ There are several categories we will look at:

|

||||

|

||||

* composition; meaning anything related to how big things are, their position, the angle, etc

|

||||

|

||||

* styling;

|

||||

* styling;

|

||||

|

||||

|

||||

|

||||

@ -45,7 +45,7 @@ There are several categories we will look at:

|

||||

|

||||

## `The Sims(TM): Stable Diffusion edition` ?

|

||||

|

||||

For this event the theme is “The Sims: Stable Diffusion edition”.

|

||||

For this event the theme is “The Sims: Stable Diffusion edition”.

|

||||

|

||||

So we have selected a subset of [products from Amazon Berkeley Objects dataset](https://github.com/sd-webui/abo).

|

||||

|

||||

|

||||

@ -17,5 +17,5 @@

|

||||

"type_vocab_size": 2,

|

||||

"vocab_size": 30522,

|

||||

"encoder_width": 768,

|

||||

"add_cross_attention": true

|

||||

"add_cross_attention": true

|

||||

}

|

||||

|

||||

@ -21,7 +21,7 @@ init_lr: 1e-5

|

||||

image_size: 384

|

||||

|

||||

# generation configs

|

||||

max_length: 20

|

||||

max_length: 20

|

||||

min_length: 5

|

||||

num_beams: 3

|

||||

prompt: 'a picture of '

|

||||

@ -30,4 +30,3 @@ prompt: 'a picture of '

|

||||

weight_decay: 0.05

|

||||

min_lr: 0

|

||||

max_epoch: 5

|

||||

|

||||

|

||||

@ -17,5 +17,5 @@

|

||||

"type_vocab_size": 2,

|

||||

"vocab_size": 30524,

|

||||

"encoder_width": 768,

|

||||

"add_cross_attention": true

|

||||

"add_cross_attention": true

|

||||

}

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

image_root: '/export/share/datasets/vision/NLVR2/'

|

||||

image_root: '/export/share/datasets/vision/NLVR2/'

|

||||

ann_root: 'annotation'

|

||||

|

||||

# set pretrained as a file path or an url

|

||||

@ -6,8 +6,8 @@ pretrained: 'https://storage.googleapis.com/sfr-vision-language-research/BLIP/mo

|

||||

|

||||

#size of vit model; base or large

|

||||

vit: 'base'

|

||||

batch_size_train: 16

|

||||

batch_size_test: 64

|

||||

batch_size_train: 16

|

||||

batch_size_test: 64

|

||||

vit_grad_ckpt: False

|

||||

vit_ckpt_layer: 0

|

||||

max_epoch: 15

|

||||

@ -18,4 +18,3 @@ image_size: 384

|

||||

weight_decay: 0.05

|

||||

init_lr: 3e-5

|

||||

min_lr: 0

|

||||

|

||||

|

||||

@ -12,4 +12,4 @@ image_size: 384

|

||||

max_length: 20

|

||||

min_length: 5

|

||||

num_beams: 3

|

||||

prompt: 'a picture of '

|

||||

prompt: 'a picture of '

|

||||

|

||||

@ -1,7 +1,7 @@

|

||||

train_file: ['/export/share/junnan-li/VL_pretrain/annotation/coco_karpathy_train.json',

|

||||

'/export/share/junnan-li/VL_pretrain/annotation/vg_caption.json',

|

||||

]

|

||||

laion_path: ''

|

||||

laion_path: ''

|

||||

|

||||

# size of vit model; base or large

|

||||

vit: 'base'

|

||||

@ -22,6 +22,3 @@ warmup_lr: 1e-6

|

||||

lr_decay_rate: 0.9

|

||||

max_epoch: 20

|

||||

warmup_steps: 3000

|

||||

|

||||

|

||||

|

||||

|

||||

@ -31,4 +31,3 @@ negative_all_rank: True

|

||||

weight_decay: 0.05

|

||||

min_lr: 0

|

||||

max_epoch: 6

|

||||

|

||||

|

||||

@ -31,4 +31,3 @@ negative_all_rank: False

|

||||

weight_decay: 0.05

|

||||

min_lr: 0

|

||||

max_epoch: 6

|

||||

|

||||

|

||||

@ -9,4 +9,4 @@ vit: 'base'

|

||||

batch_size: 64

|

||||

k_test: 128

|

||||

image_size: 384

|

||||

num_frm_test: 8

|

||||

num_frm_test: 8

|

||||

|

||||

@ -8,8 +8,8 @@ pretrained: 'https://storage.googleapis.com/sfr-vision-language-research/BLIP/mo

|

||||

|

||||

# size of vit model; base or large

|

||||

vit: 'base'

|

||||

batch_size_train: 16

|

||||

batch_size_test: 32

|

||||

batch_size_train: 16

|

||||

batch_size_test: 32

|

||||

vit_grad_ckpt: False

|

||||

vit_ckpt_layer: 0

|

||||

init_lr: 2e-5

|

||||

@ -22,4 +22,4 @@ inference: 'rank'

|

||||

# optimizer

|

||||

weight_decay: 0.05

|

||||

min_lr: 0

|

||||

max_epoch: 10

|

||||

max_epoch: 10

|

||||

|

||||

@ -83,4 +83,4 @@ lightning:

|

||||

increase_log_steps: False

|

||||

|

||||

trainer:

|

||||

benchmark: True

|

||||

benchmark: True

|

||||

|

||||

@ -95,4 +95,4 @@ lightning:

|

||||

increase_log_steps: False

|

||||

|

||||

trainer:

|

||||

benchmark: True

|

||||

benchmark: True

|

||||

|

||||

@ -15,7 +15,7 @@ model:

|

||||

conditioning_key: crossattn

|

||||

monitor: val/loss

|

||||

use_ema: False

|

||||

|

||||

|

||||

unet_config:

|

||||

target: ldm.modules.diffusionmodules.openaimodel.UNetModel

|

||||

params:

|

||||

@ -37,7 +37,7 @@ model:

|

||||

use_spatial_transformer: true

|

||||

transformer_depth: 1

|

||||

context_dim: 512

|

||||

|

||||

|

||||

first_stage_config:

|

||||

target: ldm.models.autoencoder.VQModelInterface

|

||||

params:

|

||||

@ -59,7 +59,7 @@ model:

|

||||

dropout: 0.0

|

||||

lossconfig:

|

||||

target: torch.nn.Identity

|

||||

|

||||

|

||||

cond_stage_config:

|

||||

target: ldm.modules.encoders.modules.ClassEmbedder

|

||||

params:

|

||||

|

||||

@ -82,4 +82,4 @@ lightning:

|

||||

increase_log_steps: False

|

||||

|

||||

trainer:

|

||||

benchmark: True

|

||||

benchmark: True

|

||||

|

||||

@ -82,4 +82,4 @@ lightning:

|

||||

increase_log_steps: False

|

||||

|

||||

trainer:

|

||||

benchmark: True

|

||||

benchmark: True

|

||||

|

||||

@ -88,4 +88,4 @@ lightning:

|

||||

|

||||

|

||||

trainer:

|

||||

benchmark: True

|

||||

benchmark: True

|

||||

|

||||

@ -65,4 +65,4 @@ model:

|

||||

lossconfig:

|

||||

target: torch.nn.Identity

|

||||

cond_stage_config:

|

||||

target: torch.nn.Identity

|

||||

target: torch.nn.Identity

|

||||

|

||||

@ -70,5 +70,3 @@ model:

|

||||

params:

|

||||

freeze: True

|

||||

layer: "penultimate"

|

||||

|

||||

|

||||

|

||||

@ -73,4 +73,3 @@ model:

|

||||

params:

|

||||

freeze: True

|

||||

layer: "penultimate"

|

||||

|

||||

|

||||

@ -12,7 +12,7 @@

|

||||

# GNU Affero General Public License for more details.

|

||||

|

||||

# You should have received a copy of the GNU Affero General Public License

|

||||

# along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

# along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

|

||||

# UI defaults configuration file. Is read automatically if located at configs/webui/webui.yaml, or specify path via --defaults.

|

||||

|

||||

|

||||

@ -436,4 +436,4 @@ model_manager:

|

||||

files:

|

||||

sygil_diffusion:

|

||||

file_name: "sygil-diffusion-v0.4.ckpt"

|

||||

download_link: "https://huggingface.co/Sygil/Sygil-Diffusion/resolve/main/sygil-diffusion-v0.4.ckpt"

|

||||

download_link: "https://huggingface.co/Sygil/Sygil-Diffusion/resolve/main/sygil-diffusion-v0.4.ckpt"

|

||||

|

||||

48

daisi_app.py

@@ -1,26 +1,46 @@
 import os, subprocess
 import yaml

-print (os.getcwd)
+print(os.getcwd)

 try:
-    with open("environment.yaml") as file_handle:
-        environment_data = yaml.safe_load(file_handle, Loader=yaml.FullLoader)
+    with open("environment.yaml") as file_handle:
+        environment_data = yaml.safe_load(file_handle, Loader=yaml.FullLoader)
 except FileNotFoundError:
-    try:
-        with open(os.path.join("..", "environment.yaml")) as file_handle:
-            environment_data = yaml.safe_load(file_handle, Loader=yaml.FullLoader)
-    except:
-        pass
+    try:
+        with open(os.path.join("..", "environment.yaml")) as file_handle:
+            environment_data = yaml.safe_load(file_handle, Loader=yaml.FullLoader)
+    except:
+        pass

 try:
-    for dependency in environment_data["dependencies"]:
-        package_name, package_version = dependency.split("=")
-        os.system("pip install {}=={}".format(package_name, package_version))
+    for dependency in environment_data["dependencies"]:
+        package_name, package_version = dependency.split("=")
+        os.system("pip install {}=={}".format(package_name, package_version))
 except:
-    pass
+    pass

 try:
-    subprocess.run(['python', '-m', 'streamlit', "run" ,os.path.join("..","scripts/webui_streamlit.py"), "--theme.base dark"], stdout=subprocess.DEVNULL)
+    subprocess.run(
+        [
+            "python",
+            "-m",
+            "streamlit",
+            "run",
+            os.path.join("..", "scripts/webui_streamlit.py"),
+            "--theme.base dark",
+        ],
+        stdout=subprocess.DEVNULL,
+    )
 except FileExistsError:
-    subprocess.run(['python', '-m', 'streamlit', "run" ,"scripts/webui_streamlit.py", "--theme.base dark"], stdout=subprocess.DEVNULL)
+    subprocess.run(
+        [
+            "python",
+            "-m",
+            "streamlit",
+            "run",
+            "scripts/webui_streamlit.py",
+            "--theme.base dark",
+        ],
+        stdout=subprocess.DEVNULL,
+    )

|

||||

|

||||
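The `daisi_app.py` hunk above is a formatting-only change, so a few pre-existing problems in the script survive it. `yaml.safe_load` takes only the stream, so the `Loader=` keyword raises a `TypeError` that the `except FileNotFoundError` clause does not catch. `print(os.getcwd)` prints the function object instead of the directory. Passing `"--theme.base dark"` as a single list item is probably not parsed as an option plus a value. Finally, `subprocess.run` does not raise `FileExistsError` when the script path is missing. The block below is a hedged sketch of one way to address these, not the project's actual fix; the helper names (`load_environment`, `pip_requirements`, `streamlit_command`, `main`) are hypothetical, and `pip_requirements` only handles plain `name=version` pins.

```python
import os
import subprocess
import sys


def load_environment(candidates=("environment.yaml", os.path.join("..", "environment.yaml"))):
    """Return the parsed environment file from the first path that exists, else None."""
    import yaml  # PyYAML; imported lazily so the helpers below run without it

    for path in candidates:
        try:
            with open(path) as file_handle:
                # safe_load takes only the stream; it has no Loader argument.
                return yaml.safe_load(file_handle)
        except FileNotFoundError:
            continue
    return None


def pip_requirements(dependencies):
    """Turn conda-style "name=version" strings into pip "name==version" strings."""
    requirements = []
    for dependency in dependencies:
        if not isinstance(dependency, str):
            continue  # e.g. a nested {"pip": [...]} mapping
        name, _, version = dependency.partition("=")
        # Tolerate "name==version" and drop an optional conda build string.
        version = version.lstrip("=").split("=")[0]
        requirements.append(f"{name}=={version}" if version else name)
    return requirements


def streamlit_command(script_path):
    """Build the argv list; the option name and its value are separate items."""
    return [sys.executable, "-m", "streamlit", "run", script_path, "--theme.base", "dark"]


def main():
    print(os.getcwd())  # call the function rather than printing the function object
    environment_data = load_environment() or {}
    for requirement in pip_requirements(environment_data.get("dependencies", [])):
        subprocess.run([sys.executable, "-m", "pip", "install", requirement], check=False)
    # Pick the script location explicitly instead of relying on an exception.
    script = os.path.join("..", "scripts", "webui_streamlit.py")
    if not os.path.exists(script):
        script = os.path.join("scripts", "webui_streamlit.py")
    subprocess.run(streamlit_command(script), stdout=subprocess.DEVNULL)
```

Calling `main()` would reproduce the original flow: install the pinned dependencies, then launch the Streamlit UI from whichever script path exists.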

@ -10580,4 +10580,4 @@ zdzisław beksinski

|

||||

Ödön Márffy

|

||||

Þórarinn B Þorláksson

|

||||

Þórarinn B. Þorláksson

|

||||

Ștefan Luchian

|

||||

Ștefan Luchian

|

||||

|

||||

@ -102634,4 +102634,4 @@ zzislaw beksinski

|

||||

🦑 design

|

||||

🦩🪐🐞👩🏻🦳

|

||||

🧒 📸 🎨

|

||||

🪔 🎨;🌞🌄

|

||||

🪔 🎨;🌞🌄

|

||||

|

||||

@ -101,4 +101,4 @@ graffiti art

|

||||

lineart

|

||||

pixel art

|

||||

poster art

|

||||

vector art

|

||||

vector art

|

||||

|

||||

@ -197,4 +197,4 @@ verdadism

|

||||

video art

|

||||

viennese actionism

|

||||

visual art

|

||||

vorticism

|

||||

vorticism

|

||||

|

||||

@ -15,4 +15,4 @@ reddit

|

||||

shutterstock

|

||||

tumblr

|

||||

unsplash

|

||||

zbrush central

|

||||

zbrush central

|

||||

|

||||

@ -157,4 +157,4 @@

|

||||

/r/ImaginaryWitches

|

||||

/r/ImaginaryWizards

|

||||

/r/ImaginaryWorldEaters

|

||||

/r/ImaginaryWorlds

|

||||

/r/ImaginaryWorlds

|

||||

|

||||

@ -1799,7 +1799,7 @@ vacbed

|

||||

vaginal-birth

|

||||

vaginal-sticker

|

||||

vampire

|

||||

variant-set

|

||||

variant-set

|

||||

vegetablenabe

|

||||

vel

|

||||

very-long-hair

|

||||

@ -1933,4 +1933,4 @@ zenzai-monaka

|

||||

zijou

|

||||

zin-crow

|

||||

zinkurou

|

||||

zombie

|

||||

zombie

|

||||

|

||||

@ -23,7 +23,7 @@ Food Art

|

||||

Tattoo

|

||||

Digital

|

||||

Pixel

|

||||

Embroidery

|

||||

Embroidery

|

||||

Line

|

||||

Pointillism

|

||||

Single Color

|

||||

@ -60,4 +60,4 @@ Street

|

||||

Realistic

|

||||

Photo Realistic

|

||||

Hyper Realistic

|

||||

Doodle

|

||||

Doodle

|

||||

|

||||

@ -6,7 +6,7 @@ denoising_strength: 0.55

|

||||

variation: 3

|

||||

initial_seed: 1

|

||||

|

||||

# put foreground onto background

|

||||

# put foreground onto background

|

||||

size: 512, 512

|

||||

color: 0,0,0

|

||||

|

||||

@ -16,7 +16,7 @@ color:0,0,0,0

|

||||

resize: 300, 300

|

||||

pos: 256, 350

|

||||

|

||||

// select mask by probing some pixels from the image

|

||||

// select mask by probing some pixels from the image

|

||||

mask_by_color_at: 15, 15, 15, 256, 85, 465, 100, 480

|

||||

mask_by_color_threshold:80

|

||||

mask_by_color_space: HLS

|

||||

|

||||

@ -27,7 +27,7 @@ transform3d_max_mask: 255

|

||||

transform3d_inpaint_radius: 1

|

||||

transform3d_inpaint_method: 0

|

||||

|

||||

## put foreground onto background

|

||||

## put foreground onto background

|

||||

size: 512, 512

|

||||

|

||||

|

||||

|

||||

@ -4,7 +4,7 @@ ddim_steps: 50

|

||||

denoising_strength: 0.5

|

||||

initial_seed: 2

|

||||

|

||||

# put foreground onto background

|

||||

# put foreground onto background

|

||||

size: 512, 512

|

||||

|

||||

## create foreground

|

||||

|

||||

@ -23,4 +23,4 @@

|

||||

"8": ["seagreen", "darkseagreen"]

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

@ -36701,4 +36701,4 @@

|

||||

}

|

||||

]

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

@ -14,7 +14,7 @@ Home Page: https://github.com/Sygil-Dev/sygil-webui

|

||||

|

||||

- Open the `installer` folder and copy the `install.bat` to the root folder next to the `webui.bat`

|

||||

|

||||

- Double-click the `install.bat` file and wait for it to handle everything for you.

|

||||

- Double-click the `install.bat` file and wait for it to handle everything for you.

|

||||

|

||||

### Installation on Linux:

|

||||

|

||||

@ -26,4 +26,4 @@ Home Page: https://github.com/Sygil-Dev/sygil-webui

|

||||

|

||||

- Wait for the installer to handle everything for you.

|

||||

|

||||

After installation, you can run the `webui.cmd` file (on Windows) or `webui.sh` file (on Linux/Mac) to start the WebUI.

|

||||

After installation, you can run the `webui.cmd` file (on Windows) or `webui.sh` file (on Linux/Mac) to start the WebUI.

|

||||

|

||||

@ -37,45 +37,45 @@ along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

|

||||

* Open Miniconda3 Prompt from your start menu after it has been installed

|

||||

|

||||

* _(Optional)_ Create a new text file in your root directory `/sygil-webui/custom-conda-path.txt` that contains the path to your relevant Miniconda3, for example `C:\Users\<username>\miniconda3` (replace `<username>` with your own username). This is required if you have more than one Miniconda installation or are using a custom installation location.

|

||||

* _(Optional)_ Create a new text file in your root directory `/sygil-webui/custom-conda-path.txt` that contains the path to your relevant Miniconda3, for example `C:\Users\<username>\miniconda3` (replace `<username>` with your own username). This is required if you have more than one Miniconda installation or are using a custom installation location.

|

||||

|

||||

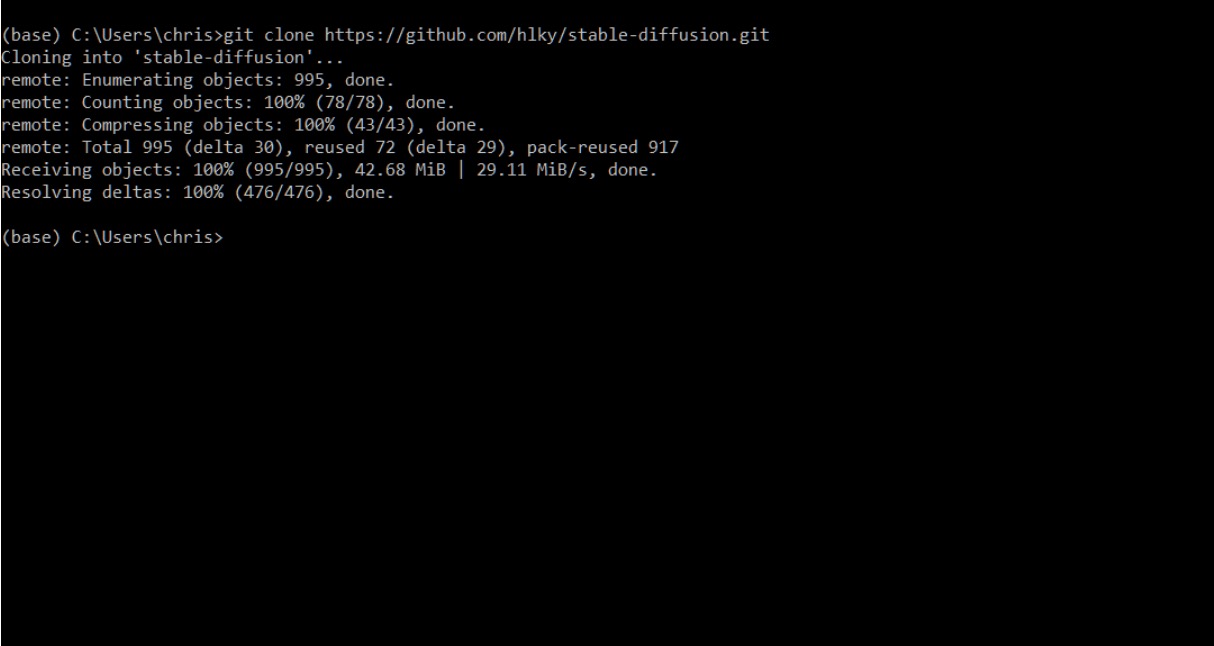

## Cloning the repo

|

||||

|

||||

Type `git clone https://github.com/Sygil-Dev/sygil-webui.git` into the prompt.

|

||||

Type `git clone https://github.com/Sygil-Dev/sygil-webui.git` into the prompt.

|

||||

|

||||

This will create the `sygil-webui` directory in your Windows user folder.

|

||||

This will create the `sygil-webui` directory in your Windows user folder.

|

||||

|

||||

|

||||

---

|

||||

---

|

||||

|

||||

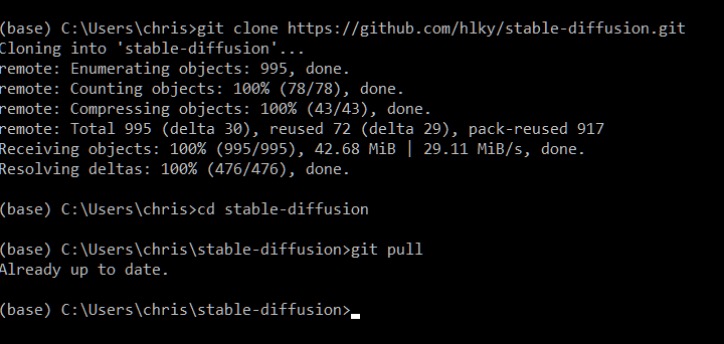

Once a repo has been cloned, updating it is as easy as typing `git pull` inside of Miniconda when in the repo’s topmost directory downloaded by the clone command. Below you can see I used the `cd` command to navigate into that folder.

|

||||

|

||||

|

||||

|

||||

* Next you are going to want to create a Hugging Face account: [https://huggingface.co/](https://huggingface.co/)

|

||||

* Next you are going to want to create a Hugging Face account: [https://huggingface.co/](https://huggingface.co/)

|

||||

|

||||

* After you have signed up and signed in, go to this link and click on Authorize: [https://huggingface.co/CompVis/stable-diffusion-v-1-4-original](https://huggingface.co/CompVis/stable-diffusion-v-1-4-original)

|

||||

* After you have signed up and signed in, go to this link and click on Authorize: [https://huggingface.co/CompVis/stable-diffusion-v-1-4-original](https://huggingface.co/CompVis/stable-diffusion-v-1-4-original)

|

||||

|

||||

* After you have authorized your account, go to this link to download the model weights for version 1.4 of the model. Future versions will be released in the same way, and updating them will be a similar process:

|

||||

* After you have authorized your account, go to this link to download the model weights for version 1.4 of the model. Future versions will be released in the same way, and updating them will be a similar process:

|

||||

[https://huggingface.co/CompVis/stable-diffusion-v-1-4-original/resolve/main/sd-v1-4.ckpt](https://huggingface.co/CompVis/stable-diffusion-v-1-4-original/resolve/main/sd-v1-4.ckpt)

|

||||

|

||||

* Download the model into this directory: `C:\Users\<username>\sygil-webui\models\ldm\stable-diffusion-v1`

|

||||

|

||||

* Rename `sd-v1-4.ckpt` to `model.ckpt` once it is inside the stable-diffusion-v1 folder.

|

||||

|

||||

* Since we are already in our sygil-webui folder in Miniconda, our next step is to create the environment Stable Diffusion needs to work.

|

||||

* Since we are already in our sygil-webui folder in Miniconda, our next step is to create the environment Stable Diffusion needs to work.

|

||||

|

||||

* _(Optional)_ If you already have an environment named `ldm` set up for another installation of Stable Diffusion, open the `environment.yaml` file in `\sygil-webui\` and change the environment name inside it from `ldm` to `ldo`.
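The download-and-rename steps above can be sketched in Python. The target directory and file name follow the guide; `install_checkpoint` and its arguments are hypothetical names, and the refusal to overwrite an existing `model.ckpt` is an added safety assumption rather than something the guide prescribes.

```python
import shutil
from pathlib import Path


def install_checkpoint(downloaded, repo_root):
    """Move a downloaded checkpoint to models/ldm/stable-diffusion-v1/model.ckpt."""
    target_dir = Path(repo_root) / "models" / "ldm" / "stable-diffusion-v1"
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / "model.ckpt"
    if target.exists():
        # Assumption: never silently replace weights that are already installed.
        raise FileExistsError(f"{target} already exists")
    shutil.move(str(downloaded), str(target))
    return target
```

A call such as `install_checkpoint(Path.home() / "Downloads" / "sd-v1-4.ckpt", Path.home() / "sygil-webui")` would cover both the move and the rename; the download location in that call is only an example.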

|

||||

|

||||

---

|

||||

---

|

||||

|

||||

## First run

|

||||

|

||||

* `webui.cmd` at the root folder (`\sygil-webui\`) is the main script that you'll always run. It automatically does the following:

|

||||

|

||||

* Create conda env

|

||||

|

||||

* Create conda env

|

||||

* Install and update requirements

|

||||

* Run the relauncher and webui.py script for gradio UI options

|

||||

* Run the relauncher and webui.py script for gradio UI options

|

||||

|

||||

* Run `webui.cmd` by double clicking the file.

|

||||

|

||||

@ -83,7 +83,7 @@ Once a repo has been cloned, updating it is as easy as typing `git pull` inside

|

||||

|

||||

|

||||

|

||||

* You'll receive warning messages about **GFPGAN**, **RealESRGAN** and **LDSR**, but these are optional and are explained further below.

|

||||

* You'll receive warning messages about **GFPGAN**, **RealESRGAN** and **LDSR**, but these are optional and are explained further below.

|

||||

|

||||

* In the meantime, you can now go to your web browser and open the link to [http://localhost:7860/](http://localhost:7860/).

|

||||

|

||||

@ -91,9 +91,9 @@ Once a repo has been cloned, updating it is as easy as typing `git pull` inside

|

||||

|

||||

* You should be able to see progress in your `webui.cmd` window. The page at [http://localhost:7860/](http://localhost:7860/) will be automatically updated to show the final image once progress reaches 100%.

|

||||

|

||||

* Images created with the web interface will be saved to `\sygil-webui\outputs\` in their respective folders alongside `.yaml` text files with all of the details of your prompts for easy referencing later. Images will also be saved with their seed and numbered so that they can be cross referenced with their `.yaml` files easily.

|

||||

* Images created with the web interface will be saved to `\sygil-webui\outputs\` in their respective folders alongside `.yaml` text files with all of the details of your prompts for easy referencing later. Images will also be saved with their seed and numbered so that they can be cross referenced with their `.yaml` files easily.

|

||||

|

||||

---

|

||||

---

|

||||

|

||||

### Optional additional models

|

||||

|

||||

@ -104,12 +104,12 @@ There are three more models that we need to download in order to get the most ou

|

||||

### GFPGAN

|

||||

|

||||

1. If you want to use GFPGAN to improve generated faces, you need to install it separately.

|

||||

2. Download [GFPGANv1.3.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth) and [GFPGANv1.4.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.4/GFPGANv1.4.pth) and put them into the `/sygil-webui/models/gfpgan` directory.

|

||||

2. Download [GFPGANv1.3.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth) and [GFPGANv1.4.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.4/GFPGANv1.4.pth) and put them into the `/sygil-webui/models/gfpgan` directory.

|

||||

|

||||

### RealESRGAN

|

||||

|

||||

1. Download [RealESRGAN_x4plus.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth) and [RealESRGAN_x4plus_anime_6B.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth).

|

||||

2. Put them into the `sygil-webui/models/realesrgan` directory.

|

||||

2. Put them into the `sygil-webui/models/realesrgan` directory.

|

||||

|

||||

### LDSR

|

||||

|

||||

@ -117,7 +117,7 @@ There are three more models that we need to download in order to get the most ou

|

||||

2. Git clone [Hafiidz/latent-diffusion](https://github.com/Hafiidz/latent-diffusion) into your `/sygil-webui/src/` folder.

|

||||

3. Run `/sygil-webui/models/ldsr/download_model.bat` to automatically download and rename the models.

|

||||

4. Wait until it finishes. You can confirm that it worked by checking for two new files in `sygil-webui/models/ldsr/`.

|

||||

5. _(Optional)_ If there are no files there, you can manually download the **LDSR** [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [model last.ckpt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1).

|

||||

5. _(Optional)_ If there are no files there, you can manually download the **LDSR** [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [model last.ckpt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1).

|

||||

6. Rename `last.ckpt` to `model.ckpt` and place both files under `sygil-webui/models/ldsr/`.

|

||||

7. If you run into any issues, refer to [this issue thread](https://github.com/Sygil-Dev/sygil-webui/issues/488).
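The file locations from the GFPGAN, RealESRGAN and LDSR steps above can be checked with a short script. This is only a sketch under stated assumptions: the file and folder names are taken from the steps above, while the helper name `missing_optional_models` is hypothetical and not part of the project.

```python
from pathlib import Path

# Expected files, taken from the download steps above.
OPTIONAL_MODELS = {
    "gfpgan": ["GFPGANv1.3.pth", "GFPGANv1.4.pth"],
    "realesrgan": ["RealESRGAN_x4plus.pth", "RealESRGAN_x4plus_anime_6B.pth"],
    "ldsr": ["project.yaml", "model.ckpt"],
}


def missing_optional_models(webui_root):
    """Return relative paths of optional model files that are not present.

    Hypothetical helper for illustration; it only checks that files exist.
    """
    models_dir = Path(webui_root) / "models"
    missing = []
    for folder, filenames in OPTIONAL_MODELS.items():
        for name in filenames:
            if not (models_dir / folder / name).is_file():
                missing.append(f"models/{folder}/{name}")
    return missing


if __name__ == "__main__":
    # Assumes the script is run from the folder that contains sygil-webui.
    for path in missing_optional_models("sygil-webui"):
        print("missing:", path)
```

An empty result means all six optional files are where the steps above say they should be. It does not verify that the downloads are complete or uncorrupted.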

|

||||

|

||||

|

||||

@ -46,7 +46,7 @@ along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

|

||||

**Step 3:** Make the script executable by opening the directory in your Terminal and typing `chmod +x linux-sd.sh` (or whatever you named the file).

|

||||

|

||||

**Step 4:** Run the script with `./linux-sd.sh`. It will begin by cloning the [WebUI GitHub repo](https://github.com/Sygil-Dev/sygil-webui) into the directory where the script is located. This folder will be named `sygil-webui`.

|

||||

**Step 4:** Run the script with `./linux-sd.sh`. It will begin by cloning the [WebUI GitHub repo](https://github.com/Sygil-Dev/sygil-webui) into the directory where the script is located. This folder will be named `sygil-webui`.

|

||||

|

||||

**Step 5:** The script will pause and ask that you move/copy the downloaded 1.4 AI models to the `sygil-webui` folder. Press Enter once you have done so to continue.

|

||||

|

||||

@ -67,8 +67,8 @@ The user will have the ability to set these to yes or no using the menu choices.

|

||||

|

||||

**Building the Conda environment may take upwards of 15 minutes, depending on your network connection and system specs. This is normal; just leave it be and let it finish. If you are trying to update and the script hangs at `Installing PIP Dependencies` for more than 10 minutes, press `Ctrl-C` to stop the script, delete your `src` folder, and rerun `linux-sd.sh`.**

|

||||

|

||||

**Step 8:** Once the conda environment has been created and the upscaler models have been downloaded, the user is asked to choose between the Streamlit and Gradio versions of the WebUI interface.

|

||||

- Streamlit:

|

||||

**Step 8:** Once the conda environment has been created and the upscaler models have been downloaded, the user is asked to choose between the Streamlit and Gradio versions of the WebUI interface.

|

||||

- Streamlit:

|

||||

- Has A More Modern UI

|

||||

- More Features Planned

|

||||

- Will Be The Main UI Going Forward

|

||||

|

||||

@ -56,9 +56,9 @@ Requirements:

|

||||

* Host computer is AMD64 architecture (e.g. Intel/AMD x86 64-bit CPUs)

|

||||

* Host computer operating system (Linux or Windows with WSL2 enabled)

|

||||

* See [Microsoft WSL2 Installation Guide for Windows 10](https://learn.microsoft.com/en-us/windows/wsl/) for more information on installing.

|

||||

* Ubuntu (Default) for WSL2 is recommended for Windows users

|

||||

* Ubuntu (Default) for WSL2 is recommended for Windows users

|

||||

* Host computer has Docker, or compatible container runtime

|

||||

* Docker Compose v1.29 or later

|

||||

* Docker Compose v1.29 or later

|

||||

* See [Install Docker Engine](https://docs.docker.com/engine/install/#supported-platforms) to learn more about installing Docker on your Linux operating system

|

||||

* 10+ GB Free Disk Space (used by Docker base image, the Stable Diffusion WebUI Docker image for dependencies, model files/weights)

|

||||

|

||||

@ -78,7 +78,7 @@ to issues with AMDs support of GPU passthrough. You also _must_ have ROCm driver

|

||||

```

|

||||

docker compose -f docker-compose.yml -f docker-compose.amd.yml ...

|

||||

```

|

||||

or, by setting

|

||||

or, by setting

|

||||

```

|

||||

export COMPOSE_FILE=docker-compose.yml:docker-compose.amd.yml

|

||||

```

|

||||

|

||||

@ -98,22 +98,22 @@ It is hierarchical, so each layer can have their own child layers.

|

||||

In the frontend you can find a brief documentation for the syntax, examples and reference for the various arguments.

|

||||

Here is a summary:

|

||||

|

||||

Markdown headings, e.g. '# layer0', define layers.

|

||||

The content of each section defines the arguments for image generation.

|

||||

Markdown headings, e.g. '# layer0', define layers.

|

||||

The content of each section defines the arguments for image generation.

|

||||

Arguments are defined by lines of the form 'arg:value' or 'arg=value'.

|

||||

|

||||

Layers are hierarchical, i.e. each layer can contain more layers.

|

||||

Layers are hierarchical, i.e. each layer can contain more layers.

|

||||

The number of '#' characters increases in the headings of child layers.

|

||||

Child layers are blended together by their image masks, like layers in image editors.

|

||||

By default alpha composition is used for blending.

|

||||

By default alpha composition is used for blending.

|

||||

Other blend modes from [ImageChops](https://pillow.readthedocs.io/en/stable/reference/ImageChops.html) can also be used.

|

||||

|

||||

Sections with "prompt" and child layers invoke Image2Image; without child layers they invoke Text2Image.

|

||||

Sections with "prompt" and child layers invoke Image2Image; without child layers they invoke Text2Image.

|

||||

The result of blending child layers will be the input for Image2Image.

|

||||

|

||||

Without "prompt" they are just images, useful for mask selection, image composition, etc.

|

||||

Images can be initialized with "color", resized with "resize" and their position specified with "pos".

|

||||

Rotation and rotation center are "rotation" and "center".

|

||||

Rotation and rotation center are "rotation" and "center".

|

||||

|

||||

Masks can be selected automatically by color, by the color at given pixels of the image, or by estimated depth.
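To make the syntax summarized above concrete, here is a minimal sketch of how such a description could be read into a layer tree. It is not the project's own parser: the function `parse_layers`, the dictionary layout, and the example argument values are all hypothetical. Only the heading rule, the `arg:value` / `arg=value` rule, and the Image2Image/Text2Image rule come from the summary.

```python
import re


def parse_layers(text):
    """Parse heading-delimited sections into a nested layer tree (illustrative only)."""
    root = {"name": None, "args": {}, "children": []}
    stack = [(0, root)]  # (heading depth, layer); the root sits at depth 0
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        heading = re.match(r"^(#+)\s*(.*)$", line)
        if heading:
            depth = len(heading.group(1))
            layer = {"name": heading.group(2), "args": {}, "children": []}
            # Pop back to the nearest layer that is shallower than this heading.
            while stack[-1][0] >= depth:
                stack.pop()
            stack[-1][1]["children"].append(layer)
            stack.append((depth, layer))
            continue
        arg = re.match(r"^([^:=]+)[:=](.*)$", line)
        if arg:
            # Arguments belong to the most recently opened layer.
            stack[-1][1]["args"][arg.group(1).strip()] = arg.group(2).strip()
    return root


# Made-up scene: the argument names come from the summary, the values are invented.
scene = """
# layer0
prompt: a castle on a hill
## sky
color: blue
## ground
prompt=green meadow
"""

tree = parse_layers(scene)
top = tree["children"][0]
# A section with "prompt" and child layers maps to Image2Image, otherwise Text2Image.
mode = "Image2Image" if top["children"] else "Text2Image"
print(top["name"], mode)
```

Here `layer0` has a prompt and two child layers, so under the rules above it would be generated with Image2Image from the blended children.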

|

||||

|

||||

@ -128,15 +128,15 @@ The poses describe the camera position and orientation as x,y,z,rotate_x,rotate_

|

||||

The camera coordinate system is the pinhole camera as described and pictured in [OpenCV "Camera Calibration and 3D Reconstruction" documentation](https://docs.opencv.org/4.x/d9/d0c/group__calib3d.html).

|

||||

|

||||

When the camera pose `transform3d_from_pose` at which the input image was taken is not specified, the target camera pose `transform3d_to_pose` is expressed in the coordinate system of the input camera:

|

||||

Walking forwards one depth unit in the input image corresponds to a position `0,0,1`.

|

||||

Walking to the right is something like `1,0,0`.

|

||||

Walking forwards one depth unit in the input image corresponds to a position `0,0,1`.

|

||||

Walking to the right is something like `1,0,0`.

|

||||

Going downwards is then `0,1,0`.
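The three examples above follow the axis convention of the pinhole camera model: x points right, y points down, and z points forward. A small sketch of that mapping is shown below. The helper names are hypothetical, and the sketch covers only the position part of a pose, not the rotation components.

```python
def position_in_camera_frame(forward=0.0, right=0.0, down=0.0):
    """Map steps relative to the input image to an (x, y, z) position.

    Hypothetical helper: x is right, y is down, z is forward.
    """
    return (right, down, forward)


def format_position(position):
    """Format a position as a comma-separated string such as 0,0,1."""
    return ",".join(f"{value:g}" for value in position)


print(format_position(position_in_camera_frame(forward=1)))  # 0,0,1
print(format_position(position_in_camera_frame(right=1)))    # 1,0,0
print(format_position(position_in_camera_frame(down=1)))     # 0,1,0
```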

|

||||

|

||||

## Gradio Optional Customizations

|

||||

|

||||

---

|

||||

|

||||

Gradio allows for a number of possible customizations via command line arguments/terminal parameters. If you are running these manually, they would need to be run like this: `python scripts/webui.py --param`. Otherwise, you may add your own parameter customizations to `scripts/relauncher.py`, the program that automatically relaunches the Gradio interface should a crash happen.

|

||||

Gradio allows for a number of possible customizations via command line arguments/terminal parameters. If you are running these manually, they would need to be run like this: `python scripts/webui.py --param`. Otherwise, you may add your own parameter customizations to `scripts/relauncher.py`, the program that automatically relaunches the Gradio interface should a crash happen.
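As a rough illustration of how extra parameters end up on the `webui.py` command line, the sketch below builds the argument list from a single string. It is an assumption-laden example, not the contents of `scripts/relauncher.py`: the function name `build_webui_command` is hypothetical, and the flags in the example are taken from the usage text of `webui.py`.

```python
import shlex
import sys


def build_webui_command(additional_arguments=""):
    """Build the argument list for launching scripts/webui.py.

    Hypothetical helper; the real relauncher may assemble its command differently.
    """
    return [sys.executable, "scripts/webui.py", *shlex.split(additional_arguments)]


if __name__ == "__main__":
    # Example: run both optional upscalers on the CPU.
    command = build_webui_command("--gfpgan-cpu --esrgan-cpu")
    print(" ".join(command))
    # To actually launch, pass the list to subprocess.run(command)
    # from the sygil-webui folder.
```

Keeping the command as a list rather than a single string avoids quoting problems when it is handed to `subprocess.run`.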

|

||||

|

||||

Inside of `relauncher.py` are a few preset defaults most people would likely access:

|

||||

|

||||

@ -171,7 +171,7 @@ additional_arguments = ""

|

||||

|

||||

---

|

||||

|

||||

This is a list of the full set of optional parameters you can launch the Gradio Interface with.

|

||||

This is a list of the full set of optional parameters you can launch the Gradio Interface with.

|

||||

|

||||

```

|

||||

usage: webui.py [-h] [--ckpt CKPT] [--cli CLI] [--config CONFIG] [--defaults DEFAULTS] [--esrgan-cpu] [--esrgan-gpu ESRGAN_GPU] [--extra-models-cpu] [--extra-models-gpu] [--gfpgan-cpu] [--gfpgan-dir GFPGAN_DIR] [--gfpgan-gpu GFPGAN_GPU] [--gpu GPU]

|

||||

|

||||

@ -61,7 +61,7 @@ To use GoBig, you will need to download the RealESRGAN models as directed above.

|

||||

LDSR is a 4X upscaler that uses a Latent Diffusion model to upscale the image. It accentuates the details of an image without changing the composition. It might introduce sharpening, but it is great for textures or compositions with plenty of detail. However, it is slower and uses more VRAM.

|

||||

|

||||

If you want to use LDSR to upscale your images, you need to download its models separately if you are on Windows or are installing manually on Linux.

|

||||

Download the LDSR [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [model last.ckpt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1). Rename `last.ckpt` to `model.ckpt` and place both in the `sygil-webui/models/ldsr` directory after you have set up the conda environment for the first time.

|

||||

Download the LDSR [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [model last.ckpt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1). Rename `last.ckpt` to `model.ckpt` and place both in the `sygil-webui/models/ldsr` directory after you have set up the conda environment for the first time.

|

||||

|

||||

## GoLatent (Gradio only currently)

|

||||

|

||||

|

||||

@ -6,4 +6,4 @@

|

||||

|

||||

|

||||

|

||||

The Concept Library allows for the easy usage of custom textual inversion models. These models may be loaded into `models/custom/sd-concepts-library` and will appear in the Concepts Library in Streamlit. To use one of these custom models in a prompt, either copy it using the button on the model, or type `<model-name>` in the prompt where you wish to use it.

|

||||

The Concept Library allows for the easy usage of custom textual inversion models. These models may be loaded into `models/custom/sd-concepts-library` and will appear in the Concepts Library in Streamlit. To use one of these custom models in a prompt, either copy it using the button on the model, or type `<model-name>` in the prompt where you wish to use it.

|

||||

|

||||

@ -18,7 +18,7 @@ You should have received a copy of the GNU Affero General Public License

|

||||

along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

-->

|

||||

|

||||

You can use other *versions* of Stable Diffusion, and *fine-tunes* of Stable Diffusion.

|

||||

You can use other *versions* of Stable Diffusion, and *fine-tunes* of Stable Diffusion.

|

||||

|

||||

Any model with the `.ckpt` extension can be placed into the `models/custom` folder and used in the UI. The model's filename is what appears in the UI's drop-down menu, where you select the custom model to use, so give it a filename you will recognize.
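To preview which names such a drop-down would have to choose from, the `.ckpt` files in the folder can be listed with a few lines of Python. This is only a sketch: the function name is hypothetical, and whether the UI shows the filename with or without its extension is an assumption made here for illustration.

```python
from pathlib import Path


def list_custom_models(custom_dir="models/custom"):
    """Return the names of the .ckpt files in the custom models folder.

    Hypothetical helper; names are returned without the .ckpt extension.
    """
    folder = Path(custom_dir)
    if not folder.is_dir():
        return []
    return sorted(
        path.stem
        for path in folder.iterdir()
        if path.is_file() and path.suffix == ".ckpt"
    )


if __name__ == "__main__":
    # Assumes the script is run from the sygil-webui folder.
    for name in list_custom_models():
        print(name)
```

Subfolders and files with other extensions are skipped, so only checkpoint files placed directly in the folder are listed.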

|

||||

|

||||

@ -44,7 +44,7 @@ Any model with the `.ckpt` extension can be placed into the `models/custom` fold

|

||||

|

||||

- ### [Trinart v2](https://huggingface.co/naclbit/trinart_stable_diffusion_v2)

|

||||

|

||||

-

|

||||

-

|

||||

|

||||

## Unofficial Model List:

|

||||

|

||||

|

||||

@ -27,7 +27,7 @@ const config = {

|

||||

defaultLocale: 'en',

|

||||

locales: ['en'],

|

||||

},

|

||||

|

||||

|

||||

// ...

|

||||

plugins: [

|

||||

[

|

||||

@ -108,7 +108,7 @@ const config = {

|

||||

/** @type {import('@docusaurus/preset-classic').Options} */

|

||||

({

|

||||

docs: {

|

||||

|

||||

|

||||

sidebarCollapsed: false,

|

||||

sidebarPath: require.resolve('./sidebars.js'),

|

||||

// Please change this to your repo.

|

||||

@ -193,4 +193,4 @@ const config = {

|

||||

}),

|

||||

};

|

||||

|

||||

module.exports = config;

|

||||

module.exports = config;

|

||||

|

||||

@ -13,7 +13,7 @@

|

||||

# GNU Affero General Public License for more details.

|

||||

|

||||

# You should have received a copy of the GNU Affero General Public License

|

||||

# along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

# along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

#

|

||||

# Starts the webserver inside the docker container

|

||||

#

|

||||

|

||||

@ -13,7 +13,7 @@ name: ldm

|

||||

# GNU Affero General Public License for more details.

|

||||

|

||||

# You should have received a copy of the GNU Affero General Public License

|

||||

# along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

# along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

channels:

|

||||

- pytorch

|

||||

- defaults

|

||||

|

||||

@ -20,4 +20,4 @@ module.exports = {

|

||||

'no-console': process.env.NODE_ENV === 'production' ? 'warn' : 'off',

|

||||

'no-debugger': process.env.NODE_ENV === 'production' ? 'warn' : 'off'

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

@ -1,7 +1,7 @@

|

||||

/* ----------------------------------------------

|

||||

* Generated by Animista on 2022-9-3 12:0:51

|

||||

* Licensed under FreeBSD License.

|

||||

* See http://animista.net/license for more info.

|

||||

* See http://animista.net/license for more info.

|

||||

* w: http://animista.net, t: @cssanimista

|

||||

* ---------------------------------------------- */

|

||||

|

||||

@ -26,7 +26,7 @@

|

||||

opacity: 1;

|

||||

}

|

||||

}

|

||||

|

||||

|

||||

|

||||

/* CSS HEX */

|

||||

:root {

|

||||

@ -130,7 +130,7 @@ background-color:#9d85fbdf!important;

|

||||

border: none!important;}

|

||||

/* Background for Gradio stuff along with colors for text */

|

||||

.dark .gr-box {

|

||||

|

||||

|

||||

|

||||

background-color:rgba(55, 55, 55, 0.105)!important;

|

||||

border: solid 0.5px!important;

|

||||

@ -206,4 +206,3 @@ button, select, textarea {

|

||||

.dark .gr-check-radio{

|

||||

background-color: #373737ff!important;

|

||||

}

|

||||

|

||||

|

||||

@ -18,4 +18,4 @@ along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

.wrap .m-12 svg { display:none!important; }

|

||||

.wrap .m-12::before { content:"Loading..." }

|

||||

.progress-bar { display:none!important; }

|

||||

.meta-text { display:none!important; }

|

||||

.meta-text { display:none!important; }

|

||||

|

||||

@ -146,7 +146,7 @@ div.gallery:hover {

|

||||

.css-jn99sy {

|

||||

display: none

|

||||

}

|

||||

|

||||

|

||||

/* Make the text area widget have a similar height as the text input field */

|

||||

.st-dy{

|

||||

height: 54px;

|

||||

@ -154,14 +154,14 @@ div.gallery:hover {

|

||||

}

|

||||

.css-17useex{

|

||||

gap: 3px;

|

||||

|

||||

|

||||

}

|

||||

|

||||

/* Remove some empty spaces to make the UI more compact. */

|

||||

.css-18e3th9{

|

||||

padding-left: 10px;

|

||||

padding-right: 30px;

|

||||

position: unset !important; /* Fixes the layout/page going up when an expander or another item is expanded and then collapsed */

|

||||

position: unset !important; /* Fixes the layout/page going up when an expander or another item is expanded and then collapsed */

|

||||

}

|

||||

.css-k1vhr4{

|

||||

padding-top: initial;

|

||||

|

||||

@ -12,7 +12,7 @@

|

||||

# GNU Affero General Public License for more details.

|

||||

|

||||

# You should have received a copy of the GNU Affero General Public License

|

||||

# along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

# along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

from os import path

|

||||

import json

|

||||

|

||||

@ -28,7 +28,7 @@ def readTextFile(*args):

|

||||

def css(opt):

|

||||

styling = readTextFile("css", "styles.css")

|

||||

if not opt.no_progressbar_hiding:

|

||||

styling += readTextFile("css", "no_progress_bar.css")

|

||||

styling += readTextFile("css", "no_progress_bar.css")

|

||||

return styling

|

||||

|

||||

|

||||

|

||||

@ -10,4 +10,4 @@

|

||||

<div id="app"></div>

|

||||

|

||||

</body>

|

||||

</html>

|

||||

</html>

|

||||

|

||||

@ -187,7 +187,7 @@ PERFORMANCE OF THIS SOFTWARE.

|

||||

***************************************************************************** */var Oa=function(){return Oa=Object.assign||function(t){for(var n,r=1,i=arguments.length;r<i;r++){n=arguments[r];for(var o in n)Object.prototype.hasOwnProperty.call(n,o)&&(t[o]=n[o])}return t},Oa.apply(this,arguments)},sO={thumbnail:!0,animateThumb:!0,currentPagerPosition:"middle",alignThumbnails:"middle",thumbWidth:100,thumbHeight:"80px",thumbMargin:5,appendThumbnailsTo:".lg-components",toggleThumb:!1,enableThumbDrag:!0,enableThumbSwipe:!0,thumbnailSwipeThreshold:10,loadYouTubeThumbnail:!0,youTubeThumbSize:1,thumbnailPluginStrings:{toggleThumbnails:"Toggle thumbnails"}},ys={afterAppendSlide:"lgAfterAppendSlide",init:"lgInit",hasVideo:"lgHasVideo",containerResize:"lgContainerResize",updateSlides:"lgUpdateSlides",afterAppendSubHtml:"lgAfterAppendSubHtml",beforeOpen:"lgBeforeOpen",afterOpen:"lgAfterOpen",slideItemLoad:"lgSlideItemLoad",beforeSlide:"lgBeforeSlide",afterSlide:"lgAfterSlide",posterClick:"lgPosterClick",dragStart:"lgDragStart",dragMove:"lgDragMove",dragEnd:"lgDragEnd",beforeNextSlide:"lgBeforeNextSlide",beforePrevSlide:"lgBeforePrevSlide",beforeClose:"lgBeforeClose",afterClose:"lgAfterClose",rotateLeft:"lgRotateLeft",rotateRight:"lgRotateRight",flipHorizontal:"lgFlipHorizontal",flipVertical:"lgFlipVertical",autoplay:"lgAutoplay",autoplayStart:"lgAutoplayStart",autoplayStop:"lgAutoplayStop"},oO=function(){function e(t,n){return this.thumbOuterWidth=0,this.thumbTotalWidth=0,this.translateX=0,this.thumbClickable=!1,this.core=t,this.$LG=n,this}return 
e.prototype.init=function(){this.settings=Oa(Oa({},sO),this.core.settings),this.thumbOuterWidth=0,this.thumbTotalWidth=this.core.galleryItems.length*(this.settings.thumbWidth+this.settings.thumbMargin),this.translateX=0,this.setAnimateThumbStyles(),this.core.settings.allowMediaOverlap||(this.settings.toggleThumb=!1),this.settings.thumbnail&&(this.build(),this.settings.animateThumb?(this.settings.enableThumbDrag&&this.enableThumbDrag(),this.settings.enableThumbSwipe&&this.enableThumbSwipe(),this.thumbClickable=!1):this.thumbClickable=!0,this.toggleThumbBar(),this.thumbKeyPress())},e.prototype.build=function(){var t=this;this.setThumbMarkup(),this.manageActiveClassOnSlideChange(),this.$lgThumb.first().on("click.lg touchend.lg",function(n){var r=t.$LG(n.target);!r.hasAttribute("data-lg-item-id")||setTimeout(function(){if(t.thumbClickable&&!t.core.lgBusy){var i=parseInt(r.attr("data-lg-item-id"));t.core.slide(i,!1,!0,!1)}},50)}),this.core.LGel.on(ys.beforeSlide+".thumb",function(n){var r=n.detail.index;t.animateThumb(r)}),this.core.LGel.on(ys.beforeOpen+".thumb",function(){t.thumbOuterWidth=t.core.outer.get().offsetWidth}),this.core.LGel.on(ys.updateSlides+".thumb",function(){t.rebuildThumbnails()}),this.core.LGel.on(ys.containerResize+".thumb",function(){!t.core.lgOpened||setTimeout(function(){t.thumbOuterWidth=t.core.outer.get().offsetWidth,t.animateThumb(t.core.index),t.thumbOuterWidth=t.core.outer.get().offsetWidth},50)})},e.prototype.setThumbMarkup=function(){var t="lg-thumb-outer ";this.settings.alignThumbnails&&(t+="lg-thumb-align-"+this.settings.alignThumbnails);var n='<div class="'+t+`">

|

||||

<div class="lg-thumb lg-group">

|

||||

</div>

|

||||

</div>`;this.core.outer.addClass("lg-has-thumb"),this.settings.appendThumbnailsTo===".lg-components"?this.core.$lgComponents.append(n):this.core.outer.append(n),this.$thumbOuter=this.core.outer.find(".lg-thumb-outer").first(),this.$lgThumb=this.core.outer.find(".lg-thumb").first(),this.settings.animateThumb&&this.core.outer.find(".lg-thumb").css("transition-duration",this.core.settings.speed+"ms").css("width",this.thumbTotalWidth+"px").css("position","relative"),this.setThumbItemHtml(this.core.galleryItems)},e.prototype.enableThumbDrag=function(){var t=this,n={cords:{startX:0,endX:0},isMoved:!1,newTranslateX:0,startTime:new Date,endTime:new Date,touchMoveTime:0},r=!1;this.$thumbOuter.addClass("lg-grab"),this.core.outer.find(".lg-thumb").first().on("mousedown.lg.thumb",function(i){t.thumbTotalWidth>t.thumbOuterWidth&&(i.preventDefault(),n.cords.startX=i.pageX,n.startTime=new Date,t.thumbClickable=!1,r=!0,t.core.outer.get().scrollLeft+=1,t.core.outer.get().scrollLeft-=1,t.$thumbOuter.removeClass("lg-grab").addClass("lg-grabbing"))}),this.$LG(window).on("mousemove.lg.thumb.global"+this.core.lgId,function(i){!t.core.lgOpened||r&&(n.cords.endX=i.pageX,n=t.onThumbTouchMove(n))}),this.$LG(window).on("mouseup.lg.thumb.global"+this.core.lgId,function(){!t.core.lgOpened||(n.isMoved?n=t.onThumbTouchEnd(n):t.thumbClickable=!0,r&&(r=!1,t.$thumbOuter.removeClass("lg-grabbing").addClass("lg-grab")))})},e.prototype.enableThumbSwipe=function(){var t=this,n={cords:{startX:0,endX:0},isMoved:!1,newTranslateX:0,startTime:new Date,endTime:new Date,touchMoveTime:0};this.$lgThumb.on("touchstart.lg",function(r){t.thumbTotalWidth>t.thumbOuterWidth&&(r.preventDefault(),n.cords.startX=r.targetTouches[0].pageX,t.thumbClickable=!1,n.startTime=new 
Date)}),this.$lgThumb.on("touchmove.lg",function(r){t.thumbTotalWidth>t.thumbOuterWidth&&(r.preventDefault(),n.cords.endX=r.targetTouches[0].pageX,n=t.onThumbTouchMove(n))}),this.$lgThumb.on("touchend.lg",function(){n.isMoved?n=t.onThumbTouchEnd(n):t.thumbClickable=!0})},e.prototype.rebuildThumbnails=function(){var t=this;this.$thumbOuter.addClass("lg-rebuilding-thumbnails"),setTimeout(function(){t.thumbTotalWidth=t.core.galleryItems.length*(t.settings.thumbWidth+t.settings.thumbMargin),t.$lgThumb.css("width",t.thumbTotalWidth+"px"),t.$lgThumb.empty(),t.setThumbItemHtml(t.core.galleryItems),t.animateThumb(t.core.index)},50),setTimeout(function(){t.$thumbOuter.removeClass("lg-rebuilding-thumbnails")},200)},e.prototype.setTranslate=function(t){this.$lgThumb.css("transform","translate3d(-"+t+"px, 0px, 0px)")},e.prototype.getPossibleTransformX=function(t){return t>this.thumbTotalWidth-this.thumbOuterWidth&&(t=this.thumbTotalWidth-this.thumbOuterWidth),t<0&&(t=0),t},e.prototype.animateThumb=function(t){if(this.$lgThumb.css("transition-duration",this.core.settings.speed+"ms"),this.settings.animateThumb){var n=0;switch(this.settings.currentPagerPosition){case"left":n=0;break;case"middle":n=this.thumbOuterWidth/2-this.settings.thumbWidth/2;break;case"right":n=this.thumbOuterWidth-this.settings.thumbWidth}this.translateX=(this.settings.thumbWidth+this.settings.thumbMargin)*t-1-n,this.translateX>this.thumbTotalWidth-this.thumbOuterWidth&&(this.translateX=this.thumbTotalWidth-this.thumbOuterWidth),this.translateX<0&&(this.translateX=0),this.setTranslate(this.translateX)}},e.prototype.onThumbTouchMove=function(t){return t.newTranslateX=this.translateX,t.isMoved=!0,t.touchMoveTime=new Date().valueOf(),t.newTranslateX-=t.cords.endX-t.cords.startX,t.newTranslateX=this.getPossibleTransformX(t.newTranslateX),this.setTranslate(t.newTranslateX),this.$thumbOuter.addClass("lg-dragging"),t},e.prototype.onThumbTouchEnd=function(t){t.isMoved=!1,t.endTime=new 
Date,this.$thumbOuter.removeClass("lg-dragging");var n=t.endTime.valueOf()-t.startTime.valueOf(),r=t.cords.endX-t.cords.startX,i=Math.abs(r)/n;return i>.15&&t.endTime.valueOf()-t.touchMoveTime<30?(i+=1,i>2&&(i+=1),i=i+i*(Math.abs(r)/this.thumbOuterWidth),this.$lgThumb.css("transition-duration",Math.min(i-1,2)+"settings"),r=r*i,this.translateX=this.getPossibleTransformX(this.translateX-r),this.setTranslate(this.translateX)):this.translateX=t.newTranslateX,Math.abs(t.cords.endX-t.cords.startX)<this.settings.thumbnailSwipeThreshold&&(this.thumbClickable=!0),t},e.prototype.getThumbHtml=function(t,n){var r=this.core.galleryItems[n].__slideVideoInfo||{},i;return r.youtube&&this.settings.loadYouTubeThumbnail?i="//img.youtube.com/vi/"+r.youtube[1]+"/"+this.settings.youTubeThumbSize+".jpg":i=t,'<div data-lg-item-id="'+n+'" class="lg-thumb-item '+(n===this.core.index?" active":"")+`"

|

||||