mirror of

https://github.com/sd-webui/stable-diffusion-webui.git

synced 2024-09-19 05:47:09 +03:00

Updated Installation (markdown)

parent

bb6f226826

commit

ae7de8868e

@ -2,10 +2,9 @@

[To install on Linux, see this page.](https://github.com/sd-webui/stable-diffusion-webui/wiki/Linux-Automated-Setup-Guide)

[To install on Colab, see Altryne's notebook.](https://github.com/altryne/sd-webui-colab)

# Windows - step by step Installation guide

> Big thanks to Arkitecc#0339 from the Stable Diffusion discord for the original guide (support them [here](https://ko-fi.com/arkitecc))

# Initial Setup

## Prerequisites

@ -14,12 +13,13 @@


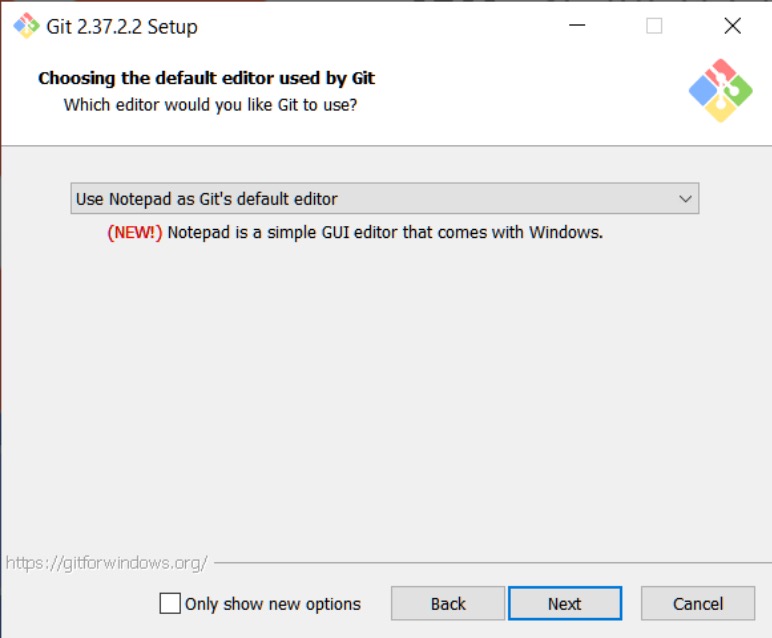

* Download Git for Windows from https://gitforwindows.org/ and accept all of the default settings it offers except for the default editor selection. When the installer asks for the default editor, choose Notepad if you are unfamiliar with the other options, since every Windows installation has it.
* Download Miniconda3: [https://repo.anaconda.com/miniconda/Miniconda3-latest-Windows-x86_64.exe](https://repo.anaconda.com/miniconda/Miniconda3-latest-Windows-x86_64.exe). Install it so that you have access to the Miniconda3 Prompt console.
* Open Miniconda3 Prompt from your start menu after it has been installed.
* _(Optional)_ Create a new text file in your root directory, `/stable-diffusion-webui/custom-conda-path.txt`, that contains the path to the Miniconda3 installation you want to use, for example `C:\Users\<username>\miniconda3` (replace `<username>` with your own username). This is required if you have more than one Miniconda installation or are using a custom installation location.
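If you prefer to create `custom-conda-path.txt` from a script rather than by hand, the optional step above can be sketched in Python roughly as follows. The helper name `write_custom_conda_path` is made up for this illustration, and writing the path as a single line with no trailing newline is an assumption about what `webui.cmd` expects.

```python
from pathlib import Path


def write_custom_conda_path(repo_dir, conda_dir):
    """Write the Miniconda path into <repo_dir>/custom-conda-path.txt.

    Hypothetical helper: the file name comes from the guide, but the
    single-line, no-trailing-newline layout is an assumption.
    """
    target = Path(repo_dir) / "custom-conda-path.txt"
    target.write_text(str(conda_dir), encoding="utf-8")
    return target
```

For example, `write_custom_conda_path(r"C:\Users\<username>\stable-diffusion-webui", r"C:\Users\<username>\miniconda3")`, with `<username>` replaced by your own username.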

## Cloning the repo


@ -31,59 +31,75 @@ This will create the `stable-diffusion-webui` directory in your Windows user fol

---

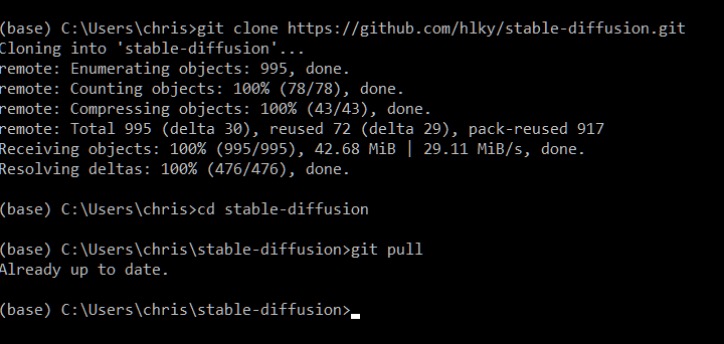

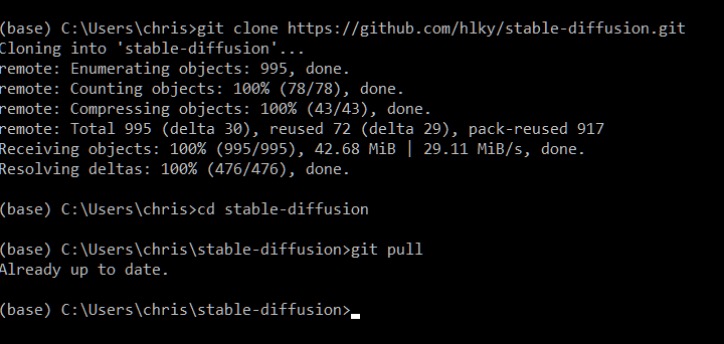

Once the repo has been cloned, updating it is as easy as running `git pull` in the Miniconda prompt from the repo’s topmost directory (the one created by the clone command). Below you can see I used the `cd` command to navigate into that folder.

* Next, create a Hugging Face account: [https://huggingface.co/](https://huggingface.co/)
* After you have signed up and are signed in, go to this link and click Authorize: [https://huggingface.co/CompVis/stable-diffusion-v-1-4-original](https://huggingface.co/CompVis/stable-diffusion-v-1-4-original)
* After you have authorized your account, go to this link to download the weights for version 1.4 of the model. Future versions will be released in the same way, and updating them will be a similar process: [https://huggingface.co/CompVis/stable-diffusion-v-1-4-original/resolve/main/sd-v1-4.ckpt](https://huggingface.co/CompVis/stable-diffusion-v-1-4-original/resolve/main/sd-v1-4.ckpt)
* Download the model into this directory: `C:\Users\<username>\stable-diffusion-webui\models\ldm\stable-diffusion-v1`
* Rename `sd-v1-4.ckpt` to `model.ckpt` once it is inside the `stable-diffusion-v1` folder.
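The download-and-rename steps above can also be done in one go. The following is a minimal Python sketch, assuming the checkpoint was first saved somewhere else (for example your Downloads folder); the function name `install_checkpoint` is hypothetical, while the target folder and file name are the ones given in the steps above.

```python
import shutil
from pathlib import Path


def install_checkpoint(downloaded_ckpt, repo_dir):
    """Move a downloaded sd-v1-4.ckpt into the repo as model.ckpt.

    Hypothetical helper; the target path follows the guide:
    <repo_dir>/models/ldm/stable-diffusion-v1/model.ckpt
    """
    target_dir = Path(repo_dir) / "models" / "ldm" / "stable-diffusion-v1"
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / "model.ckpt"
    if target.exists():
        # Refuse to overwrite an existing model silently.
        raise FileExistsError(f"{target} already exists")
    shutil.move(str(downloaded_ckpt), str(target))
    return target
```

The function refuses to overwrite an existing `model.ckpt`, so an older model is never replaced by accident.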

* Since we are already in our stable-diffusion-webui folder in Miniconda, our next step is to create the environment Stable Diffusion needs to work.
* _(Optional)_ If you already have a Conda environment named `ldm` from another Stable Diffusion installation, open the `environment.yaml` file in `\stable-diffusion-webui\` and change the environment name inside it from `ldm` to `ldo`.
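The optional rename can be done in any text editor. As a rough illustration, the sketch below does the same edit on the file's text in Python. It assumes the file uses the usual Conda layout with a top-level `name: ldm` line; the function name `rename_conda_env` is invented for this example.

```python
import re


def rename_conda_env(yaml_text, old="ldm", new="ldo"):
    """Return yaml_text with the top-level `name: <old>` line renamed.

    Hypothetical helper: assumes the environment name sits on its own
    unindented `name:` line. Text without such a line is returned unchanged.
    """
    pattern = re.compile(
        rf"^name:[ \t]*{re.escape(old)}[ \t]*$", flags=re.MULTILINE
    )
    return pattern.sub(lambda match: f"name: {new}", yaml_text, count=1)
```

To apply it, read `environment.yaml` with `Path.read_text()`, pass the text through `rename_conda_env`, and write the result back with `Path.write_text()`.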

## Setting up the environment

---

* Create the Conda environment with `conda env create -f environment.yaml`

## Running webui.cmd for the first time

* `webui.cmd` at the root folder (`\stable-diffusion-webui\`) is the main script that you'll always run. It automatically does the following:
    * Create conda env
    * Install and update requirements
    * Run the relauncher and webui.py script for gradio UI options
* Run `webui.cmd` by double-clicking the file.
* Wait for it to process; this could take some time. Eventually it’ll look like this:

### Optional additional models

There are three more models that we need to download in order to get the most out of the functionality offered by HLKY (future versions will do this automatically).

* You'll receive warning messages about **GFPGAN**, **RealESRGAN** and **LDSR**, but these are optional and are explained further below.
* The first is **GFPGAN**, a model that HLKY uses to _(optionally)_ improve the look of generated faces.
* In the meantime, you can now go to your web browser and open the link to [http://localhost:7860/](http://localhost:7860/).
* Download the model from [here](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth) and save it into this folder: `\stable-diffusion-webui\src\gfpgan\experiments\pretrained_models`
* The next two models are for **RealESRGAN**, an upscaling model that you can _(optionally)_ use to upscale your generations to 4x their original resolution.
* Download the models from [here](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth) and [here](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth) and save them both into this folder: `\stable-diffusion-webui\src\realesrgan\experiments\pretrained_models`
* Next, in the `\stable-diffusion-webui\` folder, you’ll see a file named `webui.cmd`.
* **webui.cmd is the main script you'll always run**. After it finishes initializing, it prints a localhost link, [http://localhost:7860](http://localhost:7860), that you can copy and paste into your web browser to start dreaming!
* Enter your text prompt and click generate.
* You should be able to see progress in your `webui.cmd` window. The page at [http://localhost:7860/](http://localhost:7860/) updates automatically to show the final image once progress reaches 100%.
* Images created with the web interface will be saved to `\stable-diffusion-webui\outputs\` in their respective folders alongside `.yaml` text files with all of the details of your prompts for easy referencing later. Images will also be saved with their seed and numbered so that they can be cross-referenced with their `.yaml` files easily.
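If the browser cannot open the page, it can help to check whether anything is listening on the port yet. Below is a small Python sketch that uses only the standard library. The function name `webui_is_listening` is made up for this example, and the defaults assume the `localhost:7860` address mentioned above.

```python
import socket


def webui_is_listening(host="localhost", port=7860, timeout=1.0):
    """Return True if a TCP connection to host:port can be opened.

    Hypothetical helper: it only checks that something accepts connections
    on the port, not that the web UI has finished loading the model.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

A `False` result while `webui.cmd` is still initializing is expected; try again once the window shows the localhost link.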

---


### Optional additional models

There are three more models that we need to download in order to get the most out of the functionality offered by sd-webui.

### GFPGAN

1. If you want to use GFPGAN to improve generated faces, you need to install it separately.
2. Download [GFPGANv1.3.pth](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth) and put it into the `/stable-diffusion-webui/src/gfpgan/experiments/pretrained_models` directory.

### RealESRGAN

1. Download [RealESRGAN_x4plus.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth) and [RealESRGAN_x4plus_anime_6B.pth](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth).
2. Put them into the `stable-diffusion-webui/src/realesrgan/experiments/pretrained_models` directory.

### LDSR

1. Git clone [devilismyfriend/latent-diffusion](https://github.com/devilismyfriend/latent-diffusion) into your `/stable-diffusion-webui/src/` folder.
2. Download **LDSR** [project.yaml](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1) and [model last.ckpt](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1).
3. Rename `last.ckpt` to `model.ckpt` and place both files under `stable-diffusion-webui/src/latent-diffusion/experiments/pretrained_models/`.
4. If you run into problems, refer to [this issue](https://github.com/sd-webui/stable-diffusion-webui/issues/488).
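Since these files are optional and easy to misplace, a quick check can confirm they ended up where the steps above put them. The Python sketch below lists whichever files are absent. The names `OPTIONAL_MODEL_FILES` and `missing_optional_models` are hypothetical, and the relative paths are copied from the three sections above.

```python
from pathlib import Path

# Relative paths taken from the GFPGAN, RealESRGAN and LDSR steps.
OPTIONAL_MODEL_FILES = {
    "GFPGAN": [
        "src/gfpgan/experiments/pretrained_models/GFPGANv1.3.pth",
    ],
    "RealESRGAN": [
        "src/realesrgan/experiments/pretrained_models/RealESRGAN_x4plus.pth",
        "src/realesrgan/experiments/pretrained_models/RealESRGAN_x4plus_anime_6B.pth",
    ],
    "LDSR": [
        "src/latent-diffusion/experiments/pretrained_models/project.yaml",
        "src/latent-diffusion/experiments/pretrained_models/model.ckpt",
    ],
}


def missing_optional_models(repo_dir):
    """Map each optional model name to the files not found under repo_dir."""
    root = Path(repo_dir)
    missing = {}
    for name, rel_paths in OPTIONAL_MODEL_FILES.items():
        absent = [rel for rel in rel_paths if not (root / rel).is_file()]
        if absent:
            missing[name] = absent
    return missing
```

An empty dictionary means all five files were found. Any model that appears in the result is the likely source of the matching warning message.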

# Credits

> Big thanks to Arkitecc#0339 from the Stable Diffusion discord for the original guide (support them [here](https://ko-fi.com/arkitecc)).

> Modified by [Hafiidz](https://github.com/Hafiidz) with help from the sd-webui discord and team.
