- Bloodhound

- Elasticsearch client and query DSL for Haskell

- Hackage page and Haddock documentation

- Examples

- Possible future functionality

- Span Queries

- Function Score Query

- Node discovery and failover

- Support for TCP access to Elasticsearch

- Bulk cluster-join merge

- GeoShapeQuery

- GeoShapeFilter

- Geohash cell filter

- HasChild Filter

- HasParent Filter

- Indices Filter

- Query Filter

- Script based sorting

- Collapsing redundantly nested and/or structures

- Runtime checking for cycles in data structures

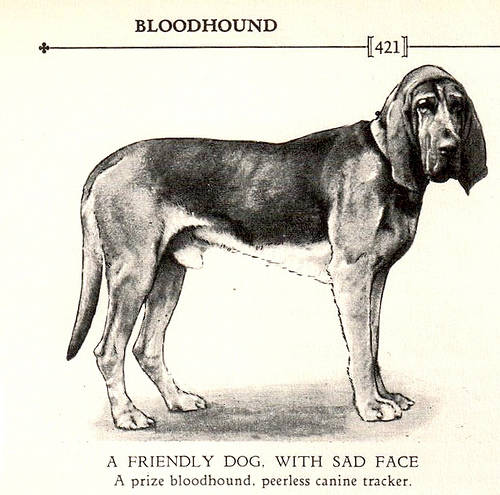

- Photo Origin

Elasticsearch client and query DSL for Haskell

Why?

Search doesn't have to be hard. Let the dog do it.

Stability

Bloodhound is alpha at the moment. The library works fine, but I don't want to mislead anyone into thinking the API is final or stable. I wouldn't call the library "complete" or representative of everything you can do in Elasticsearch, but compared to clients in other languages, the usability is good.

Hackage page and Haddock documentation

Examples

Index Operations

Create Index

-- Formatted for use in ghci, so there are "let"s in front of the decls.

-- if you see :{ and :}, they're so you can copy-paste

-- the multi-line examples into your ghci REPL.

:set -XDeriveGeneric

-- the Text literals in the examples below need OverloadedStrings

:set -XOverloadedStrings

import Database.Bloodhound

import Data.Aeson

import Data.Either (Either(..))

import Data.Maybe (fromJust)

import Data.Time.Calendar (Day(..))

import Data.Time.Clock (secondsToDiffTime, UTCTime(..))

import Data.Text (Text)

import GHC.Generics (Generic)

import Network.HTTP.Conduit

import qualified Network.HTTP.Types.Status as NHTS

-- no trailing slashes in servers, library handles building the path.

let testServer = (Server "http://localhost:9200")

let testIndex = IndexName "twitter"

let testMapping = MappingName "tweet"

-- defaultIndexSettings is exported by Database.Bloodhound as well

let defaultIndexSettings = IndexSettings (ShardCount 3) (ReplicaCount 2)

-- createIndex returns IO Reply

-- response :: Reply, Reply is a synonym for Network.HTTP.Conduit.Response

response <- createIndex testServer defaultIndexSettings testIndex

Delete Index

Code

-- response :: Reply

response <- deleteIndex testServer testIndex

Example Response

-- print response if it was a success

Response {responseStatus = Status {statusCode = 200, statusMessage = "OK"}

, responseVersion = HTTP/1.1

, responseHeaders = [("Content-Type", "application/json; charset=UTF-8")

, ("Content-Length", "21")]

, responseBody = "{\"acknowledged\":true}"

, responseCookieJar = CJ {expose = []}

, responseClose' = ResponseClose}

-- if the index to be deleted didn't exist anyway

Response {responseStatus = Status {statusCode = 404, statusMessage = "Not Found"}

, responseVersion = HTTP/1.1

, responseHeaders = [("Content-Type", "application/json; charset=UTF-8")

, ("Content-Length","65")]

, responseBody = "{\"error\":\"IndexMissingException[[twitter] missing]\",\"status\":404}"

, responseCookieJar = CJ {expose = []}

, responseClose' = ResponseClose}

Refresh Index

Note: you have to refresh the index if you expect to read what you just wrote.

resp <- refreshIndex testServer testIndex

Example Response

-- print resp on success

Response {responseStatus = Status {statusCode = 200, statusMessage = "OK"}

, responseVersion = HTTP/1.1

, responseHeaders = [("Content-Type", "application/json; charset=UTF-8")

, ("Content-Length","50")]

, responseBody = "{\"_shards\":{\"total\":10,\"successful\":5,\"failed\":0}}"

, responseCookieJar = CJ {expose = []}

, responseClose' = ResponseClose}

Mapping Operations

Create Mapping

-- don't forget imports and the like at the top.

data TweetMapping = TweetMapping deriving (Eq, Show)

-- I know writing the JSON manually sucks.

-- I don't have a proper data type for Mappings yet.

-- Let me know if this is something you need.

:{

instance ToJSON TweetMapping where
  toJSON TweetMapping =
    object ["tweet" .=
      object ["properties" .=
        object ["location" .=
          object ["type" .= ("geo_point" :: Text)]]]]

:}

resp <- createMapping testServer testIndex testMapping TweetMapping

Delete Mapping

resp <- deleteMapping testServer testIndex testMapping

Document Operations

Indexing Documents

-- don't forget the imports and derive generic setting for ghci

-- at the beginning of the examples.

:{

data Location = Location { lat :: Double
                         , lon :: Double } deriving (Eq, Generic, Show)

data Tweet = Tweet { user     :: Text
                   , postDate :: UTCTime
                   , message  :: Text
                   , age      :: Int
                   , location :: Location } deriving (Eq, Generic, Show)

exampleTweet = Tweet { user     = "bitemyapp"
                     , postDate = UTCTime
                                  (ModifiedJulianDay 55000)
                                  (secondsToDiffTime 10)
                     , message  = "Use haskell!"
                     , age      = 10000
                     , location = Location 40.12 (-71.34) }

-- automagic (generic) derivation of instances because we're lazy.

instance ToJSON Tweet

instance FromJSON Tweet

instance ToJSON Location

instance FromJSON Location

:}

-- Should be able to toJSON and encode the data structures like this:

-- λ> toJSON $ Location 10.0 10.0

-- Object fromList [("lat",Number 10.0),("lon",Number 10.0)]

-- λ> encode $ Location 10.0 10.0

-- "{\"lat\":10,\"lon\":10}"

resp <- indexDocument testServer testIndex testMapping exampleTweet (DocId "1")

Example Response

Response {responseStatus =

Status {statusCode = 200, statusMessage = "OK"}

, responseVersion = HTTP/1.1, responseHeaders =

[("Content-Type","application/json; charset=UTF-8"),

("Content-Length","75")]

, responseBody = "{\"_index\":\"twitter\",\"_type\":\"tweet\",\"_id\":\"1\",\"_version\":2,\"created\":false}"

, responseCookieJar = CJ {expose = []}, responseClose' = ResponseClose}

Deleting Documents

resp <- deleteDocument testServer testIndex testMapping (DocId "1")

Getting Documents

-- n.b., you'll need the earlier imports. responseBody is from http-conduit

resp <- getDocument testServer testIndex testMapping (DocId "1")

-- responseBody :: Response body -> body

let body = responseBody resp

-- you have two options, you use decode and just get Maybe (EsResult Tweet)

-- or you can use eitherDecode and get Either String (EsResult Tweet)

let maybeResult = decode body :: Maybe (EsResult Tweet)

-- the explicit typing is so Aeson knows how to parse the JSON.

-- use either if you want to know why something failed to parse.

-- (string errors, sadly)

let eitherResult = eitherDecode body :: Either String (EsResult Tweet)

-- print eitherResult should look like:

Right (EsResult {_index = "twitter"

, _type = "tweet"

, _id = "1"

, _version = 2

, found = Just True

, _source = Tweet {user = "bitemyapp"

, postDate = 2009-06-18 00:00:10 UTC

, message = "Use haskell!"

, age = 10000

, location = Location {lat = 40.12, lon = -71.34}}})

-- _source in EsResult is parametric, we dispatch the type by passing in what we expect (Tweet) as a parameter to EsResult.

-- use the _source record accessor to get at your document

λ> fmap _source eitherResult

Right (Tweet {user = "bitemyapp"

, postDate = 2009-06-18 00:00:10 UTC

, message = "Use haskell!"

, age = 10000

, location = Location {lat = 40.12, lon = -71.34}})

Search

Querying

Term Query

-- exported by the Client module, just defaults some stuff.

-- mkSearch :: Maybe Query -> Maybe Filter -> Search

-- mkSearch query filter = Search query filter Nothing False 0 10

let query = TermQuery (Term "user" "bitemyapp") Nothing

-- AND'ing identity filter with itself and then tacking it onto a query

-- search should be a null-operation. I include it for the sake of example.

-- <||> (or/plus) should make it into a search that returns everything.

let filter = IdentityFilter <&&> IdentityFilter

-- constructing the search object the searchByIndex function dispatches on.

let search = mkSearch (Just query) (Just filter)

-- you can also searchByType and specify the mapping name.

reply <- searchByIndex testServer testIndex search

let result = eitherDecode (responseBody reply) :: Either String (SearchResult Tweet)

λ> fmap (hits . searchHits) result

Right [Hit {hitIndex = IndexName "twitter"

, hitType = MappingName "tweet"

, hitDocId = DocId "1"

, hitScore = 0.30685282

, hitSource = Tweet {user = "bitemyapp"

, postDate = 2009-06-18 00:00:10 UTC

, message = "Use haskell!"

, age = 10000

, location = Location {lat = 40.12, lon = -71.34}}}]

Match Query

let query = QueryMatchQuery $ mkMatchQuery (FieldName "user") (QueryString "bitemyapp")

let search = mkSearch (Just query) Nothing

Multi-Match Query

let fields = [FieldName "user", FieldName "message"]

let query = QueryMultiMatchQuery $ mkMultiMatchQuery fields (QueryString "bitemyapp")

let search = mkSearch (Just query) Nothing

Bool Query

let innerQuery = QueryMatchQuery $
                 mkMatchQuery (FieldName "user") (QueryString "bitemyapp")

let query = QueryBoolQuery $
            mkBoolQuery (Just innerQuery) Nothing Nothing

let search = mkSearch (Just query) Nothing

Boosting Query

let posQuery = QueryMatchQuery $
               mkMatchQuery (FieldName "user") (QueryString "bitemyapp")

let negQuery = QueryMatchQuery $
               mkMatchQuery (FieldName "user") (QueryString "notmyapp")

let query = QueryBoostingQuery $
            BoostingQuery posQuery negQuery (Boost 0.2)

Rest of the query/filter types

Just follow the pattern you've seen here and check the Hackage API documentation.

Sorting

let sortSpec = DefaultSortSpec $ mkSort (FieldName "age") Ascending

-- mkSort is a shortcut function that takes a FieldName and a SortOrder

-- to generate a vanilla DefaultSort.

-- check the DefaultSort type for the full list of customizable options.

-- From and size are integers for pagination.

-- When sorting on a field, scores are not computed. By setting TrackSortScores to true, scores will still be computed and tracked.

-- type Sort = [SortSpec]

-- type TrackSortScores = Bool

-- type From = Int

-- type Size = Int

-- Search takes Maybe Query

-- -> Maybe Filter

-- -> Maybe Sort

-- -> TrackSortScores

-- -> From -> Size

-- just add more sortspecs to the list if you want tie-breakers.

let search = Search Nothing (Just IdentityFilter) (Just [sortSpec]) False 0 10

Filtering

And, Not, and Or filters

Filters form a monoid and seminearring.
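To make that concrete, here is a small self-contained sketch. The `Filter` type below is a cut-down stand-in invented for illustration (no cache flags, and `TermIs`, `Doc` and `matches` are hypothetical names), not Bloodhound's real type. It shows that and'ing with the identity changes nothing about what matches, while or'ing with it matches everything. Note that the laws hold in terms of what a filter matches, not structurally.

```haskell
-- Hypothetical, simplified stand-in for Bloodhound's Filter type.
data Filter
  = IdentityFilter          -- matches every document
  | TermIs String String    -- hypothetical: field equals value
  | AndFilter [Filter]
  | OrFilter [Filter]
  | NotFilter Filter
  deriving (Eq, Show)

-- "and" is the monoid operation, IdentityFilter is its unit.
instance Semigroup Filter where
  a <> b = AndFilter [a, b]

instance Monoid Filter where
  mempty = IdentityFilter

infixr 3 <&&>
(<&&>) :: Filter -> Filter -> Filter
(<&&>) = (<>)

infixr 2 <||>
(<||>) :: Filter -> Filter -> Filter
a <||> b = OrFilter [a, b]

-- A toy document: a list of (field, value) pairs.
type Doc = [(String, String)]

-- Evaluate a filter against a toy document.
matches :: Filter -> Doc -> Bool
matches IdentityFilter _   = True
matches (TermIs f v)   doc = lookup f doc == Just v
matches (AndFilter fs) doc = all (`matches` doc) fs
matches (OrFilter fs)  doc = any (`matches` doc) fs
matches (NotFilter f)  doc = not (matches f doc)
```

With this, `matches (IdentityFilter <&&> f) doc` agrees with `matches f doc` for any `f`, and `matches (IdentityFilter <||> f) doc` is always `True`.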

instance Monoid Filter where
  mempty = IdentityFilter
  mappend a b = AndFilter [a, b] defaultCache

instance Seminearring Filter where
  a <||> b = OrFilter [a, b] defaultCache

-- AndFilter and OrFilter take [Filter] as an argument.

-- This will return anything, because IdentityFilter returns everything

OrFilter [IdentityFilter, someOtherFilter] False

-- This will return exactly what someOtherFilter returns

AndFilter [IdentityFilter, someOtherFilter] False

-- Thanks to the seminearring and monoid, the above can be expressed as:

-- "and"

IdentityFilter <&&> someOtherFilter

-- "or"

IdentityFilter <||> someOtherFilter

-- Also there is a NotFilter, it only accepts a single filter, not a list.

NotFilter someOtherFilter False

Identity Filter

-- And'ing two Identity

let queryFilter = IdentityFilter <&&> IdentityFilter

let search = mkSearch Nothing (Just queryFilter)

reply <- searchByType testServer testIndex testMapping search

Boolean Filter

Similar to boolean queries.

-- Will return only items whose "user" field contains the term "bitemyapp"

let queryFilter = BoolFilter (MustMatch (Term "user" "bitemyapp") False)

-- Will return only items whose "user" field does not contain the term "bitemyapp"

let queryFilter = BoolFilter (MustNotMatch (Term "user" "bitemyapp") False)

-- The clause (query) should appear in the matching document.

-- In a boolean query with no must clauses, one or more should

-- clauses must match a document. The minimum number of should

-- clauses to match can be set using the minimum_should_match parameter.

let queryFilter = BoolFilter (ShouldMatch [(Term "user" "bitemyapp")] False)

Exists Filter

-- Will filter for documents that have the field "user"

let existsFilter = ExistsFilter (FieldName "user")

Geo BoundingBox Filter

-- topLeft and bottomRight

let box = GeoBoundingBox (LatLon 40.73 (-74.1)) (LatLon 40.10 (-71.12))

let constraint = GeoBoundingBoxConstraint (FieldName "tweet.location") box False

-- second argument is GeoFilterType, memory or indexed.

let geoFilter = GeoBoundingBoxFilter constraint GeoFilterMemory

Geo Distance Filter

let geoPoint = GeoPoint (FieldName "tweet.location") (LatLon 40.12 (-71.34))

-- coefficient and units

let distance = Distance 10.0 Miles

-- GeoFilterType or NoOptimizeBbox

let optimizeBbox = OptimizeGeoFilterType GeoFilterMemory

-- SloppyArc is the usual/default optimization in Elasticsearch today

-- but pre-1.0 versions will need to pick Arc or Plane.

let geoFilter = GeoDistanceFilter geoPoint distance SloppyArc optimizeBbox False

Geo Distance Range Filter

Think of a donut and you won't be far off.
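To picture the donut, here is a rough standalone sketch of the check such a filter amounts to: a point passes if its distance from the center falls between an inner and an outer radius. Everything in it is an assumption for illustration: the names (`Point`, `haversineMiles`, `inDonut`) are made up, the great-circle (haversine) formula stands in for whatever distance calculation Elasticsearch actually uses, and treating both bounds as inclusive is a guess.

```haskell
-- Hypothetical helper types and names; not part of Bloodhound.
data Point = Point Double Double   -- latitude, longitude in degrees
  deriving (Eq, Show)

-- Mean Earth radius in miles (approximate).
earthRadiusMiles :: Double
earthRadiusMiles = 3958.8

-- Great-circle distance between two points via the haversine formula.
haversineMiles :: Point -> Point -> Double
haversineMiles (Point lat1 lon1) (Point lat2 lon2) =
  2 * earthRadiusMiles * asin (sqrt a)
  where
    rad d = d * pi / 180
    dLat  = rad (lat2 - lat1)
    dLon  = rad (lon2 - lon1)
    a     = sin (dLat / 2) ^ (2 :: Int)
          + cos (rad lat1) * cos (rad lat2) * sin (dLon / 2) ^ (2 :: Int)

-- True when the point lies in the ring between the inner and outer radius
-- (both bounds inclusive here, which is an assumption).
inDonut :: Point -> Double -> Double -> Point -> Bool
inDonut center inner outer p = d >= inner && d <= outer
  where
    d = haversineMiles center p
```

For example, `inDonut (Point 40.12 (-71.34)) 0 10 (Point 40.12 (-71.34))` holds because the center itself is at distance zero, while a point one degree of latitude away (roughly 69 miles) falls outside a 0 to 10 mile ring.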

let geoPoint = GeoPoint (FieldName "tweet.location") (LatLon 40.12 (-71.34))

let distanceRange = DistanceRange (Distance 0.0 Miles) (Distance 10.0 Miles)

let geoFilter = GeoDistanceRangeFilter geoPoint distanceRange

Geo Polygon Filter

-- I think I drew a square here.

let points = [LatLon 40.0 (-70.00),
              LatLon 40.0 (-72.00),
              LatLon 41.0 (-70.00),
              LatLon 41.0 (-72.00)]

let geoFilter = GeoPolygonFilter (FieldName "tweet.location") points

Document IDs filter

-- takes a mapping name and a list of DocIds

IdsFilter (MappingName "tweet") [DocId "1"]

Range Filter

Full Range

-- RangeFilter :: FieldName

-- -> Either HalfRange Range

-- -> RangeExecution

-- -> Cache -> Filter

let filter = RangeFilter (FieldName "age")
             (Right (RangeLtGt (LessThan 100000.0) (GreaterThan 1000.0)))
             RangeExecutionIndex False

Half Range

let filter = RangeFilter (FieldName "age")
             (Left (HalfRangeLt (LessThan 100000.0)))
             RangeExecutionIndex False

Regexp Filter

-- RegexpFilter

-- :: FieldName

-- -> Regexp

-- -> RegexpFlags

-- -> CacheName

-- -> Cache

-- -> CacheKey

-- -> Filter

let filter = RegexpFilter (FieldName "user") (Regexp "bite.*app")
             RegexpAll (CacheName "test") False (CacheKey "key")

-- RegexpFlags can be RegexpAll, Complement, Interval, Intersection, AnyString,
-- or a combination of two of those options.

Possible future functionality

Span Queries

Function Score Query

Node discovery and failover

Might require TCP support.

Support for TCP access to Elasticsearch

Pretend to be a transport client?

Bulk cluster-join merge

Might require making a lucene index on disk with the appropriate format.

GeoShapeQuery

GeoShapeFilter

Geohash cell filter

HasChild Filter

HasParent Filter

Indices Filter

Query Filter

Script based sorting

Collapsing redundantly nested and/or structures

The Seminearring instance, when combinators are deeply nested, can produce redundant nested structure (an and inside an and, an or inside an or). Depending on how this affects ES performance, collapsing this structure might be valuable.
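A rough idea of what such a collapsing pass could look like, sketched against a simplified stand-in type rather than Bloodhound's real `Filter` (the `Leaf` constructor and the `collapse` function are hypothetical, and cache flags are ignored entirely, which a real implementation would have to think about):

```haskell
-- Hypothetical, simplified filter type; the real one carries cache flags
-- and many more constructors.
data Filter
  = IdentityFilter
  | Leaf String            -- stands in for any non-compound filter
  | AndFilter [Filter]
  | OrFilter [Filter]
  deriving (Eq, Show)

-- Flatten and-inside-and and or-inside-or, drop IdentityFilter from
-- conjunctions (it matches everything, so it adds nothing there),
-- and unwrap single-element lists.
collapse :: Filter -> Filter
collapse (AndFilter fs) =
  case filter (/= IdentityFilter) (concatMap andChildren (map collapse fs)) of
    []  -> IdentityFilter
    [f] -> f
    fs' -> AndFilter fs'
  where
    andChildren (AndFilter gs) = gs
    andChildren g              = [g]
collapse (OrFilter fs) =
  case concatMap orChildren (map collapse fs) of
    [f] -> f
    fs' -> OrFilter fs'
  where
    orChildren (OrFilter gs) = gs
    orChildren g             = [g]
collapse f = f
```

So something shaped like `AndFilter [AndFilter [a, b], AndFilter [c, IdentityFilter]]`, which is what chaining `<&&>` tends to build, comes out as the flat `AndFilter [a, b, c]`.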

Runtime checking for cycles in data structures

Photo Origin

Photo from HA! Designs: https://www.flickr.com/photos/hadesigns/