mirror of

https://github.com/urbit/developers.urbit.org.git

synced 2024-08-17 09:40:33 +03:00

Adjust urbit.org links.

parent

9dcefea4d3

commit

9057158217

@ -8,7 +8,7 @@ ship = "~sitful-hatred"

|

||||

image = "https://media.urbit.org/site/posts/essays/l2-blogpost.png"

|

||||

+++

|

||||

|

||||

Urbit’s [Layer 2](https://urbit.org/reference/azimuth/l2/layer2) system, naive rollups, allows planets to be spawned at substantially lower cost. This system operates in parallel to Layer 1 Azimuth, but introduces some new concepts and differences that are important to understand. Read on for a high-level survey, and check the star and planet guides linked at the bottom for details and instructions.

|

||||

Urbit’s [Layer 2](/reference/azimuth/l2/layer2) system, naive rollups, allows planets to be spawned at substantially lower cost. This system operates in parallel to Layer 1 Azimuth, but introduces some new concepts and differences that are important to understand. Read on for a high-level survey, and check the star and planet guides linked at the bottom for details and instructions.

|

||||

|

||||

After a year of development and testing, **Urbit’s Layer 2 system is live and operational**. Star and planet operators can now take advantage of subsidized Azimuth operations. If you operate a star, you can distribute planets cheaply or for free; if you’ve been waiting to buy an ID due to transaction fees, you will find planets are available much more cheaply.

|

||||

|

||||

|

||||

14

content/guides/additional/dist/guide.md

vendored

@ -7,15 +7,15 @@ In this document we'll walk through an example of creating and publishing a desk

|

||||

|

||||

## Create desk

|

||||

|

||||

To begin, we'll need to clone the [urbit git repo](https://github.com/urbit/urbit) from the Unix terminal:

|

||||

To begin, we'll need to clone the [Urbit Git repo](https://github.com/urbit/urbit) from the Unix terminal:

|

||||

|

||||

```

|

||||

```sh

|

||||

[user@host ~]$ git clone https://github.com/urbit/urbit urbit-git

|

||||

```

|

||||

|

||||

Once that's done, we can navigate to the `pkg` directory in our cloned repo:

|

||||

|

||||

```

|

||||

```sh

|

||||

[user@host ~]$ cd urbit-git/pkg

|

||||

[user@host pkg]$ ls .

|

||||

arvo btc-wallet garden grid interface npm webterm

|

||||

@ -29,7 +29,7 @@ To make the creation of a new desk easier, `base-dev` and `garden-dev` contain s

|

||||

|

||||

Let's create a new `hello` desk:

|

||||

|

||||

```

|

||||

```sh

|

||||

[user@host pkg]$ mkdir hello

|

||||

[user@host pkg]$ ./symbolic-merge.sh base-dev hello

|

||||

[user@host pkg]$ ./symbolic-merge.sh garden-dev hello

|

||||

@ -42,7 +42,7 @@ lib mar sur

|

||||

|

||||

Our desk must include a `sys.kelvin` file which specifies the kernel version it's compatible with. Let's create that:

|

||||

|

||||

```

|

||||

```sh

|

||||

[user@host hello]$ echo "[%zuse 418]" > sys.kelvin

|

||||

[user@host hello]$ cat sys.kelvin

|

||||

[%zuse 418]

|

||||

@ -52,7 +52,7 @@ Our desk must include a `sys.kelvin` file which specifies the kernel version it'

|

||||

|

||||

We can also add a `desk.ship` file to specify the original publisher of this desk. We'll try this on a fakezod so let's just add `~zod` as the publisher:

|

||||

|

||||

```

|

||||

```sh

|

||||

[user@host hello]$ echo "~zod" > desk.ship

|

||||

[user@host hello]$ cat desk.ship

|

||||

~zod

|

||||

@ -88,7 +88,7 @@ In the text editor, we'll add the following:

|

||||

base+'hello'

|

||||

glob-ames+[~zod 0v0]

|

||||

version+[0 0 1]

|

||||

website+'https://urbit.org/guides/additional/dist/guide'

|

||||

website+'https://developers.urbit.org/guides/additional/dist/guide'

|

||||

license+'MIT'

|

||||

==

|

||||

```

|

||||

|

||||

@ -29,13 +29,13 @@ Urbit consists of an identity protocol (“Azimuth”, or “Urbit ID”) and a

|

||||

|

||||

2. **Urbit OS (Arvo)** is an operating system which provides the software for the personal server platform that constitutes the day-to-day usage of Urbit. Arvo works over a [peer-to-peer](https://en.wikipedia.org/wiki/Peer-to-peer) [end-to-end-encrypted](https://en.wikipedia.org/wiki/End-to-end_encryption) network to interact with other Urbit ships (or unique instances).

|

||||

|

||||

Arvo is an axiomatic operating system which restricts itself to pure mathematical functions, making it [deterministic](https://en.wikipedia.org/wiki/Deterministic_algorithm) and [functional-as-in-programming](https://en.wikipedia.org/wiki/Functional_programming). Such strong guarantees require an operating protocol, the [Nock virtual machine](https://urbit.org/reference/nock/definition), which will be persistent across hardware changes and always provide an upgrade path for necessary changes.

|

||||

Arvo is an axiomatic operating system which restricts itself to pure mathematical functions, making it [deterministic](https://en.wikipedia.org/wiki/Deterministic_algorithm) and [functional-as-in-programming](https://en.wikipedia.org/wiki/Functional_programming). Such strong guarantees require an operating protocol, the [Nock virtual machine](/reference/nock/definition), which will be persistent across hardware changes and always provide an upgrade path for necessary changes.

|

||||

|

||||

It's hard to write a purely functional operating system on hardware which doesn't make such guarantees, so Urbit OS uses a new language, Hoon, which compiles to Nock and hews to the necessary conceptual models for a platform like Urbit. [The Hoon overview](/reference/hoon/overview) covers more of the high-level design decisions behind the language, as does [developer ~rovnys-ricfer's explanation](https://urbit.org/blog/why-hoon/).

|

||||

|

||||

Hoon School introduces and explains the fundamental concepts you need in order to understand Hoon's semantics. It then introduces a number of key examples and higher-order abstractions which will make you a more fluent Hoon programmer.

|

||||

|

||||

Once you have completed Hoon School, you should work through the [Gall Guide](https://urbit.org/guides/core/app-school/intro) to learn how to build full applications on Urbit.

|

||||

Once you have completed Hoon School, you should work through [App School](/guides/core/app-school/1-intro) to learn how to build full applications on Urbit.

|

||||

|

||||

|

||||

## Environment Setup

|

||||

@ -46,7 +46,7 @@ Since live network identities (_liveships_) are finite, scarce, and valuable, mo

|

||||

|

||||

Two fakeships can communicate with each other on the same machine, but have no awareness of the broader Urbit network. We won't need to use this capability in Hoon School Live, but it will be helpful later when you start developing networked apps.

|

||||

|

||||

Before beginning, you'll need to get a development ship running and configure an appropriate editor. See the [Environment Setup](https://urbit.org/guides/additional/development/environment) guide for details.

|

||||

Before beginning, you'll need to get a development ship running and configure an appropriate editor. See the [Environment Setup](/guides/additional/development/environment) guide for details.

|

||||

|

||||

Once you have a `dojo>` prompt, the system is ready to go and waiting on input.

|

||||

|

||||

|

||||

@ -28,7 +28,7 @@ Hoon expressions can be either basic or complex. Basic expressions of Hoon are

|

||||

|

||||

The Urbit operating system hews to a conceptual model wherein each expression takes place in a certain context (the _subject_). While sharing a lot of practicality with other programming paradigms and platforms, Urbit's model is mathematically well-defined and unambiguously specified. Every expression of Hoon is evaluated relative to its subject, a piece of data that represents the environment, or the context, of an expression.

|

||||

|

||||

At its root, Urbit is completely specified by [Nock](https://urbit.org/reference/nock/definition), sort of a machine language for the Urbit virtual machine layer and event log. However, Nock code is basically unreadable (and unwriteable) for a human. [One worked example](https://urbit.org/reference/nock/example) yields, for decrementing a value by one, the Nock formula:

|

||||

At its root, Urbit is completely specified by [Nock](/reference/nock/definition), sort of a machine language for the Urbit virtual machine layer and event log. However, Nock code is basically unreadable (and unwriteable) for a human. [One worked example](/reference/nock/example) yields, for decrementing a value by one, the Nock formula:

|

||||

|

||||

```hoon

|

||||

[8 [1 0] 8 [1 6 [5 [0 7] 4 0 6] [0 6] 9 2 [0 2] [4 0 6] 0 7] 9 2 0 1]

|

||||

|

||||

@ -14,7 +14,7 @@ _This module will discuss how we can have confidence that a program does what it

|

||||

>

|

||||

> It's natural to feel fear of code; however, you must act as though you are able to master and change any part of it. To code courageously is to walk into any abyss, bring light, and make it right.

|

||||

>

|

||||

> (~wicdev-wisryt, [“Urbit Precepts” C1](https://urbit.org/blog/precepts))

|

||||

> (~wicdev-wisryt, [“Urbit Precepts” C1](/guides/additional/development/precepts))

|

||||

|

||||

When you produce software, how much confidence do you have that it does what you think it does? Bugs in code are common, but judicious testing can manifest failures so that the bugs can be identified and corrected. We can classify a testing regimen for Urbit code into a couple of layers: fences and unit tests.

|

||||

|

||||

|

||||

@ -477,7 +477,7 @@ We get a different value from the same generator between runs, something that is

|

||||

|

||||

## Scrying (In Brief)

|

||||

|

||||

A _peek_ or a _scry_ is a request to Arvo to tell you something about the state of part of the Urbit OS. Scries are used to determine the state of an agent or a vane. The [`.^` dotket](/reference/hoon/rune/dot#dotket) rune sends the scry request to a particular vane with a certain _care_ or type of scry. The request is then routed to a particular path in that vane. Scries are discussed in detail in [the App Guide](https://urbit.org/guides/core/app-school/10-scry). We will only briefly introduce them here as we can use them later to find out about Arvo's system state, such as file contents and agent state.

|

||||

A _peek_ or a _scry_ is a request to Arvo to tell you something about the state of part of the Urbit OS. Scries are used to determine the state of an agent or a vane. The [`.^` dotket](/reference/hoon/rune/dot#dotket) rune sends the scry request to a particular vane with a certain _care_ or type of scry. The request is then routed to a particular path in that vane. Scries are discussed in detail in [App School](/guides/core/app-school/10-scry). We will only briefly introduce them here as we can use them later to find out about Arvo's system state, such as file contents and agent state.

|

||||

|

||||

### `%c` Clay

|

||||

|

||||

|

||||

@ -237,7 +237,7 @@ Previously, we introduced the concept of a `%say` generator to produce a more ve

|

||||

|

||||

We use an `%ask` generator when we want to create an interactive program that prompts for inputs as it runs, rather than expecting arguments to be passed in at the time of initiation.

|

||||

|

||||

This section will briefly walk through an `%ask` generator to give you a taste of how they work. The [CLI app guide](https://urbit.org/guides/additional/hoon/cli-tutorial) walks through the libraries necessary for working with `%ask` generators in greater detail. We also recommend reading [~wicdev-wisryt's “Input and Output in Hoon”](https://urbit.org/blog/io-in-hoon) for an extended consideration of relevant input/output issues.

|

||||

This section will briefly walk through an `%ask` generator to give you a taste of how they work. The [CLI app guide](/guides/additional/hoon/cli-tutorial) walks through the libraries necessary for working with `%ask` generators in greater detail. We also recommend reading [~wicdev-wisryt's “Input and Output in Hoon”](https://urbit.org/blog/io-in-hoon) for an extended consideration of relevant input/output issues.

|

||||

|

||||

##### Tutorial: `%ask` Generator

|

||||

|

||||

|

||||

@ -140,7 +140,7 @@ Basically, one uses a `rule` on `[hair tape]` to yield an `edge`.

|

||||

|

||||

A substantial swath of the standard library is built around parsing for various scenarios, and there's a lot to know to effectively use these tools. **If you can parse arbitrary input using Hoon after this lesson, you're in fantastic shape for building things later.** It's worth spending extra effort to understand how these programs work.

|

||||

|

||||

There is a [full guide on parsing](https://urbit.org/guides/additional/hoon/parsing) which goes into more detail than this quick overview.

|

||||

There is a [full guide on parsing](/guides/additional/hoon/parsing) which goes into more detail than this quick overview.

|

||||

|

||||

### Scanning Through a `tape`

|

||||

|

||||

@ -310,7 +310,7 @@ However, to parse iteratively, we need to use the [`++knee`]() function, which t

|

||||

|-(;~(plug prn ;~(pose (knee *tape |.(^$)) (easy ~))))

|

||||

```

|

||||

|

||||

There is an example of a calculator [in the docs](https://urbit.org/guides/additional/hoon/parsing#recursive-parsers) that's worth a read. It uses `++knee` to scan in a set of numbers at a time.

|

||||

There is an example of a calculator [in the parsing guide](/guides/additional/hoon/parsing#recursive-parsers) that's worth a read. It uses `++knee` to scan in a set of numbers at a time.

|

||||

|

||||

```hoon

|

||||

|= math=tape

|

||||

|

||||

28

content/reference/additional/dist/_index.md

vendored

@ -1,28 +0,0 @@

+++
title = "Distribution"
weight = 900
sort_by = "weight"
insert_anchor_links = "right"
+++

Developer documentation for desk/app distribution and management.

## [Overview](/guides/additional/dist/dist)

An overview of desk/app distribution and management.

## [Docket Files](/guides/additional/dist/docket)

Documentation of `desk.docket` files.

## [Glob](/guides/additional/dist/glob)

Documentation of `glob`s (client bundles).

## [Guide](/guides/additional/dist/guide)

A walkthrough of creating, installing and publishing a new desk with a tile and front-end.

## [Dojo Tools](/guides/additional/dist/tools)

Documentation of useful generators for managing and distributing desks.

104

content/reference/additional/dist/dist.md

vendored

@ -1,104 +0,0 @@

+++
title = "Overview"
weight = 1
+++

Urbit allows peer-to-peer distribution and installation of applications. A user can click on a link to an app hosted by another ship to install that app. The homescreen interface lets users manage their installed apps and launch their interfaces in new tabs.

This document describes the architecture of Urbit's app distribution system. For a walkthrough of creating and distributing an app, see the [`Guide`](/guides/additional/dist/guide) document.

## Architecture

The unit of software distribution is the desk. A desk is a lot like a git branch, but full of typed files, and designed to work with the Arvo kernel. In addition to files full of source code, a desk specifies the Kelvin version of the kernel that it's expecting to interact with, and it includes a manifest file describing which of the Gall agents it defines should be run by default.

Every desk is self-contained: the result of validating its files and building its agents is a pure function of its contents and the code in the specified Kelvin version of the kernel. A desk on one ship will build into the same files and programs, noun for noun, as on any other ship.

This symmetry is broken during agent installation, which can emit effects that might trigger other actions that cause the Arvo event to fail and be rolled back. An agent can ask the kernel to kill the Arvo event by using the new `%pyre` effect. Best practice, though, is for no desk to have a hard dependency on another desk.

If you're publishing an app that requires another app to be installed in order to function, the best practice is to check in `+on-init` for the presence of that other app's desk. If it's not installed, your app should display a message to the user with a link to the app they need to install. App-install links are well supported in Landscape, a suite of user-facing applications developed by Tlon.

For the moment, every live desk must have the same Kelvin version as the kernel. Future kernels that know how to maintain backward compatibility with older kernels will also allow older desks, but no commitment has yet been made to maintain backward compatibility across kernel versions, so for the time being, app developers should expect to update their apps accordingly.

Each desk defines its own filetypes (called `mark`s), in its `/mar` folder. There are no longer shared system marks that all userspace code knows, nor common libraries in `/lib` or `/sur` — each desk is completely self-contained.

It's common for a desk to want to use files that were originally defined in another desk, so that it can interact with agents on that desk. The convention is that if I'm publishing an app that I expect other devs to build client apps for (on other desks), I split out a "dev desk" containing just the external interface to my desk. Typically, both my app desk and clients' app desks will sync from this dev desk.

Tlon has done this internally. Most desks will want to sync the `%base-dev` desk so they can easily interact with the kernel and system apps in the `%base` desk. The `%base` desk includes agents such as `%dojo` and `%hood` (with Kiln as an informal sub-agent of `%hood` that manages desk installations).

A "landscape app", i.e. a desk that defines a tile that the user can launch from the home screen, should also sync from the `%garden-dev` desk. This desk includes the versioned `%docket-0` mark, which the app needs in order to include a `/desk/docket-0` file.

The `%docket` agent reads the `/desk/docket-0` file to display an app tile on the home screen and hook up other front-end functionality, such as downloading the app's client bundle ([glob](/guides/additional/dist/glob)). Docket is a new agent, in the `%garden` desk, that manages app installations. Docket serves the home screen, downloads client bundles, and communicates with Kiln to configure the apps on your system.

For those of you familiar with the old `%glob` and `%file-server` agents, they have now been replaced by Docket.

### Anatomy of a Desk

Desks still contain helper files in `/lib` and `/sur`, generators in `/gen`, marks in `/mar`, threads in `/ted`, tests in `/tests`, and agents in `/app`. In addition, desks now also contain these files:

```
/sys/kelvin      :: Kernel kelvin, e.g. [%zuse 418]
/desk/bill       :: (optional, read by Kiln) list of agents to run
/desk/docket-0   :: (optional, read by Docket) app metadata
/desk/ship       :: (optional, read by Docket) ship of original desk publisher, e.g. ~zod
```

Only the `%base` desk contains a `/sys` directory with the standard library, zuse, Arvo code and vanes. All other desks simply specify the kernel version with which they're compatible in the `/sys/kelvin` file.
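
To give a feel for how little the `/sys/kelvin` file contains, here is a minimal Python sketch that parses the `[%zuse 418]` form shown above. The helper name `parse_kelvin` and the regular expression are assumptions made for this illustration; this is not how Clay or Kiln actually read the file.

```python
import re

# Hypothetical helper: matches the single-cell form "[%name number]", e.g. "[%zuse 418]".
_KELVIN_RE = re.compile(r"^\[%([a-z][a-z0-9-]*)\s+(\d+)\]$")


def parse_kelvin(text):
    """Return (name, version) from the contents of a sys.kelvin file."""
    match = _KELVIN_RE.match(text.strip())
    if match is None:
        raise ValueError(f"not a kelvin cell: {text!r}")
    return match.group(1), int(match.group(2))


print(parse_kelvin("[%zuse 418]\n"))  # ('zuse', 418)
```

Because Kelvin versions count down towards zero, a numerically smaller value denotes a newer kernel.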

### Updates

The main idea is that an app should only ever be run by a kernel that knows how to run it. For now, since there are not yet kernels that know how to run apps designed for an older kernel, this constraint boils down to ensuring that all live desks have the same kernel Kelvin version as the running kernel itself.

To upgrade your kernel to a new version, you need to make a commit to the `%base` desk. Clay will then check if any files in `/sys` changed in this commit. If so, Clay sends the new commit to Arvo, which decides if it needs to upgrade (or upgrade parts of itself, such as a vane). After Arvo upgrades (or decides not to), it wakes up Clay, which finalizes the commit to the `%base` desk and notifies the rest of the system.

That's the basic flow for upgrading the kernel. However, some kernel updates also change the Kelvin version. If the user has also installed apps, those apps are designed to work with the old Kelvin, so they won't work with the new Kelvin — at least, not at the commit that's currently running.

Kiln, part of the system app `%hood` in the `%base` desk, manages desk installations, including the `%base` desk. It can install an app in two ways: a local install, sourced from a desk on the user's machine, or a remote install, which downloads a desk from another ship. Both are performed using the same generator, `|install`.

A remote install syncs an upstream desk into a local desk by performing a merge into the local desk whenever the upstream desk changes.

The Kelvin update problem is especially thorny for remote installs, which are the most common. By default, a planet has its `%base` desk synced from its sponsor's `%kids` desk, and it will typically have app desks synced from their publishers' ships.

Kiln listens (through Clay, which knows how to query remote Clays) for new commits on a remote-installed app's upstream ship and desk. When Clay hears about a new commit, it downloads the files and stores them as a "foreign desk", without validating or building them. It also tells Kiln.

When Kiln learns of these new foreign files, it reads the new `/sys/kelvin`. If it's the same as the live kernel's, Kiln asks Clay to merge the new files into the local desk where the app is installed. If the new foreign Kelvin is further ahead (closer to zero) than the kernel's, Kiln does not merge it into the local desk yet. Instead, it enqueues it.

Later, when Kiln hears of a new kernel Kelvin version on the upstream `%base` desk, it checks whether all the other live desks have a commit enqueued at that Kelvin. If so, it updates `%base` and then all the other desks, in one big Arvo event. This brings the system from fully at the old Kelvin, to fully at the new Kelvin, atomically — if any part of that fails, the Arvo event will abort and be rolled back, leaving the system back fully at the old Kelvin.

If not all live desks have an enqueued commit at the new kernel Kelvin, then Kiln notifies its clients that a kernel update is blocked on a set of desks. Docket, listening to Kiln, presents the user with a choice: either dismiss the notification and keep the old kernel, or suspend the blocking desks and apply the kernel update.
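
The merge, enqueue and blocked-upgrade rules described above can be modelled in a few lines of Python. This is only a toy model for reasoning about the flow, not Kiln's implementation: the `Desk` class, the function names and the `"ignore"` branch (for a foreign Kelvin older than the kernel's, a case the text does not cover) are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Desk:
    name: str
    kelvin: int                                # Kelvin of the commit currently live
    queued: set = field(default_factory=set)   # Kelvins of enqueued upstream commits


def on_foreign_commit(desk, new_kelvin, kernel_kelvin):
    """Decide what to do with a newly downloaded upstream commit."""
    if new_kelvin == kernel_kelvin:
        return "merge"                  # same Kelvin as the live kernel: merge now
    if new_kelvin < kernel_kelvin:      # Kelvin counts down, so lower means newer
        desk.queued.add(new_kelvin)
        return "enqueue"
    return "ignore"                     # assumption: the text does not cover this case


def try_kernel_upgrade(desks, new_kernel_kelvin):
    """Upgrade every desk together, or report which desks block the upgrade."""
    blockers = [d.name for d in desks if new_kernel_kelvin not in d.queued]
    if blockers:
        return False, blockers          # nothing is changed: all-or-nothing
    for d in desks:
        d.queued.discard(new_kernel_kelvin)
        d.kelvin = new_kernel_kelvin
    return True, []


hello, other = Desk("hello", 418), Desk("other", 418)
on_foreign_commit(hello, 417, kernel_kelvin=418)
print(try_kernel_upgrade([hello, other], 417))   # (False, ['other'])
on_foreign_commit(other, 417, kernel_kelvin=418)
print(try_kernel_upgrade([hello, other], 417))   # (True, [])
```

In this model the blocked case leaves every desk untouched, which mirrors the all-or-nothing behaviour of the single Arvo event.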

Suspending a desk turns off all its agents, saving their states in Gall. If there are no agents running from a desk, that desk doesn't force the kernel to be at the same Kelvin version. It's just inert data. If a later upstream update allows this desk to be run with a newer kernel, the user can revive the desk, and the system will migrate the old state into the new agent.

### Managing Apps and Desks in Kiln

Turning agents on and off is managed declaratively, rather than imperatively. Kiln maintains state for each desk about which agents should be forced on and which should be forced off. The set of running agents is now a function of the desk's `/desk/bill` manifest file and that user configuration state in Kiln. This means starting or stopping an agent is idempotent, both in Kiln and Gall.
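
One way to picture this declarative model is as a pure function from the bill and the user's overrides to a set of agents. The Python sketch below is an illustration only: the function names, the agent names, and the rule that a forced-off entry wins over everything else are assumptions, not Kiln's actual logic.

```python
def running_agents(bill, forced_on=(), forced_off=()):
    """Compute the set of agents that should be running for one desk."""
    # Assumption: a forced-off entry wins over both the bill and a forced-on entry.
    return (set(bill) | set(forced_on)) - set(forced_off)


def force_off(state, agent):
    """Record that an agent should be off; repeating the call changes nothing."""
    return {"on": state["on"] - {agent}, "off": state["off"] | {agent}}


bill = ["hello", "hello-sync"]          # hypothetical agent names
state = force_off({"on": set(), "off": set()}, "hello-sync")
state = force_off(state, "hello-sync")  # idempotent: same state as after one call
print(sorted(running_agents(bill, state["on"], state["off"])))  # ['hello']
```

Because the running set is recomputed from configuration rather than toggled step by step, issuing the same request twice cannot leave the system in a different state.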

For details of the generators for managing desks and agents in Kiln, see the [`Dojo Tools`](/guides/additional/dist/tools) document.

### Landscape apps

It's possible to create and distribute desks without a front-end, but typically you'll want to distribute an app with a user interface. Such an app has two primary components:

- Gall agents and associated backend code which reside in the desk.
- A client bundle called a [`glob`](/guides/additional/dist/glob), which contains the front-end files like HTML, CSS, JS, images, and so forth.

When a desk is installed, Kiln will start up the Gall agents in the `desk.bill` manifest, and the `%docket` agent will read the `desk.docket-0` file. This file will specify the name of the app, various metadata, the appearance of the app's tile in the homescreen, and the source of the `glob` so it can serve the interface. For more details of the docket file, see the [Docket File](/guides/additional/dist/docket) document.

### Globs

A glob is kept separate from Clay because Clay is a revision-controlled system. As in most revision control systems, deleting data from it is nontrivial because newer commits reference older ones. If Clay grows the ability to delete data, perhaps glob data could be moved into it. Until then, since client bundles tend to be updated frequently, it's best practice not to put your glob in your app host ship's Clay at all, so that it doesn't fill up your ship's "loom" memory arena.

If the glob is to be served over Ames, there is an HTTP-based glob uploader that allows you to use a web form to upload a folder into your ship, which will convert the folder to a glob and link to it in your app desk's docket manifest file.

Note that serving a glob over Ames might increase the install time for your app, since Ames is currently pretty slow compared to HTTP — but being able to serve a glob from your ship allows you to serve your whole app, both server-side and client-side, without setting up a CDN or any other external web tooling. Your ship can do it all on its own.

For further details of globs, see the [Glob](/guides/additional/dist/glob) document.

## Sections

- [Glob](/guides/additional/dist/glob) - Documentation of `glob`s (client bundles).

- [Docket Files](/guides/additional/dist/docket) - Documentation of docket files.

- [Guide](/guides/additional/dist/guide) - A walkthrough of creating, installing and publishing a new desk with a tile and front-end.

- [Dojo Tools](/guides/additional/dist/tools) - Documentation of useful generators for managing and distributing desks.

295

content/reference/additional/dist/docket.md

vendored

@ -1,295 +0,0 @@

+++
title = "Docket File"
weight = 3
+++

The docket file sets various options for desks with a tile and (usually) a browser-based front-end of some kind. Mainly it configures the appearance of an app's tile, the source of its [glob](/guides/additional/dist/glob), and some additional metadata.

The docket file is read by the `%docket` agent when a desk is `|install`ed. The `%docket` agent will fetch the glob if applicable and create the tile as specified on the homescreen. If the desk is published with `:treaty|publish`, the information specified in the docket file will also be displayed for others who are browsing apps to install on your ship.

The docket file is _optional_ in the general case. If it is omitted, however, the app cannot have a tile in the homescreen, nor can it be published with the `%treaty` agent, so others will not be able to browse for it from their homescreens.

The docket file must be named `desk.docket-0`. The `%docket` `mark` is versioned to facilitate changes down the line, so the `-0` suffix may be incremented in the future.

The file must contain a `hoon` list with a series of clauses. The clauses are defined in `/sur/docket.hoon` as:

```hoon
+$  clause
  $%  [%title title=@t]
      [%info info=@t]
      [%color color=@ux]
      [%glob-http url=cord hash=@uvH]
      [%glob-ames =ship hash=@uvH]
      [%image =url]
      [%site =path]
      [%base base=term]
      [%version =version]
      [%website website=url]
      [%license license=cord]
  ==
```

The `%image` clause is optional. Exactly one of `%site`, `%glob-http` or `%glob-ames` is required. All other clauses are mandatory.
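
These rules are simple enough to check mechanically. The following Python sketch models a docket file as a list of clause tags and reports any violations. It is an assumption-laden illustration: the function name `check_clauses` is hypothetical, it looks only at which tags are present (not at their values or at duplicates), and it is not how the `%docket` agent validates files.

```python
REQUIRED = {"title", "info", "color", "base", "version", "website", "license"}
SOURCES = {"site", "glob-http", "glob-ames"}   # exactly one of these must appear
OPTIONAL = {"image"}


def check_clauses(tags):
    """Return a list of problems for a list of clause tags; empty means valid."""
    tags = list(tags)
    problems = []
    for tag in sorted(set(tags) - REQUIRED - SOURCES - OPTIONAL):
        problems.append(f"unknown clause: {tag}")
    for tag in sorted(REQUIRED - set(tags)):
        problems.append(f"missing required clause: {tag}")
    sources = [t for t in tags if t in SOURCES]
    if len(sources) != 1:
        problems.append(f"expected exactly one source clause, found {len(sources)}")
    return problems


ok = ["title", "info", "color", "glob-ames", "base", "version", "license", "website"]
print(check_clauses(ok))                          # []
print(check_clauses(ok + ["site"]))               # one problem: two source clauses
```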

Here's what a typical docket file might look like:

```hoon
:~
  title+'Foo'
  info+'An app that does a thing.'
  color+0xf9.8e40
  glob-ames+[~zod 0v0]
  image+'https://example.com/tile.svg'
  base+'foo'
  version+[0 0 1]
  license+'MIT'
  website+'https://example.com'
==
```

Details of each clause and their purpose are described below.

---

## `%title`

_required_

The `%title` field specifies the name of the app. The title will be the name shown on the app's tile, as well as the name of the app when others search for it.

#### Type

```hoon
[%title title=@t]
```

#### Example

```hoon
title+'Bitcoin'
```

---

## `%info`

_required_

The `%info` field is a brief summary of what the app does. It will be shown as the subtitle in _App Info_.

#### Type

```hoon
[%info info=@t]
```

#### Example

```hoon
info+'A Bitcoin Wallet that lets you send and receive Bitcoin directly to and from other Urbit users'
```

---

## `%color`

_required_

The `%color` field specifies the color of the app tile as an `@ux`-formatted hex value.

#### Type

```hoon
[%color color=@ux]
```

#### Example

```hoon
color+0xf9.8e40
```
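
Hoon prints `@ux` values with a dot separating groups of hex digits, so `0xf9.8e40` is the same number as `0xf98e40`. As a hedged illustration, the Python sketch below converts such a literal into a CSS-style color string. The function name `ux_to_css` is hypothetical, and treating the value as a 24-bit RGB color is an assumption based on the example above rather than on the `%docket` source.

```python
def ux_to_css(ux):
    """Convert an @ux literal such as '0xf9.8e40' into a '#rrggbb' string."""
    if not ux.startswith("0x"):
        raise ValueError(f"not an @ux literal: {ux!r}")
    value = int(ux[2:].replace(".", ""), 16)   # drop the digit-group separators
    if value > 0xFFFFFF:
        raise ValueError(f"does not fit in 24-bit RGB: {ux!r}")
    return f"#{value:06x}"


print(ux_to_css("0xf9.8e40"))  # #f98e40
```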

---

## `%glob-http`

_exactly one of either this, [glob-ames](#glob-ames) or [site](#site) is required_

The `%glob-http` field specifies the URL and hash of the app's [glob](/guides/additional/dist/glob) if it is distributed via HTTP.

#### Type

```hoon
[%glob-http url=cord hash=@uvH]
```

#### Example

```hoon
glob-http+['https://example.com/glob-0v1.s0me.h4sh.glob' 0v1.s0me.h4sh]
```

---

## `%glob-ames`

_exactly one of either this, [glob-http](#glob-http) or [site](#site) is required_

The `%glob-ames` field specifies the ship and hash of the app's [glob](/guides/additional/dist/glob) if it is distributed from a ship over Ames. If the glob will be distributed from our ship, the hash can initially be `0v0` as it will be overwritten with the hash produced by the [Globulator](/guides/additional/dist/glob#globulator).

#### Type

```hoon
[%glob-ames =ship hash=@uvH]
```

#### Example

```hoon
glob-ames+[~zod 0v0]
```

|

||||

|

||||

---

|

||||

|

||||

## `%site`

|

||||

|

||||

_exactly one of either this, [glob-ames](#glob-ames) or [glob-http](#glob-http) is required_

|

||||

|

||||

It's possible for an app to handle HTTP requests from the client directly rather than with a separate [glob](/guides/additional/dist/glob). In that case, the `%site` field specifies the `path` of the Eyre endpoint the app will bind. If `%site` is used, clicking the app's tile will simply open a new tab with a GET request to the specified Eyre endpoint.

|

||||

|

||||

For more information on direct HTTP handling with a Gall agent or generator, see the [Eyre Internal API Reference](/reference/arvo/eyre/tasks) documentation.

|

||||

|

||||

#### Type

|

||||

|

||||

```hoon

|

||||

[%site =path]

|

||||

```

|

||||

|

||||

#### Example

|

||||

|

||||

```hoon

|

||||

site+/foo/bar

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

## `%image`

|

||||

|

||||

_optional_

|

||||

|

||||

The `%image` field specifies the URL of an image to be displayed on the app's tile. This field is optional and may be omitted entirely.

|

||||

|

||||

The given image will be displayed on top of the [color](#color)ed tile. The app [title](#title) (and hamburger menu upon hover) will be displayed on top of the given image, in small rounded boxes with the same background color as the main tile. The given image will be displayed at 100% of the width of the tile. The image's corners will be hidden by the rounded corners of the tile, so the image itself needn't have rounded corners. The tile is a perfect square, so if the image should occupy the whole tile, it should also be a perfect square. If the image should be a smaller icon in the center of the tile (like the Bitcoin tile), it should just have a square of transparent negative space around it.

|

||||

|

||||

It may be tempting to set the image URL as a root-relative path like `/apps/myapp/img/tile.svg` and bundle it in the glob. While this would work locally, it means the image would fail to load for those browsing apps to install. Therefore, the image should be hosted somewhere globally available.

|

||||

|

||||

#### Type

|

||||

|

||||

```hoon

|

||||

[%image =url]

|

||||

```

|

||||

|

||||

The `url` type is a simple `cord`:

|

||||

|

||||

```hoon

|

||||

+$ url cord

|

||||

```

|

||||

|

||||

#### Example

|

||||

|

||||

```hoon

|

||||

image+'http://example.com/icon.svg'

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

## `%base`

|

||||

|

||||

_required_

|

||||

|

||||

The `%base` field specifies the base of the URL path of the glob resources. In the browser, the path will begin with `/apps`, then the specified base, then the rest of the path to the particular glob resource like `http://localhost:8080/apps/my-base/index.html`. Note the `path`s of the glob contents themselves should not include this base element.

|

||||

|

||||

#### Type

|

||||

|

||||

```hoon

|

||||

[%base base=term]

|

||||

```

|

||||

|

||||

#### Example

|

||||

|

||||

```hoon

|

||||

base+'bitcoin'

|

||||

```
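To make the path layout concrete, here is a small sketch that composes the browser URL for a glob resource from the ship's web address, the `%base` value and the resource path inside the glob. The `glob_url` name is hypothetical and exists only for illustration:

```shell
# Hypothetical helper: build the URL a browser uses for a glob resource.
#   $1 - ship's web address, e.g. http://localhost:8080
#   $2 - the %base value from the docket file
#   $3 - path of the resource inside the glob (without the base)
glob_url() {
  # "${1%/}" tolerates a trailing slash on the address,
  # "${3#/}" tolerates a leading slash on the resource path
  printf '%s/apps/%s/%s\n' "${1%/}" "$2" "${3#/}"
}
```

Calling `glob_url http://localhost:8080 my-base index.html` yields the URL shown in the paragraph above.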

|

||||

|

||||

---

|

||||

|

||||

## `%version`

|

||||

|

||||

_required_

|

||||

|

||||

The `%version` field specifies the current version of the app. It's a triple of `@ud` numbers representing the major, minor and patch versions. In the client, `[1 2 3]` will be rendered as `1.2.3`. You would typically increase the appropriate number each time you publish a change to the app.

|

||||

|

||||

#### Type

|

||||

|

||||

```hoon

|

||||

[%version =version]

|

||||

```

|

||||

|

||||

The `version` type is just a triple of numbers:

|

||||

|

||||

```hoon

|

||||

+$ version

|

||||

[major=@ud minor=@ud patch=@ud]

|

||||

```

|

||||

|

||||

#### Example

|

||||

|

||||

```hoon

|

||||

version+[0 0 1]

|

||||

```
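If the version is tracked elsewhere as a dotted string (a release tag, say), the clause can be generated from it. The following is only a sketch: `version_clause` is a name we've made up, and it assumes each component is a plain number below 1000, since larger `@ud` values need dot separators (`1.000`) that this helper does not produce:

```shell
# Hypothetical helper: turn "MAJOR.MINOR.PATCH" into a %version clause.
version_clause() {
  case $1 in
    ''|*[!0-9.]*|.*|*.|*..*)
      echo "expected MAJOR.MINOR.PATCH" >&2; return 1 ;;
  esac
  old_ifs=$IFS; IFS=.
  set -- $1                       # split on dots into $1 $2 $3
  IFS=$old_ifs
  [ "$#" -eq 3 ] || { echo "expected MAJOR.MINOR.PATCH" >&2; return 1; }
  printf 'version+[%s %s %s]\n' "$1" "$2" "$3"
}
```

For example, `version_clause 0.0.1` prints `version+[0 0 1]`, matching the example above.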

|

||||

|

||||

---

|

||||

|

||||

## `%website`

|

||||

|

||||

_required_

|

||||

|

||||

The `%website` field is for a link to a relevant website. This might be a link to the app's GitHub repo, company website, or whatever is appropriate. This field will be displayed when people are browsing apps to install.

|

||||

|

||||

#### Type

|

||||

|

||||

```hoon

|

||||

[%website website=url]

|

||||

```

|

||||

|

||||

The `url` type is a simple `cord`:

|

||||

|

||||

```hoon

|

||||

+$ url cord

|

||||

```

|

||||

|

||||

#### Example

|

||||

|

||||

```hoon

|

||||

website+'https://example.com'

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

## `%license`

|

||||

|

||||

_required_

|

||||

|

||||

The `%license` field specifies the license for the app in question. It would typically be a short name like `MIT`, `GPLv2`, or what have you. The field just takes a `cord` so any license can be specified.

|

||||

|

||||

#### Type

|

||||

|

||||

```hoon

|

||||

[%license license=cord]

|

||||

```

|

||||

|

||||

#### Example

|

||||

|

||||

```hoon

|

||||

license+'MIT'

|

||||

```

|

||||

101

content/reference/additional/dist/glob.md

vendored

@ -1,101 +0,0 @@

|

||||

+++

|

||||

title = "Glob"

|

||||

weight = 4

|

||||

+++

|

||||

|

||||

A `glob` contains the client bundle (client-side resources like HTML, JS, and CSS files) for a Landscape app distributed in a desk. Globs are managed separately from other files in desks because they often contain large files that frequently change, and would therefore bloat a ship's state if they were subject to Clay's revision control mechanisms.

|

||||

|

||||

The hash and source of an app's glob are defined in a desk's [docket file](/guides/additional/dist/docket). The `%docket` agent reads the docket file, obtains the glob from the specified source, and makes its contents available to the browser client. On a desk publisher's ship, if the glob is to be distributed over Ames, the glob is also made available to desk subscribers.

|

||||

|

||||

## The `glob` type

|

||||

|

||||

The `%docket` agent defines the type of a `glob` as:

|

||||

|

||||

```hoon

|

||||

+$ glob (map path mime)

|

||||

```

|

||||

|

||||

Given the following file hierarchy:

|

||||

|

||||

```

|

||||

foo

|

||||

├── css

|

||||

│ └── style.css

|

||||

├── img

|

||||

│ ├── favicon.png

|

||||

│ ├── foo.svg

|

||||

│ └── bar.svg

|

||||

├── index.html

|

||||

└── js

|

||||

└── baz.js

|

||||

```

|

||||

|

||||

...its `$glob` form would look like:

|

||||

|

||||

```hoon

|

||||

{ [p=/img/foo/svg q=[p=/image/svg+xml q=[p=0 q=0]]]

|

||||

[p=/css/style/css q=[p=/text/css q=[p=0 q=0]]]

|

||||

[p=/img/favicon/png q=[p=/image/png q=[p=0 q=0]]]

|

||||

[p=/js/baz/js q=[p=/application/javascript q=[p=0 q=0]]]

|

||||

[p=/img/bar/svg q=[p=/image/svg+xml q=[p=0 q=0]]]

|

||||

[p=/index/html q=[p=/text/html q=[p=0 q=0]]]

|

||||

}

|

||||

```

|

||||

|

||||

Note: The mime byte-length and data are 0 in this example because it was made with empty dummy files.
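The mapping from file names to `path` keys in the example above follows a simple pattern: the top-level folder is stripped and the file extension becomes the last path element. A rough sketch of that mapping is below. The `glob_path` name is our own, and the helper assumes each file has exactly one extension, since that is all the example shows:

```shell
# Hypothetical helper: map a file path relative to the globbed folder
# (e.g. img/foo.svg) to the path key seen in the $glob (e.g. /img/foo/svg).
glob_path() {
  rel=${1#./}        # drop a leading "./" as printed by find
  stem=${rel%.*}     # everything before the last dot
  ext=${rel##*.}     # everything after the last dot
  printf '/%s/%s\n' "$stem" "$ext"
}
```

So `glob_path img/foo.svg` prints `/img/foo/svg` and `glob_path index.html` prints `/index/html`, as in the `$glob` above.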

|

||||

|

||||

A glob may contain any number of files and folders in any kind of hierarchy. The one important thing is that an `index.html` file is present in its root. The `index.html` file is automatically served when the app is opened in the browser, and the app will fail to load if it is missing.
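Since a missing `index.html` is only noticed once the tile is clicked, it can be worth checking the folder before globbing it. This is a minimal sketch; `check_glob_dir` is a hypothetical name, not something shipped with Urbit:

```shell
# Hypothetical pre-flight check: succeed only if the folder to be
# globbed has an index.html file directly in its root.
check_glob_dir() {
  if [ -f "$1/index.html" ]; then
    return 0
  fi
  echo "missing $1/index.html" >&2
  return 1
}
```

It could be run as `check_glob_dir ./foo && echo ok` before uploading or globbing the folder.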

|

||||

|

||||

In addition to the `$glob` type, a glob can also be output to Unix with a `.glob` file extension for distribution over HTTP. This file simply contains a [`jam`](/reference/hoon/stdlib/2p#jam)med `$glob` structure.

|

||||

|

||||

## Docket file clause

|

||||

|

||||

The `desk.docket-0` file must include exactly one of the following clauses:

|

||||

|

||||

#### `site+/some/path`

|

||||

|

||||

If an app binds an Eyre endpoint and handles HTTP directly, for example with a [`%connect` task:eyre](/reference/arvo/eyre/tasks#connect), the `%site` clause is used, specifying the Eyre binding. In this case a glob is omitted entirely.

|

||||

|

||||

#### `glob-ames+[~zod 0vs0me.h4sh]`

|

||||

|

||||

If the glob is to be distributed over Ames, the `%glob-ames` clause is used, with a cell of the `ship` which has the glob and the `@uv` hash of the glob. If it's our ship, the hash can just be `0v0` and the glob can instead be created with the [Globulator](#globulator).

|

||||

|

||||

#### `glob-http+['https://example.com/some.glob' 0vs0me.h4sh]`

|

||||

|

||||

If the glob is to be distributed over HTTP, for example from an s3 instance, the `%glob-http` clause is used. It takes a cell of a `cord` with the URL serving the glob and the `@uv` hash of the glob.
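Because exactly one of these three clauses is allowed, a quick sanity check on the docket file can catch a forgotten or duplicated clause before committing. The sketch below is a line-based heuristic rather than a Hoon parser: it assumes one clause per line, as in the examples in this documentation, and `check_docket_source` is a hypothetical name:

```shell
# Hypothetical check: succeed only if the docket file contains exactly
# one %site, %glob-ames or %glob-http clause (one clause per line assumed).
check_docket_source() {
  # count lines that start, after optional whitespace and ":~", with a source clause
  n=$(grep -cE '^[[:space:]]*(:~[[:space:]]+)?(site|glob-ames|glob-http)\+' "$1")
  if [ "$n" -eq 1 ]; then
    return 0
  fi
  echo "expected exactly one source clause in $1, found $n" >&2
  return 1
}
```

Note that a `website+` clause is not counted, since the pattern only matches `site+` at the start of a clause.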

|

||||

|

||||

## Making a glob

|

||||

|

||||

There are a couple of different methods depending on whether the glob will be distributed over HTTP or Ames.

|

||||

|

||||

### Globulator

|

||||

|

||||

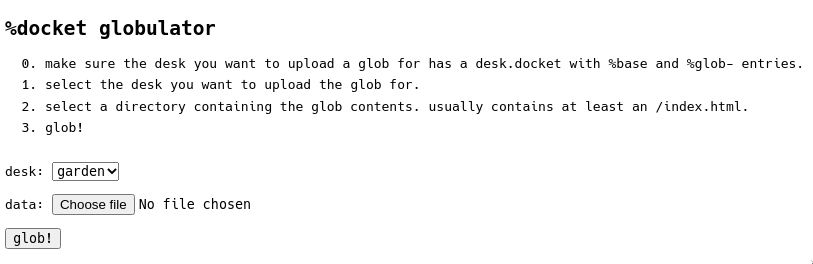

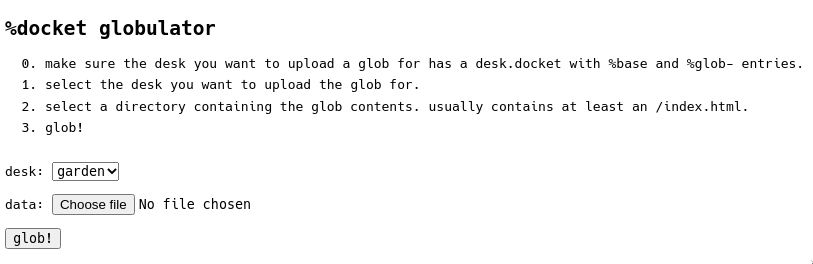

For globs distributed over Ames from our ship, the client bundle can be uploaded directly with `%docket`'s Globulator tool, which is available in the browser at `http[s]://[host]/docket/upload`. It looks like this:

|

||||

|

||||

|

||||

|

||||

Simply select the target desk, select the folder to be globulated, and hit `glob!`.

|

||||

|

||||

Note the target desk must have been `|install`ed before uploading its glob. When the desk is installed, `%docket` will print `docket: awaiting manual glob for %desk-name desk` in the terminal and wait for the upload. The hash in the `%glob-ames` clause of the docket file will be overwritten by the hash of the new glob. As a result, there's no need to specify the actual glob hash in `desk.docket-0`; you can just use any `@uv` like `0v0`. Once uploaded, the desk can then be published with `:treaty|publish %desk-name` and the glob will become available for download by subscribers.

|

||||

|

||||

### `-make-glob`

|

||||

|

||||

There's a different process for globs to be distributed over HTTP from a webserver rather than over Ames from a ship. For this purpose, the `%garden` desk includes a `%make-glob` thread. The thread takes a folder in a desk and produces a glob of the files it contains, which it then saves to Unix in a [`jam`](/reference/hoon/stdlib/2p#jam) file with a `.glob` extension.

|

||||

|

||||

To begin, you'll need to spin up a ship (typically a fake ship) and `|mount` a desk for which to add the files. In order for Clay to add the files, the desk must contain `mark` files in its `/mar` directory for all file extensions your folder contains. The `%garden` desk is a good bet because it includes `mark` files for `.js`, `.html`, `.png`, `.svg`, `.woff2` and a couple of others. If there's no desk with a mark for a particular file type you want included in your glob, you may need to add a new mark file. A very rudimentary mark file like the `png.hoon` mark will suffice.

|

||||

|

||||

With the desk mounted, add the folder to be globbed to the root of the desk in Unix. It's important it's in the root because the `%make-glob` thread will only strip the first level of the folder hierarchy.

|

||||

|

||||

Next, `|commit` the files to the desk, then run `-garden!make-glob %the-desk /folder-name`, where `%the-desk` is the desk containing the folder to be globbed and `/folder-name` is its name.

|

||||

|

||||

On Unix, if you look in `/path/to/pier/.urb/put`, you'll now see a file which looks like:

|

||||

|

||||

```

|

||||

glob-0v1.7vpqa.r8pn5.6t0s1.rhc7r.5e9vo.glob

|

||||

```

|

||||

|

||||

This file can be uploaded to your webserver and the `desk.docket-0` file of the desk you're publishing can be updated with:

|

||||

|

||||

```hoon

|

||||

glob-http+['https://s3.example.com/glob-0v1.7vpqa.r8pn5.6t0s1.rhc7r.5e9vo.glob' 0v1.7vpqa.r8pn5.6t0s1.rhc7r.5e9vo]

|

||||

```
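Since the hash is embedded in the file name, the clause can be derived from the file rather than typed by hand. The following sketch assumes the `glob-<hash>.glob` naming shown above and that the file is served from the root of the given URL; `glob_http_clause` is a name we've invented for illustration:

```shell
# Hypothetical helper: print a %glob-http clause for a .glob file.
#   $1 - base URL the file will be served from
#   $2 - path to the .glob file produced by the thread
glob_http_clause() {
  base_url=${1%/}                 # tolerate a trailing slash
  file=$(basename "$2")
  case $file in
    glob-0v*.glob) ;;
    *) echo "unexpected glob file name: $file" >&2; return 1 ;;
  esac
  hash=${file#glob-}              # strip the "glob-" prefix
  hash=${hash%.glob}              # strip the ".glob" extension
  printf "glob-http+['%s/%s' %s]\n" "$base_url" "$file" "$hash"
}
```

Running it with `https://s3.example.com` and the file above produces the same clause as the example.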

|

||||

281

content/reference/additional/dist/guide.md

vendored

@ -1,281 +0,0 @@

|

||||

+++

|

||||

title = "Guide"

|

||||

weight = 2

|

||||

+++

|

||||

|

||||

In this document we'll walk through an example of creating and publishing a desk that others can install. We'll create a simple "Hello World!" front-end with a "Hello" tile to launch it. For simplicity, the desk won't include an actual Gall agent, but we'll note everything necessary if there were one.

|

||||

|

||||

## Create desk

|

||||

|

||||

To begin, we'll need to clone the [urbit git repo](https://github.com/urbit/urbit) from the Unix terminal:

|

||||

|

||||

```

|

||||

[user@host ~]$ git clone https://github.com/urbit/urbit urbit-git

|

||||

```

|

||||

|

||||

Once that's done, we can navigate to the `pkg` directory in our cloned repo:

|

||||

|

||||

```

|

||||

[user@host ~]$ cd urbit-git/pkg

|

||||

[user@host pkg]$ ls .

|

||||

arvo btc-wallet garden grid interface npm webterm

|

||||

base-dev docker-image garden-dev herb landscape symbolic-merge.sh

|

||||

bitcoin ent ge-additions hs libaes_siv urbit

|

||||

```

|

||||

|

||||

Each desk defines its own `mark`s, in its `/mar` folder. There are no longer shared system marks that all userspace code knows, nor common libraries in `/lib` or `/sur`. Each desk is completely self-contained. This means any new desk will need a number of base files.

|

||||

|

||||

To make the creation of a new desk easier, `base-dev` and `garden-dev` contain symlinks to all `/sur`, `/lib` and `/mar` files necessary for interacting with the `%base` and `%garden` desks respectively. These dev desks can be copied and merged with the `symbolic-merge.sh` included.

|

||||

|

||||

Let's create a new `hello` desk:

|

||||

|

||||

```

|

||||

[user@host pkg]$ mkdir hello

|

||||

[user@host pkg]$ ./symbolic-merge.sh base-dev hello

|

||||

[user@host pkg]$ ./symbolic-merge.sh garden-dev hello

|

||||

[user@host pkg]$ cd hello

|

||||

[user@host hello]$ ls

|

||||

lib mar sur

|

||||

```

|

||||

|

||||

### `sys.kelvin`

|

||||

|

||||

Our desk must include a `sys.kelvin` file which specifies the kernel version it's compatible with. Let's create that:

|

||||

|

||||

```

|

||||

[user@host hello]$ echo "[%zuse 418]" > sys.kelvin

|

||||

[user@host hello]$ cat sys.kelvin

|

||||

[%zuse 418]

|

||||

```

|

||||

|

||||

### `desk.ship`

|

||||

|

||||

We can also add a `desk.ship` file to specify the original publisher of this desk. We'll try this on a fakezod so let's just add `~zod` as the publisher:

|

||||

|

||||

```

|

||||

[user@host hello]$ echo "~zod" > desk.ship

|

||||

[user@host hello]$ cat desk.ship

|

||||

~zod

|

||||

```

|

||||

|

||||

### `desk.bill`

|

||||

|

||||

If we had Gall agents in this desk which should be automatically started when the desk is installed, we'd add them to a `hoon` list in the `desk.bill` file. It would look something like this:

|

||||

|

||||

```hoon

|

||||

:~ %some-app

|

||||

%another

|

||||

==

|

||||

```

|

||||

|

||||

In this example we're not adding any agents, so we'll simply omit the `desk.bill` file.

|

||||

|

||||

### `desk.docket-0`

|

||||

|

||||

The final file we need is `desk.docket-0`. This one's more complicated, so we'll open it in our preferred text editor:

|

||||

|

||||

```

|

||||

[user@host hello]$ nano desk.docket-0

|

||||

```

|

||||

|

||||

In the text editor, we'll add the following:

|

||||

|

||||

```hoon

|

||||

:~ title+'Hello'

|

||||

info+'A simple hello world app.'

|

||||

color+0x81.88c9

|

||||

image+'https://media.urbit.org/guides/additional/dist/wut.svg'

|

||||

base+'hello'

|

||||

glob-ames+[~zod 0v0]

|

||||

version+[0 0 1]

|

||||

website+'https://urbit.org/guides/additional/dist/guide'

|

||||

license+'MIT'

|

||||

==

|

||||

```

|

||||

|

||||

You can refer to the [Docket File](/guides/additional/dist/docket) documentation for more details of what is required. In brief, the `desk.docket-0` file contains a `hoon` list of [clauses](/guides/additional/dist/docket) which configure the appearance of the app tile, the source of the [glob](/guides/additional/dist/glob), and some other metadata.

|

||||

|

||||

We've given the app a [`%title`](/guides/additional/dist/docket#title) of "Hello", which will be displayed on the app tile and will be the name of the app when others browse to install it. We've given the app tile a [`%color`](/guides/additional/dist/docket#color) of `#8188C9`, and also specified the URL of an [`%image`](/guides/additional/dist/docket#image) to display on the tile.

|

||||

|

||||

The [`%base`](/guides/additional/dist/docket#base) clause specifies the base URL path for the app. We've specified "hello" so it'll be `http://localhost:8080/apps/hello/...` in the browser. For the [glob](/guides/additional/dist/glob), we've used a clause of [`%glob-ames`](/guides/additional/dist/docket#glob-ames), which means the glob will be served from a ship over Ames, as opposed to being served over HTTP with a [`%glob-http`](/guides/additional/dist/docket#glob-http) clause or having an Eyre binding with a [`%site`](/guides/additional/dist/docket#site) clause. You can refer to the [glob](/guides/additional/dist/glob) documentation for more details of the glob options. In our case we've specified `[~zod 0v0]`. Since `~zod` is the fakeship we'll install it on, the `%docket` agent will await a separate upload of the `glob`, so we can just specify `0v0` here as it'll get overwritten later.

|

||||

|

||||

The [`%version`](/guides/additional/dist/docket#version) clause specifies the version as a triple of major version, minor version and patch version. The rest is just some additional informative metadata which will be displayed in _App Info_.

|

||||

|

||||

So let's save that to the `desk.docket-0` file and have a look at our desk:

|

||||

|

||||

```

|

||||

[user@host hello]$ ls

|

||||

desk.docket-0 desk.ship lib mar sur sys.kelvin

|

||||

```

|

||||

|

||||

That's everything we need for now.

|

||||

|

||||

## Install

|

||||

|

||||

Let's spin up a fakezod in which we can install our desk. By default a fakezod will be out of date, so we need to bootstrap with a pill from our urbit-git repo. The pills are stored in Git LFS and need to be pulled into our repo first:

|

||||

|

||||

```

|

||||

[user@host hello]$ cd ~/urbit-git

|

||||

[user@host urbit-git]$ git lfs install

|

||||

[user@host urbit-git]$ git lfs pull

|

||||

[user@host urbit-git]$ cd ~/piers/fake

|

||||

[user@host fake]$ urbit -F zod -B ~/urbit-git/bin/multi-brass.pill

|

||||

```

|

||||

|

||||

Once our fakezod is booted, we'll need to create a new `%hello` desk for our app and mount it. We can do this in the dojo like so:

|

||||

|

||||

```

|

||||

> |merge %hello our %base

|

||||

>=

|

||||

> |mount %hello

|

||||

>=

|

||||

```

|

||||

|

||||

Now, back in the Unix terminal, we should see the new desk mounted:

|

||||

|

||||

```

|

||||

[user@host fake]$ cd zod

|

||||

[user@host zod]$ ls

|

||||

hello

|

||||

```

|

||||

|

||||

Currently it's just a clone of the `%base` desk, so let's delete its contents:

|

||||

|

||||

```

|

||||

[user@host zod]$ rm -r hello/*

|

||||

```

|

||||

|

||||

Next, we'll copy in the contents of the `hello` desk we created earlier. We must use `cp -LR` to resolve all the symlinks:

|

||||

|

||||

```

|

||||

[user@host zod]$ cp -LR ~/urbit-git/pkg/hello/* hello/

|

||||

```

|

||||

|

||||

Back in the dojo we can commit the changes and install the desk:

|

||||

|

||||

```

|

||||

> |commit %hello

|

||||

> |install our %hello

|

||||

kiln: installing %hello locally

|

||||

docket: awaiting manual glob for %hello desk

|

||||

```

|

||||

|

||||

The `docket: awaiting manual glob for %hello desk` message is because our `desk.docket-0` file includes a [`%glob-ames`](/guides/additional/dist/docket#glob-ames) clause which specifies our ship as the source, so it's waiting for us to upload the glob.

|

||||

|

||||

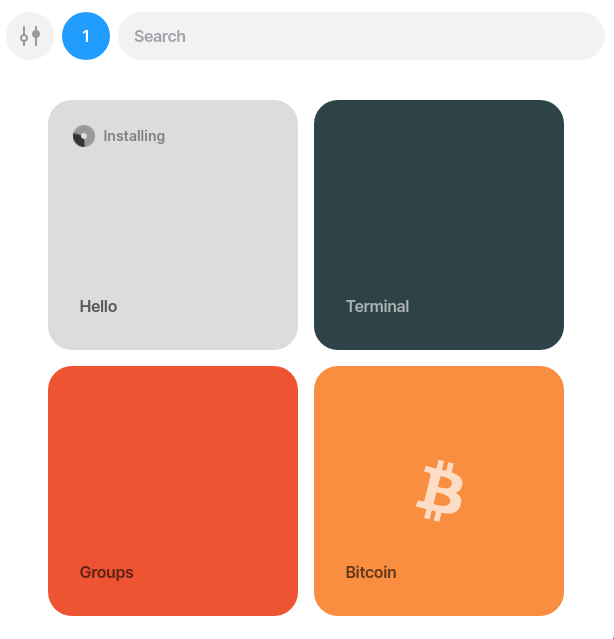

If we open a browser now, navigate to `http://localhost:8080` and log in with the default fakezod code `lidlut-tabwed-pillex-ridrup`, we'll see our tile has appeared, but it says "installing" with a spinner due to the missing glob:

|

||||

|

||||

|

||||

|

||||

## Create files for glob

|

||||

|

||||

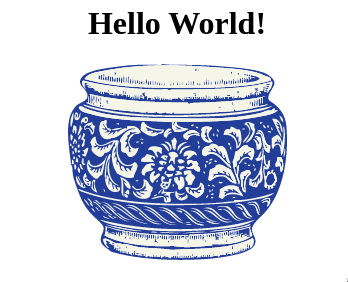

We'll now create the files for the glob. We'll use a very simple static HTML page that just displays "Hello World!" and an image. Typically we'd have a more complex JS web app that talked to apps on our ship through Eyre's channel system, but for the sake of simplicity we'll forgo that. Let's hop back in the Unix terminal:

|

||||

|

||||

```

|

||||

[user@host zod]$ cd ~

|

||||

[user@host ~]$ mkdir hello-glob

|

||||

[user@host ~]$ cd hello-glob

|

||||

[user@host hello-glob]$ mkdir img

|

||||

[user@host hello-glob]$ wget -P img https://media.urbit.org/guides/additional/dist/pot.svg

|

||||

[user@host hello-glob]$ tree

|

||||

.

|

||||

└── img

|

||||

└── pot.svg

|

||||

|

||||

1 directory, 1 file

|

||||

```

|

||||

|

||||

We've grabbed an image to use in our "Hello world!" page. The next thing we need to add is an `index.html` file in the root of the folder. The `index.html` file is mandatory; it's what will be loaded when the app's tile is clicked. Let's open our preferred editor and create it:

|

||||

|

||||

```

|

||||

[user@host hello-glob]$ nano index.html

|

||||

```

|

||||

|

||||

In the editor, paste in the following HTML and save it:

|

||||

|

||||

```html

|

||||

<!DOCTYPE html>

|

||||

<html>

|

||||

<head>

|

||||

<style>

|

||||

div {

|

||||

text-align: center;

|

||||

}

|

||||

</style>

|

||||

<title>Hello World</title>

|

||||

</head>

|

||||

<body>

|

||||

<div>

|

||||

<h1>Hello World!</h1>

|

||||

<img src="img/pot.svg" alt="pot" width="219" height="196" />

|

||||

</div>

|

||||

</body>

|

||||

</html>

|

||||

```

|

||||

|

||||

Our `hello-glob` folder should now look like this:

|

||||

|

||||

```

|

||||

[user@host hello-glob]$ tree

|

||||

.

|

||||

├── img

|

||||

│ └── pot.svg

|

||||

└── index.html

|

||||

|

||||

1 directory, 2 files

|

||||

```

|

||||

|

||||

## Upload to glob

|

||||

|

||||

We can now create a glob from the directory. To do so, navigate to `http://localhost:8080/docket/upload` in the browser. This will bring up the `%docket` app's [Globulator](/guides/additional/dist/glob#globulator) tool:

|

||||

|

||||

|

||||

|

||||

Simply select the `hello` desk from the drop-down, click `Choose file` and select the `hello-glob` folder in the file browser, then hit `glob!`.

|

||||

|

||||

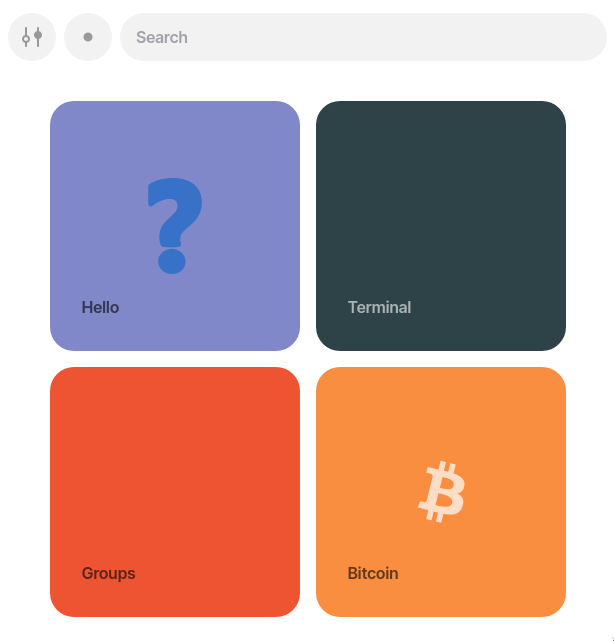

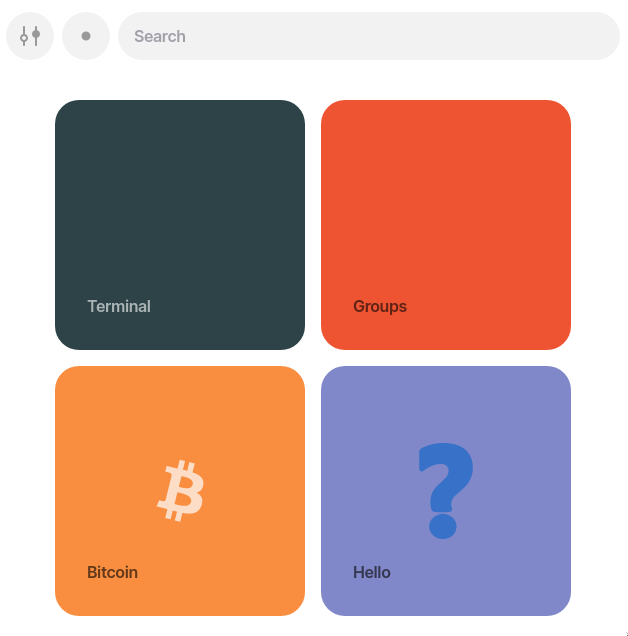

Now if we return to our ship's homescreen, we should see the tile looks as we specified in the docket file:

|

||||

|

||||

|

||||

|

||||

And if we click on the tile, it'll load the `index.html` in our glob:

|

||||

|

||||

|

||||

|

||||

Our app is working!

|

||||

|

||||

## Publish

|

||||

|

||||

The final step is publishing our desk with the `%treaty` agent so others can install it. To do this, there's a simple command in the dojo:

|

||||

|

||||

```

|

||||

> :treaty|publish %hello

|

||||

>=

|

||||

```

|

||||

|

||||

Note: For desks without a docket file (and therefore without a tile and glob), treaty can't be used. Instead you can make the desk public with `|public %desk-name`.

|

||||

|

||||

## Remote install

|

||||

|

||||

Let's spin up another fake ship so we can try installing it:

|

||||

|

||||

```

|

||||

[user@host hello-glob]$ cd ~/piers/fake

|

||||

[user@host fake]$ urbit -F bus

|

||||

```

|

||||

|

||||

Note: Desks without a docket file (and therefore without a tile and glob) cannot be installed through the web interface. Instead, remote users can install such a desk from the dojo with `|install ~our-ship %desk-name`.

|

||||

|

||||

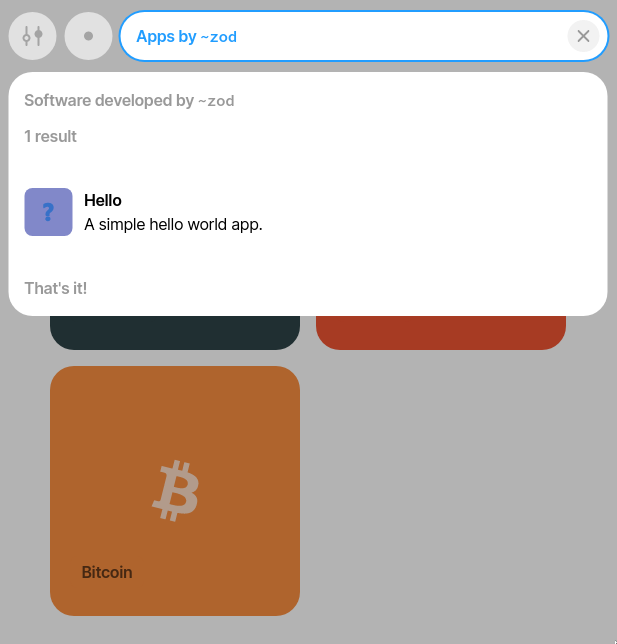

In the browser, navigate to `http://localhost:8081` and log in with `~bus`'s code `riddec-bicrym-ridlev-pocsef`. Next, type `~zod/` in the search bar, and it should pop up a list of `~zod`'s published apps, which in this case is our `Hello` app:

|

||||

|

||||

|

||||

|

||||

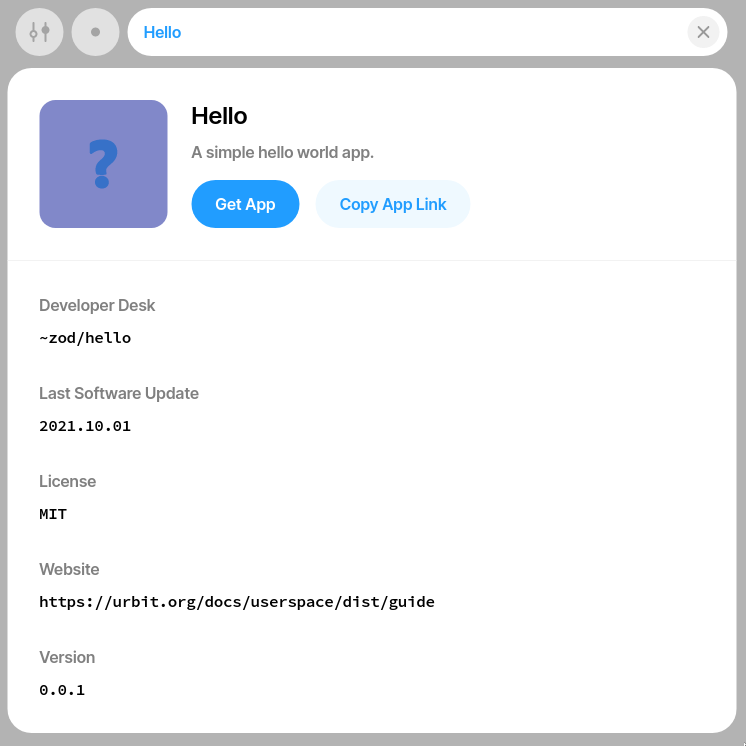

When we click on the app, it'll show some of the information from the clauses in the docket file:

|

||||

|

||||

|

||||

|

||||

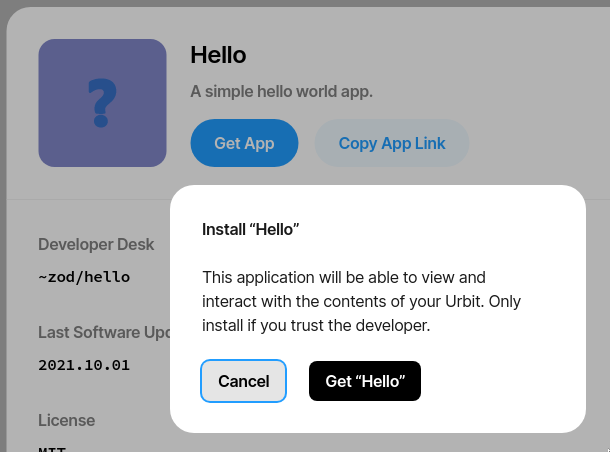

Click `Get App` and it'll ask if we want to install it:

|

||||

|

||||

|

||||

|

||||

Finally, click `Get "Hello"` and it'll be installed as a tile on `~bus` which can then be opened:

|

||||

|

||||

|

||||

341

content/reference/additional/dist/tools.md

vendored

@ -1,341 +0,0 @@

|

||||

+++

|

||||

title = "Dojo Tools"

|

||||

weight = 5

|

||||

+++

|

||||

|

||||

A number of generators are included for managing and viewing the status of desks and their agents. Each is documented below.

|

||||

|

||||

Note that some old generators have been replaced:

|

||||

|

||||

- `|start` - Replaced with [`|rein`](#rein).

|

||||

- `|ota` - Replaced with [`|install`](#install), [`|uninstall`](#uninstall), [`|pause`](#pause) and [`|resume`](#resume).

|

||||

- `|fade` - Replaced with [`|nuke`](#nuke).

|

||||

- `|doze` - Replaced with [`|rein`](#rein) or [`|suspend`](#suspend).

|

||||

- `|wake` - Replaced with [`|rein`](#rein) or [`|revive`](#revive).

|

||||

- `+trouble` - This still exists but is deprecated, and is now an alias for [`+vats`](#vats).

|

||||

|

||||

---

|

||||

|

||||

## `+vats`

|

||||

|

||||

Print out the status of each installed desk.

|

||||

|

||||

Desks in Clay which aren't installed will be omitted.

|

||||

|

||||

Fields:

|

||||

|

||||

- `/sys/kelvin` - The version of `%zuse` the desk is compatible with.

|

||||

- `base hash` - The merge base (common ancestor) between the desk and its upstream source.

|

||||

- `%cz hash` - The hash of the desk.

|

||||

- `app status` - May be `suspended` or `running`.

|

||||

- `force on` - The set of agents on the desk which have been manually started despite not being on the `desk.bill` manifest.

|

||||

- `force off` - The set of agents on the desk which have been manually stopped despite being on the `desk.bill` manifest.

|

||||

- `publishing ship` - The original publisher if the source ship is republishing the desk.

|

||||

- `updates` - May be `local`, `tracking` or `paused`. Local means it will receive updates via commits on the local ship. Tracking means it will receive updates from the `source ship`. Paused means it will not receive updates.

|

||||

- `source desk` - The desk on the `source ship`.

|

||||

- `source aeon` - The revision number of the desk on the `source ship`.

|

||||

- `pending updates` - Updates waiting to be applied due to incompatibility.

|

||||

|

||||

#### Arguments

|

||||

|

||||

None.

|

||||

|

||||

#### Examples

|

||||

|

||||

```

|

||||

> +vats

|

||||

%base

|

||||

/sys/kelvin: [%zuse 418]

|

||||

base hash: ~

|

||||

%cz hash: 0v6.2nqmu.oqm24.ighl6.n0gp9.s8res.feql1.dl8ap.isli3.jk0hu.acrd2

|

||||

app status: running

|

||||

force on: ~

|

||||

force off: ~

|

||||

publishing ship: ~

|

||||

updates: tracking

|

||||

source ship: ~zod

|

||||

source desk: %base

|

||||

source aeon: 3

|

||||

pending updates: ~

|

||||

::

|

||||

%garden

|

||||

/sys/kelvin: [%zuse 418]

|

||||

base hash: ~

|

||||

%cz hash: 0v1e.2h7hs.elq3g.1sdt7.qfga6.ganga.7p95j.aog44.8p5fe.kpr6v.7ai82

|

||||

app status: running

|

||||

force on: ~

|

||||

force off: ~

|

||||

publishing ship: ~

|

||||

updates: tracking

|

||||

source ship: ~zod

|

||||

source desk: %garden

|

||||

source aeon: 3

|

||||

pending updates: ~

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

## `+agents`

|

||||

|

||||

Print out the status of Gall agents on a desk.

|

||||

|

||||

Agents may either be `archived` or `running`. Nuked or unstarted agents which are not on the manifest are omitted.

|

||||

|

||||

#### Arguments

|

||||

|

||||

```

|

||||

desk

|

||||

```

|

||||

|

||||

#### Example

|

||||

|

||||

```

|

||||

> +agents %garden

|

||||

status: running %hark-system-hook

|

||||

status: running %treaty

|

||||

status: running %docket

|

||||

status: running %settings-store

|

||||

status: running %hark-store

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

## `|suspend`

|

||||

|

||||

Shut down all agents on a desk, archiving their states.

|

||||

|

||||

The tile in the homescreen (if it has one) will turn gray and say "Suspended" in the top-left corner. This generator does the same thing as selecting "Suspend" from an app tile's hamburger menu.

|

||||

|

||||

#### Arguments

|

||||

|

||||

```

|

||||

desk

|

||||

```

|

||||

|

||||

#### Examples

|

||||

|

||||

```

|

||||

|suspend %bitcoin

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

## `|revive`

|

||||

|

||||

Revive all agents on a desk, migrating archived states.

|

||||

|

||||

All agents specified in `desk.bill` which are suspended will be restarted. If updates to the agents have occurred since their states were archived, they'll be migrated with the state transition functions in the agent. This generator does the same thing as selecting "Resume App" from the app tile's hamburger menu.

|

||||

|

||||

#### Arguments

|

||||

|

||||

```

|

||||

desk

|

||||

```

|

||||

|

||||

#### Examples

|

||||

|

||||

```

|

||||

|revive %bitcoin

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

## `|install`

|

||||

|

||||

Install a desk, starting its agents and listening for updates.

|

||||

|

||||

If it's a remote desk we don't already have, it will be fetched. The agents started will be those specified in the `desk.bill` manifest. If it has a docket file, its tile will be added to the homescreen and its glob fetched. If we already have the desk, the source for updates will be switched to the ship specified.

|

||||

|

||||