
This repository is a visual cheatsheet on the main topics in backend development. All material is divided into chapters, each of which includes several topics. Each topic has three main parts:

- Visual part - various images, tables, and cheatsheets for better understanding (not available for every topic). All pictures and tables were made from scratch, specifically for this repository.

- Summary - A very brief summary with a list of key terms and concepts. The terms are hyperlinked to the appropriate section on Wikipedia or a similar reference resource.

- References to sources - resources where you can find complete information on a particular issue (they are hidden under a spoiler that opens when clicked). Where possible, the most authoritative sources are listed, or those that explain the topic in the simplest and most accessible language.

🤝 If you want to help the project, feel free to send your issues or pull requests.

🌙 For a better experience, enable the dark theme.


Network & Internet

The Internet is a worldwide system that connects computer networks from around the world into a single network for storing and transferring information. The Internet was originally developed for the military, but it soon spread to universities and then became available to private companies, which began organizing provider networks that offer Internet access to ordinary citizens. By early 2020, the number of Internet users exceeded 4.5 billion.


How the Internet works

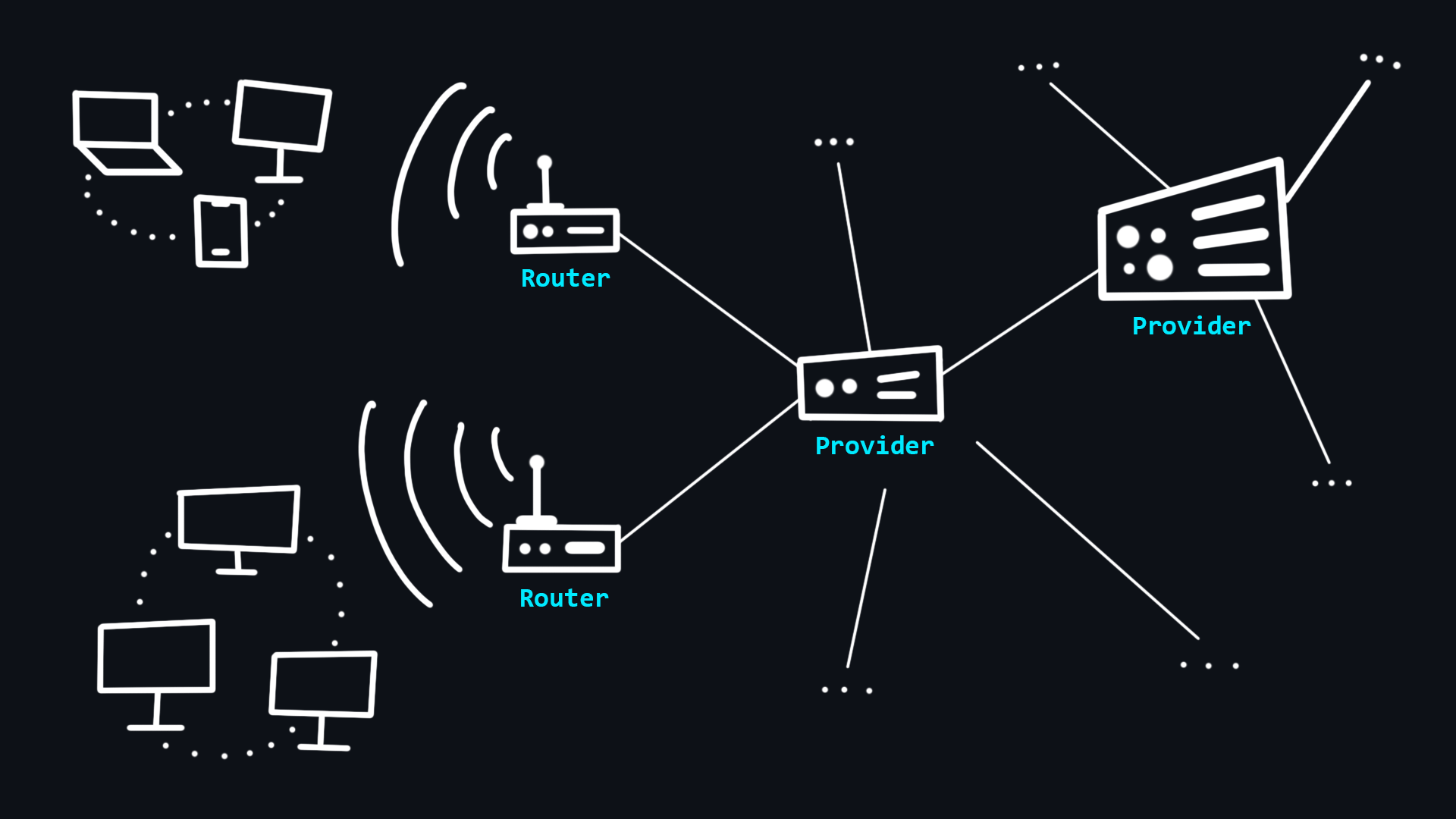

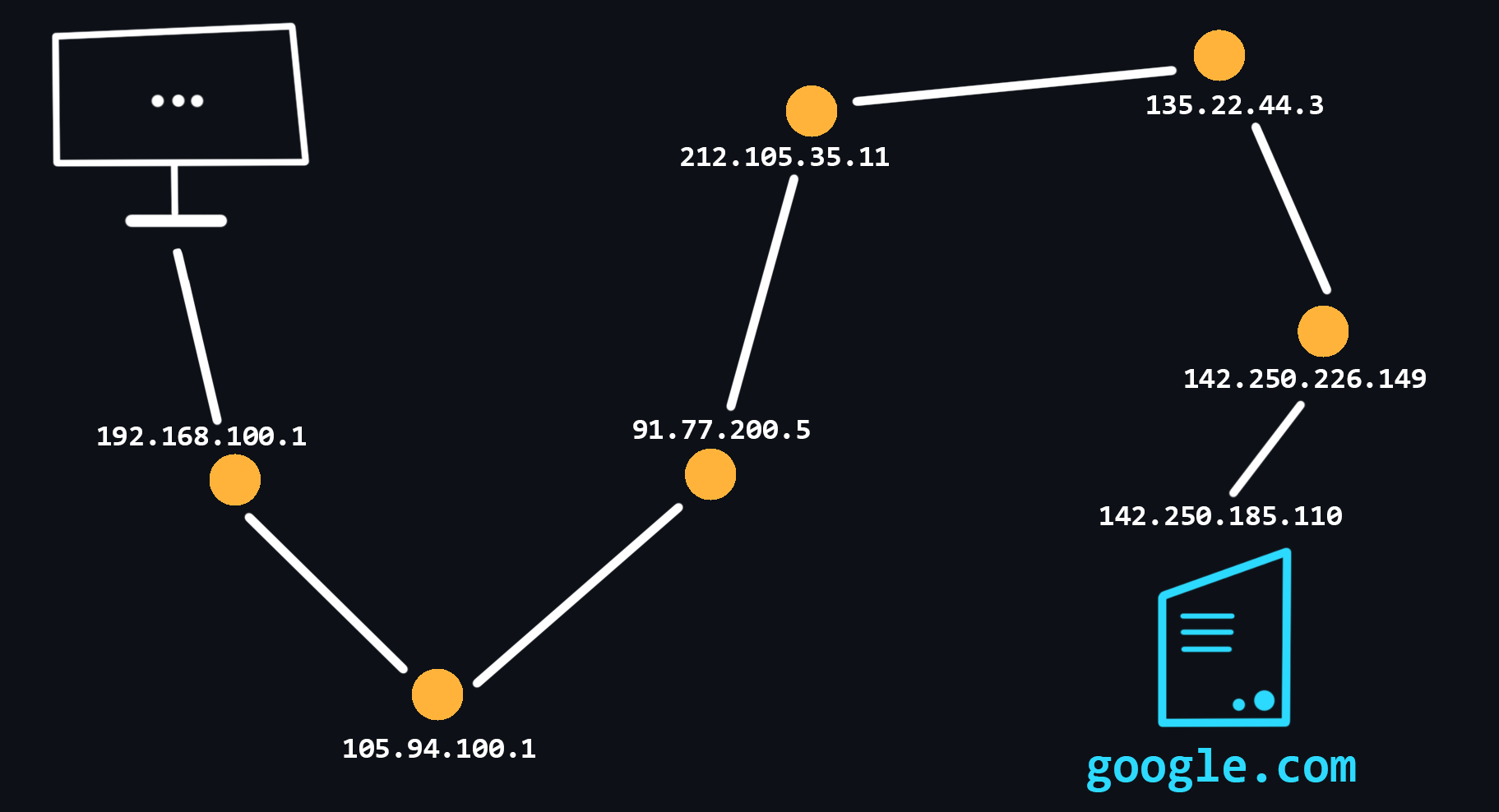

Your computer does not have direct access to the Internet. Instead, it has access to your local network, to which other devices are connected via a wired (Ethernet) or wireless (Wi-Fi) connection. Such a network is organized by a special small computer called a router, which connects you to your Internet Service Provider (ISP), which in turn is connected to other, higher-level ISPs. All these interconnected networks make up the Internet, and your messages always pass through several different networks before reaching the final recipient.

- Host

Any device connected to a network.

- Server

A special computer on the network that serves requests from other computers.

- Network topologies

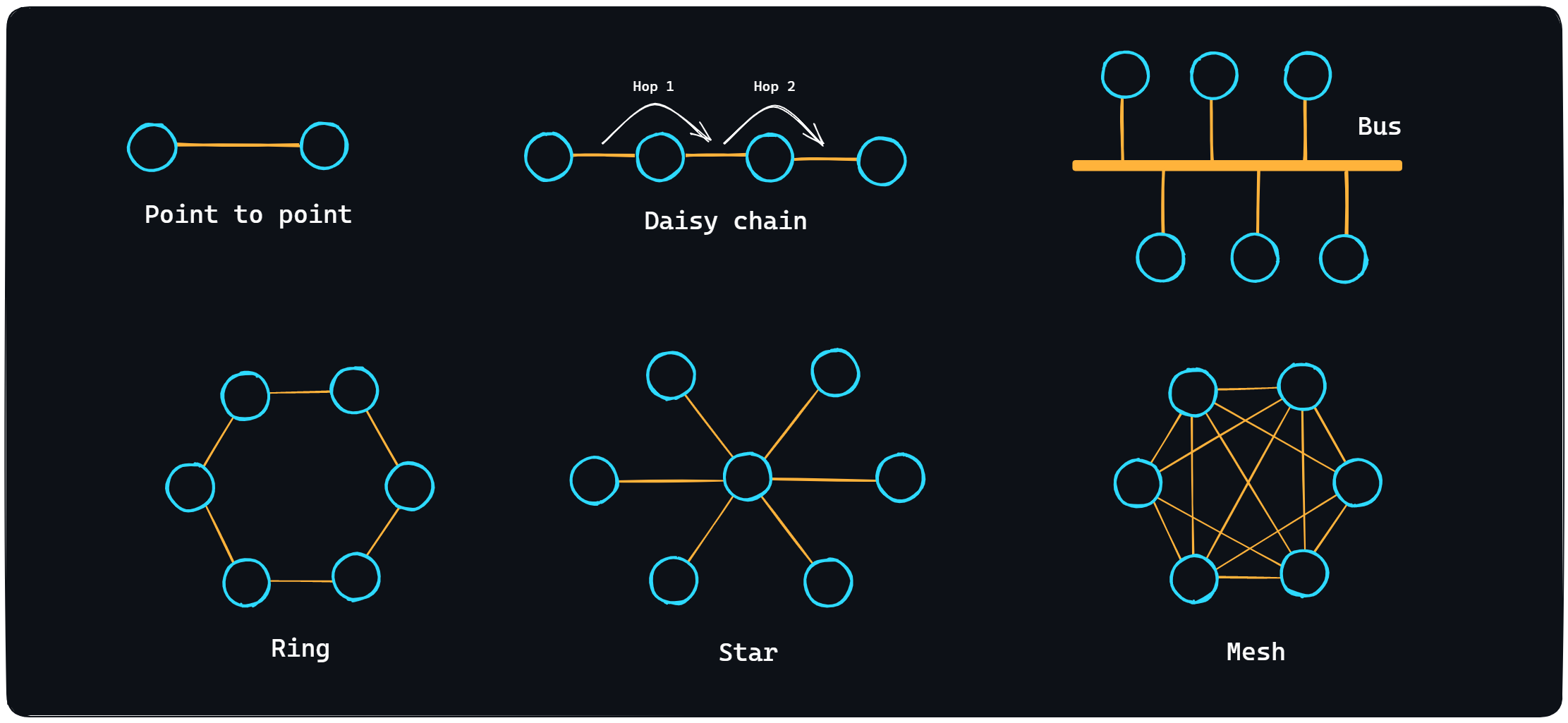

There are several topologies (ways of organizing a network): point-to-point, daisy chain, bus, ring, star, and mesh. The Internet itself cannot be assigned to any single topology, because it is an incredibly complex system that mixes different topologies.


🔗 References


What is a domain name

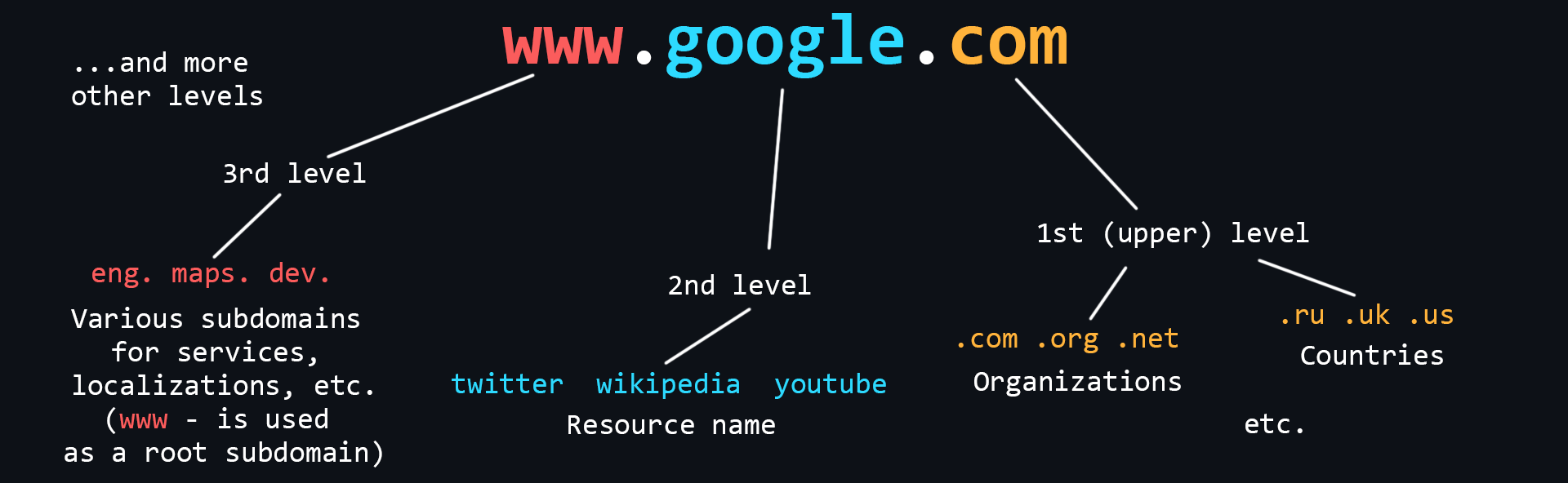

Domain names are human-readable addresses of web servers available on the Internet. They consist of parts (levels) separated by dots. Each part provides specific information about the domain, for example the country, the service name, or the localization.
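To see how the levels read from right to left, here is a minimal Python sketch that splits a name on the dots and numbers the labels starting from the top-level domain (level 1). It is deliberately simplified: it does not know about multi-label suffixes such as `co.uk`, and the helper name `domain_levels` is hypothetical.

```python
def domain_levels(name: str) -> dict[int, str]:
    """Map level number -> label, counting from the top-level domain (level 1)."""
    labels = name.rstrip(".").split(".")  # a trailing dot denotes the DNS root; drop it
    return {level: label for level, label in enumerate(reversed(labels), start=1)}


print(domain_levels("blog.example.com"))
# {1: 'com', 2: 'example', 3: 'blog'}
```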

- Who owns domain names

ICANN (Internet Corporation for Assigned Names and Numbers) coordinates the distributed domain registration system. It accredits companies (registrars) that want to sell domains, which creates a competitive domain market.

- How to buy a domain name

A domain name cannot be bought forever; it is registered for a fixed period and then has to be renewed. It is best to buy domains from accredited registrars (you can find them in almost any country).



IP address

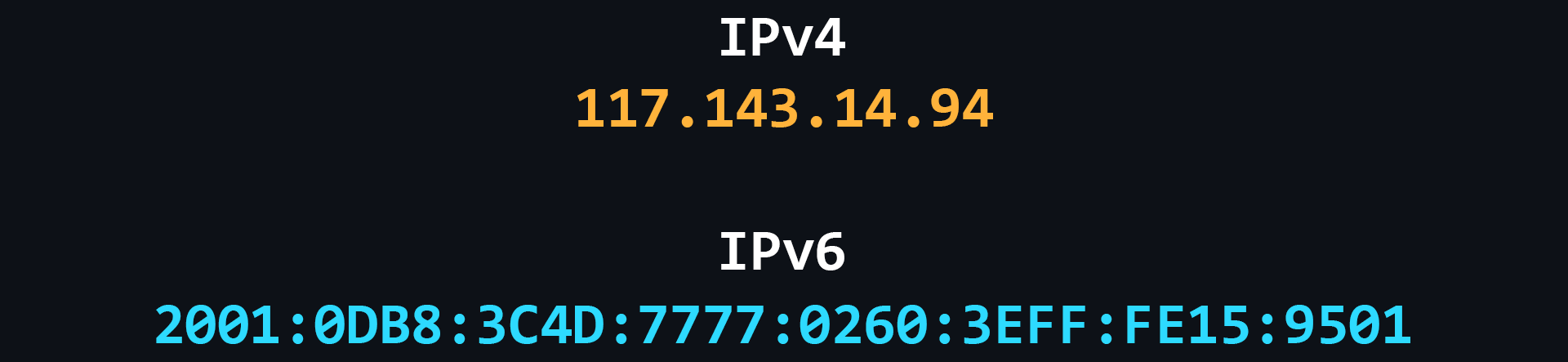

An IP address is a unique numeric address used to identify a particular device on a network.

- Levels of visibility

- An external, publicly accessible IP address that belongs to your ISP and is shared by hundreds of other users to access the Internet.

- The IP address of your router in your ISP's local network, the same IP address from which you access the Internet.

- The IP address of your computer in the local (home) network created by the router, to which you can connect your devices. Typically, it looks like 192.168.XXX.XXX.

- The loopback address of the computer, inaccessible from the outside and used only for communication between processes running on the same machine. It is the same everywhere: 127.0.0.1, or simply localhost.

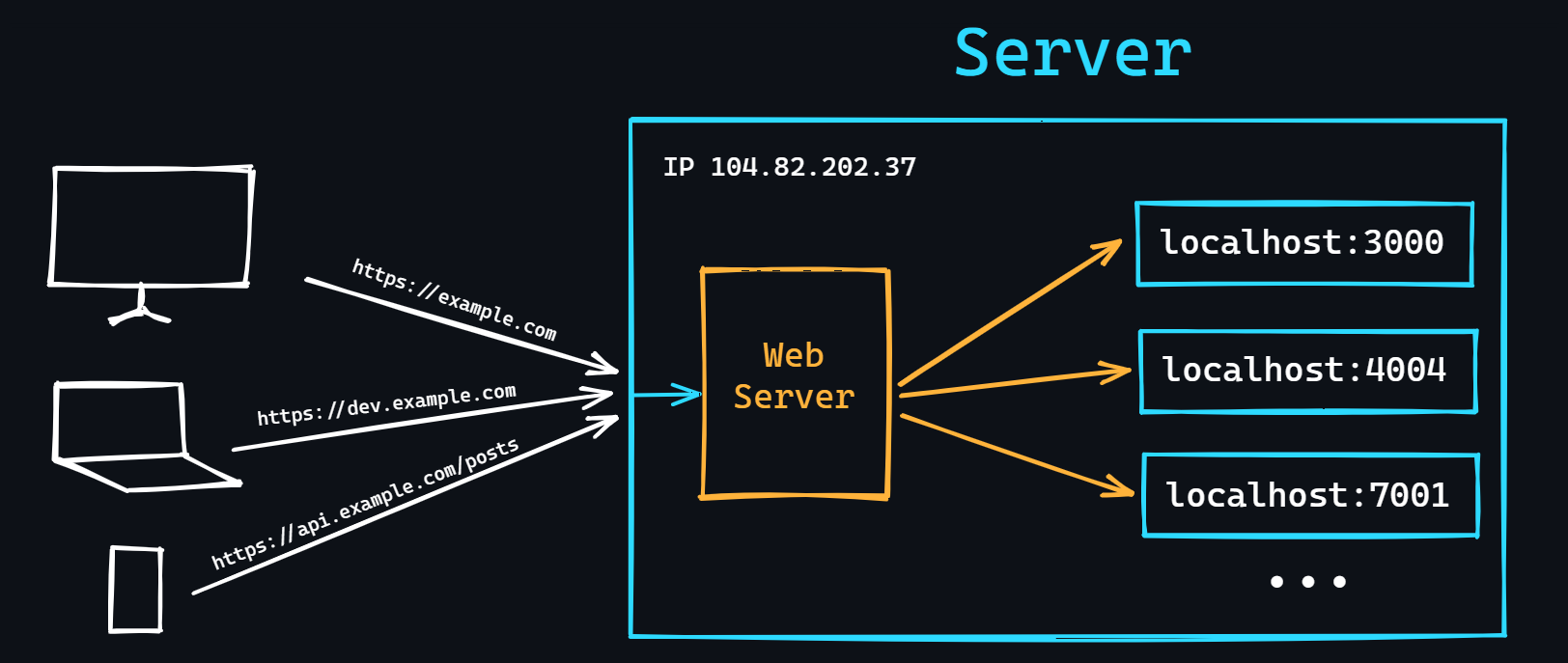

- Port

One device (computer) can run many applications that use the network. To determine which of these applications should receive data arriving over the network, a special number called a port is used. That is, each running process that uses a network connection has its own port.

- IPv4

Version 4 of the IP protocol. It was developed in 1981 and limits the address space to about 4.3 billion (2^32) possible unique addresses.

- IPv6

Over time, addresses began to be allocated much faster, which forced the creation of a new version of the IP protocol with a larger address space. IPv6 uses 128-bit addresses and can therefore provide 2^128 (about 3.4 × 10^38) unique addresses.
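A short Python sketch of the ideas above, using only the standard `ipaddress` and `socket` modules. The `visibility` helper is a hypothetical illustration: it only distinguishes loopback, private, and public ranges, so it cannot tell whether a public address is shared by many ISP customers. The last part opens two listening sockets on the same IP address to show that they are told apart by port number; port 0 asks the operating system to choose any free port.

```python
import ipaddress
import socket


def visibility(address: str) -> str:
    """Roughly classify an address by where it can be reached from."""
    ip = ipaddress.ip_address(address)
    if ip.is_loopback:   # 127.0.0.0/8 or ::1: only processes on the same machine
        return "loopback"
    if ip.is_private:    # e.g. 192.168.0.0/16: a home or office local network
        return "private"
    if ip.is_global:     # publicly routable on the Internet
        return "public"
    return "other"       # reserved or special-purpose ranges


for addr in ["127.0.0.1", "192.168.1.15", "8.8.8.8"]:
    print(addr, visibility(addr))  # loopback, private, public respectively

# Address-space sizes: 32-bit IPv4 vs 128-bit IPv6 addresses.
print(ipaddress.ip_network("0.0.0.0/0").num_addresses)          # 4294967296
print(ipaddress.ip_network("::/0").num_addresses == 2 ** 128)   # True
print(ipaddress.ip_address("2001:0db8:0000:0000:0000:0000:0000:0001"))  # 2001:db8::1

# Ports: one IP address, two listening sockets, each on its own port.
listeners = []
for _ in range(2):
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind(("127.0.0.1", 0))  # port 0 = "any free port"
    sock.listen()
    listeners.append(sock)
ports = [s.getsockname()[1] for s in listeners]
print("127.0.0.1 ports in use:", ports)
for s in listeners:
    s.close()
```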


🔗 References

- 📺 IP addresses. Explained – YouTube

- 📺 Public IP vs. Private IP and Port Forwarding (Explained by Example) – YouTube

- 📺 Network Ports Explained – YouTube

- 📺 What is IP address and types of IP address - IPv4 and IPv6 – YouTube

- 📺 IP Address - IPv4 vs. IPv6 Tutorial – YouTube

- 📄 IP Address Subnet Cheat Sheet – freeCodeCamp


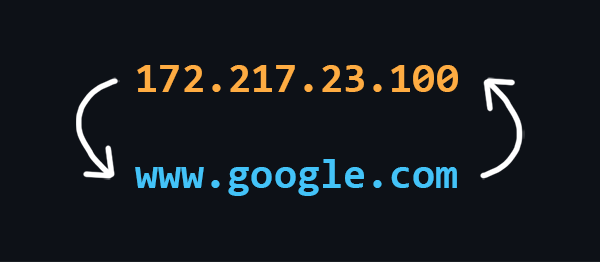

What is DNS

DNS (Domain Name System) is a decentralized Internet address naming system that allows you to create human-readable alphabetical names (domain names) corresponding to the numeric IP addresses used by computers.

- Structure of DNS

DNS consists of many independent nodes, each of which stores only those data that fall within its area of responsibility.

- DNS Resolver

A server, usually provided by your Internet Service Provider (or by a public DNS service), that looks up addresses by domain name and also caches them (temporarily stores them for quick retrieval in future requests).

- DNS record types

- A record - associates the domain name with an IPv4 address.

- AAAA record - links a domain name with an IPv6 address.

- CNAME record - makes the domain name an alias of another domain name.

- and others - MX record, NS record, PTR record, SOA record.
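The resolver's job (look up, then cache) can be sketched in Python. This is a toy model, not a real DNS client: the `ZONE` dictionary stands in for the authoritative servers, `ToyResolver` is a hypothetical name, and every cached answer gets the same fixed TTL, whereas real resolvers honor the TTL stored in each record. It also shows how a CNAME is followed to the name it points to.

```python
import time

# Hypothetical records (example.com, 203.0.113.0/24 and 2001:db8::/32
# are reserved for documentation).
ZONE = {
    ("www.example.com", "CNAME"): "example.com",
    ("example.com", "A"): "203.0.113.10",
    ("example.com", "AAAA"): "2001:db8::10",
}


class ToyResolver:
    """Caching resolver sketch: answers from cache, otherwise asks 'upstream'."""

    def __init__(self, zone, ttl=300):
        self.zone = zone
        self.ttl = ttl              # simplification: one TTL for every record
        self.cache = {}             # (name, type) -> (answer, expires_at)
        self.upstream_queries = 0

    def _ask_upstream(self, name, rtype):
        self.upstream_queries += 1
        return self.zone.get((name, rtype))

    def resolve(self, name, rtype="A", now=None):
        now = time.monotonic() if now is None else now
        cached = self.cache.get((name, rtype))
        if cached is not None and cached[1] > now:
            return cached[0]                          # fresh cache hit
        alias = self._ask_upstream(name, "CNAME")
        if alias is not None:                         # alias: resolve the target name
            answer = self.resolve(alias, rtype, now)
        else:
            answer = self._ask_upstream(name, rtype)
        self.cache[(name, rtype)] = (answer, now + self.ttl)
        return answer


resolver = ToyResolver(ZONE)
print(resolver.resolve("www.example.com", "A", now=0))     # 203.0.113.10
print(resolver.resolve("www.example.com", "AAAA", now=0))  # 2001:db8::10
print(resolver.upstream_queries)                           # 6
resolver.resolve("www.example.com", "A", now=10)           # still cached: no upstream query
print(resolver.upstream_queries)                           # 6
```

Real lookups from a Python program go through the operating system's resolver instead, for example with `socket.getaddrinfo("example.com", 443)`.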


🔗 References


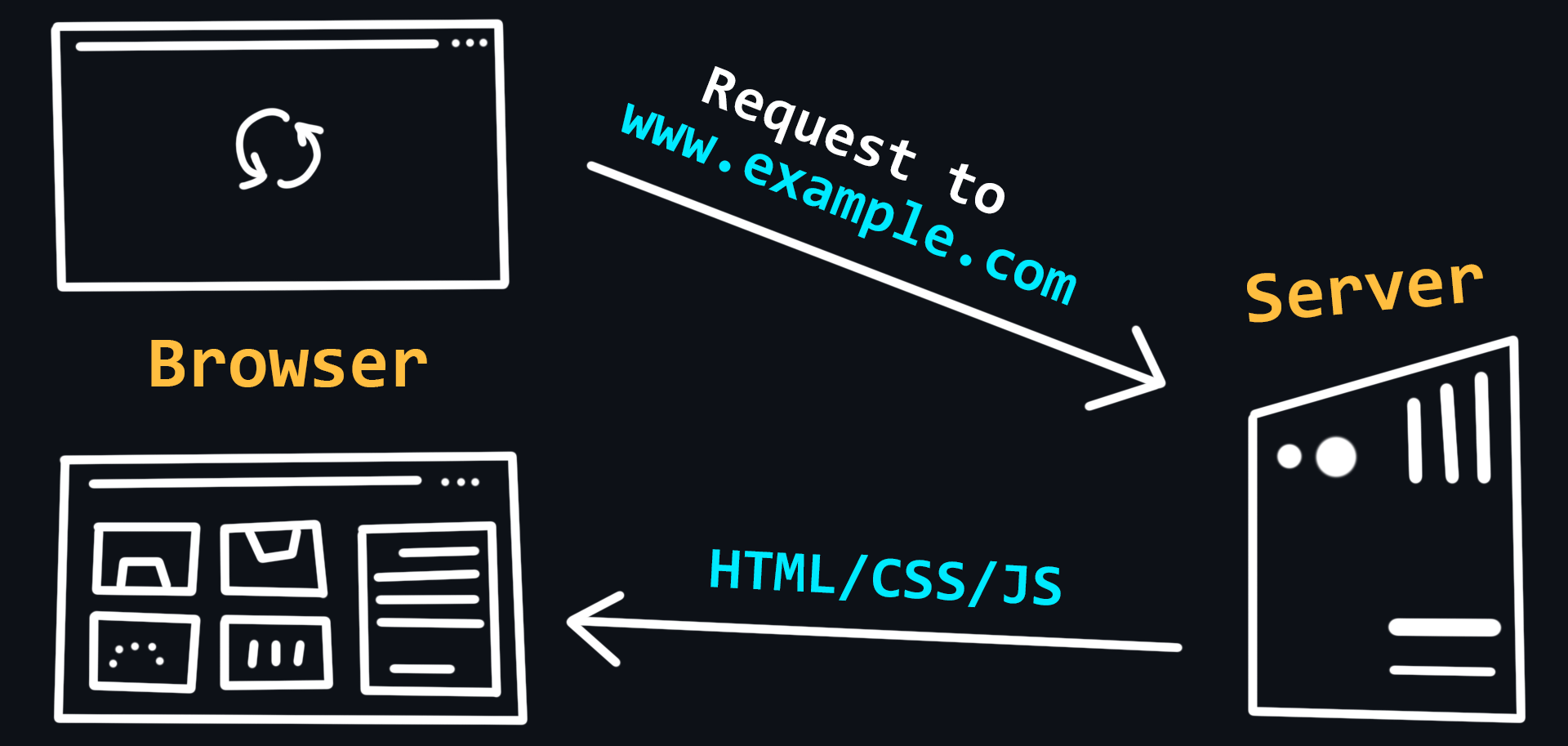

Web application design

Modern web applications consist of two parts, the Frontend and the Backend, which together implement the client-server model.

The tasks of the Frontend are:

- Implementation of the user interface (appearance of the application)

- The HTML markup language is used to create web pages.

- The CSS style sheet language is used to style fonts, lay out content, etc.

- The JavaScript programming language is used to add dynamics and interactivity.

In practice, these tools are rarely used in their pure form: frameworks and preprocessors make development faster and more convenient.

- Generating requests to the server

These are usually various input forms that the user can conveniently interact with.

- Receiving data from the server and processing it for display to the user

Tasks of the Backend:

- Handling client requests

Checking for permissions and access, all sorts of validations, etc.

- Implementing business logic

This covers a wide range of tasks: working with databases, information processing, computation, etc. This is, so to speak, the heart of the Backend world, where all the important and interesting stuff happens.

- Generating a response and sending it to the client
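The three Backend tasks listed above can be seen in a minimal Python sketch built on WSGI, the standard interface between Python web servers and applications. The in-memory `STOCK` dictionary is a hypothetical stand-in for a database, and `call_app` is a hypothetical helper that invokes the application directly (without a network server) so it is easy to try out.

```python
import json
from urllib.parse import parse_qs
from wsgiref.util import setup_testing_defaults

# Hypothetical in-memory "database".
STOCK = {"apple": 12, "pear": 0}


def respond(start_response, status: str, payload: dict):
    """Serialize the payload as JSON and send the status line and headers."""
    data = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(data)))])
    return [data]


def app(environ, start_response):
    # 1. Handle the client request: check the method and validate the input.
    if environ["REQUEST_METHOD"] != "GET":
        return respond(start_response, "405 Method Not Allowed", {"error": "use GET"})
    item = parse_qs(environ.get("QUERY_STRING", "")).get("item", [""])[0]
    if not item:
        return respond(start_response, "400 Bad Request", {"error": "missing 'item'"})
    # 2. Business logic: look the item up in the "database".
    if item not in STOCK:
        return respond(start_response, "404 Not Found", {"error": "unknown item"})
    result = {"item": item, "quantity": STOCK[item], "in_stock": STOCK[item] > 0}
    # 3. Generate the response and send it to the client.
    return respond(start_response, "200 OK", result)


def call_app(method: str, query: str = ""):
    """Invoke the app with a fake request; returns (status, parsed JSON body)."""
    environ = {"REQUEST_METHOD": method, "QUERY_STRING": query}
    setup_testing_defaults(environ)  # fill in the remaining required WSGI keys
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)


print(call_app("GET", "item=apple"))  # ('200 OK', {'item': 'apple', 'quantity': 12, 'in_stock': True})
print(call_app("GET", "item=kiwi"))   # ('404 Not Found', {'error': 'unknown item'})
```

To serve it over HTTP for local experiments, the standard library's `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` is enough; production deployments normally use a dedicated WSGI server or a web framework.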


🔗 References


Browsers and how they work

A browser is a client that sends requests to servers for files, which it then uses to render web pages. In simple terms, a browser is a program for viewing HTML files that can also find and download them from the Internet.

- Working Principle

Request handling, page rendering, and tab isolation (in modern browsers such as Chrome, each tab usually runs in its own process, so the contents of one tab cannot affect another).

- Extensions

Allow you to change the browser's user interface, modify the contents of web pages, and modify the browser's network requests.

- Chrome DevTools

An indispensable tool for any web developer. It allows you to analyze all possible information related to web pages, monitor their performance, logs and, most importantly for us, track information about network requests.


🔗 References

- 📄 How browsers work – MDN

- 📄 How browsers work: Behind the scenes of modern web browsers – web.dev

- 📄 Inside look at modern web browser – Google

- 📺 What is a web browser? – YouTube

- 📺 Anatomy of the browser 101 (Chrome University 2019) – YouTube

- 📺 Chrome DevTools - Crash Course – YouTube

- 📺 Demystifying the Browser Networking Tab in DevTools – YouTube

- 📺 21+ Browser Dev Tools & Tips You Need To Know – YouTube


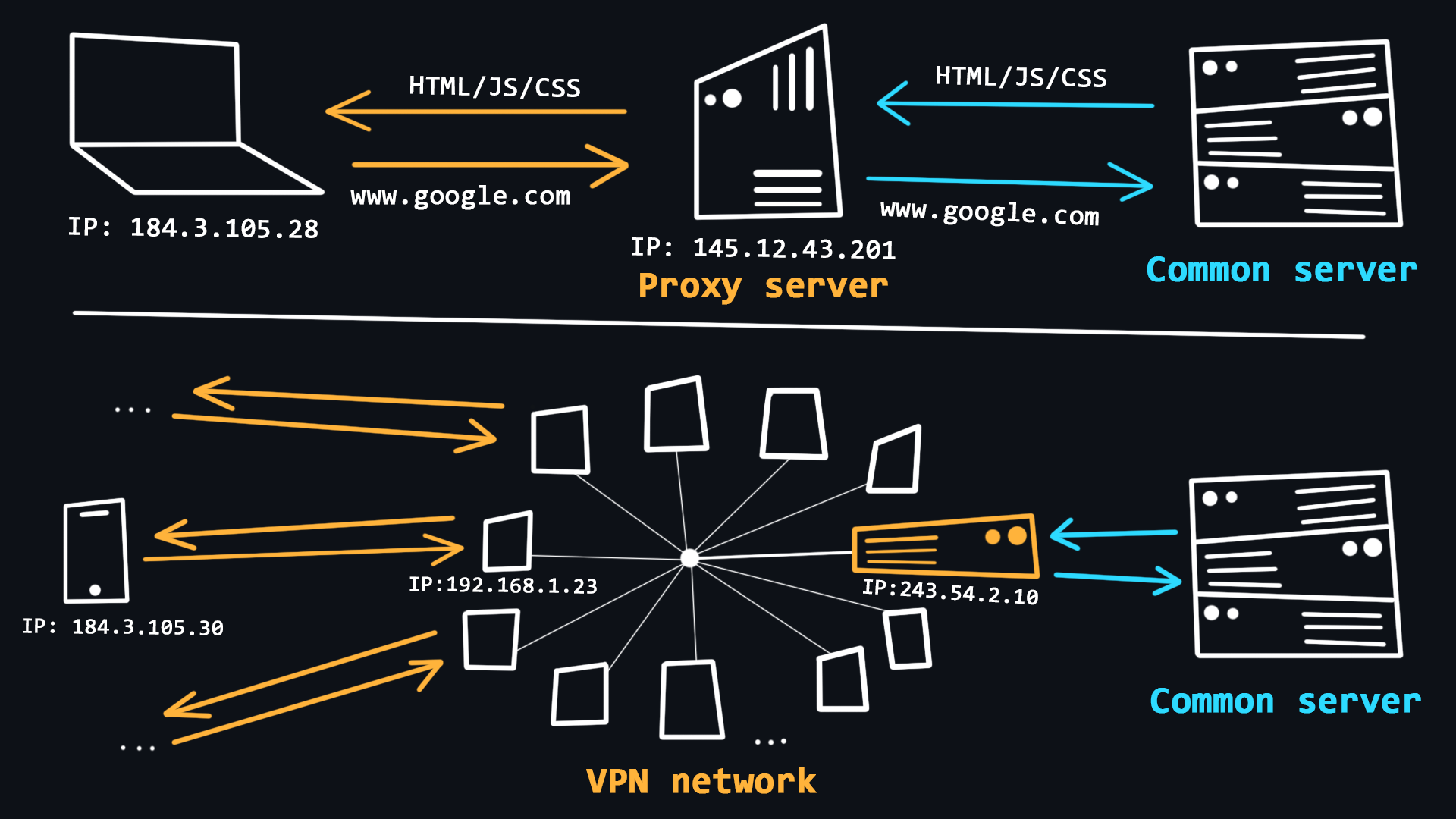

VPN and Proxy

VPNs and proxies have become quite common in recent years. With these technologies, users can get basic anonymity when browsing the web and bypass various regional blocks.

- VPN (Virtual Private Network)

A technology that lets you join a private network (similar to your local network) in which requests from all participants go out through a single public IP address. This lets your requests blend in with those of the other participants.

- Simple procedure for connection and use.

- Reliable traffic encryption.

- There is no guarantee of 100% anonymity, because the owner of the network knows the IP-addresses of all participants.

- VPNs are poorly suited for working with multiple accounts and with some programs, because all accounts operating from the same VPN are easily detected and blocked.

- Free VPNs tend to be heavily loaded, resulting in unstable performance and slow download speeds.


- Proxy (proxy server)

A proxy is a special server on the network that acts as an intermediary between you and the destination server you want to reach. When you are connected to a proxy server, all your requests are made on behalf of that server, so your IP address and location are replaced with the proxy's.

- The ability to use an individual IP address, which allows you to work with multi-accounts.

- A stable connection, since such proxies are not heavily loaded.

- Proxy support is built into operating systems and browsers, so no additional software is required.

- There are proxy varieties that provide a high level of anonymity.

- The unreliability of free solutions, because the proxy server can see and control everything you do on the Internet.
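As an example of proxy support being built into common software, Python's standard `urllib` can route requests through a proxy either explicitly or via the conventional `http_proxy`/`https_proxy` environment variables, which many command-line tools also honor. The proxy address below is hypothetical (taken from a range reserved for documentation), so no request is actually sent.

```python
import os
import urllib.request

# Hypothetical proxy address (203.0.113.0/24 is reserved for documentation).
PROXY_URL = "http://203.0.113.5:3128"

# Option 1: explicit configuration that applies to this opener only.
opener = urllib.request.build_opener(
    urllib.request.ProxyHandler({"http": PROXY_URL, "https": PROXY_URL})
)
# opener.open("http://example.com/") would now be sent through the proxy.

# Option 2: the conventional environment variable, read automatically by urllib.
os.environ["http_proxy"] = PROXY_URL
print(urllib.request.getproxies().get("http"))  # http://203.0.113.5:3128
```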


🔗 References

- 📄 What is VPN? How It Works, Types of VPN – kaspersky.com

- 📺 VPN (Virtual Private Network) Explained – YouTube

- 📺 What Is a Proxy and How Does It Work? – YouTube

- 📺 What is a Proxy Server? – YouTube

- 📺 Proxy vs. Reverse Proxy (Explained by Example) – YouTube

- 📺 VPN vs. Proxy Explained Pros and Cons – YouTube


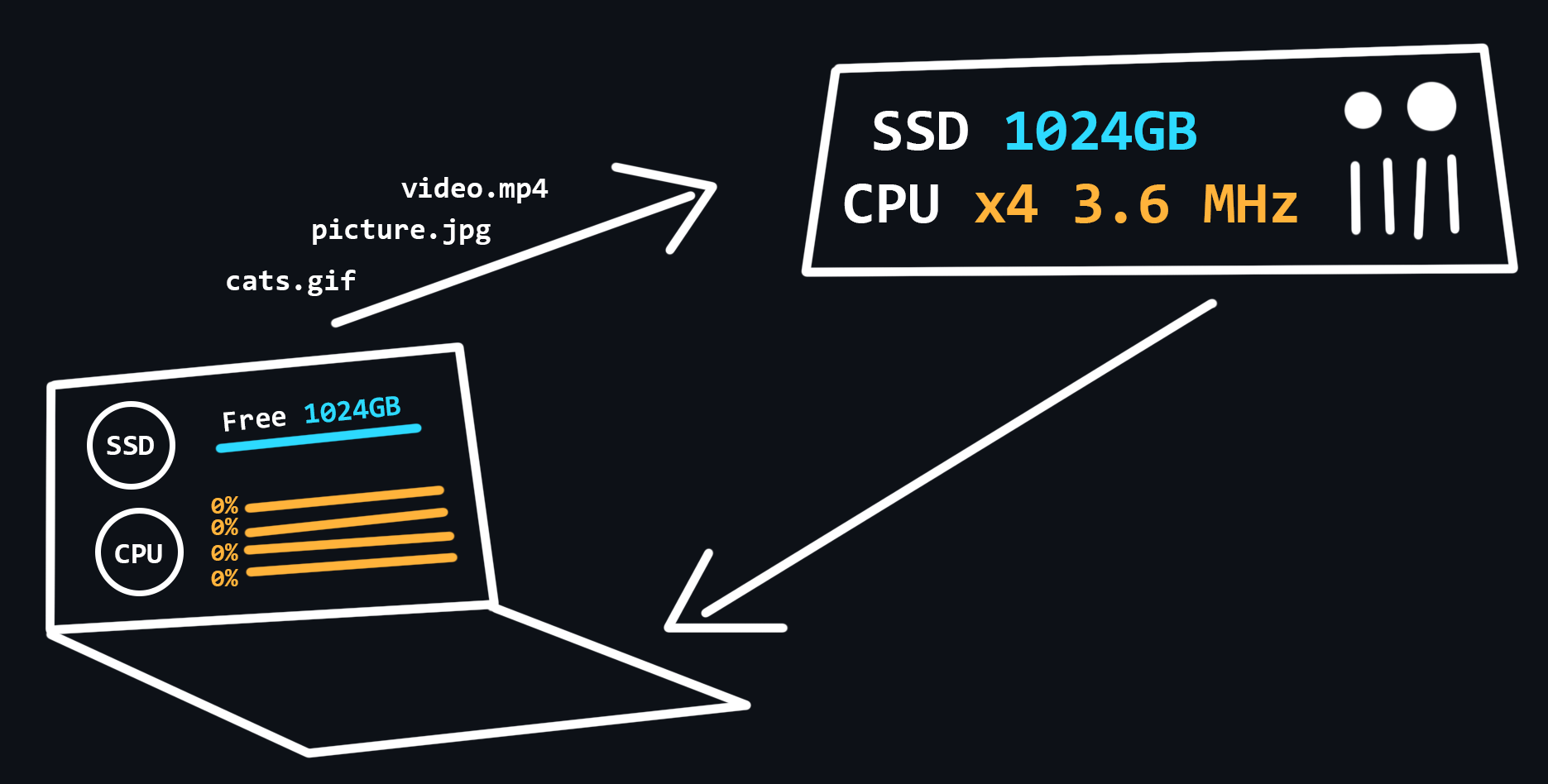

Hosting

Hosting is a special service provided by hosting providers, which allows you to rent space on a server (which is connected to the Internet around the clock), where your data and files can be stored. There are different options for hosting, where you can use not only the disk space of the server, but also the CPU power to run your network applications.

- Virtual hosting

One physical server that distributes its resources to multiple tenants.

- VPS/VDS

Virtual servers that emulate the operation of a separate physical server and are available for rent to the client with maximum privileges.

- Dedicated server

Renting a full physical server with full access to all resources. As a rule, this is the most expensive service.

- Cloud hosting

A service that uses the resources of several servers. When renting, the user pays only for the actual resources used.

- Colocation

A service that gives the customer the opportunity to install their equipment on the provider's premises.

- Virtual hosting

🔗 References


OSI network model

| № | Layer | Protocols used |
|---|---|---|
| 7 | Application layer | HTTP, DNS, FTP, POP3 |
| 6 | Presentation layer | SSL, SSH, IMAP, JPEG |
| 5 | Session layer | APIs, Sockets |
| 4 | Transport layer | TCP, UDP |
| 3 | Network layer | IP, ICMP, IGMP |
| 2 | Data link layer | Ethernet, MAC, HDLC |
| 1 | Physical layer | RS-232, RJ45, DSL |

OSI (the Open Systems Interconnection model) is a conceptual model describing how different devices should interact with each other over a network. The model is divided into 7 layers, each responsible for a specific function. The goal is for information exchange in a network to follow the same pattern, so that all devices, whether a smart fridge or a smartphone, can understand each other without any problems.

- Physical layer

At this level, bits (ones/zeros) are encoded into physical signals (current, light, radio waves) and transmitted further by wire (Ethernet) or wirelessly (Wi-Fi).

- Data link layer

Physical signals from layer 1 are decoded back into ones and zeros, transmission errors are detected (and sometimes corrected), and the sender and receiver MAC addresses are extracted.

- Network layer

This is where traffic routing and IP packet generation take place.

- Transport layer

The layer responsible for data transfer. There are two important protocols:

- TCP is a protocol that ensures reliable data transmission. TCP guarantees data delivery and preserves the order of messages, at some cost to transmission speed. This protocol is used where data loss is unacceptable, such as when sending mail or loading web pages.

- UDP is a simple protocol with fast data transfer. It has no mechanisms to guarantee delivery or ordering of data. It is used, for example, in online games, where partial packet loss is not crucial but transfer speed is much more important. DNS queries are also usually sent over UDP.


- Session layer

Responsible for opening and closing communications (sessions) between two devices. Ensures that the session stays open long enough to transfer all necessary data, and then closes quickly to avoid wasting resources.

- Presentation layer

Data translation, encryption/decryption, and compression. This is where data arriving as zeros and ones is converted into the required formats (PNG, MP3, PDF, etc.).

- Application layer

Gives user applications access to network services such as database query handling, file access, and email forwarding.
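The difference between the two transport-layer protocols shows up directly in the socket API. In this Python sketch, UDP simply fires a datagram at an address, while TCP first establishes a connection and then transfers an ordered byte stream. Both run over the loopback interface, where datagrams are practically never lost; on a real network the UDP receiver could wait in vain, hence the timeout. The two functions are hypothetical helpers and, being single-threaded, are only meant for short messages.

```python
import socket


def udp_send_receive(message: bytes) -> bytes:
    """UDP: no connection setup; each sendto() is an independent datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver:
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
            receiver.bind(("127.0.0.1", 0))
            receiver.settimeout(2)  # no delivery guarantee, so never wait forever
            sender.sendto(message, receiver.getsockname())
            data, _sender_addr = receiver.recvfrom(65535)
            return data


def tcp_send_receive(message: bytes) -> bytes:
    """TCP: a connection is established first, then bytes flow as an ordered stream."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        with socket.create_connection(server.getsockname(), timeout=2) as client:
            conn, _client_addr = server.accept()  # the OS has completed the handshake
            with conn:
                client.sendall(message)
                client.shutdown(socket.SHUT_WR)   # tell the peer we are done sending
                chunks = []
                while chunk := conn.recv(4096):   # read until the peer closes its side
                    chunks.append(chunk)
                return b"".join(chunks)


print(udp_send_receive(b"ping over UDP"))  # b'ping over UDP'
print(tcp_send_receive(b"ping over TCP"))  # b'ping over TCP'
```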


🔗 References


HTTP Protocol

HTTP (HyperText Transfer Protocol) is one of the most important protocols on the Internet. It is used to transfer data of any format. The protocol works on a simple principle: request -> response.

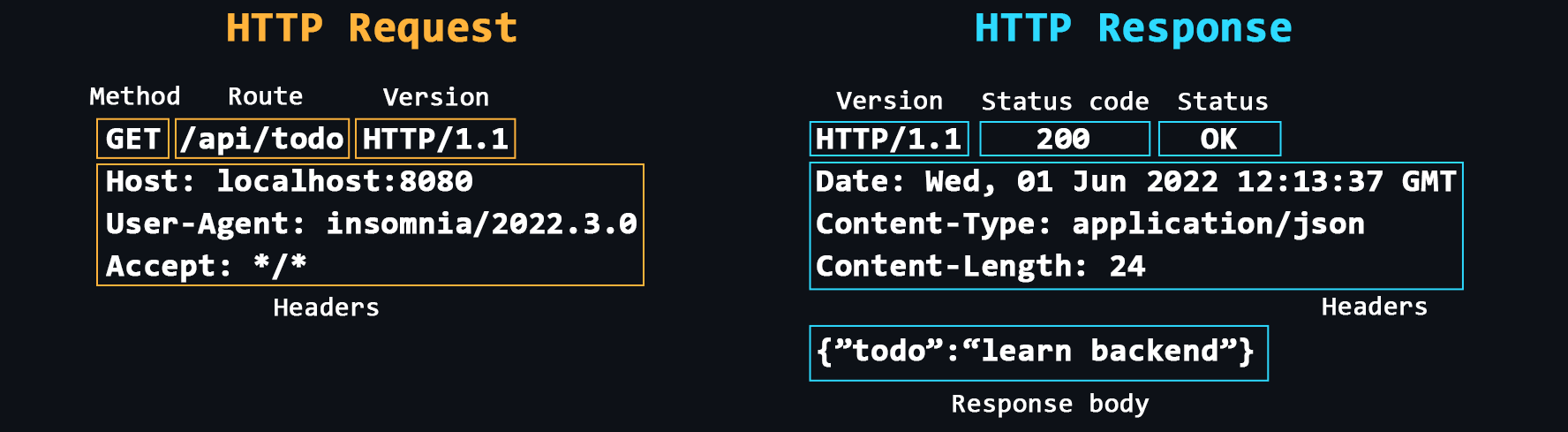

- Structure of HTTP messages

HTTP messages consist of a header section containing metadata about the message, followed by an optional message body containing the data being sent.

- Headers

Additional service information that is sent with the request/response.

Common headers: Host, User-Agent, If-Modified-Since, Cookie, Referer, Authorization, Cache-Control, Content-Type, Content-Length, Last-Modified, Set-Cookie, Content-Encoding.

- Request methods

Main: GET, POST, PUT, DELETE.

Others: HEAD, CONNECT, OPTIONS, TRACE, PATCH.

- Response status codes

Each response from the server has a special numeric code that characterizes the state of the sent request. These codes are divided into 5 main classes:

- 1xx - Informational (service information)

- 2xx - Successful request

- 3xx - Redirect to another address

- 4xx - Client-side error

- 5xx - Server-side error

- HTTPS

The same HTTP, but running over an encrypted connection (TLS). Your applications should use HTTPS to be secure.

- Cookie

HTTP is stateless: the protocol itself does not save information about previous requests and responses. Cookies are used to solve this problem. Cookies allow the server to store information on the client side that the client then sends back to the server. For example, cookies can be used to authenticate users or to store various settings.

- CORS (Cross origin resource sharing)

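A mechanism, based on HTTP headers, that lets a server tell the browser which other origins (domains) are allowed to read its responses. This allows a web page on one domain to securely receive data from another domain.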

- CSP (Content Security Policy)

A special header that helps detect and mitigate certain types of attacks on web applications, such as cross-site scripting (XSS) and data injection.

- Evolution of HTTP

- HTTP/1.0: Uses a separate connection for each request/response, has only basic caching support, and uses plain-text headers.

- HTTP/1.1: Introduces persistent connections, pipelining, the Host header, and chunked transfer encoding.

- HTTP/2: Supports multiplexing, header compression, and server push, and uses a binary framing format instead of plain text.

- HTTP/3: Built on QUIC, offers improved multiplexing, reliability, and better performance over unreliable networks.
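Because HTTP/1.1 messages are plain text, their structure (start line, headers, a blank line, then the optional body) is easy to show by hand. The Python sketch below builds a minimal request and parses a hypothetical response, then maps the status code to its class. It ignores real-world details such as chunked encoding or repeated headers, and the helper names are hypothetical; in practice you would use a library such as `http.client` or `urllib.request`.

```python
# A hypothetical raw HTTP/1.1 response, exactly as it travels over the
# connection: status line, headers, blank line, body.
RAW_RESPONSE = (
    b"HTTP/1.1 404 Not Found\r\n"
    b"Content-Type: text/plain\r\n"
    b"Content-Length: 9\r\n"
    b"\r\n"
    b"not found"
)

STATUS_CLASSES = {
    1: "informational", 2: "success", 3: "redirection",
    4: "client error", 5: "server error",
}


def build_request(method: str, host: str, path: str, body: bytes = b"") -> bytes:
    """Serialize a minimal HTTP/1.1 request: request line, headers, blank line, body."""
    lines = [f"{method} {path} HTTP/1.1", f"Host: {host}"]
    if body:
        lines.append(f"Content-Length: {len(body)}")
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii") + body


def parse_response(raw: bytes):
    """Split a raw response into (status code, reason, headers, body)."""
    head, _, body = raw.partition(b"\r\n\r\n")  # the blank line ends the headers
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    _version, code, reason = status_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # header names are case-insensitive
    return int(code), reason, headers, body


def status_class(code: int) -> str:
    return STATUS_CLASSES[code // 100]


print(build_request("GET", "example.com", "/"))
# b'GET / HTTP/1.1\r\nHost: example.com\r\n\r\n'
code, reason, headers, body = parse_response(RAW_RESPONSE)
print(code, reason, status_class(code), headers["content-length"], body)
# 404 Not Found client error 9 b'not found'
```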


🔗 References

- 📄 How HTTP Works and Why it's Important – freeCodeCamp

- 📄 Hypertext Transfer Protocol (HTTP) – MDN

- 📺 Hyper Text Transfer Protocol Crash Course – YouTube

- 📺 Full HTTP Networking Course (5 hours) – YouTube

- 📄 HTTP vs. HTTPS – What's the Difference? – freeCodeCamp

- 📺 HTTP Cookies Crash Course – YouTube

- 📺 Cross Origin Resource Sharing (Explained by Example) – YouTube

- 📺 When to use HTTP GET vs. POST? – YouTube

- 📺 How HTTP/2 Works, Performance, Pros & Cons and More – YouTube

- 📺 HTTP/2 Critical Limitation that led to HTTP/3 & QUIC – YouTube

- 📺 304 Not Modified HTTP Status (Explained with Code Example and Pros & Cons) – YouTube

- 📺 What is the Largest POST Request the Server can Process? – YouTube


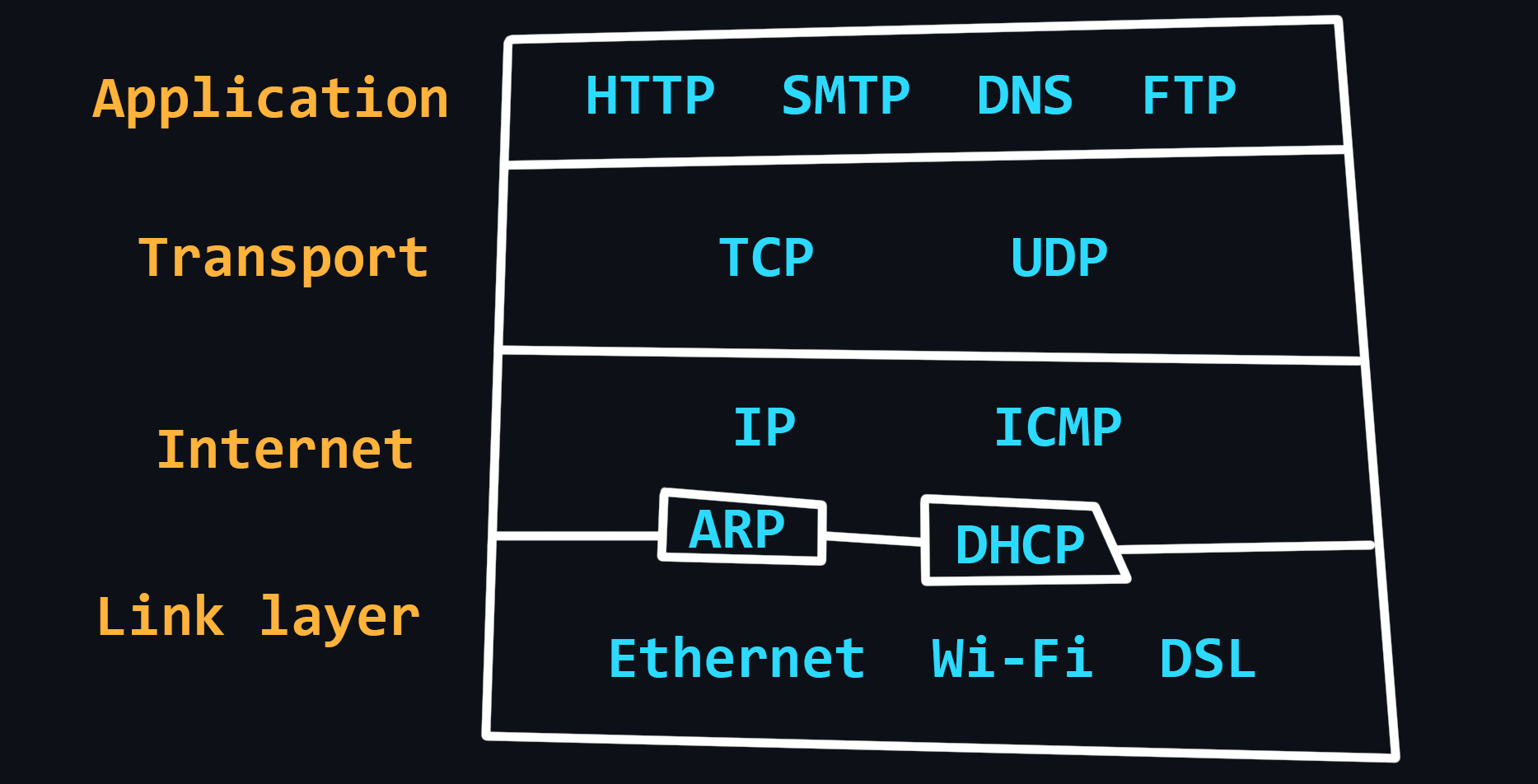

TCP/IP stack

Compared to the OSI model, the TCP/IP stack has a simpler architecture. In general, the TCP/IP model is more widely used and practical, and the OSI model is more theoretical and detailed. Both models describe the same principles, but differ in the approach and protocols they include at their levels.

- Link layer

Defines how data is transmitted over the physical medium, such as cables or wireless signals.

Protocols: Ethernet, Wi-Fi, Bluetooth, Fiber optic.

- Internet Layer

Routing data across different networks. It uses IP addresses to identify devices and routes data packets to their destination.

Protocols: IP, ARP, ICMP, IGMP.

- Transport Layer

Data transmission between two devices. It uses protocols such as TCP (reliable, but slower) and UDP (fast, but unreliable).

- Application Layer

Provides services to the end user, such as web browsing, email, and file transfer. It interacts with the lower layers of the stack to transmit data over the network.

Protocols: HTTP, FTP, SMTP, DNS, SNMP.


🔗 References


Network problems

The quality of networks, including the Internet, is far from ideal. This is due to the complex structure of networks and their dependence on a huge number of factors: for example, the stability of the connection between the client device and its router, the quality of the provider's service, the power and performance of the server, the physical distance between the client and the server, etc.

- Latency

The time it takes for a data packet to travel from sender to receiver. It depends mostly on the physical distance between them.

- Packet loss

Not all packets traveling over the network can reach their destination. This happens most often when using wireless networks or due to network congestion.

- Round Trip Time (RTT)

The time it takes for a data packet to reach its destination plus the time for the confirmation of successful receipt to come back.

- Jitter

Fluctuation of the delay, i.e. unstable ping (for example, 50 ms, 120 ms, 35 ms...).

- Packet reordering

The IP protocol does not guarantee that packets are delivered in the order in which they are sent.
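Given a few ping measurements, the metrics above can be computed directly. The Python sketch uses hypothetical sample values (the 50/120/35 ms from the jitter example, plus one lost packet) and measures jitter as the mean difference between consecutive round-trip times; this is one simple definition among several (RTP, for example, uses a smoothed estimate).

```python
from statistics import mean

# Hypothetical ping results in ms, in sending order; None = no reply (packet lost).
samples_ms = [50, 120, None, 35]

received = [s for s in samples_ms if s is not None]
packet_loss = 100 * (len(samples_ms) - len(received)) / len(samples_ms)
avg_rtt = mean(received)
# Jitter as the average change between consecutive round-trip times.
jitter = mean(abs(b - a) for a, b in zip(received, received[1:]))
print(f"loss={packet_loss:.0f}%  avg RTT={avg_rtt:.1f} ms  jitter={jitter:.1f} ms")
# loss=25%  avg RTT=68.3 ms  jitter=77.5 ms

# Reordering: hypothetical sequence numbers in the order the packets arrived.
arrival_order = [1, 2, 4, 3, 5]
reordered = sum(
    1 for i, seq in enumerate(arrival_order) if seq < max(arrival_order[:i], default=0)
)
print(f"packets arriving after a later-sent packet: {reordered}")  # 1
```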


🔗 References


Network diagnostics

- Traceroute

A procedure that shows which nodes (and their IP addresses) a packet passes through before it reaches its destination. Tracing can be used to identify problems in a computer network and to examine/analyze the network.

- Ping scan

The easiest way to check whether a server is reachable and how quickly it responds.

- Checking for packet loss

Due to dropped connections, not all packets sent over the network reach their destination.

- Wireshark

A powerful program with a graphical interface for analyzing all traffic that passes through the network in real time.
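The classic `ping` utility sends ICMP echo requests, which require raw sockets and usually elevated privileges. A common unprivileged substitute, sketched below in Python, is to time how long a TCP connection takes to open, counting a failed attempt as lost. The helper `tcp_ping` is hypothetical, and the usage example targets a throwaway local listener so that it works offline.

```python
import socket
import time


def tcp_ping(host: str, port: int, count: int = 3, timeout: float = 1.0) -> list:
    """Time `count` TCP connection attempts in ms; None marks a failed attempt."""
    results = []
    for _ in range(count):
        start = time.perf_counter()
        try:
            with socket.create_connection((host, port), timeout=timeout):
                results.append((time.perf_counter() - start) * 1000)
        except OSError:  # timeout, connection refused, host unreachable, ...
            results.append(None)
    return results


# Usage: a throwaway local listener stands in for a remote server.
with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
    listener.bind(("127.0.0.1", 0))
    listener.listen()
    rtts = tcp_ping("127.0.0.1", listener.getsockname()[1])
print(rtts)  # a list of three connect times in milliseconds
```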


🔗 References

PC device


Main components (hardware)

- Motherboard

The most important PC component to which all other elements are connected.

- Chipset - a set of electronic components responsible for communication between all motherboard components.

- CPU socket - socket for mounting the processor.

- VRM (Voltage Regulator Module) – module that converts the incoming voltage (usually 12V) to a lower voltage to run the processor, integrated graphics, memory, etc.

- Slots for RAM.

- PCI Express expansion slots - for connecting video cards and internal network/sound cards.

- M.2 slots / SATA ports - for connecting hard disks and SSDs.

- CPU (Central processing unit)

The most important device, which executes instructions (program code). Processors work only with ones and zeros, so all programs are ultimately sets of binary code.

- Registers - the fastest memory in a PC, has an extremely small capacity, is built into the processor and is designed to temporarily store the data being processed.

- Cache - slightly slower memory, also built into the processor, used to store copies of data from frequently used main-memory locations.

- Processors can have different architectures. Currently, the most common are the x86 architecture (desktop and laptop computers) and ARM (mobile devices as well as the latest Apple computers).

- RAM (Random-access memory)

Fast memory of relatively small capacity (typically 4-16 GB) designed to temporarily store program code, as well as input, output and intermediate data processed by the processor.

- Data storage

Large-capacity memory (typically 256 GB-1 TB) designed for long-term storage of files and installed programs.

- GPU (Graphics card)

A separate card that translates and processes data into images for display on a monitor. This device is also called a discrete graphics card. Usually needed for those who do 3D modelling or play games.

Integrated graphics is a graphics processor built into the CPU. It is sufficient for everyday work.

- Network card

A device that receives and transmits data from other devices connected to the local network.

- Sound card

A device that allows you to process sound, output it to other devices, record it with a microphone, etc.

- Power supply unit

A device designed to convert the AC voltage from the mains to DC voltage.


🔗 References

- 📄 Everything You Need to Know About Computer Hardware

- 📄 Putting the "You" in CPU: explainer how your computer runs programs, from start to finish

- 📺 What does what in your computer? Computer parts Explained – YouTube

- 📺 Motherboards Explained – YouTube

- 📺 The Fetch-Execute Cycle: What's Your Computer Actually Doing? – YouTube

- 📺 How a CPU Works in 100 Seconds // Apple Silicon M1 vs. Intel i9 – YouTube

- 📺 Arm vs. x86 - Key Differences Explained – YouTube


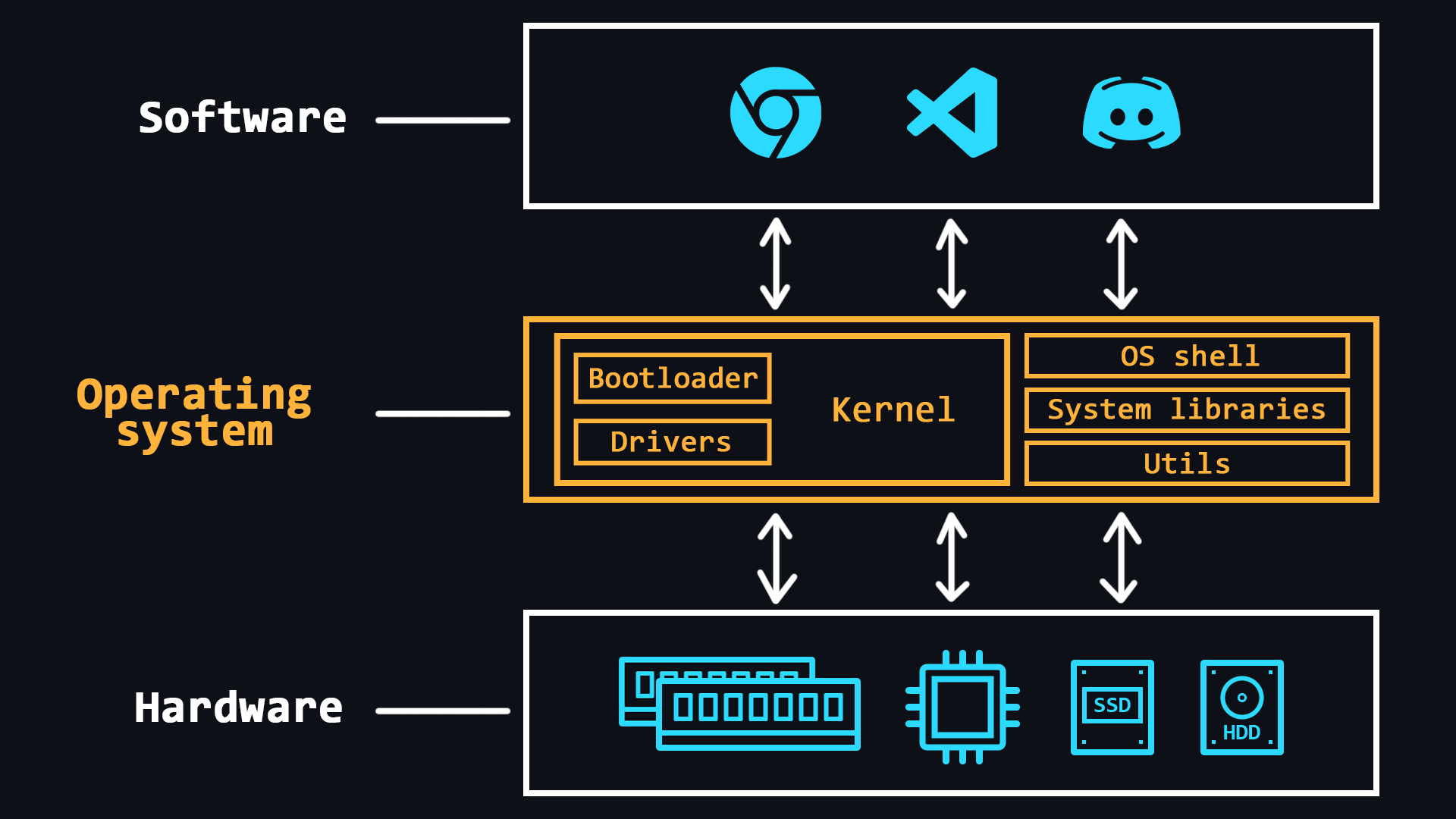

Operating system design

Operating system (OS) is a comprehensive software system designed to manage a computer's resources. With operating systems, people do not have to deal directly with the processor, RAM, or other parts of the PC.

OS can be thought of as an abstraction layer that manages the hardware of a computer, thereby providing a simple and convenient environment for user software to run.

- Main features

- RAM management (space allocation for individual programs)

- Loading programs into RAM and their execution

- Execution of requests from user's programs (inputting and outputting data, starting and stopping other programs, freeing up memory or allocating additional memory, etc.)

- Interaction with input and output devices (mouse, keyboard, monitor, etc.)

- Interaction with storage media (HDDs and SSDs)

- Providing a user's interface (console shell or graphical interface)

- Logging of software errors (saving logs)

- Additional functions (may not be available in all OSs)

- Organize multitasking (simultaneous execution of several programs)

- Delimiting access to resources for each process

- Inter-process communication (data exchange, synchronisation)

- Organize the protection of the operating system itself against other programs and the actions of the user

- Provide multi-user mode and differentiate rights between different OS users (admins, guests, etc.)

- OS kernel

The central part of the operating system which is used most intensively. The kernel is constantly in memory, while other parts of the OS are loaded into and unloaded from memory as needed.

- Bootloader

The system software that prepares the environment for the OS to run: it puts the hardware in the right state, prepares the memory, loads the OS kernel into it, and transfers control to the kernel.

- Device drivers

Special software that allows the OS to work with a particular piece of equipment.


🔗 References

- 📄 What is an OS? Operating System Definition for Beginners – freeCodeCamp

- 📄 Windows vs. macOS vs. Linux – Operating System Handbook – freeCodeCamp

- 📺 Operating Systems: Crash Course Computer Science – YouTube

- 📺 Operating System Basics – YouTube

- 📺 Operating System in deep details (playlist) – YouTube

- 📄 Awesome Operating System Stuff – GitHub


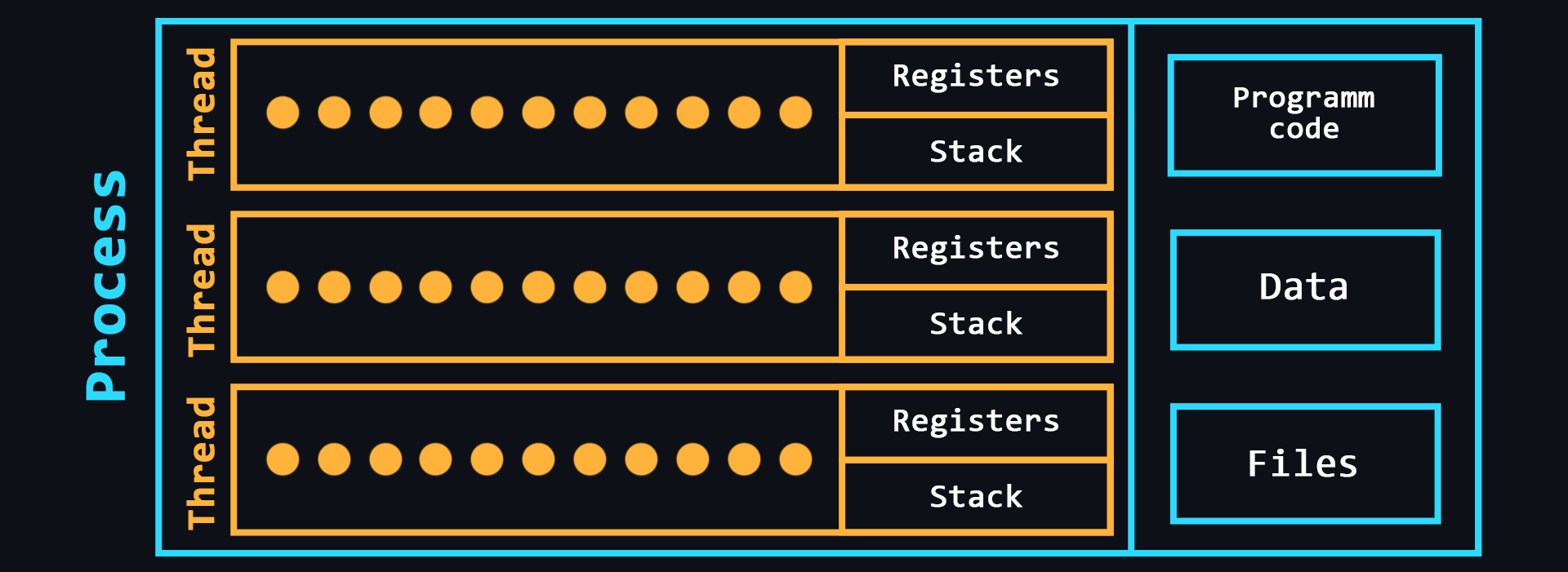

Processes and threads

- Process

A kind of container in which all the resources needed to run a program are stored. As a rule, the process consists of:

- Executable program code

- Input and output data

- Call stack (stores active function calls with their local variables and return addresses)

- Heap (memory for data allocated dynamically while the program runs)

- Segment descriptor

- File descriptor

- Information about the process's permissions (access rights)

- Processor state information (e.g. register values)


- Thread

An entity in which sequences of program actions (procedures) are executed. Threads are within a process and use the same address space. There can be multiple threads in a single process, allowing multiple tasks to be performed. These tasks, thanks to threads, can exchange data, use shared data or the results of other tasks.
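A small Python sketch of threads sharing their process: four threads append to the same list, which the main thread can read afterwards, and all of them report the same process ID. The lock guards the shared list, since threads modifying shared data is exactly where synchronization is needed.

```python
import os
import threading

shared_results = []       # one list object, visible to every thread of the process
lock = threading.Lock()   # serializes access to the shared list


def worker(task_id: int) -> None:
    # Every thread runs this function inside the same process (same address space).
    with lock:
        shared_results.append((task_id, os.getpid()))


threads = [threading.Thread(target=worker, args=(i,)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for every thread to finish

print(sorted(shared_results))
print({pid for _, pid in shared_results} == {os.getpid()})  # True: one process, many threads
```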


🔗 References


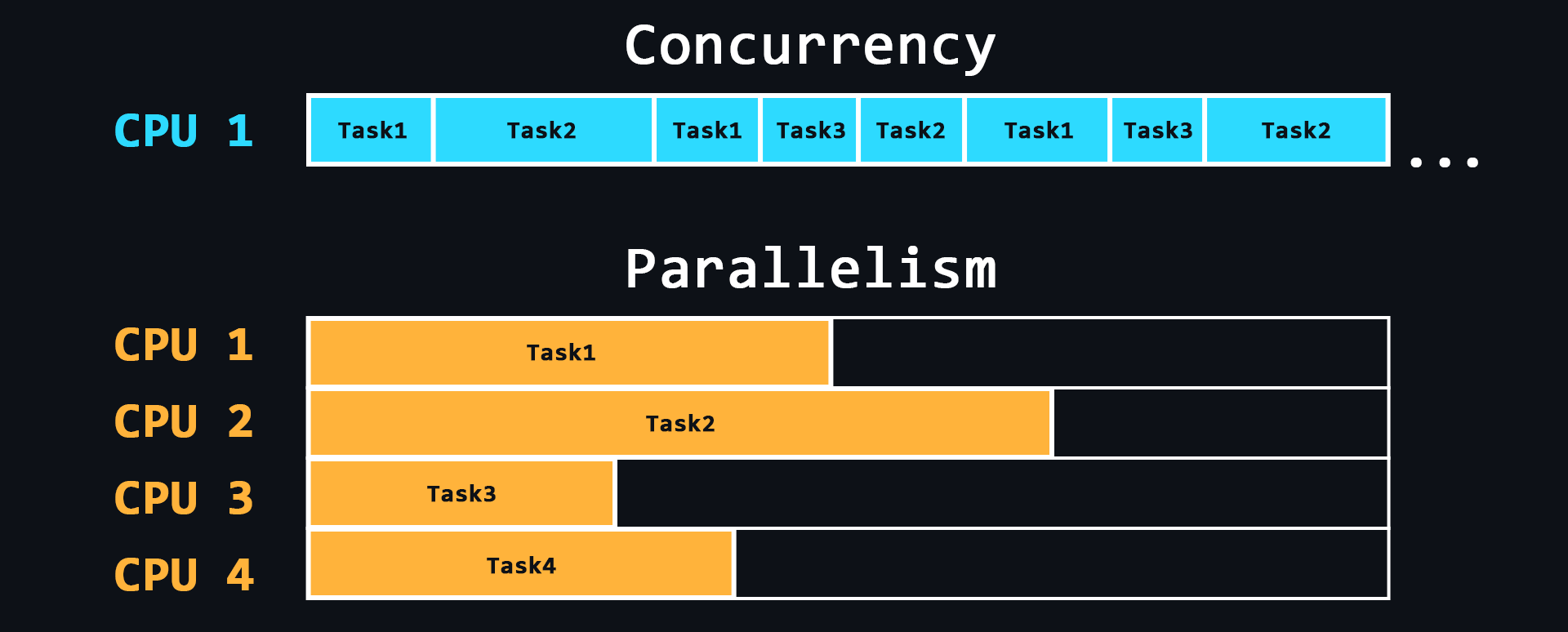

Concurrency and parallelism

- Parallelism

The ability to perform multiple tasks simultaneously using multiple processor cores, where each individual core performs a different task.

- Concurrency

The ability to perform multiple tasks, but using a single processor core. This is achieved by dividing tasks into separate blocks of commands which are executed in turn, but switching between these blocks is so fast that for users it seems as if these processes are running simultaneously.
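Concurrency on a single thread can be observed with Python's `asyncio`: each task voluntarily yields control with `await asyncio.sleep(0)`, and the event loop switches between them, so their steps interleave even though only one runs at any moment. For true parallelism of CPU-bound work, the standard CPython build would need multiple processes (for example `concurrent.futures.ProcessPoolExecutor`), since its threads do not execute Python code in parallel.

```python
import asyncio


async def task(name: str, steps: int, log: list) -> None:
    for i in range(steps):
        log.append(f"{name}{i}")
        await asyncio.sleep(0)  # give control back to the event loop


async def main() -> list:
    log = []
    # Both tasks run on one thread; the event loop switches between them.
    await asyncio.gather(task("A", 3, log), task("B", 3, log))
    return log


print(asyncio.run(main()))  # ['A0', 'B0', 'A1', 'B1', 'A2', 'B2']
```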


🔗 References


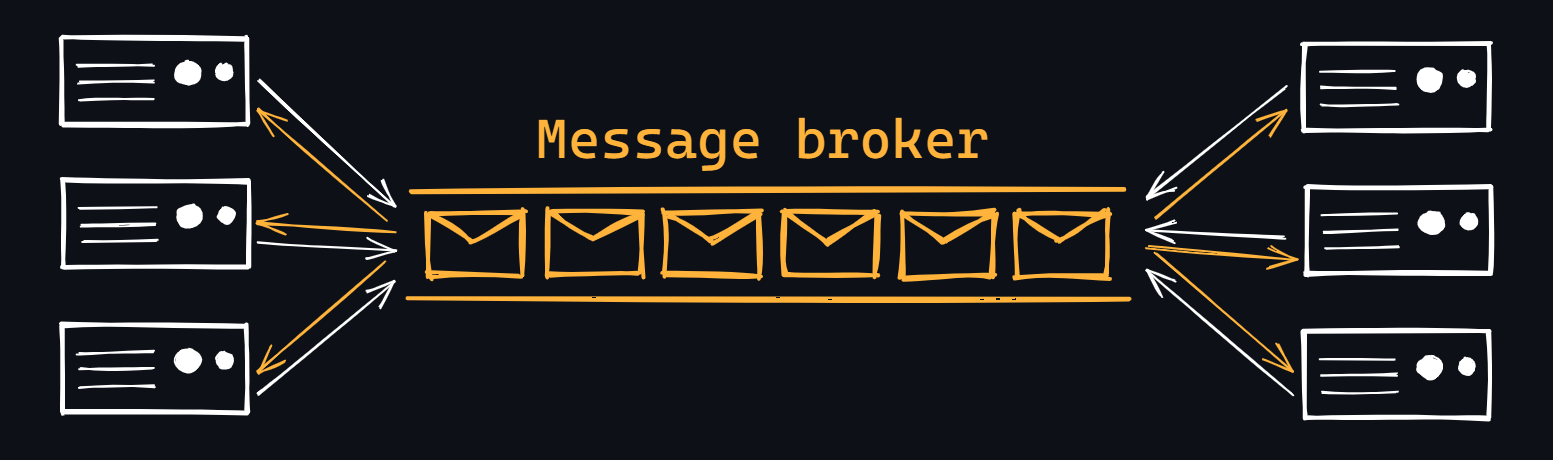

Inter-process communication

A mechanism that allows threads of the same or different processes to exchange data. The processes can run on the same computer or on different computers connected by a network. Inter-process communication can be done in different ways.

- File

The easiest way to exchange data. One process writes data to a certain file, another process reads the same file and thus receives data from the first process.

- Signal (IPC)

Asynchronous notification of one process about an event which occurred in another process.

- Network socket

Computers communicating over the TCP/IP protocol stack use IP addresses and ports. An (IP address, port) pair defines a socket, i.e. one endpoint of the communication.

- Semaphore

A counter that supports only two operations: increment and decrement. When the counter is 0, the decrement operation blocks until another process or thread increments it.

- Message passing & Message queue

- Pipelines

Redirecting the output of one process to the input of another (similar to a pipe).
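Two of the mechanisms above, sketched in Python. The pipe example starts a child Python process and connects the parent to the child's standard input and output; the child's code is a hypothetical one-liner. The semaphore example uses `threading.Semaphore`, which coordinates threads; `multiprocessing.Semaphore` offers the same operations between processes. A non-blocking `acquire` is used so the example shows the "blocked at 0" case without actually hanging.

```python
import subprocess
import sys
import threading

# Pipe: the parent writes to the child's stdin and reads the child's stdout.
child_code = "import sys; print(sys.stdin.read().upper(), end='')"
result = subprocess.run(
    [sys.executable, "-c", child_code],
    input="hello from the parent process",
    capture_output=True,
    text=True,
    check=True,
)
print(result.stdout)  # HELLO FROM THE PARENT PROCESS

# Semaphore: a counter that never goes below zero.
sem = threading.Semaphore(2)
print(sem.acquire(blocking=False))  # True  (2 -> 1)
print(sem.acquire(blocking=False))  # True  (1 -> 0)
print(sem.acquire(blocking=False))  # False (counter is 0: a blocking acquire would wait)
sem.release()                       # 0 -> 1
print(sem.acquire(blocking=False))  # True  (1 -> 0)
```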


🔗 References

Linux Basics

Operating systems based on the Linux kernel are the standard in the world of server development, since most servers run them. Using Linux on servers is cost-effective because it is free and open source, secure, and runs fast on cheap hardware.

There are a huge number of Linux distributions (preinstalled software bundles) to suit all tastes. One of the most popular is Ubuntu. This is where you can start your dive into server development.

Install Ubuntu on a separate PC or laptop. If this is not possible, you can use VirtualBox, a program that runs other operating systems on top of the main one. You can also run an Ubuntu image in a Docker container (Docker is covered as a separate topic in this repository).


Working with shell

Shell (or console, terminal) is a computer program used to operate and control a computer by entering special text commands. Servers generally do not have graphical interfaces (GUI), so you will definitely need to learn how to work with a shell. There are many Unix shells, but most Linux distributions come with Bash by default.

- Basic commands for navigating the file system

```bash
ls                           # list directory contents
cd [PATH]                    # go to the specified directory
cd ..                        # move one level up (to the parent directory)
touch [FILE]                 # create a file
cat > [FILE]                 # enter text into the file (overwrite)
cat >> [FILE]                # enter text at the end of the file (append)
cat/more/less [FILE]         # view the file contents
head/tail [FILE]             # view the first/last lines of a file
pwd                          # print the path to the current directory
mkdir [NAME]                 # create a directory
rmdir [NAME]                 # delete an (empty) directory
cp [FILE] [PATH]             # copy a file (cp -r for a directory)
mv [FILE] [PATH]             # move or rename
rm [FILE]                    # delete a file (rm -r for a directory)
find [PATH] -name [PATTERN]  # search the file system by file name
du [FILE]                    # show the size of a file or directory
grep [PATTERN] [FILE]        # print lines that match a pattern
```

- Commands for help information

```bash
man [COMMAND]      # view the manual for any command
apropos [STRING]   # search for commands whose description contains the given word
man -k [STRING]    # same as the command above
whatis [COMMAND]   # a brief description of the command
```

- Super user rights

Analogous to running as administrator in Windows.

```bash
sudo [COMMAND]  # execute a command with superuser privileges
```

- Text editor

Learn at least one of them so you can read and edit files freely in the terminal. The easiest is nano, micro is somewhere in the middle, and Vim is the most advanced.


🔗 References

- 📄 31 Linux Commands Every Ubuntu User Should Know

- 📄 The Linux Command Handbook – freeCodeCamp

- 📄 A to Z: List of Linux commands

- 📺 The 50 Most Popular Linux & Terminal Commands – YouTube

- 📺 Nano Editor Fundamentals – YouTube

- 📺 Vim Tutorial for Beginners – YouTube

- 📄 Awesome Terminals – GitHub

- 📄 Awesome CLI-apps – GitHub


Package manager

The package manager is a utility that allows you to install/update software packages from the terminal.

Linux distributions can be divided into several groups, depending on which package manager they use: apt (in Debian-based distributions), RPM (the Red Hat package management system), and Pacman (the package manager in Arch-like distributions).

Ubuntu is based on Debian, so it uses apt (advanced packaging tool) package manager.

- Basic commands

```bash
# commands that change the system (install, remove, update, ...) require sudo
apt install [package]   # install the package
apt remove [package]    # remove the package, but keep its configuration
apt purge [package]     # remove the package together with its configuration
apt update              # refresh information about available package versions
apt upgrade             # upgrade the packages installed in the system
apt list --installed    # list packages installed on the system
apt list --upgradable   # list packages that can be upgraded
apt search [package]    # search the package index by name/description
apt show [package]      # show package information
```

- aptitude

An interactive console utility for conveniently browsing packages and installing, updating, and removing them.

- Repository management

Package managers typically work with software repositories. These repositories contain a collection of software packages that are maintained and provided by the distribution's community or official sources.

```bash
add-apt-repository [repository_url]           # add a new repository
add-apt-repository --remove [repository_url]  # remove a repository
# don't forget to run `apt update` after these operations

/etc/apt/sources.list    # a file that contains the list of configured repositories
/etc/apt/sources.list.d  # a directory that contains files for third-party repositories
```

- dpkg

Low-level tool to install, build, remove and manage Debian packages.


Bash scripts

You can use scripts to automate the sequential input of any number of commands. In Bash you can create different conditions (branching), loops, timers, etc. to perform all kinds of actions related to shell input.

- Basics of Bash Scripts

The most basic and frequently used features, such as variables, I/O, loops, and conditions.

- Practice

Solve challenges on sites like HackerRank and Codewars. Start using Bash to automate routine activities on your computer. If you're already a programmer, create scripts that build your project, apply settings, and so on.

- ShellCheck script analysis tool

It will point out possible mistakes and teach you best practices for writing really good scripts.

- Additional resources

Repositories such as awesome bash and awesome shell have entire collections of useful resources and tools to help you develop even more skills with Bash and shell in general.


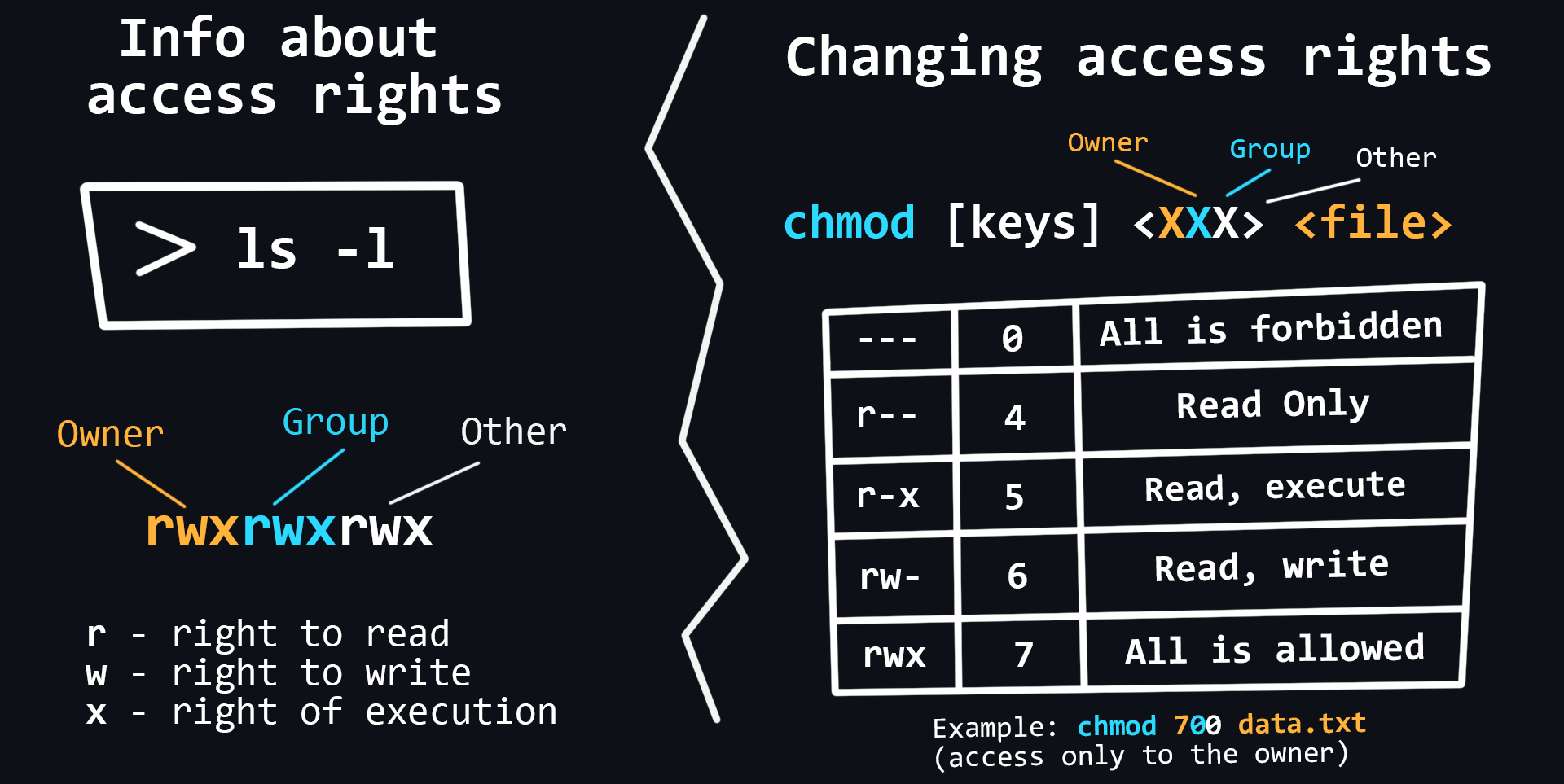

Users, groups, and permissions

Linux-based operating systems are multi-user. This means that several people can run many different applications at the same time on the same computer. For the Linux system to be able to "recognize" a user, that user must be logged in, so each user must have a unique name and a secret password.

- Working with users

```bash
useradd [flags] [name]  # create a new user
passwd [name]           # set a password for the user
usermod [flags] [name]  # edit a user
usermod -L [name]       # lock a user account
usermod -U [name]       # unlock a user account
userdel [flags] [name]  # delete a user
su [name]               # switch to another user
```

- Working with groups

```bash
groupadd [flags] [group]         # create a group
groupmod [flags] [group]         # edit a group
groupdel [flags] [group]         # delete a group
usermod -a -G [groups] [user]    # add a user to groups (comma-separated list)
gpasswd --delete [user] [group]  # remove a user from a group
```

- System files

```bash
/etc/passwd   # a file containing basic information about users
/etc/shadow   # a file containing hashed user passwords
/etc/group    # a file containing basic information about groups
/etc/gshadow  # a file containing hashed group passwords
```
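Each line of `/etc/passwd` holds seven colon-separated fields: user name, password placeholder (`x` means the hash is kept in `/etc/shadow`), UID, GID, comment (GECOS), home directory, and login shell. The minimal sketch below parses one such line; the `alex` entry is a made-up sample, and on a real system Python's standard `pwd` module already reads this database for you.

```python
from typing import NamedTuple

class PasswdEntry(NamedTuple):
    name: str
    password: str  # "x" means the real hash is stored in /etc/shadow
    uid: int
    gid: int
    gecos: str     # free-form comment, often the user's full name
    home: str
    shell: str

def parse_passwd_line(line: str) -> PasswdEntry:
    """Split one /etc/passwd line into its seven colon-separated fields."""
    fields = line.rstrip("\n").split(":")
    if len(fields) != 7:
        raise ValueError(f"expected 7 fields, got {len(fields)}")
    name, password, uid, gid, gecos, home, shell = fields
    return PasswdEntry(name, password, int(uid), int(gid), gecos, home, shell)

# Hypothetical sample entry
entry = parse_passwd_line("alex:x:1000:1000:Alex,,,:/home/alex:/bin/bash")
print(entry.name, entry.uid, entry.home, entry.shell)  # alex 1000 /home/alex /bin/bash
```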

On Linux, it is possible to share privileges between users, limit access to unwanted files or features, control the actions available to services, and much more. There are three basic kinds of rights (read, write, and execute) and three categories of users they can be applied to (the file owner, the file group, and everyone else).

- Basic commands for working with rights

```bash
chown <user>[:<group>] <file>  # change the owner and/or group of the specified files
chmod <rights> <file>          # change access rights to files and directories
chgrp <group> <file>           # change the group of the specified files
```

- Extended rights: SUID, SGID, and sticky bit

- ACL (Access control list)

An advanced subsystem for managing access rights.
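The `<rights>` argument of `chmod` is often written in octal: each user category (owner, group, others) gets one digit that adds up read (4), write (2), and execute (1). Below is a small sketch of the conversion in both directions. The function names are made up for illustration; `stat.filemode` is the standard-library helper that renders a mode the way `ls -l` does.

```python
import stat

def symbolic_to_octal(perms: str) -> str:
    """Convert a 9-character string like 'rwxr-xr--' to octal digits like '754'."""
    if len(perms) != 9:
        raise ValueError("expected 9 characters, e.g. 'rwxr-xr--'")
    digits = []
    for i in range(0, 9, 3):  # owner, group, others
        value = 0
        for char, letter, weight in zip(perms[i:i + 3], "rwx", (4, 2, 1)):
            if char == letter:
                value += weight
            elif char != "-":
                raise ValueError(f"unexpected character {char!r}")
        digits.append(str(value))
    return "".join(digits)

def octal_to_symbolic(mode: str) -> str:
    """Convert octal digits like '644' back to 'rw-r--r--'."""
    # stat.filemode() expects a full mode, so mark it as a regular file (S_IFREG)
    # and drop the leading '-' that stands for the file type.
    return stat.filemode(stat.S_IFREG | int(mode, 8))[1:]

print(symbolic_to_octal("rwxr-xr--"))  # 754
print(octal_to_symbolic("644"))        # rw-r--r--
```

In other words, `chmod 754 script.sh` and `chmod u=rwx,g=rx,o=r script.sh` set the same rights.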


🔗 References

- 📄 Managing Users, Groups, and Permissions on Linux

- 📄 Linux User Groups Explained – freeCodeCamp

- 📺 Linux Users and Groups – YouTube

- 📄 An Introduction to Linux Permissions – Digital Ocean

- 📄 File Permissions on Linux – How to Use the chmod Command – freeCodeCamp

- 📺 Understanding File & Directory Permissions – YouTube


Working with processes

Linux processes can be described as containers in which all information about the state of a running program is stored. Sometimes programs can hang and in order to force them to close or restart, you need to be able to manage processes.

- Basic Commands

```bash
ps aux             # display a snapshot of the processes of all users
top                # real-time task manager
[command] &        # run the process in the background (without occupying the shell)
jobs               # list of processes running in the background
fg %[JOB]          # bring a background job back to the foreground by its job number
# press [Ctrl+Z] to suspend (stop) the process running in the foreground
bg %[JOB]          # resume a stopped job in the background
kill [PID]         # terminate the process by PID (sends SIGTERM by default)
killall [program]  # terminate all processes related to the program
```
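Under the hood, `kill [PID]` simply sends a signal (SIGTERM by default) to the process with that PID. A minimal Python sketch of the same idea, assuming a Linux system with the standard `sleep` binary: it starts a child process, prints its PID, and terminates it the way `kill` would.

```python
import os
import signal
import subprocess

def start_and_terminate() -> int:
    """Start `sleep 60` as a child process, then stop it with SIGTERM."""
    child = subprocess.Popen(["sleep", "60"])
    print(f"started child with PID {child.pid}")
    # Same as `kill <PID>` in the shell: SIGTERM is kill's default signal.
    os.kill(child.pid, signal.SIGTERM)
    child.wait()  # reap the child so it does not stay behind as a zombie
    # Popen reports "killed by signal N" as a negative return code.
    return child.returncode

print(start_and_terminate())  # -15 on Linux, where SIGTERM is signal 15
```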


Working with SSH

SSH allows remote access to another computer's terminal. In the case of a personal computer, this may be needed to solve an urgent problem, and in the case of working with the server, remote access via SSH is an integral and regularly used practice.

- Basic commands

```bash
apt install openssh-server          # installing SSH (out of the box almost everywhere)
service ssh start                   # start SSH
service ssh stop                    # stop SSH
ssh -p [port] [user]@[remote_host]  # connect to a remote machine via SSH
```

- Passwordless login

```bash
ssh-keygen -t rsa                                  # RSA key generation for passwordless login
ssh-copy-id -i ~/.ssh/id_rsa [user]@[remote_host]  # copy the key to a remote machine
```

- Config files

```bash
/etc/ssh/sshd_config    # global SSH server config
~/.ssh/config           # per-user SSH client config
~/.ssh/authorized_keys  # public keys allowed to log in to this account
```


Network utils

For Linux there are many built-in and third-party utilities to help you configure your network, analyze it and fix possible problems.

- Simple utils

```bash
ip address                # show info about IPv4 and IPv6 addresses of your devices
ip monitor                # monitor the state of devices in real time
ifconfig                  # configure the network adapter and IP protocol settings
traceroute <host>         # show the route taken by packets to reach the host
tracepath <host>          # trace the path to the destination host, discovering the MTU
ping <host>               # check connectivity to the host
ss -at                    # show all TCP sockets (listening and established)
dig <host>                # query DNS records of the host
host <host | ip-address>  # show the IP address of a domain (or the domain of an IP address)
mtr <host | ip-address>   # combination of ping and traceroute
nslookup                  # query Internet name servers interactively
whois <host>              # show info about domain registration
ifplugstatus              # detect the link status of a local Ethernet device
iftop                     # show bandwidth usage
ethtool <device name>     # show details about your Ethernet device
nmap                      # tool to explore and audit network security
bmon                      # bandwidth monitor and rate estimator
firewalld                 # firewall daemon: add, configure, and remove firewall rules (via firewall-cmd)
iperf                     # perform network performance measurement and tuning
speedtest-cli             # check your network download/upload speed
wget <link>               # download files from the Internet
```

- tcpdump

A console utility that allows you to intercept and analyze all network traffic passing through your computer.

- netcat

A utility for reading from and writing to network connections using TCP or UDP. It can scan ports, transfer files, and listen on ports; as with any server, it can also be used as a backdoor.

- iptables

A user-space utility program that allows you to configure the IP packet filter rules of the Linux kernel firewall, implemented as different Netfilter modules. The filters are organized in different tables, which contain chains of rules for how to treat network traffic packets.

- curl

A command-line tool for transferring data using various network protocols.
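A common everyday check with these tools is "is anything listening on this port?" (for example `nc -z host port`). Here is a minimal Python sketch of the same TCP-level check using only the standard `socket` module; the helper name `port_is_open` is made up for illustration, and the demo opens its own throwaway listener on localhost so it needs no network access.

```python
import socket

def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection resolves the host and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timeout, unreachable host, DNS failure, ...
        return False

# Demo: listen on a free local port, then probe it.
with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
    server.bind(("127.0.0.1", 0))  # port 0 asks the OS for any free port
    server.listen()
    free_port = server.getsockname()[1]
    # True: the kernel completes the handshake into the listen backlog
    print(port_is_open("127.0.0.1", free_port))
```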


🔗 References

- 📄 21 Basic Linux Networking Commands You Should Know

- 📄 Using tcpdump Command on Linux to Analyze Network

- 📺 tcpdump - Traffic Capture & Analysis – YouTube

- 📺 tcpdumping Node.js server – YouTube

- 📄 Beginner’s guide to Netcat for hackers

- 📄 Iptables Tutorial

- 📄 An intro to cURL: The basics of the transfer tool

- 📺 Basic cURL Tutorial – YouTube

- 📺 Using cURL better - tutorial by cURL creator Daniel Stenberg – YouTube

- 📄 Awesome console services – GitHub


Task scheduler

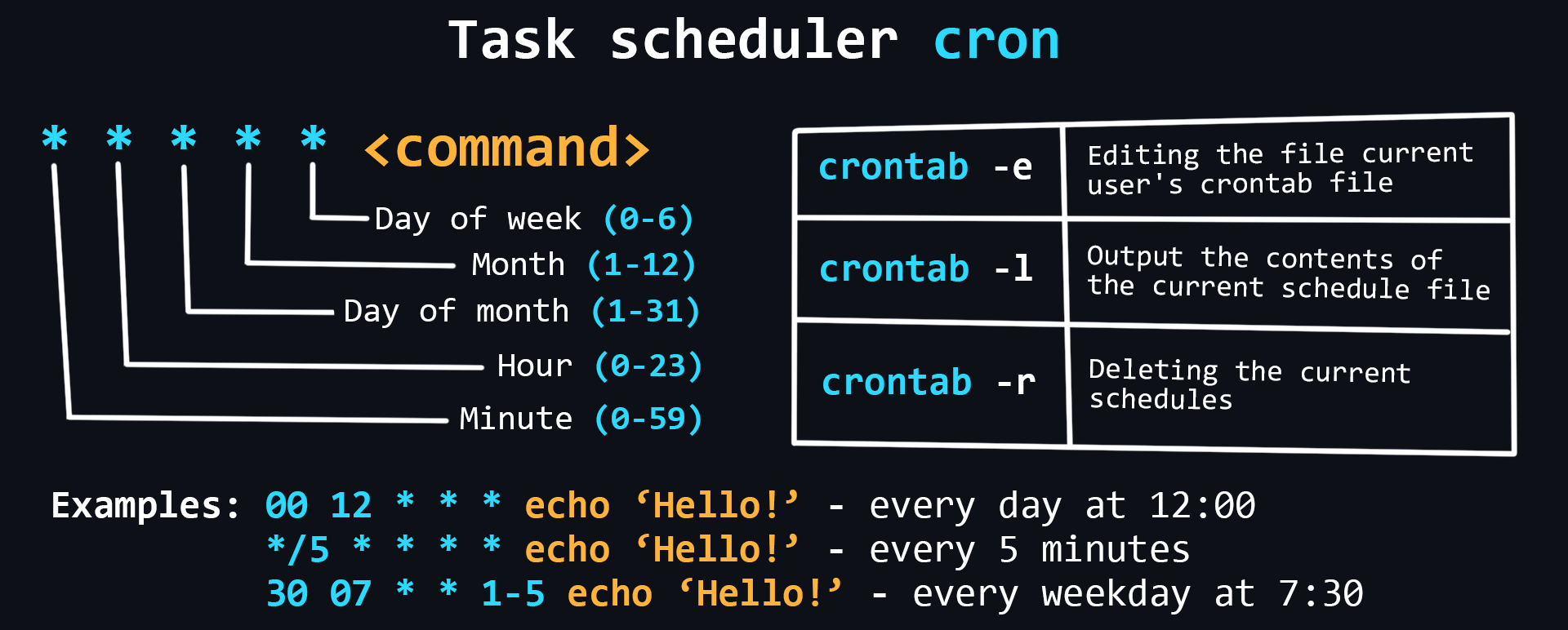

Schedulers allow you to flexibly manage the delayed running of commands and scripts. Linux has a built-in cron scheduler that can be used to easily perform necessary actions at certain intervals.

- Main commands

```bash
crontab -e  # edit the crontab file of the current user
crontab -l  # output the contents of the current schedule file
crontab -r  # delete the current schedule file
```

- Files and directories

```bash
/etc/crontab        # base config
/etc/cron.d/        # a directory with crontab files used to manage the entire system
# directories where you can store scripts that run:
/etc/cron.daily/    # every day
/etc/cron.weekly/   # every week
/etc/cron.monthly/  # every month
```
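Each crontab line starts with five time fields (minute, hour, day of month, month, day of week) followed by the command, e.g. `30 2 * * * /path/to/backup.sh` (a hypothetical script) runs every night at 02:30. To show how those fields are read, here is a simplified matcher in Python. It is a sketch, not cron's actual implementation: it supports `*`, numbers, ranges, lists, and steps, but not names like `MON`, not `7` for Sunday, and it requires both day fields to match (real cron fires when either matches if both are restricted).

```python
from datetime import datetime

# (minimum, maximum) for: minute, hour, day of month, month, day of week (0 = Sunday)
FIELD_RANGES = [(0, 59), (0, 23), (1, 31), (1, 12), (0, 6)]

def field_matches(field: str, value: int, low: int, high: int) -> bool:
    """Check one cron field such as '*', '5', '1-5', '*/15' or '1,15' against a value."""
    for part in field.split(","):
        base, _, step_text = part.partition("/")
        step = int(step_text) if step_text else 1
        if base == "*":
            start, end = low, high
        elif "-" in base:
            start_text, end_text = base.split("-")
            start, end = int(start_text), int(end_text)
        else:
            start = int(base)
            # a step after a single number ('5/10') is treated as '5-max/10'
            end = high if step_text else start
        if start <= value <= end and (value - start) % step == 0:
            return True
    return False

def cron_matches(expression: str, moment: datetime) -> bool:
    """Return True if a five-field cron expression fires at the given minute."""
    fields = expression.split()
    if len(fields) != 5:
        raise ValueError("expected 5 fields: minute hour day month weekday")
    weekday = (moment.weekday() + 1) % 7  # Python: Monday = 0; cron: Sunday = 0
    values = [moment.minute, moment.hour, moment.day, moment.month, weekday]
    return all(
        field_matches(field, value, low, high)
        for field, value, (low, high) in zip(fields, values, FIELD_RANGES)
    )

# "Every 15 minutes from 09:00 to 17:45, Monday to Friday"
print(cron_matches("*/15 9-17 * * 1-5", datetime(2024, 1, 15, 9, 30)))  # True (a Monday)
print(cron_matches("*/15 9-17 * * 1-5", datetime(2024, 1, 14, 9, 30)))  # False (a Sunday)
```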


System logs

Log files are special text files that contain all information about the operation of a computer, program, or user. They are especially useful when bugs and errors occur in the operation of a program or server. It is recommended to periodically review log files, even if nothing suspicious happens.

- Main log files

```bash
/var/log/syslog or /var/log/messages  # information about the kernel, various services,
                                      # detected devices, network interfaces, etc.
/var/log/auth.log or /var/log/secure  # user authorization information
/var/log/faillog                      # failed login attempts
/var/log/dmesg                        # information about device drivers
/var/log/boot.log                     # operating system boot information
/var/log/cron                         # cron task scheduler report
```

- lnav utility

Designed for easy viewing of log files (highlighting, reading different formats, searching, etc.).

- Log rotation with logrotate

Allows you to configure automatic rotation, compression, and deletion of old log files so that they do not fill up the disk.

- journald daemon

Collects log data from all available sources and stores it in a binary format for convenient and dynamic querying (for example, with the `journalctl` command).
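Reviewing logs often comes down to searching them for a pattern. As an example, this Python sketch counts failed SSH password attempts per source IP address. The message format in the regular expression is an assumption based on typical OpenSSH `sshd` lines, and the sample lines (including the IP addresses) are made up.

```python
import re
from collections import Counter
from typing import Iterable

# Assumed sshd message format, e.g.:
# "Jan 15 09:30:01 server sshd[1234]: Failed password for root from 203.0.113.5 port 22 ssh2"
FAILED_LOGIN = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+) port \d+")

def failed_logins_by_ip(lines: Iterable[str]) -> Counter:
    """Count failed SSH password attempts per source IP address."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(2)] += 1
    return counts

sample = [
    "Jan 15 09:30:01 server sshd[1234]: Failed password for root from 203.0.113.5 port 22 ssh2",
    "Jan 15 09:30:05 server sshd[1234]: Failed password for invalid user admin from 203.0.113.5 port 22 ssh2",
    "Jan 15 09:31:10 server sshd[1250]: Accepted publickey for alex from 198.51.100.7 port 51234 ssh2",
    "Jan 15 09:32:00 server sshd[1260]: Failed password for alex from 198.51.100.7 port 51240 ssh2",
]
print(failed_logins_by_ip(sample).most_common())
# [('203.0.113.5', 2), ('198.51.100.7', 1)]
```

On a real server you could pass an open file instead, e.g. `open("/var/log/auth.log")`, because file objects are iterated line by line.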


Main issues with Linux

- Software installation and package management issues

- Unmet dependencies: occurs when a package fails to install or update because the packages it depends on are missing or have incompatible versions.

- Dependency errors and conflicts

- Problems with drivers

Most free Linux drivers are built right into the kernel. Therefore, everything should work "out of the box" after installing the system (problems may occur with brand-new hardware that has just been released on the market). Drivers whose source code is closed are considered proprietary; they are not included in the kernel and are installed manually (for example, Nvidia graphics drivers).

- File system issues

- Check disk space availability using the `df` command and ensure that critical partitions are not full.

- Use the `fsck` command to check and repair file system inconsistencies (run it on unmounted partitions).

- In case of data loss or accidental deletion, use data recovery tools like `extundelete` or `testdisk`.

- Performance and resource management

- Check memory and disk space usage with the `free`, `df`, or `du` commands.

- Identify resource-intensive processes (CPU and memory hogs) using tools like `top`, `htop`, or `systemd-cgtop`.

- Disable unnecessary startup services or background processes to improve performance.

- Network connectivity issues

- Use the ping command to check network connectivity to a specific host or IP address.

- Check the network settings, such as IP configuration, DNS settings, and firewall rules.

- Problems with kernel

Kernel panic: can occur, for example, due to an error when mounting the root file system. The most useful skill here is reading the logs to find the cause of the problem (the `dmesg` command).

General knowledge

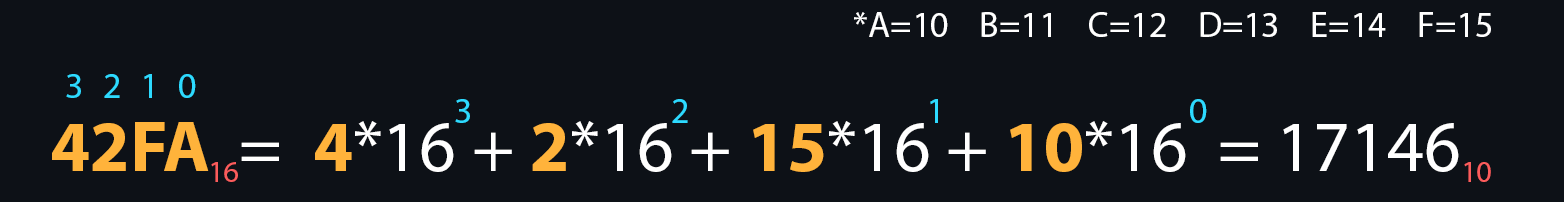

Numeral systems

A numeral system is a set of symbols and rules for denoting numbers. In computer science, it is customary to distinguish four main number systems: binary, octal, decimal, and hexadecimal. This is primarily due to their use in various branches of programming.

- Binary number

The most important system for computing technology. Its use is justified by the fact that the logic of the processor is based on only two states (on/off, open/closed, high/low, true/false, yes/no).

- Octal

It is used, for example, on Linux systems to set file access rights.

- Decimal

A system that is easy to understand for most people.

- Hexadecimal

In addition to the digits 0-9, the letters A, B, C, D, E, F are used to represent the values 10-15. It is widely used in low-level programming and computer documentation because the minimum addressable memory unit is an 8-bit byte, whose values are conveniently written as two hexadecimal digits.

- Conversion between number systems

You can try an online converter for a better understanding.
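Conversion from decimal to another base works by repeated division: divide by the base, write down the remainder, and continue with the quotient until it reaches zero; the remainders read in reverse give the digits. The reverse direction multiplies by the base and adds each digit. A small Python sketch of both (the function names are made up for illustration):

```python
DIGITS = "0123456789ABCDEF"

def to_base(number: int, base: int) -> str:
    """Convert a non-negative integer to the given base (2..16) by repeated division."""
    if not 2 <= base <= 16:
        raise ValueError("base must be between 2 and 16")
    if number < 0:
        raise ValueError("only non-negative numbers are supported")
    if number == 0:
        return "0"
    digits = []
    while number > 0:
        number, remainder = divmod(number, base)
        digits.append(DIGITS[remainder])  # remainders come out lowest digit first
    return "".join(reversed(digits))

def from_base(text: str, base: int) -> int:
    """Convert a string of digits in the given base back to an integer."""
    value = 0
    for char in text.upper():
        digit = DIGITS.index(char)  # raises ValueError for unknown characters
        if digit >= base:
            raise ValueError(f"digit {char!r} is not valid in base {base}")
        value = value * base + digit
    return value

for base in (2, 8, 10, 16):
    print(base, to_base(2024, base))
# 2 11111101000
# 8 3750
# 10 2024
# 16 7E8
```

In everyday code, Python's built-ins `bin()`, `oct()`, `hex()`, and `int(text, base)` do the same job.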


Logical connective

Logical connectives are widely used in programming to handle boolean values (true/false or 1/0). The result of a boolean expression is also a boolean value.

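AND

| a | b | a AND b |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 0 |
| 1 | 0 | 0 |
| 1 | 1 | 1 |

OR

| a | b | a OR b |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 1 |

XOR

| a | b | a XOR b |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

- Basic logical operations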

They are the basis for all other kinds of operations.

There are three in total: AND (`&&`, conjunction), OR (`||`, disjunction), and NOT (`!`, negation).

- Exclusive OR (XOR, addition modulo 2)

An important operation that is fundamental to coding theory and computer networks.

- Truth Tables

For logical operations, there are special tables that describe the input data and the return result.

- Priority of operations

The `NOT` operator has the highest priority, followed by `AND`, and then `OR`. You can change this order using parentheses.
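The truth tables and priority rules above can be checked directly in code. In this Python sketch, the bitwise operators `&`, `|`, `^` act as AND, OR, XOR on the bits 0 and 1, while Python spells the logical operators `and`, `or`, `not` instead of `&&`, `||`, `!`. The names `OPERATIONS` and `truth_table` are made up for illustration.

```python
from itertools import product

OPERATIONS = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
}

def truth_table(operation):
    """Return (a, b, result) rows for every combination of two bits."""
    return [(a, b, operation(a, b)) for a, b in product((0, 1), repeat=2)]

for name, operation in OPERATIONS.items():
    print(name, truth_table(operation))

# XOR is addition modulo 2
assert all((a ^ b) == (a + b) % 2 for a, b in product((0, 1), repeat=2))

# Priority: NOT, then AND, then OR
a, b, c = False, True, False
print(not a or b and c)    # True: evaluated as (not a) or (b and c)
print((not a or b) and c)  # False: the parentheses make OR go first
```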


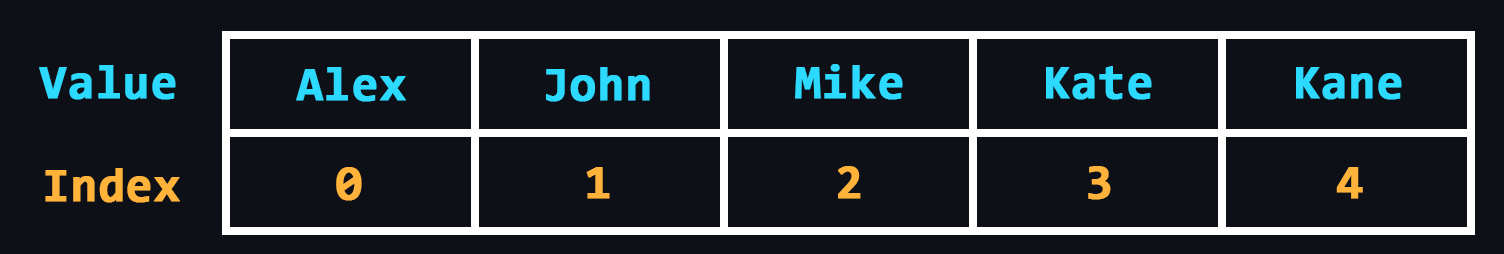

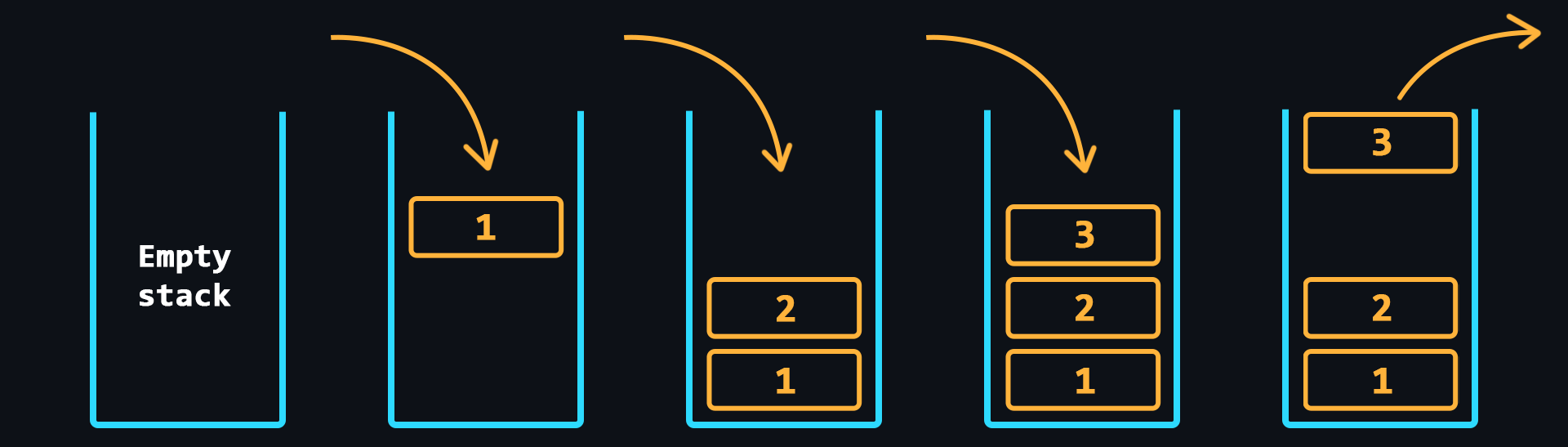

Data structures

Data structures are containers in which data is stored according to certain rules. Depending on these rules, the data structure will be effective in some tasks and ineffective in others. Therefore, it is necessary to understand when and where to use this or that structure.

- Array

A data structure that stores elements of the same type, where each element is identified by its own sequence number (index).

- Linked list

A data structure where all elements, in addition to the data, contain references to the next and/or previous element. There are 3 varieties:

- A singly linked list is a list where each element stores a link to the next element only (one direction).

- A doubly linked list is a list where the items contain links to both the next item and the previous one (two directions).

- A circular linked list is a list in which the last element contains a pointer to the first one; in a doubly linked circular list, the first element also points to the last.

- Stack

Structure where data storage works on the principle of last in - first out (LIFO).

- Queue

Structure where data storage is based on the principle of first in - first out (FIFO).

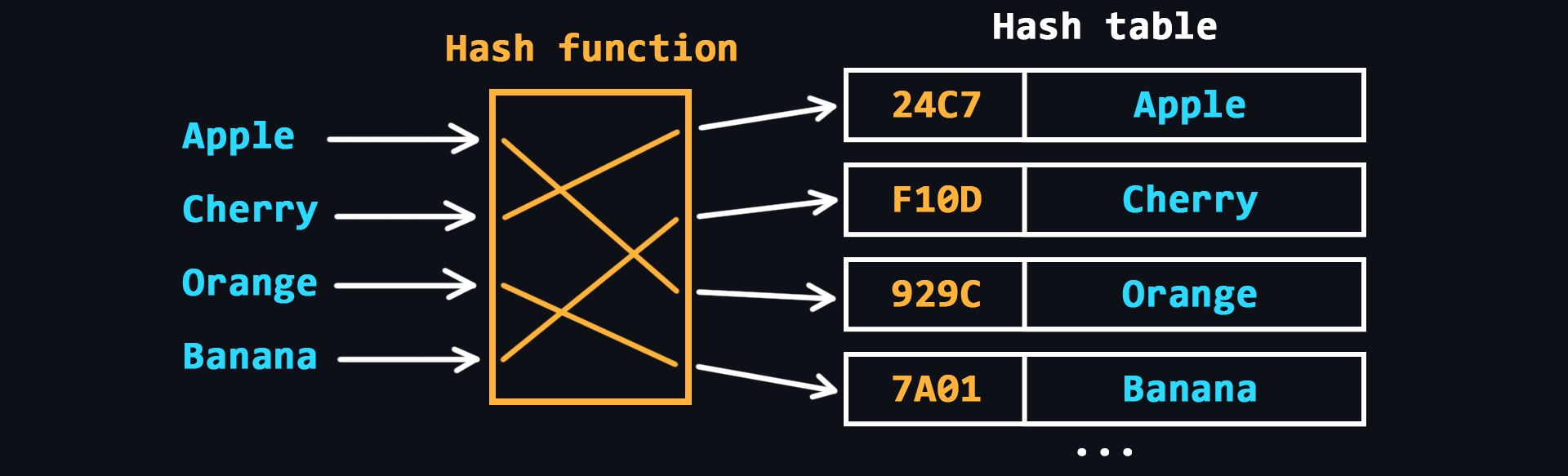

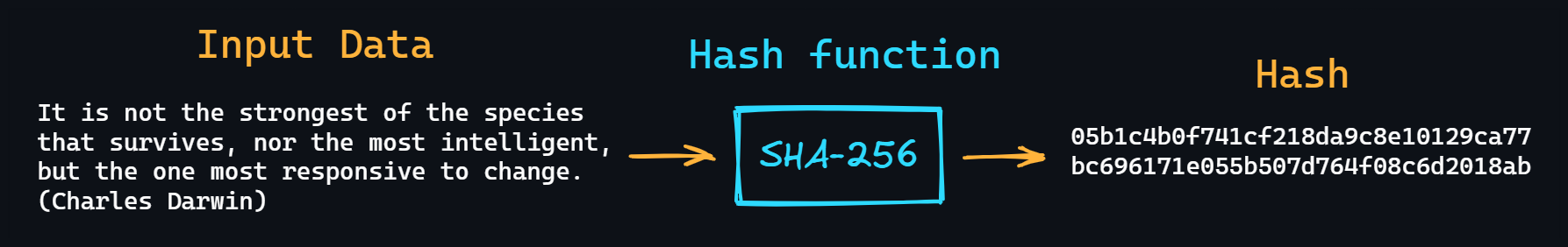

- Hash table

An implementation of an associative array: each element is accessed by its key, and the position where the element is stored is calculated from the key using a hash function.

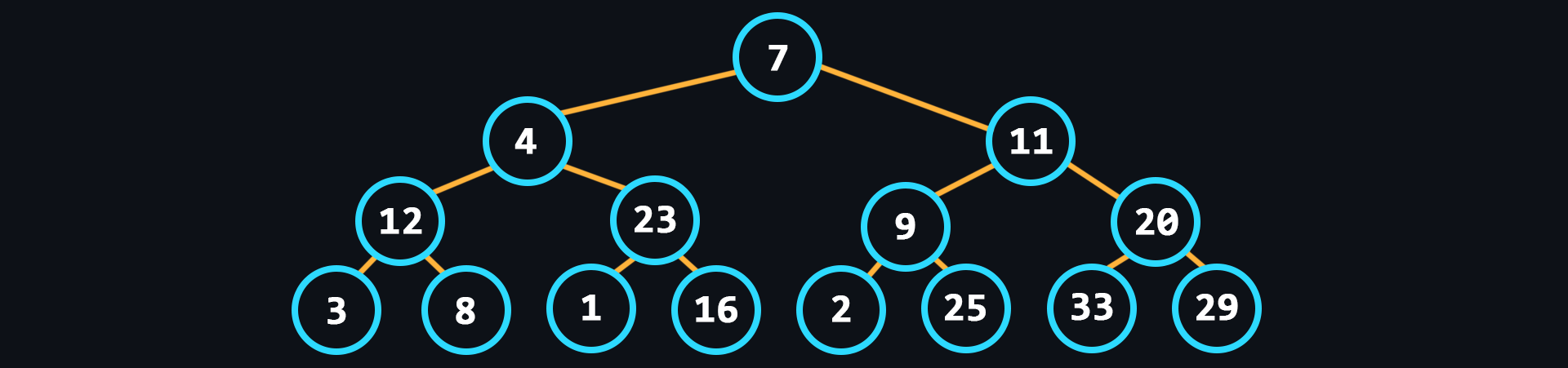

- Tree

Structure with a hierarchical model, as a set of related elements, usually not ordered in any way.

- Heap

A tree-based structure in which every parent's key is greater than or equal to its children's keys, so the item with the largest key is at the root (max-heap). It can also be the other way around, with the smallest key at the root; then it is a min-heap.

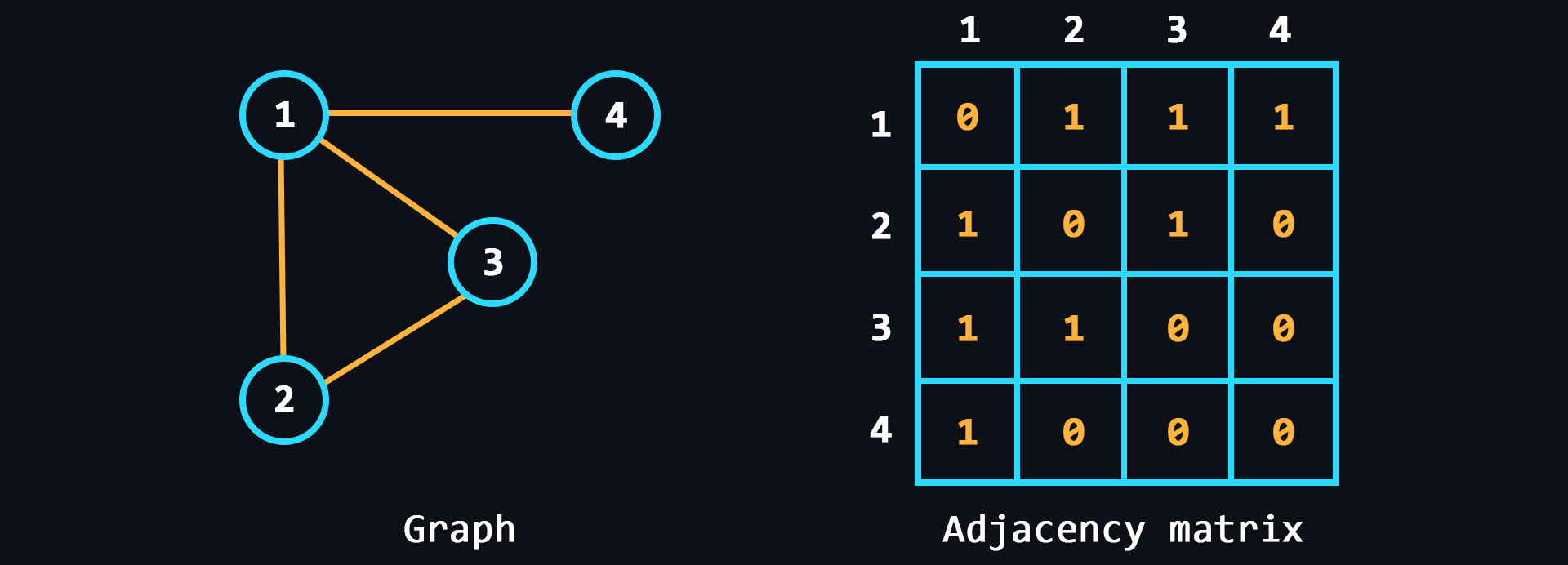

- Graph

A structure of vertices (nodes) connected by edges, designed to work with a large number of links between objects.
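To make LIFO, FIFO, and the heap property concrete, here is a short Python sketch. The stack is built by hand on a singly linked list (the class names are made up for illustration); the queue and the heap use the standard `collections.deque` and `heapq` modules, and note that `heapq` maintains a min-heap.

```python
import heapq
from collections import deque
from dataclasses import dataclass
from typing import Any, Optional

@dataclass
class Node:
    """Element of a singly linked list: a value plus a link to the next node."""
    value: Any
    next_node: Optional["Node"] = None

class LinkedStack:
    """Stack (LIFO) on top of a singly linked list: push and pop work at the head in O(1)."""

    def __init__(self) -> None:
        self._head: Optional[Node] = None

    def push(self, value: Any) -> None:
        self._head = Node(value, self._head)  # the new node points to the old head

    def pop(self) -> Any:
        if self._head is None:
            raise IndexError("pop from empty stack")
        node = self._head
        self._head = node.next_node
        return node.value

stack = LinkedStack()
for item in (1, 2, 3):
    stack.push(item)
print(stack.pop(), stack.pop(), stack.pop())  # 3 2 1 (last in, first out)

queue = deque()
for item in (1, 2, 3):
    queue.append(item)  # enqueue at the back
print(queue.popleft(), queue.popleft(), queue.popleft())  # 1 2 3 (first in, first out)

heap = []
for item in (5, 1, 4, 2):
    heapq.heappush(heap, item)
print(heap[0])  # 1: the smallest item is at the root of a min-heap
```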


🔗 References

- 📺 10 Key Data Structures We Use Every Day – YouTube

- 📺 CS50 2022 - Lecture about Data Structures – YouTube

- 📺 Data Structures Easy to Advanced Course – YouTube

- 📄 Free courses to learn data structures and algorithms in depth – freeCodeCamp

- 📄 Data Structures: collection of topics – GeeksForGeeks

- 📄 JavaScript Data Structures and Algorithms – GitHub

- 📄 Go Data Structures – GitHub


Basic algorithms

Algorithms refer to sets of sequential instructions (steps) that lead to the solution of a given problem. Throughout human history, a huge number of algorithms have been invented to solve certain problems in the most efficient way. Accordingly, the correct choice of algorithms in programming will allow you to create the fastest and least resource-intensive solutions.

There is a very good book about algorithms for beginners: Grokking Algorithms. You can read it in parallel with learning a programming language.

- Binary search

A highly efficient search algorithm for sorted lists: at each step it halves the search range, so it needs only O(log n) comparisons.

- Selection sort

At each step, the minimum element of the unsorted part is found and swapped with the element at the current position.

- Recursion

A function calls itself, each time on a smaller part of the problem, until it reaches a base case that stops the recursion. Recursion-based solutions often look very elegant, but each call takes up space on the call stack, so deep recursion can lead to a stack overflow; in such cases an iterative solution is safer.

- Bubble sort

At each iteration neighboring elements are sequentially compared, and if the order of the pair is wrong, the elements are swapped.

- Quicksort

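An improved exchange sorting method (compared to bubble sort) based on divide and conquer: a pivot element is chosen, the remaining elements are split into those less than and greater than the pivot, and each part is sorted recursively.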

- Breadth-first search

Finds the shortest paths (in number of edges) from a given vertex to all other vertices of an unweighted graph.

- Dijkstra's algorithm

Finds the shortest paths, and their lengths, from a given vertex to all other vertices of a graph whose edge weights are non-negative.

- Greedy algorithm

An algorithm that at each step makes locally the best choice in the hope that the final solution will be optimal.
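Two of the algorithms above, sketched in Python for illustration: an iterative binary search (which also sidesteps the recursion-depth concern) and Dijkstra's algorithm using a priority queue from the standard `heapq` module, which is one common way to implement it. The graph and its weights are made-up example data, stored as a dictionary of dictionaries.

```python
import heapq
from typing import Dict, Optional, Sequence

def binary_search(items: Sequence[int], target: int) -> Optional[int]:
    """Return the index of target in the sorted sequence items, or None if it is absent."""
    low, high = 0, len(items) - 1
    while low <= high:
        mid = (low + high) // 2
        if items[mid] == target:
            return mid
        if items[mid] < target:
            low = mid + 1   # the target can only be in the right half
        else:
            high = mid - 1  # the target can only be in the left half
    return None

def dijkstra(graph: Dict[str, Dict[str, int]], source: str) -> Dict[str, int]:
    """Shortest distances from source to every reachable vertex (weights must be non-negative)."""
    distances = {source: 0}
    queue = [(0, source)]  # min-heap of (distance so far, vertex)
    while queue:
        dist, vertex = heapq.heappop(queue)
        if dist > distances[vertex]:
            continue  # stale entry: a shorter path to this vertex was already found
        for neighbour, weight in graph.get(vertex, {}).items():
            new_dist = dist + weight
            if new_dist < distances.get(neighbour, float("inf")):
                distances[neighbour] = new_dist
                heapq.heappush(queue, (new_dist, neighbour))
    return distances

print(binary_search([1, 3, 5, 7, 9, 11], 7))  # 3

graph = {
    "A": {"B": 6, "C": 2},
    "C": {"B": 3, "D": 5},
    "B": {"D": 1},
}
print(dijkstra(graph, "A"))  # {'A': 0, 'B': 5, 'C': 2, 'D': 6}
```

Note that the direct edge A→B (weight 6) loses to the path A→C→B (2 + 3 = 5), which is exactly the case Dijkstra's algorithm is designed to catch.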


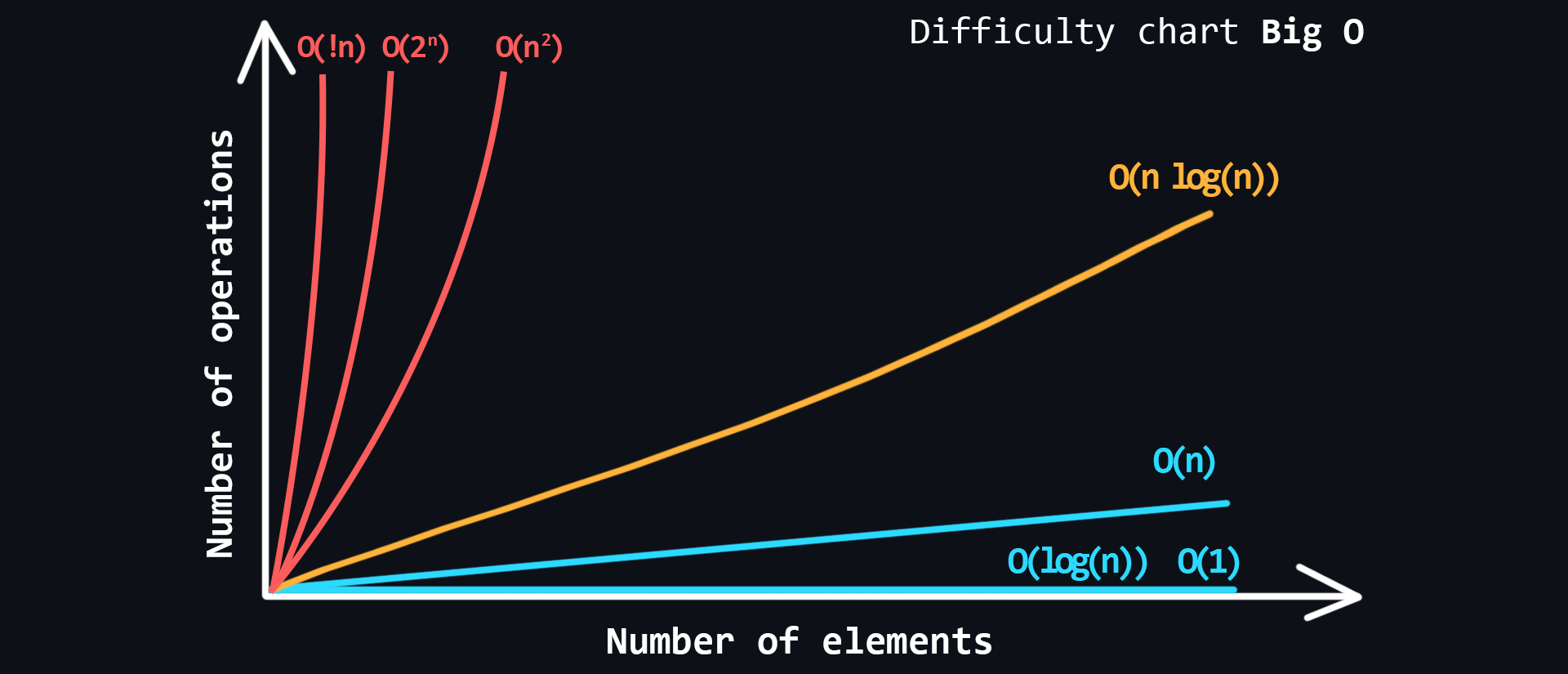

Algorithm complexity

In the world of programming there is a special notation for this: Big O notation. It describes how the complexity of an algorithm grows with the amount of input data. Big O estimates how many actions (steps/iterations) it takes to execute the algorithm, and it is most commonly used to describe the worst-case scenario.

- Main types of complexity

- Constant O(1) – the fastest.

- Logarithmic O(log n)

- Linear O(n)

- Linearithmic O(n * log n)

- Quadratic O(n^2)

- Exponential O(2^n)

- Factorial O(n!) – the slowest.

- Time complexity

Time complexity describes how the running time of an algorithm grows with the size of the input. When you know in advance which machine the algorithm will run on, you can also measure its actual execution time. On very good hardware the execution time can be quite acceptable, but the same algorithm on weaker hardware can run for hundreds of milliseconds or even a few seconds. Such delays are very noticeable if your application handles user requests over the network.

- Space complexity

In addition to time, you need to consider how much memory is spent on the work of an algorithm. It is important when you're working with limited memory resources.
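The difference between O(n) and O(log n) is easy to see by counting steps instead of measuring time. This Python sketch counts the comparisons that linear search and binary search make in their worst case over the sorted range 0..n-1 (the helper names are made up for illustration). For a million elements, linear search needs a million steps, while binary search needs at most 20.

```python
def linear_search_steps(n: int, target: int) -> int:
    """Comparisons made by linear search for target in the sorted range 0..n-1."""
    steps = 0
    for value in range(n):
        steps += 1
        if value == target:
            break
    return steps

def binary_search_steps(n: int, target: int) -> int:
    """Comparisons made by binary search for target in the sorted range 0..n-1."""
    low, high, steps = 0, n - 1, 0
    while low <= high:
        steps += 1
        mid = (low + high) // 2  # in range(n) the element at index mid equals mid
        if mid == target:
            break
        if mid < target:
            low = mid + 1
        else:
            high = mid - 1
    return steps

for n in (1_000, 1_000_000):
    worst = n - 1  # the last element is the worst case for linear search
    print(n, linear_search_steps(n, worst), binary_search_steps(n, worst))
```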


Data storage formats

Different file formats can be used to store and transfer data over the network. Text files are human-readable, so they are used, for example, for configuration files. But transferring data in text formats over the network is not always rational, because text usually takes up more space than the equivalent binary encoding.
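A quick way to see the size difference: encode the same list of numbers as JSON text and as packed binary integers. This Python sketch does not use MessagePack, BSON, or ProtoBuf (they need third-party libraries); as a simplifying assumption it uses the standard `struct` module with a fixed layout of 4-byte little-endian integers, and the "sensor readings" are made-up data.

```python
import json
import struct

readings = list(range(1000, 2000))  # 1000 hypothetical sensor readings

as_json = json.dumps(readings).encode("utf-8")            # text: "[1000, 1001, ...]"
as_binary = struct.pack(f"<{len(readings)}i", *readings)  # 4-byte little-endian integers

print(len(as_json), len(as_binary))  # 6000 4000

# The binary form is only readable if you know its layout: decoding needs the same format string.
assert list(struct.unpack(f"<{len(readings)}i", as_binary)) == readings
```

The trade-off is visible in the last line: the text version is self-describing and human-readable, while the binary version is smaller but meaningless without an agreed schema.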


Text formats

- JSON (JavaScript Object Notation)

Represents an object in which data is stored as key-value pairs.

- XML (eXtensible Markup Language)

The format is similar to HTML: the data is wrapped in opening and closing tags.

- YAML (YAML Ain't Markup Language, originally Yet Another Markup Language)

A minimalist format: instead of opening and closing tags, it uses indentation to express structure. Easy to read and edit.

- TOML (Tom's Obvious Minimal Language)

A minimal configuration file format that's easy to read due to obvious semantics. TOML is designed to map unambiguously to a hash table. TOML should be easy to parse into data structures in a wide variety of languages.


Binary formats

- MessagePack

Binary analog of JSON. Allows you to pack data 15-20% more efficiently.

- BSON (Binary JavaScript Object Notation)

A superset of JSON that additionally supports regular expressions, binary data, and dates.

- ProtoBuf (Protocol Buffers)

Binary alternative to XML text format. Simpler, more compact and faster.


Image formats

- JPEG (Joint Photographic Experts Group)

It is best suited for photographs and complex images with a wide range of colors. JPEG images can achieve high compression ratios while maintaining good image quality, but repeated editing and saving can result in loss of image fidelity.

- PNG (Portable Network Graphics)

It is a lossless compression format that supports transparency. It is commonly used for images with sharp edges, logos, icons, and images that require transparency. PNG images can have a higher file size compared to JPEG, but they retain excellent quality without degradation during repeated saves.

- GIF (Graphics Interchange Format)

Used for simple animations and low-resolution images with limited colors. It supports transparency and can be animated by displaying a sequence of frames.

- SVG (Scalable Vector Graphics)

XML-based vector image format defined by mathematical equations rather than pixels. SVG images can be scaled to any size without losing quality and are well-suited for logos, icons, and graphical elements.

- WebP

Modern image format developed by Google. It supports both lossy and lossless compression, providing good image quality with smaller file sizes compared to JPEG and PNG. WebP images are optimized for web use and can include transparency and animation.


Video formats

- MP4 (MPEG-4 Part 14)

Widely used video format that supports high-quality video compression, making it suitable for streaming and storing videos. MP4 files can contain both video and audio.

- AVI (Audio Video Interleave)

A multimedia container format developed by Microsoft. It can store audio and video data in a single file, allowing for synchronized playback. However, AVI files tend to be larger than files in more modern formats.

- MOV (QuickTime Movie)

Is a video format developed by Apple for use with their QuickTime media player. It is widely used with Mac and iOS devices. MOV files can contain both video and audio, and they offer good compression and quality, making them suitable for editing and professional use.

- WebM

Best for videos embedded on your personal or business website. WebM files are lightweight, load quickly, and stream easily.


Audio formats

- MP3 (MPEG-1 Audio Layer 3)

The most popular audio format known for its high compression and small file sizes. It achieves this by removing some of the audio data that may be less perceptible to the human ear. Suitable for music storage, streaming, and sharing.

- WAV (Waveform Audio File Format)

Is an uncompressed audio format that stores audio data in a lossless manner, resulting in high-quality sound reproduction. WAV files are commonly used in professional audio production and editing due to their accuracy and fidelity. However, they tend to have larger file sizes compared to compressed formats.

- AAC (Advanced Audio Coding)

Is a widely used audio format known for its efficient compression and good sound quality. It offers better sound reproduction at lower bit rates compared to MP3. AAC files are commonly used for streaming music, online radio, and mobile devices, as they deliver good audio quality while conserving bandwidth and storage.


Text encodings

Computers work only with numbers, or more precisely, only with 0 and 1. It is already clear how to convert numbers from different number systems to binary. But you can't do that with text. That's why special tables called encodings were invented, in which text characters are assigned numeric equivalents.

- ASCII (American standard code for information interchange)

The simplest encoding, created specifically for the English (Latin) alphabet. It consists of 128 characters, so each one fits into 7 bits.

- Unicode

An international character table that, in addition to the English alphabet, contains the characters of almost all of the world's writing systems. It has room for more than a million different characters (code points), and not all of them have been assigned yet.

- UTF-8 (Unicode Transformation Format)

UTF-8 is a variable-length encoding that can represent any Unicode character using 1 to 4 bytes. Its first 128 characters match ASCII, so any ASCII text is also valid UTF-8.

- UTF-16

Its main difference from UTF-8 is that its structural unit is not one but two bytes. That is, in UTF-16 any Unicode character can be encoded by either two or four bytes.
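The difference between these encodings is easiest to see by counting bytes. This Python sketch encodes single characters and reports their size in ASCII, UTF-8, and UTF-16. The helper name `encoded_sizes` is made up for illustration; the `utf-16-le` codec is used so that Python does not prepend a byte order mark, and the characters are written as escapes (é, €, and the 😀 emoji) to keep the source plain ASCII.

```python
def encoded_sizes(text: str) -> dict:
    """Number of bytes needed to store text in different encodings."""
    sizes = {
        "utf-8": len(text.encode("utf-8")),
        "utf-16": len(text.encode("utf-16-le")),  # '-le' variant: no byte order mark added
    }
    try:
        sizes["ascii"] = len(text.encode("ascii"))
    except UnicodeEncodeError:
        sizes["ascii"] = None  # the character is outside ASCII's 128 characters
    return sizes

for char in ("A", "\u00e9", "\u20ac", "\U0001F600"):  # A, é, €, 😀
    print(f"U+{ord(char):04X}", encoded_sizes(char))
# U+0041 {'utf-8': 1, 'utf-16': 2, 'ascii': 1}
# U+00E9 {'utf-8': 2, 'utf-16': 2, 'ascii': None}
# U+20AC {'utf-8': 3, 'utf-16': 2, 'ascii': None}
# U+1F600 {'utf-8': 4, 'utf-16': 4, 'ascii': None}
```

The emoji lies outside the first 65,536 code points, so UTF-16 needs two 2-byte units (a surrogate pair) for it.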


Programming Language

At this stage you have to choose one programming language to study. There is plenty of information about various languages on the Internet (books, courses, thematic sites, etc.), so you should have no problem finding it.

Below is a list of languages that, in my personal opinion, are good for backend development (⚠️ this may differ from the opinions of others, including those more competent in this matter).

- Python

A very popular language with a wide range of applications. Easy to learn due to its simple syntax.

- JavaScript

No less popular, and practically the only language for full-fledged web development in the browser. Thanks to the Node.js platform, it has also been gaining popularity in backend development over the last few years.

- Go

A language created at Google specifically for high-load server development. Minimalist syntax, high performance, and a rich standard library.

- Kotlin

A modern alternative to Java that runs on the same JVM platform. Simpler and more concise syntax, better type safety, built-in tools for multi-threading. One of the best choices for Android development.

Find a good book or online tutorial in English at this repository. There is a large collection for different languages and frameworks.

Look for a special awesome repository - a resource that contains a huge number of useful links to materials for your language (libraries, cheat sheets, blogs, and other various resources).


Classification of programming languages

There are many programming languages. They are all created for a reason. Some languages may be very specific and used only for certain purposes. Also, different languages may use different approaches to writing programs. They may even run differently on a computer. In general, there are many different classifications, which would be useful to understand.

- Depending on language level

- Low level languages

As close to machine code as possible: complex to write, but they give the highest performance. As a rule, they provide access to all of the computer's resources.

- High-level languages

They have a fairly high level of abstraction, which makes them easy to write and easy to use. As a rule, they are safer because they do not provide access to all of the computer's resources.


- Depending on implementation

- Compilation

Allows you to convert the source code of a program to an executable file.

- Interpretation

The source code of a program is translated and immediately executed (interpreted) by a special interpreter program.

- Virtual machine

In this approach, the program is not compiled into machine code, but into machine-independent low-level code called bytecode. This bytecode is then executed by the virtual machine.


- Depending on the programming paradigm

- Imperative

Focuses on describing the steps to solve a problem through a sequence of statements or commands.

- Declarative

Focuses on describing what the program should do, rather than how it should do it. Examples of declarative languages include SQL and HTML.

- Functional

Based on the idea of treating computation as the evaluation of mathematical functions. It emphasizes immutability, avoiding side effects, and using higher-order functions. Examples of functional languages include Haskell, Lisp, and Clojure.

- Object-Oriented

Revolves around creating objects that contain both data and behavior, with the goal of modeling real-world concepts. Examples of object-oriented languages include Java, Python, and C++.

- Concurrent

Focused on handling multiple tasks or threads at the same time, and is used in systems that require high performance and responsiveness. Examples of concurrent languages include Go and Erlang.
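Many languages support several paradigms at once. As an illustration, this Python sketch solves the same task (the sum of the squares of the even numbers) first in an imperative style, spelling out each step and updating a variable, and then in a functional style, composing `filter`, `map`, and `functools.reduce` without mutating anything. The function names are made up for illustration.

```python
from functools import reduce

numbers = [1, 2, 3, 4, 5, 6]

def sum_of_even_squares_imperative(values):
    """Imperative style: describe each step and update a variable."""
    total = 0
    for value in values:
        if value % 2 == 0:
            total += value * value
    return total

def sum_of_even_squares_functional(values):
    """Functional style: compose functions; no variable is mutated."""
    evens = filter(lambda v: v % 2 == 0, values)
    squares = map(lambda v: v * v, evens)
    return reduce(lambda acc, v: acc + v, squares, 0)

print(sum_of_even_squares_imperative(numbers))  # 56
print(sum_of_even_squares_functional(numbers))  # 56
```

A declarative language such as SQL would go further and only state the result, e.g. `SELECT SUM(n * n) FROM numbers WHERE n % 2 = 0`, leaving the steps to the database.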


Language Basics

The basics are the fundamental ideas present in almost every language.

- Variables and constants

Names assigned to memory locations where the program stores data. The value of a constant cannot be changed once it is set.

- Data types

Define the type of data that can be stored in a variable. The main data types are integers, floating-point numbers, characters, strings, and booleans.

- Operators

Used to perform operations on variables or values. Common operators include arithmetic operators, comparison operators, logical operators, and assignment operators.

- Flow control

Loops and conditional statements (`if else`, `switch case`).

- Functions

Are blocks of code that can be called multiple times in a program. They allow for code reusability and modularization. Functions are an important concept for understanding the scope of variables.

- Data structures

Special containers in which data are stored according to certain rules. Main data structures are arrays, maps, trees, graphs.

- Standard library

This refers to the language's built-in features for manipulating data structures, working with the file system, network, cryptography, etc.

- Error handling

Used to handle unexpected events that can occur during program execution.

- Regular expressions

A powerful tool for working with strings. Be sure to familiarize yourself with it in your language, at least on a basic level.

- Modules

Writing the code of the whole program in one file is not at all convenient. It is much more readable to break it up into smaller modules and import them into the right places.

- Package Manager

Sooner or later, you will want to use third-party libraries; a package manager lets you install and update them.
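Several of these basics usually appear together even in a tiny program. The Python sketch below parses a hypothetical `key = value` config format and combines a constant, functions, flow control, a dictionary, a regular expression, and error handling. The format, the function names, and the default port 8080 are assumptions made up for illustration.

```python
import re

MAX_PORT = 65535  # a constant: Python has no const keyword, upper-case names mean "do not reassign"
SETTING = re.compile(r"^\s*(\w+)\s*=\s*(.*?)\s*$")  # regular expression for "key = value"

def parse_settings(text: str) -> dict:
    """Parse 'key = value' lines into a dictionary (hypothetical config format)."""
    settings = {}
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip() or line.lstrip().startswith("#"):
            continue  # skip blank lines and comments
        match = SETTING.match(line)
        if match is None:
            raise ValueError(f"line {number}: expected 'key = value', got {line!r}")
        key, value = match.groups()
        settings[key] = value
    return settings

def read_port(settings: dict, default: int = 8080) -> int:
    """Return the 'port' setting as an integer, validating its range."""
    if "port" not in settings:
        return default
    try:
        port = int(settings["port"])
    except ValueError as error:
        raise ValueError(f"port must be an integer, got {settings['port']!r}") from error
    if not 1 <= port <= MAX_PORT:
        raise ValueError(f"port must be between 1 and {MAX_PORT}")
    return port

config = parse_settings("# demo config\nhost = localhost\nport = 8000\n")
print(config)             # {'host': 'localhost', 'port': '8000'}
print(read_port(config))  # 8000
```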

After mastering the minimal base for writing the simplest programs, there is not much point in continuing to learn without having specific goals (without practice, everything will be forgotten). You need to think of/find something that you would like to create yourself (a game, a chatbot, a website, a mobile/desktop application, whatever). For inspiration, check out these repositories: Build your own x and Project based learning.

At this point, the most productive part of learning begins: You just look for all kinds of information to implement your project. Your best friends are Google, YouTube, and Stack Overflow.


🔗 References

- 📺 CS50 2022 – Harvard University's course about programming – YouTube

- 📺 Harvard CS50’s Web Programming with Python and JavaScript – YouTube

- 📄 Free Interactive Python Tutorial

- 📺 Harvard CS50’s Introduction to Programming with Python – YouTube

- 📺 Python Tutorial for Beginners – YouTube

- 📄 Python cheatsheet – Learn X in Y minutes

- 📄 Python cheatsheet – quickref.me

- 📄 Free Interactive JavaScript Tutorial

- 📺 JavaScript Programming - Full Course – YouTube

- 📄 The Modern JavaScript Tutorial

- 📄 JavaScript cheatsheet – Learn X in Y minutes

- 📄 JavaScript cheatsheet – quickref.me

- 📄 Go Tour – learn most important features of the language

- 📺 Learn Go Programming - Go Tutorial for Beginners – YouTube

- 📄 Go cheatsheet – Learn X in Y minutes

- 📄 Go cheatsheet – quickref.me

- 📄 Learn Go by Examples

- 📄 Get started with Kotlin

- 📺 Learn Kotlin Programming – Full Course for Beginners – YouTube

- 📄 Kotlin cheatsheet – Learn X in Y minutes

- 📄 Kotlin cheatsheet – devhints.io

- 📄 Learn Regex step by step, from zero to advanced

- 📄 Projectbook – The Great Big List of Software Project Ideas


Object-oriented programming

OOP is one of the most successful and convenient approaches for modeling real-world things. This approach combines several very important principles which allow writing modular, extensible, and loosely coupled code.

- Understanding Classes

A class can be thought of as a custom data type (a kind of template) that describes the structure of the objects created from it. Classes can contain properties (fields that store data of a particular data type) and methods (functions that have access to the properties and can read and modify them).

- Understanding objects

An object is a specific instance of a class. If, for example, a class describes a name property of type string, each object of that class will hold a specific value in that field, such as "Alex".
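A minimal Python sketch of a class and an object. The `User` class, its `name` property and its `greet`/`rename` methods are hypothetical names chosen only for illustration.

```python
class User:
    """A class: a template describing the structure of future objects."""

    def __init__(self, name: str) -> None:
        # Property: a field that stores data of a particular type
        self.name = name

    def greet(self) -> str:
        # Method: a function with access to the object's properties
        return f"Hello, {self.name}!"

    def rename(self, new_name: str) -> None:
        # Methods can also modify properties
        self.name = new_name


# An object: a specific instance of the class with its own field values
alex = User("Alex")
print(alex.greet())  # Hello, Alex!
alex.rename("Alexander")
print(alex.name)  # Alexander
```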

- Inheritance principle

The ability to create new classes that inherit the properties and methods of their parent classes. This allows you to reuse code and build a hierarchy of classes.
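A minimal Python sketch of inheritance, assuming hypothetical `Animal` and `Dog` classes: `Dog` gets the constructor and `describe()` from its parent without redefining them and adds a method of its own.

```python
class Animal:
    def __init__(self, name: str) -> None:
        self.name = name

    def describe(self) -> str:
        return f"{self.name} is an animal"


class Dog(Animal):
    # Dog inherits __init__ and describe() from Animal
    # and adds a method of its own
    def fetch(self) -> str:
        return f"{self.name} fetches the ball"


rex = Dog("Rex")
print(rex.describe())  # Rex is an animal (inherited)
print(rex.fetch())  # Rex fetches the ball (new)
print(isinstance(rex, Animal))  # True
```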

- Encapsulation principle

The ability to hide certain properties/methods from external access, exposing only a simplified interface for interacting with the object.
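A minimal Python sketch of encapsulation with a hypothetical `BankAccount` class. Note that Python relies on convention here: a leading underscore only marks a field as internal, while languages such as Kotlin (`private`) or Go (lowercase, unexported identifiers) enforce the restriction at compile time.

```python
class BankAccount:
    def __init__(self, owner: str) -> None:
        self.owner = owner
        # Leading underscore marks internal state by convention;
        # Python does not enforce it, it only signals intent
        self._balance = 0

    @property
    def balance(self) -> int:
        # Read-only access to the internal state
        return self._balance

    def deposit(self, amount: int) -> None:
        # The public interface validates input before changing state
        if amount <= 0:
            raise ValueError("amount must be positive")
        self._balance += amount


account = BankAccount("Alex")
account.deposit(100)
print(account.balance)  # 100

try:
    account.balance = 5  # the property has no setter
except AttributeError:
    print("balance cannot be assigned directly")
```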

- Polymorphism principle

The ability to implement the same method differently in descendant classes, so that code written for the parent class works with any of its descendants.
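A minimal Python sketch of polymorphism, assuming hypothetical `Shape`, `Square` and `Circle` classes: `total_area()` only knows about the parent type, yet each object runs its own version of `area()`.

```python
import math
from typing import Iterable


class Shape:
    def area(self) -> float:
        raise NotImplementedError


class Square(Shape):
    def __init__(self, side: float) -> None:
        self.side = side

    def area(self) -> float:
        return self.side ** 2


class Circle(Shape):
    def __init__(self, radius: float) -> None:
        self.radius = radius

    def area(self) -> float:
        return math.pi * self.radius ** 2


def total_area(shapes: Iterable[Shape]) -> float:
    # Works with any Shape: each object runs its own version of area()
    return sum(shape.area() for shape in shapes)


print(round(total_area([Square(2), Circle(1)]), 2))  # 7.14
```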

- Composition over inheritance

Inheritance can make a program complicated and confusing if you do not think carefully about how to build the hierarchy. That is why there is an alternative, more flexible approach called composition: instead of inheriting behavior, an object holds other objects and delegates work to them. The Go language, for example, has no classes or inheritance at all and relies heavily on composition.

- Dependency injection (DI)

Dependency injection is a popular OOP pattern that allows objects to receive their dependencies (other objects) from the outside rather than creating them internally. It promotes loose coupling between classes, making code more modular, maintainable, and easier to test.
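A minimal Python sketch that combines composition and dependency injection, using hypothetical `UserService` and `InMemoryStorage` classes: the service holds a storage object it delegates to (composition), and that object is passed in through the constructor instead of being created inside (dependency injection).

```python
class InMemoryStorage:
    """A dependency: stores key/value pairs in a dict."""

    def __init__(self) -> None:
        self.data = {}

    def save(self, key: str, value: str) -> None:
        self.data[key] = value


class UserService:
    """Composition: UserService has a storage instead of being one."""

    def __init__(self, storage) -> None:
        # Dependency injection: the storage comes from outside
        # rather than being created inside the class
        self.storage = storage

    def register(self, name: str) -> None:
        # Delegates the actual work to the composed object
        self.storage.save(name.lower(), name)


# The caller decides which storage implementation to use
storage = InMemoryStorage()
service = UserService(storage)
service.register("Alex")
print(storage.data)  # {'alex': 'Alex'}
```

Because the dependency comes from outside, a test can pass a fake storage object with the same `save()` method without touching `UserService`.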


🔗 References

- 📺 Intro to Object Oriented Programming - Crash Course – YouTube

- 📄 OOP Meaning – What is Object-Oriented Programming? – freeCodeCamp

- 📺 OOP in Python (CS50 lecture) – YouTube

- 📄 OOP tutorial from Python docs

- 📺 OOP in JavaScript: Made Super Simple – YouTube

- 📄 OOP in Go by examples

- 📺 Object Oriented Programming is not what I thought - Talk by Anjana Vakil – YouTube

- 📺 The Flaws of Inheritance (tradeoffs between Inheritance and Composition) – YouTube

- 📺 Dependency Injection, The Best Pattern – YouTube


Server development

- Understand sockets

A socket is an endpoint of a two-way communication link between two programs running over a network. You need to know how to create and connect sockets and how to send and receive data over them.
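A minimal sketch of a TCP echo exchange using Python's standard `socket` module; the server and client run in the same script (the server in a background thread) only to keep the example self-contained. A single `recv()` is enough for this tiny message on localhost, but real code has to loop, because TCP is a byte stream and data may arrive in several pieces.

```python
import socket
import threading

# Server socket: bind to localhost; port 0 lets the OS pick a free port
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)
host, port = server.getsockname()


def echo_once() -> None:
    # Accept one connection and send the received bytes back
    conn, _addr = server.accept()
    with conn:
        data = conn.recv(1024)
        conn.sendall(data)


thread = threading.Thread(target=echo_once)
thread.start()

# Client socket: connect, send, receive
with socket.create_connection((host, port)) as client:
    client.sendall(b"hello")
    reply = client.recv(1024)

thread.join()
server.close()
print(reply)  # b'hello'
```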

- Running local TCP, UDP and HTTP servers

These protocols are the most important ones, so you need to understand the intricacies of working with each of them.
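Unlike TCP, UDP needs no connection: there is no `listen()`/`accept()`/`connect()` step, and each datagram is sent to an address on its own. A minimal Python sketch with the standard `socket` module, running both ends on localhost:

```python
import socket

# Receiver: bind to localhost; port 0 lets the OS choose a free port
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(2.0)  # UDP gives no delivery guarantee, so never wait forever
address = receiver.getsockname()

# Sender: no handshake, the destination is given with every datagram
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
sender.sendto(b"ping", address)

data, sender_address = receiver.recvfrom(1024)
print(data)  # b'ping'

sender.close()
receiver.close()
```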

- Serving static files

You need to know how to serve HTML pages, images, PDF documents, music/video files, etc.
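A minimal sketch of serving static files with Python's built-in `http.server` module, assuming a temporary folder with a single hypothetical `index.html` page. The Python documentation does not recommend `http.server` for production; in practice this job is often left to a web server such as Nginx or to a framework.

```python
import functools
import http.server
import tempfile
import threading
import urllib.request
from pathlib import Path

# Hypothetical content: a temporary folder with one HTML page
root = tempfile.mkdtemp()
Path(root, "index.html").write_text("<h1>Hello</h1>")

# SimpleHTTPRequestHandler maps URL paths to files inside `directory`
handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=root)
server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), handler)
threading.Thread(target=server.serve_forever, daemon=True).start()

# Fetch the file over HTTP, as a browser would
url = f"http://127.0.0.1:{server.server_address[1]}/index.html"
with urllib.request.urlopen(url) as response:
    body = response.read().decode()

server.shutdown()
server.server_close()
print(body)  # <h1>Hello</h1>
```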

- Routing

Creating endpoints (URLs) that call the appropriate handler on the server when accessed.
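Frameworks such as Django, Express or Ktor provide routing out of the box. This sketch shows the underlying idea in plain Python: a table that maps a (method, path) pair to a handler function. The `route` decorator and `dispatch` function are hypothetical names, and real routers also support path parameters such as `/users/{id}`.

```python
from typing import Callable, Dict, Tuple

Response = Tuple[int, str]
Handler = Callable[[], Response]
routes: Dict[Tuple[str, str], Handler] = {}


def route(method: str, path: str):
    """Decorator that registers a handler for a (method, path) pair."""
    def register(handler: Handler) -> Handler:
        routes[(method, path)] = handler
        return handler
    return register


@route("GET", "/")
def index() -> Response:
    return 200, "Home page"


@route("GET", "/users")
def list_users() -> Response:
    return 200, "List of users"


def dispatch(method: str, path: str) -> Response:
    # Look up the handler for the endpoint; unknown URLs get 404
    handler = routes.get((method, path))
    if handler is None:
        return 404, "Not Found"
    return handler()


print(dispatch("GET", "/users"))  # (200, 'List of users')
print(dispatch("GET", "/missing"))  # (404, 'Not Found')
```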

- Processing requests

As a rule, an HTTP handler receives a special object that contains all the information about the user's request (headers, method, request body, query parameters, and so on).

- Processing responses

Sending an appropriate response to a received request (HTTP status code, headers, response body, etc.).
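A minimal sketch of reading a request and writing a response with Python's built-in `http.server.BaseHTTPRequestHandler`, which exposes the method (`self.command`), path, headers and body (`self.rfile`) and writes the response with `send_response()`, `send_header()` and `wfile`. The `/users` endpoint and the JSON fields are hypothetical.

```python
import http.server
import json
import threading
import urllib.request
from urllib.parse import parse_qs, urlparse


class Handler(http.server.BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        # Request: method, path, query parameters, headers and body
        url = urlparse(self.path)
        query = parse_qs(url.query)  # e.g. {'lang': ['en']}
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length) or b"{}")

        # Response: status code, headers, then the body
        payload = json.dumps({
            "method": self.command,
            "path": url.path,
            "lang": query.get("lang", [""])[0],
            "name": body.get("name"),
        }).encode()
        self.send_response(201)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


server = http.server.HTTPServer(("127.0.0.1", 0), Handler)
threading.Thread(target=server.serve_forever, daemon=True).start()

request = urllib.request.Request(
    f"http://127.0.0.1:{server.server_address[1]}/users?lang=en",
    data=json.dumps({"name": "Alex"}).encode(),
    headers={"Content-Type": "application/json"},
    method="POST",
)
with urllib.request.urlopen(request) as response:
    status = response.status
    result = json.loads(response.read())

server.shutdown()
server.server_close()
print(status, result)
```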

- Error handling

You should always be prepared for the possibility that something will go wrong: the user may send incorrect data, the database may fail to perform an operation, or an unexpected error may simply occur in the application. The server must not crash; instead, it should send a response with information about the error.
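A framework-free Python sketch of this idea, assuming handlers that return a `(status, body)` pair: a wrapper catches exceptions and converts them into error responses, using 400 for invalid client data and 500 for unexpected failures. `ValidationError`, `create_user` and `safe_call` are hypothetical names.

```python
import json
from typing import Callable, Tuple

Response = Tuple[int, str]


class ValidationError(Exception):
    """Raised when the client sent incorrect data."""


def create_user(data: dict) -> Response:
    if "name" not in data:
        raise ValidationError("name is required")
    return 201, json.dumps({"name": data["name"]})


def safe_call(handler: Callable[[dict], Response], data: dict) -> Response:
    # Turn exceptions into HTTP responses instead of crashing the server
    try:
        return handler(data)
    except ValidationError as exc:
        # Client error: the request itself is wrong
        return 400, json.dumps({"error": str(exc)})
    except Exception:
        # Unexpected failure: log it in real code, hide details from the client
        return 500, json.dumps({"error": "internal server error"})


print(safe_call(create_user, {"name": "Alex"}))  # (201, '{"name": "Alex"}')
print(safe_call(create_user, {}))  # (400, '{"error": "name is required"}')
```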

- Middleware

An intermediate component between the server and the application logic. It is used for handling authentication, validation, data caching, request logging, and so on.
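A minimal Python sketch of middleware as functions that wrap a handler and return a new handler, so they can run code before and after it or stop the request early. The request format (a plain dict), the token check and all function names are hypothetical; Express middleware (`(req, res, next)`) and Python WSGI middleware follow the same wrapping idea.

```python
import time
from typing import Callable, Dict, List, Tuple

Request = Dict[str, str]
Response = Tuple[int, str]
Handler = Callable[[Request], Response]

log: List[str] = []


def logging_middleware(next_handler: Handler) -> Handler:
    # Runs code before and after the wrapped handler
    def wrapper(request: Request) -> Response:
        started = time.perf_counter()
        response = next_handler(request)
        elapsed_ms = (time.perf_counter() - started) * 1000
        log.append(f"{request['path']} -> {response[0]} ({elapsed_ms:.1f} ms)")
        return response
    return wrapper


def auth_middleware(next_handler: Handler) -> Handler:
    # Stops the request early if the (hypothetical) token is wrong
    def wrapper(request: Request) -> Response:
        if request.get("token") != "secret":
            return 401, "Unauthorized"
        return next_handler(request)
    return wrapper


def profile(request: Request) -> Response:
    return 200, "Your profile"


# Order matters: logging wraps auth, which wraps the handler
app = logging_middleware(auth_middleware(profile))

print(app({"path": "/profile", "token": "secret"}))  # (200, 'Your profile')
print(app({"path": "/profile"}))  # (401, 'Unauthorized')
print(log)
```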

- Sending requests

Often, within one application, you will need to access another application over the network. That's why it's important to be able to send HTTP requests using the built-in features of the language.
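A minimal sketch of sending an HTTP request with Python's built-in `urllib.request`. To keep it runnable offline, a tiny local server stands in for the "other application"; its `/health` endpoint and JSON payload are hypothetical.

```python
import http.server
import json
import threading
import urllib.error
import urllib.request


class OtherService(http.server.BaseHTTPRequestHandler):
    """Stand-in for another application we want to call."""

    def do_GET(self) -> None:
        payload = json.dumps({"status": "ok"}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, *args) -> None:
        pass  # keep the example's output quiet


server = http.server.HTTPServer(("127.0.0.1", 0), OtherService)
threading.Thread(target=server.serve_forever, daemon=True).start()
base_url = f"http://127.0.0.1:{server.server_address[1]}"


def get_json(url: str, timeout: float = 5.0) -> dict:
    # Always set a timeout: a slow remote service must not hang our app
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return json.loads(response.read())
    except urllib.error.URLError as exc:
        # Covers connection failures and, via HTTPError, 4xx/5xx responses
        return {"error": str(exc)}


result = get_json(f"{base_url}/health")
print(result)  # {'status': 'ok'}
server.shutdown()
server.server_close()
```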

- Template processor

A special module that uses a convenient syntax to generate HTML from dynamic data.
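Template engines such as Jinja2 (used by Flask) or Django's template language support loops, conditions and automatic escaping. As a much simpler stand-in, this sketch uses the standard library's `string.Template` and does the loop and escaping in Python; the `render` function and the page layout are hypothetical.

```python
from html import escape
from string import Template

# A tiny template: $-placeholders are filled with dynamic data
page = Template("<h1>Hello, $name!</h1>\n<ul>\n$items</ul>")


def render(name: str, items: list) -> str:
    # Escape user data so it cannot inject HTML or JavaScript
    rows = "".join(f"  <li>{escape(item)}</li>\n" for item in items)
    return page.substitute(name=escape(name), items=rows)


result_html = render("Alex", ["Go", "<script>"])
print(result_html)
```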


🔗 References

- 📄 Learn Django – Python-based web framework

- 📺 Python Django 7 Hour Course – YouTube

- 📄 A curated list of awesome things related to Django – GitHub

- 📺 Python Web Scraping for Beginners – YouTube

- 📺 Build servers in pure Node.js – YouTube

- 📄 Node.js HTTP Server Examples – GitHub

- 📄 Learn Express – web framework for Node.js

- 📺 Express.js 2022 Course – YouTube

- 📄 A curated list of awesome Express.js resources – GitHub

- 📄 How to build servers in Go

- 📺 Golang server development course – YouTube

- 📄 Web services in Go – GitBook

- 📄 List of libraries for working with network in Go – GitHub

- 📄 Learn Ktor – web framework for Kotlin

- 📺 Ktor - REST API Tutorials – YouTube

- 📄 Kotlin for server side


Asynchronous programming

Asynchronous programming is an efficient way to write programs that perform a large number of I/O (input/output) operations. Such operations include reading files, querying a database or a remote server, reading user input, and so on. In these cases, the program spends a lot of time waiting for external resources to respond, and asynchronous programming allows it to perform other tasks while waiting for the response.

- Callback

This is a function that is passed as an argument to another function and is intended to be called by that function at a later time. In asynchronous code, this lets the caller start a time-consuming task and continue executing instead of waiting for it; once the task is complete, the callback is invoked with any necessary data (such as the result) passed as arguments.
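A minimal Python sketch of a callback, assuming a hypothetical `download()` function that simulates a slow task in a background thread and calls `on_done` with the result. In JavaScript, callbacks are typically scheduled on the event loop instead of running in a separate thread, but the calling pattern is the same.

```python
import threading
import time
from typing import Callable, List

results: List[str] = []


def download(url: str, on_done: Callable[[str], None]) -> threading.Thread:
    """Starts a (simulated) slow task and returns immediately."""

    def work() -> None:
        time.sleep(0.1)  # stand-in for a slow network call
        on_done(f"content of {url}")  # invoke the callback with the result

    thread = threading.Thread(target=work)
    thread.start()
    return thread


def handle_result(data: str) -> None:
    # The callback: called later, when the task is complete
    results.append(data)


task = download("https://example.com", handle_result)
print("download started, the caller keeps running")
task.join()  # only so the example waits before printing
print(results)  # ['content of https://example.com']
```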

- Event-driven architecture (EDA)

A popular approach to writing asynchronous programs. The logic of the program is to wait for certain events and process them as they arrive. This can be useful in web applications that need to handle a large number of concurrent connections, such as chat applications or real-time games.
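A minimal sketch of the event-driven idea in Python: handlers subscribe to named events and run when those events are emitted, similar in spirit to Node.js `EventEmitter` (`on`/`emit`). The `EventBus` class and the chat events are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class EventBus:
    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Callable[..., Any]]] = defaultdict(list)

    def on(self, event: str, handler: Callable[..., Any]) -> None:
        # Subscribe a handler to a named event
        self._handlers[event].append(handler)

    def emit(self, event: str, *args: Any) -> None:
        # Call every handler subscribed to the event, in order
        for handler in self._handlers[event]:
            handler(*args)


chat_log: List[str] = []
bus = EventBus()
bus.on("join", lambda user: chat_log.append(f"{user} joined"))
bus.on("message", lambda user, text: chat_log.append(f"{user}: {text}"))

# Events arrive over time (for example from network connections)
bus.emit("join", "Alex")
bus.emit("message", "Alex", "Hi!")
print(chat_log)  # ['Alex joined', 'Alex: Hi!']
```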

- Asynchronous in particular languages

- In Python, asynchronous programming can be done using the asyncio module, which provides an event loop and coroutine-based API for concurrency. There are also other third-party libraries like Twisted and Tornado that provide asynchronous capabilities.

- In JavaScript, asynchronous programming is commonly achieved through the use of promises, callbacks, async/await syntax and the event loop.

- Go has built-in support for concurrency through goroutines and channels, which allow developers to write asynchronous code that can communicate and synchronize across multiple threads.

- Kotlin provides coroutines, which are similar to JavaScript's async/await and Python's asyncio and can be used with a variety of platforms and frameworks.
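A minimal `asyncio` sketch in Python, assuming three hypothetical requests simulated with `asyncio.sleep()`: `asyncio.gather()` runs them concurrently, so the total time is close to the longest delay rather than the sum of all delays.

```python
import asyncio
import time


async def fetch(name: str, delay: float) -> str:
    # asyncio.sleep stands in for waiting on I/O (network, disk, database)
    await asyncio.sleep(delay)
    return f"{name} done"


async def main() -> list:
    # Start three "requests" concurrently; total time is about the longest
    # delay (0.3 s), not the sum (0.6 s)
    return await asyncio.gather(
        fetch("users", 0.1), fetch("orders", 0.2), fetch("stats", 0.3)
    )


started = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - started
print(results)  # ['users done', 'orders done', 'stats done']
print(f"{elapsed:.1f} s")
```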


🔗 References

- 📺 Synchronous vs. Asynchronous Applications (Explained by Example) – YouTube

- 📄 Async IO in Python: A Complete Walkthrough

- 📄 Asynchronous Programming in JavaScript – Guide for Beginners – freeCodeCamp

- 📄 A roadmap for asynchronous programming in JavaScript

- 📺 Master Go Programming With These Concurrency Patterns – YouTube

- 📺 Kotlin coroutines: new ways to do asynchronous programming – YouTube


Multitasking

Modern computers have processors with several physical and virtual cores, and on server machines their number can reach hundreds. To achieve maximum application performance, all of these resources should be used to the fullest. That is why modern server development cannot do without multitasking and parallelism.

- How it works

Multitasking refers to the concurrent execution of multiple threads of control within a single program. A thread is a lightweight process that runs within the context of a process, and has its own stack, program counter, and register set. Multiple threads can share the resources of a single process, such as memory, files, and I/O devices. Each thread executes independently and can perform a different task or part of a task.
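A minimal Python sketch with the standard `threading` module: each thread keeps its own local variables (on its own stack), but all of them append to the same list in the process memory. Appending to a list happens to be safe in CPython; in general, shared data needs the synchronization primitives described in this section. Also note that in standard CPython builds the global interpreter lock (GIL) prevents threads from running Python code on several cores at once, which is why `multiprocessing` is used for CPU-bound work.

```python
import threading

shared = []  # lives in the process memory, visible to all threads


def worker(thread_id: int) -> None:
    # Each thread has its own stack (local variables like `square`)...
    square = thread_id * thread_id
    # ...but writes into the same shared list
    shared.append((thread_id, square))


threads = [threading.Thread(target=worker, args=(i,)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for all threads to finish

# Threads may finish in any order, so sort before printing
print(sorted(shared))  # [(0, 0), (1, 1), (2, 4), (3, 9)]
```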

- Multitasking types

- Cooperative multitasking: each program or task voluntarily gives up control of the CPU to allow other programs or tasks to run. Each program or task is responsible for yielding control to other programs or tasks at appropriate times. This approach requires programs or tasks to be well-behaved and to avoid monopolizing the CPU. If a program or task does not yield control voluntarily, it can cause the entire system to become unresponsive. Cooperative multitasking was commonly used in early operating systems and is still used in some embedded systems or real-time operating systems.

- Preemptive multitasking: the operating system forcibly interrupts programs or tasks at regular intervals to allow other programs or tasks to run. The operating system is responsible for managing the CPU and ensuring that each program or task gets a fair share of CPU time. This approach is more robust than cooperative multitasking and can handle poorly behaved programs or tasks that do not yield control. Preemptive multitasking is used in modern operating systems, such as Windows, macOS, Linux, and Android.
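Cooperative multitasking can be sketched in Python with generators: each hypothetical task runs until it reaches `yield`, which hands control back to a simple round-robin scheduler. A task that never yields would block all the others, which is exactly the weakness described above. Python's `asyncio` coroutines are cooperative in the same way: they can only be switched at an `await`.

```python
from collections import deque
from typing import Deque, Generator, List

Task = Generator[None, None, None]
trace: List[str] = []


def task(name: str, steps: int) -> Task:
    for i in range(steps):
        trace.append(f"{name}{i}")
        yield  # voluntarily give control back to the scheduler


def run(tasks: List[Task]) -> None:
    # Round-robin scheduler: it can only switch tasks at a `yield`
    queue: Deque[Task] = deque(tasks)
    while queue:
        current = queue.popleft()
        try:
            next(current)
            queue.append(current)  # not finished: back of the queue
        except StopIteration:
            pass  # task finished, drop it


run([task("A", 3), task("B", 2)])
print(trace)  # ['A0', 'B0', 'A1', 'B1', 'A2']
```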

- Main problems and difficulties

- Race conditions: When multiple threads access and modify shared data concurrently, race conditions can occur, resulting in unpredictable behavior or incorrect results.

- Deadlocks: Occur when two or more threads are blocked forever, each waiting for a resource held by another, so none of them can proceed.

- Debugging: Multitasking programs can be difficult to debug due to their complexity and non-deterministic behavior. You need to use advanced debugging tools and techniques, such as thread dumps, profilers, and logging, to diagnose and fix issues.

- Synchronizing primitives

Needed to safely exchange data between different threads.

- Semaphore: essentially a counter that keeps track of the number of available resources and blocks threads or processes that try to acquire more resources than are available.

- Mutex: (short for mutual exclusion) allows only one thread or process to access the resource at a time, ensuring that there are no conflicts or race conditions.

- Atomic operations: operations that are executed as a single, indivisible unit, without the possibility of interruption or interference by other threads or processes.

- Condition variables: allow threads to wait for a specific condition to become true before continuing execution. They are often used together with a mutex to avoid busy waiting and improve efficiency.
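A Python sketch of three of these primitives from the standard `threading` module: a `Lock` (mutex) protecting a counter, a `BoundedSemaphore` limiting access to a resource to two threads, and a `Condition` that lets a consumer sleep until a producer adds data. The counters, the job item and the thread counts are hypothetical. Python's standard library has no dedicated atomic integer type, so atomics are not shown; Go provides them in `sync/atomic`, and Kotlin on the JVM can use `java.util.concurrent.atomic.AtomicInteger`.

```python
import threading
import time

# Mutex: protects a shared counter from a race condition
counter = 0
counter_lock = threading.Lock()


def increment(times: int) -> None:
    global counter
    for _ in range(times):
        with counter_lock:  # only one thread at a time in this block
            counter += 1  # read-modify-write is not atomic without the lock


# Semaphore: at most 2 threads may use the "resource" at once
pool = threading.BoundedSemaphore(2)
active = 0
max_active = 0
active_lock = threading.Lock()


def use_resource() -> None:
    global active, max_active
    with pool:
        with active_lock:
            active += 1
            max_active = max(max_active, active)
        time.sleep(0.05)  # pretend to work with the resource
        with active_lock:
            active -= 1


# Condition variable: a consumer waits until data is ready
items = []
ready = threading.Condition()


def consumer(out: list) -> None:
    with ready:
        ready.wait_for(lambda: items)  # sleeps instead of busy waiting
        out.append(items.pop())


def producer() -> None:
    with ready:
        items.append("job")
        ready.notify()  # wake up one waiting thread


def run_all(threads: list) -> None:
    for t in threads:
        t.start()
    for t in threads:
        t.join()


run_all([threading.Thread(target=increment, args=(10_000,)) for _ in range(4)])
run_all([threading.Thread(target=use_resource) for _ in range(5)])
received = []
run_all([threading.Thread(target=consumer, args=(received,)),
         threading.Thread(target=producer)])

print(counter)  # 40000
print(max_active)  # never more than 2
print(received)  # ['job']
```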

- Working with particular language

- In Python, see the threading and multiprocessing modules.

- In Node.js you can work with worker threads, cluster module and shared array buffers.

- Go has goroutines and channels built into the language.

- Kotlin provides coroutines.


🔗 References

- 📺 Multithreading Code - Computerphile – YouTube

- 📺 Threading vs. multiprocessing in Python – YouTube

- 📺 When is Node.js Single-Threaded and when is it Multi-Threaded? – YouTube

- 📺 How to use Multithreading with "worker threads" in Node.js? – YouTube

- 📺 Concurrency in Go – YouTube

- 📺 Kotlin coroutines – YouTube

- 📄 Multithreading in practice – GitHub


Advanced Topics

- Garbage collector