- ✅ Linting fixes and groups.

- ✅ Add `File.from that:Text` and use `File` conversions instead of taking both `File` and `Text` and calling `File.new`.

- ✅ Align the Unix epoch with the UTC timezone and add conversion from a long value to `Date_Time` using it.

- ❌ Add a simple first logging API allowing log messages to be written from Enso.

- ✅ Fix a minor style issue where a test type had an empty constructor.

- ❌ Added a `long`-based array builder.

- Added `File_By_Line` to read a file line by line.

- Added a "fast" JSON parser based on Jackson.

- ✅ Altered range `to_vector` to be a proxy Vector.

- ✅ Added `at` and `get` to `Database.Column`.

- ✅ Added `get` to `Table.Column`.

- ✅ Added the ability to expand `Vector`, `Array`, `Range` and `Date_Range` to columns.

- ✅ Altered `expand_to_column` so that the default column name is the same as the input column's (i.e. no `Value` suffix).

- ✅ Added the ability to expand `Map`, `JS_Object` and `Jackson_Object` to rows, producing two columns (including an extra key column).

- ✅ Fixed a bug where an integer index couldn't be used to expand to rows.
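The epoch alignment above can be illustrated with `java.time`. A `long` counting milliseconds since 1970-01-01T00:00:00Z maps to an `Instant`, which is then viewed in the UTC zone. This is only a sketch and not the Enso `Date_Time` implementation. Two things here are assumptions: that the long counts milliseconds rather than seconds, and the class name `EpochConversion`, which is hypothetical.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.ZonedDateTime;

/** Hypothetical helper: epoch millis <-> date-time in the UTC zone. */
public final class EpochConversion {
    private EpochConversion() {}

    /** Assumes the long counts milliseconds since the Unix epoch. */
    public static ZonedDateTime fromEpochMillis(long millis) {
        // An Instant is anchored at UTC, so viewing it in ZoneOffset.UTC
        // keeps the epoch aligned with the UTC time zone.
        return Instant.ofEpochMilli(millis).atZone(ZoneOffset.UTC);
    }

    public static long toEpochMillis(ZonedDateTime dateTime) {
        return dateTime.toInstant().toEpochMilli();
    }

    public static void main(String[] args) {
        System.out.println(fromEpochMillis(0L));          // 1970-01-01T00:00Z
        System.out.println(fromEpochMillis(86_400_000L)); // 1970-01-02T00:00Z (one day later)
    }
}
```

The conversion round-trips, so `toEpochMillis(fromEpochMillis(x)) == x` for any millisecond value in range.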

The uniqueness check of `UpsertVisualizationJob` only involved the expression ID. Apparently, the GUI now sends multiple visualizations for the same expression and expects all of them to exist. Since we previously cancelled duplicate jobs, this was problematic.

This change makes the uniqueness check also take the visualization ID into account. It also fixes a few log statements that were not passing arguments properly.
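The new uniqueness rule can be illustrated with a short Java sketch. Pending jobs are keyed by the pair of expression ID and visualization ID, so two visualizations attached to the same expression no longer displace each other. The names `VisualizationJobs` and `JobKey` are hypothetical, and jobs are plain strings for brevity. The runtime's actual job type and cancellation logic are not shown.

```java
import java.util.Objects;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

/** Hypothetical registry of pending "upsert visualization" jobs. */
public final class VisualizationJobs {

    /** Uniqueness key: both IDs must match for two jobs to be duplicates. */
    public record JobKey(UUID expressionId, UUID visualizationId) {
        public JobKey {
            Objects.requireNonNull(expressionId);
            Objects.requireNonNull(visualizationId);
        }
    }

    private final ConcurrentMap<JobKey, String> pending = new ConcurrentHashMap<>();

    /**
     * Registers a job and returns the job it replaced (a true duplicate that
     * the caller would cancel), or null if there was none.
     */
    public String upsert(UUID expressionId, UUID visualizationId, String job) {
        return pending.put(new JobKey(expressionId, visualizationId), job);
    }

    public int size() {
        return pending.size();
    }
}
```

With the old key (expression ID only), the second visualization for an expression would have replaced and cancelled the first one.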

Closes #8801

# Important Notes

I have not noticed any more problems with loading visualizations so the issue appears to be resolved with this change.

Added a unit test case that would previously fail due to cancellation of a job that upserts visualization.

This is a quick fix for a long-standing problem with `org.enso.interpreter.service.error.FailedToApplyEditsException`, which would prevent the backend from processing any more changes, rendering the GUI (and backend) virtually useless.

Edits are submitted for (background) processing in the order they are handled. However, the execution order of such tasks is not guaranteed. Most of the time, edits are processed in the same order as their requests, but when they are not, files quickly get out of sync.

Related to #8770.

# Important Notes

I'm not a fan of this change because it essentially blocks all open/file requests until all edits are processed, even though we already have logic to deal with that appropriately. Moreover, those tasks can and should be processed independently. Since a single-thread executor was already present to ensure correct synchronization of open/file/push commands, we are simply adding edit commands to the list of commands it handles.

Ideally, we want a specialized executor that executes tasks within the same group sequentially while different groups can execute in parallel, thus ensuring sufficient throughput. That will take much longer and will require a significant rewrite of the command execution.
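The group-sequential executor described above could look roughly like the following Java sketch. It is an illustration under simplifying assumptions: tasks are keyed by an arbitrary group key such as a file path, and the per-key chain is never pruned. The class name `KeyedSequentialExecutor` is hypothetical and not part of the Enso codebase.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executor;

/**
 * Hypothetical executor: tasks sharing a key run one after another in
 * submission order; tasks with different keys may run in parallel.
 */
public final class KeyedSequentialExecutor<K> {
    private final Executor backing;
    // Last task submitted for each key; new tasks are chained after it.
    private final Map<K, CompletableFuture<Void>> tails = new ConcurrentHashMap<>();

    public KeyedSequentialExecutor(Executor backing) {
        this.backing = backing;
    }

    public CompletableFuture<Void> submit(K key, Runnable task) {
        // compute() is atomic per key, so each new task is always chained
        // after the previously submitted task for the same key.
        return tails.compute(key, (k, tail) -> {
            CompletableFuture<Void> previous =
                tail == null ? CompletableFuture.completedFuture(null) : tail;
            // handle() swallows a failure of the previous task, so one failed
            // edit does not block every later edit in the same group.
            return previous.handle((ok, err) -> null).thenRunAsync(task, backing);
        });
    }
}
```

Chaining on `CompletableFuture` keeps same-key tasks ordered without dedicating a thread to each group. A production version would also need to remove completed tails from the map.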

Added tests that would previously fail due to non-deterministic execution.

Since #8685, the JPMS plugin emits the following error (warning) message when building `runtime-test-instruments`:

```
[error] Returned (10): Vector(/home/pavel/.cache/coursier/v1/https/repo1.maven.org/maven2/org/graalvm/sdk/nativeimage/23.1.0/nativeimage-23.1.0.jar, /home/pavel/.cache/coursier/v1/https/repo1.maven.org/maven2/org/graalvm/sdk/word/23.1.0/word-23.1.0.jar, /home/pavel/.cache/coursier/v1/https/repo1.maven.org/maven2/org/graalvm/sdk/jniutils/23.1.0/jniutils-23.1.0.jar, /home/pavel/.cache/coursier/v1/https/repo1.maven.org/maven2/org/graalvm/sdk/collections/23.1.0/collections-23.1.0.jar, /home/pavel/.cache/coursier/v1/https/repo1.maven.org/maven2/org/graalvm/polyglot/polyglot/23.1.0/polyglot-23.1.0.jar, /home/pavel/.cache/coursier/v1/https/repo1.maven.org/maven2/org/graalvm/truffle/truffle-api/23.1.0/truffle-api-23.1.0.jar, /home/pavel/.cache/coursier/v1/https/repo1.maven.org/maven2/org/graalvm/truffle/truffle-runtime/23.1.0/truffle-runtime-23.1.0.jar, /home/pavel/.cache/coursier/v1/https/repo1.maven.org/maven2/org/graalvm/truffle/truffle-compiler/23.1.0/truffle-compiler-23.1.0.jar, /home/pavel/.cache/coursier/v1/https/repo1.maven.org/maven2/org/graalvm/sdk/polyglot-tck/23.1.0/polyglot-tck-23.1.0.jar, /home/pavel/.cache/coursier/v1/https/repo1.maven.org/maven2/org/netbeans/api/org-openide-util-lookup/RELEASE180/org-openide-util-lookup-RELEASE180.jar)
[error] Expected: (13): List(org.graalvm.sdk:nativeimage:23.1.0, org.graalvm.sdk:word:23.1.0, org.graalvm.sdk:jniutils:23.1.0, org.graalvm.sdk:collections:23.1.0, org.graalvm.polyglot:polyglot:23.1.0, org.graalvm.truffle:truffle-api:23.1.0, org.graalvm.truffle:truffle-runtime:23.1.0, org.graalvm.truffle:truffle-compiler:23.1.0, org.graalvm.sdk:polyglot-tck:23.1.0, org.graalvm.truffle:truffle-tck:23.1.0, org.graalvm.truffle:truffle-tck-common:23.1.0, org.graalvm.truffle:truffle-tck-tests:23.1.0, org.netbeans.api:org-openide-util-lookup:RELEASE180)
```

This PR removes this error message by providing appropriate `libraryDependencies` to `runtime-test-instruments`.

Initial implementation of the Arrow language. Closes #7755.

Currently supported logical types are:

- Date (days and milliseconds)

- Int (8, 16, 32, 64)

One can currently:

- allocate a new fixed-length, nullable Arrow vector - `new[<name-of-the-type>]`

- cast an already existing fixed-length Arrow vector from a memory address - `cast[<name-of-the-type>]`
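The following Java sketch makes the "fixed-length, nullable vector" terminology above concrete. It follows the Arrow columnar format's fixed-width layout for 32-bit integers: a validity bitmap with one bit per slot (least-significant bit first, 1 meaning non-null) and a contiguous little-endian values buffer. The class `NullableInt32Vector` is hypothetical and heap-backed. It is not how the Enso Arrow language allocates or casts memory, and it ignores the buffer alignment and padding that the specification recommends.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical heap-backed sketch of Arrow's nullable Int32 layout. */
public final class NullableInt32Vector {
    private final int length;
    private final ByteBuffer validity; // 1 bit per slot, LSB-first
    private final ByteBuffer values;   // length * 4 bytes, little-endian

    /** Allocates `length` slots, all initially null (validity bits are 0). */
    public NullableInt32Vector(int length) {
        this.length = length;
        this.validity = ByteBuffer.allocate((length + 7) / 8);
        this.values = ByteBuffer.allocate(length * Integer.BYTES).order(ByteOrder.LITTLE_ENDIAN);
    }

    public void set(int index, int value) {
        checkIndex(index);
        values.putInt(index * Integer.BYTES, value);
        int byteIdx = index / 8;
        // Mark the slot as valid (non-null).
        validity.put(byteIdx, (byte) (validity.get(byteIdx) | (1 << (index % 8))));
    }

    public boolean isNull(int index) {
        checkIndex(index);
        return (validity.get(index / 8) & (1 << (index % 8))) == 0;
    }

    /** Returns the value at `index`, or null if the slot is null. */
    public Integer get(int index) {
        return isNull(index) ? null : values.getInt(index * Integer.BYTES);
    }

    public int length() {
        return length;
    }

    private void checkIndex(int index) {
        if (index < 0 || index >= length) throw new IndexOutOfBoundsException(index);
    }
}
```

Keeping nulls in a separate bitmap lets the values buffer stay dense and fixed-width. That is what makes it possible to reinterpret an existing memory region as such a vector.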


The change adds a convenient trait, `ReportLogsOnFailure`, that, when mixed into a test class, keeps logs in memory and only delegates them to the underlying appender on failure. For now, only forwarding to the console is supported, which is sufficient.

A corresponding entry in `application-test.conf` has to point to the new `memory` appender. The additional complexity in the implementation ensures that if someone forgets to mix in `ReportLogsOnFailure`, logs appear as before, i.e. they respect the log level.
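The "report logs only on failure" idea can be sketched in plain Java without the logback API. A memory buffer collects log lines while an opted-in test runs and replays them to a downstream sink (e.g. the console) only if the test fails. When buffering has not been enabled, lines are forwarded immediately, which mirrors the fallback described above. Level filtering is omitted for brevity. `MemoryLogBuffer` and its methods are hypothetical names, not the actual appender.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical in-memory buffer that replays logs only on test failure. */
public final class MemoryLogBuffer {
    private final Consumer<String> downstream;
    private final List<String> buffered = new ArrayList<>();
    private boolean buffering;

    public MemoryLogBuffer(Consumer<String> downstream) {
        this.downstream = downstream;
    }

    /** Called when a test that opted in (mixed in the trait) starts. */
    public synchronized void startBuffering() {
        buffered.clear();
        buffering = true;
    }

    /** Buffers while a test is running; otherwise forwards immediately. */
    public synchronized void append(String line) {
        if (buffering) {
            buffered.add(line);
        } else {
            downstream.accept(line);
        }
    }

    /** Called when the test finishes; forwards the buffered lines only on failure. */
    public synchronized void finish(boolean failed) {
        if (failed) {
            buffered.forEach(downstream);
        }
        buffered.clear();
        buffering = false;
    }
}
```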

As a bonus, fixed the arguments passed to ScalaTest in `build.sbt` so that the timings of individual tests are shown again.

Closes #8603.

# Important Notes

Before:

```
[info] VcsManagerTest:
[info] Initializing project
[ERROR] [2024-01-04 17:27:03,366] [org.enso.languageserver.search.SuggestionsHandler] Cannot read the package definition from [/tmp/3607843843826594318].
[info] - must create a repository (3 seconds, 538 milliseconds)
[info] - must fail to create a repository for an already existing project (141 milliseconds)
[info] Save project
[ERROR] [2024-01-04 17:27:08,346] [org.enso.languageserver.search.SuggestionsHandler] Cannot read the package definition from [/tmp/3607843843826594318].
[info] - must create a commit with a timestamp (198 milliseconds)
[ERROR] [2024-01-04 17:27:08,570] [org.enso.languageserver.search.SuggestionsHandler] Cannot read the package definition from [/tmp/3607843843826594318].
[info] - must create a commit with a name (148 milliseconds)
[ERROR] [2024-01-04 17:27:08,741] [org.enso.languageserver.search.SuggestionsHandler] Cannot read the package definition from [/tmp/3607843843826594318].
[info] - must force all pending saves (149 milliseconds)
[info] Status project
[ERROR] [2024-01-04 17:27:08,910] [org.enso.languageserver.search.SuggestionsHandler] Cannot read the package definition from [/tmp/3607843843826594318].
[info] - must report changed files since last commit (148 milliseconds)
[info] Restore project
[ERROR] [2024-01-04 17:27:09,076] [org.enso.languageserver.search.SuggestionsHandler] Cannot read the package definition from [/tmp/3607843843826594318].
[info] - must reset to the last state with committed changes (236 milliseconds)
[ERROR] [2024-01-04 17:27:09,328] [org.enso.languageserver.search.SuggestionsHandler] Cannot read the package definition from [/tmp/3607843843826594318].
[info] - must reset to a named save (pending)
[ERROR] [2024-01-04 17:27:09,520] [org.enso.languageserver.search.SuggestionsHandler] Cannot read the package definition from [/tmp/3607843843826594318].
[info] - must reset to a named save and notify about removed files *** FAILED *** (185 milliseconds)
[info] Right({
[info] "jsonrpc" : "2.0",
[info] "method" : "file/event",
[info] "params" : {
[info] "path" : {
[info] "rootId" : "cd84a4a3-fa50-4ead-8d80-04f6d0d124a3",
[info] "segments" : [
[info] "src",
[info] "Bar.enso"
[info] ]
[info] },
[info] "kind" : "Removed"
[info] }
[info] }) did not equal Right({
[info] "jsonrpc" : "1.0",
[info] "method" : "file/event",
[info] "params" : {
[info] "path" : {
[info] "rootId" : "cd84a4a3-fa50-4ead-8d80-04f6d0d124a3",
[info] "segments" : [
[info] "src",
[info] "Bar.enso"
[info] ]
[info] },
[info] "kind" : "Removed"
[info] }
[info] }) (VcsManagerTest.scala:1343)
[info] Analysis:
[info] Right(value: Json$JObject(value: object[jsonrpc -> "2.0",method -> "file/event",params -> {
[info] "path" : {
[info] "rootId" : "cd84a4a3-fa50-4ead-8d80-04f6d0d124a3",
[info] "segments" : [
[info] "src",
[info] "Bar.enso"
[info] ]
[info] },
[info] "kind" : "Removed"
[info] }] -> object[jsonrpc -> "1.0",method -> "file/event",params -> {
[info] "path" : {
[info] "rootId" : "cd84a4a3-fa50-4ead-8d80-04f6d0d124a3",
[info] "segments" : [
[info] "src",
[info] "Bar.enso"
[info] ]
[info] },
[info] "kind" : "Removed"
[info] }]))
[ERROR] [2024-01-04 17:27:09,734] [org.enso.languageserver.search.SuggestionsHandler] Cannot read the package definition from [/tmp/3607843843826594318].
[info] List project saves
[info] - must return all explicit commits (146 milliseconds)
[info] Run completed in 9 seconds, 270 milliseconds.
[info] Total number of tests run: 9
[info] Suites: completed 1, aborted 0
[info] Tests: succeeded 8, failed 1, canceled 0, ignored 0, pending 1
[info] *** 1 TEST FAILED ***
```

After:

```
[info] VcsManagerTest:
[info] Initializing project
[info] - must create a repository (3 seconds, 554 milliseconds)
[info] - must fail to create a repository for an already existing project (164 milliseconds)
[info] Save project
[info] - must create a commit with a timestamp (212 milliseconds)
[info] - must create a commit with a name (142 milliseconds)
[info] - must force all pending saves (185 milliseconds)
[info] Status project
[info] - must report changed files since last commit (142 milliseconds)
[info] Restore project
[info] - must reset to the last state with committed changes (202 milliseconds)
[info] - must reset to a named save (pending)
[ERROR] [2024-01-04 17:24:55,738] [org.enso.languageserver.search.SuggestionsHandler] Cannot read the package definition from [/tmp/8456553964637757156].
[info] - must reset to a named save and notify about removed files *** FAILED *** (186 milliseconds)
[info] Right({
[info] "jsonrpc" : "2.0",
[info] "method" : "file/event",
[info] "params" : {
[info] "path" : {
[info] "rootId" : "965ed5c8-1760-4284-91f2-1376406fde0d",
[info] "segments" : [
[info] "src",
[info] "Bar.enso"
[info] ]
[info] },
[info] "kind" : "Removed"
[info] }
[info] }) did not equal Right({
[info] "jsonrpc" : "1.0",
[info] "method" : "file/event",
[info] "params" : {
[info] "path" : {
[info] "rootId" : "965ed5c8-1760-4284-91f2-1376406fde0d",
[info] "segments" : [
[info] "src",
[info] "Bar.enso"
[info] ]
[info] },
[info] "kind" : "Removed"
[info] }
[info] }) (VcsManagerTest.scala:1343)
[info] Analysis:
[info] Right(value: Json$JObject(value: object[jsonrpc -> "2.0",method -> "file/event",params -> {
[info] "path" : {
[info] "rootId" : "965ed5c8-1760-4284-91f2-1376406fde0d",
[info] "segments" : [
[info] "src",
[info] "Bar.enso"
[info] ]
[info] },
[info] "kind" : "Removed"
[info] }] -> object[jsonrpc -> "1.0",method -> "file/event",params -> {
[info] "path" : {
[info] "rootId" : "965ed5c8-1760-4284-91f2-1376406fde0d",
[info] "segments" : [
[info] "src",
[info] "Bar.enso"
[info] ]
[info] },
[info] "kind" : "Removed"
[info] }]))
[info] List project saves
[info] - must return all explicit commits (131 milliseconds)
[info] Run completed in 9 seconds, 400 milliseconds.
[info] Total number of tests run: 9
[info] Suites: completed 1, aborted 0
[info] Tests: succeeded 8, failed 1, canceled 0, ignored 0, pending 1
[info] *** 1 TEST FAILED ***
```

I noticed that sources in `runtime/bench` are not formatted at all. It turns out that the `JavaFormatterPlugin` does not override the `javafmt` task for the `Benchmark` configuration. After some failed attempts, I simply redefined the `Benchmark/javafmt` task in the `runtime` project. After all, `runtime` is almost the only project where we have any Java benchmarks.

# Important Notes

`javafmtAll` now also formats sources in `runtime/bench/src/java`.

After #8467, the Engine benchmarks are broken and do not compile - https://github.com/enso-org/enso/actions/runs/7268987483/job/19805862815#logs

This PR fixes the benchmark build.

# Important Notes

Apart from fixing the build of `engine/bench`:

- Don't assemble any fat jars in `runtime/bench`.

- Use our `TestLogProvider` in the benchmarks instead of the NOOP provider, so that we can at least see warnings and errors in benchmarks.

Make sure that the correct test logging provider is loaded in `project-manager/Test`, so that only WARN and ERROR log messages are displayed. Also, make sure that the test log provider parses the correct configuration file by renaming all the `application.conf` files in the test resources to `application-test.conf`.

The problem was introduced in #8467.

- Following a [suggestion](https://github.com/enso-org/enso/pull/8497#discussion_r1429543815) from @JaroslavTulach, I have tried reimplementing the URL encoding using just the `URLEncode` builtin util. I will check that this does not complicate other follow-up improvements, but most likely everything should work, so we should be able to get rid of the unnecessary bloat.

- Closes #8354

- Extends `simple-httpbin` with a simple mock of the Cloud API (currently it checks the token and serves the `/users` endpoint).

- Renames `simple-httpbin` to `http-test-helper`.
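For comparison, this is how query parameters are URL-encoded with the JDK's `java.net.URLEncoder`. The sketch assumes the `URLEncode` builtin util relies on similar form-encoding rules. `QueryString` is a hypothetical helper, not Enso code. `URLEncoder` produces `application/x-www-form-urlencoded` output, so spaces become `+` rather than `%20`.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical helper building a query string with java.net.URLEncoder. */
public final class QueryString {
    private QueryString() {}

    /** Encodes each key and value as UTF-8 and joins the pairs with '&' in map iteration order. */
    public static String encode(Map<String, String> params) {
        return params.entrySet().stream()
            .map(e -> URLEncoder.encode(e.getKey(), StandardCharsets.UTF_8)
                + "=" + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8))
            .collect(Collectors.joining("&"));
    }
}
```

For example, a `LinkedHashMap` holding `q -> "a b"` and `x -> "&="` encodes to `q=a+b&x=%26%3D`.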

Follow-up to #8467.

Fixes the issue where the `runtime/test` command fails to compile without the fat jar classes:

```
sbt:enso> runtime/test
[warn] JPMSPlugin: Directory /home/dbushev/projects/luna/enso/engine/runtime-fat-jar/target/scala-2.13/classes does not exist.
[warn] JPMSPlugin: Directory /home/dbushev/projects/luna/enso/engine/runtime-fat-jar/target/scala-2.13/classes does not exist.
[info] compiling 106 Scala sources and 60 Java sources to /home/dbushev/projects/luna/enso/engine/runtime/target/scala-2.13/test-classes ...
[warn] Unexpected javac output: error: module not found: org.enso.runtime
[warn] 1 error.
[warn] frgaal exited with exit code 1
[error] (runtime / Test / compileIncremental) javac returned non-zero exit code
```

- Closes #8352

- ~~Proposed fix for #8493~~

- The temporary fix is deemed not viable. I will try to figure out a workaround and leave fixing #8493 to the engine team.

- Closes #8111 by making sure that all Excel workbooks are read using a backing file (which should be more memory-efficient).

- If the workbook is being opened from an input stream, that stream is materialized to a `Temporary_File`.

- Adds tests fetching Table formats from HTTP.

- Extends `simple-httpbin` with ability to serve files for our tests.

- Ensures that the `Infer` option on `Excel` format also works with streams, if content-type metadata is available (e.g. from HTTP headers).

- Implements a `Temporary_File` facility that can be used to create a temporary file that is deleted once all references to the `Temporary_File` instance are GCed.
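The `Temporary_File` behaviour described above can be sketched in Java with `java.lang.ref.Cleaner`: a stream is materialized to disk, and the file is deleted once the owning object becomes unreachable. `TempFile` and its methods are hypothetical names, not the Enso implementation. GC-triggered cleanup is best-effort and may happen late, or not at all before JVM exit, so the sketch also offers an explicit `close()`.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.lang.ref.Cleaner;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

/** Hypothetical temporary file deleted when its owner is GCed (or closed). */
public final class TempFile implements AutoCloseable {
    private static final Cleaner CLEANER = Cleaner.create();

    private final Path path;
    private final Cleaner.Cleanable cleanable;

    private TempFile(Path path) {
        this.path = path;
        // The cleanup action must not capture `this`, otherwise the
        // TempFile would never become unreachable.
        this.cleanable = CLEANER.register(this, new Deleter(path));
    }

    /** Materializes a stream (e.g. an HTTP response body) into a fresh temporary file. */
    public static TempFile fromStream(InputStream in) {
        Path p = null;
        try {
            p = Files.createTempFile("enso-", ".tmp");
            Files.copy(in, p, StandardCopyOption.REPLACE_EXISTING);
            return new TempFile(p);
        } catch (IOException e) {
            if (p != null) new Deleter(p).run(); // do not leak a half-written file
            throw new UncheckedIOException(e);
        }
    }

    public Path path() {
        return path;
    }

    /** Deletes the file now; the Cleaner guarantees the action runs at most once. */
    @Override
    public void close() {
        cleanable.clean();
    }

    private record Deleter(Path path) implements Runnable {
        @Override
        public void run() {
            try {
                Files.deleteIfExists(path);
            } catch (IOException ignored) {
                // Best effort: nothing sensible to do from a cleaner thread.
            }
        }
    }
}
```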

* Build distribution for amd64 and aarch64 MacOS

Possible after the GraalVM upgrade.

* Another attempt at building on MacOS M1

* One less hardcoded architecture

* Eliminate one more hardcoded architecture

* add more debug info

* nit

Adds these JAR modules to the `component` directory inside Engine distribution:

- `graal-language-23.1.0`

- `org.bouncycastle.*` - these are required by the GraalPy language

# Important Notes

- Remove `org.bouncycastle.*` packages from `runtime.jar` fat jar.

- Make sure that the `./run` script preinstalls the GraalPy standalone distribution before starting the engine tests.

- Note that using `python -m venv` is only possible from the standalone distribution; we cannot distribute `graalpython-launcher`.

- Make sure that installation of `numpy` and its polyglot execution example works.

- Convert `Text` to `TruffleString` before passing to GraalPy - 8ee9a2816f

This is a follow-up to #7991, which broke `runtime-version-manager`. It mostly consists of reverts.

### Important Notes

The launcher now correctly recognizes which runtime the newest engine needs:

```sh
> java -jar launcher.jar list
Enso 2023.2.1-nightly.2023.10.31 -> GraalVM 23.0.0-java17.0.7
Enso 0.0.0-dev -> GraalVM 23.1.0-java21.0.1
```

(This did not work before.)

Ensures that the `-Dbench.compileOnly` system property is correctly forwarded to the benchmark runner, so that benchmarks are only *dry run* in the CI Engine tests.

# Important Notes

- Fixes dry run benchmarks in Engine Test Action

- Fixes Engine Benchmark Action

close #8249

Changelog:

- add: `profiling/snapshot` request that takes a heap dump of the language server and puts it in the `ENSO_DATA_DIRECTORY/profiling` directory
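For reference, a heap dump of a running JVM can be taken from Java through the JDK's `HotSpotDiagnosticMXBean`. The sketch below writes the dump into a `profiling` subdirectory of a given data directory. The `HeapSnapshot` class and the `heap-<millis>.hprof` naming scheme are hypothetical, and the actual `profiling/snapshot` handler may differ. The sketch requires a HotSpot-based JVM (such as GraalVM) that exposes `com.sun.management`.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.Instant;

/** Hypothetical helper that dumps the heap into <dataDir>/profiling. */
public final class HeapSnapshot {
    private HeapSnapshot() {}

    /** Hypothetical naming scheme: <dataDir>/profiling/heap-<epochMillis>.hprof */
    public static Path snapshotPath(Path dataDir, Instant now) {
        // Recent JDKs reject heap dump file names without the ".hprof" suffix.
        return dataDir.resolve("profiling").resolve("heap-" + now.toEpochMilli() + ".hprof");
    }

    public static Path takeSnapshot(Path dataDir) throws IOException {
        Path target = snapshotPath(dataDir, Instant.now());
        Files.createDirectories(target.getParent());
        HotSpotDiagnosticMXBean bean =
            ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        // live = true dumps only reachable objects (this forces a full GC first).
        // dumpHeap fails if the target file already exists.
        bean.dumpHeap(target.toString(), true);
        return target;
    }
}
```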

Upgrade to GraalVM JDK 21.

```
> java -version
openjdk version "21" 2023-09-19
OpenJDK Runtime Environment GraalVM CE 21+35.1 (build 21+35-jvmci-23.1-b15)
OpenJDK 64-Bit Server VM GraalVM CE 21+35.1 (build 21+35-jvmci-23.1-b15, mixed mode, sharing)
```

With SDKMan, download with `sdk install java 21-graalce`.

# Important Notes

- After this PR, one can theoretically run Enso with any JRE of version 21 or newer.

- Removed the `sbt bootstrap` hack and all the other build-time hacks related to handling the GraalVM distribution.

- `project-manager` remains backward compatible - it can open older engines with runtimes. New engines no longer require a separate runtime to be downloaded.

- sbt does not support compilation of `module-info.java` files in mixed projects - https://github.com/sbt/sbt/issues/3368

- This means that we can have `module-info.java` files only in Java-only projects.

- Anyway, we need just a single `module-info.class` in the resulting `runtime.jar` fat jar.

- `runtime.jar` is assembled in `runtime-with-instruments` with a custom merge strategy (`sbt-assembly` plugin). Caching is disabled for custom merge strategies, which means that re-assembly of `runtime.jar` will be more frequent.

- Engine distribution contains multiple JAR archives (modules) in `component` directory, along with `runner/runner.jar` that is hidden inside a nested directory.

- The new entry point to the engine runner is [EngineRunnerBootLoader](https://github.com/enso-org/enso/pull/7991/files#diff-9ab172d0566c18456472aeb95c4345f47e2db3965e77e29c11694d3a9333a2aa) that contains a custom ClassLoader - to make sure that everything that does not have to be loaded from a module is loaded from `runner.jar`, which is not a module.

- The new command line for launching the engine runner is in [distribution/bin/enso](https://github.com/enso-org/enso/pull/7991/files#diff-0b66983403b2c329febc7381cd23d45871d4d555ce98dd040d4d1e879c8f3725)

- [Newest version of Frgaal](https://repo1.maven.org/maven2/org/frgaal/compiler/20.0.1/) (20.0.1) does not recognize `--source 21` option, only `--source 20`.

The change upgrades the `directory-watcher` library, hoping that it will fix the problem reported in #7695 (there have been a number of bug fixes in the MacOS listener since then).

Once upgraded, tests in `WatcherAdapterSpec` failed because the logic that attempted to ensure the proper initialization order in the test using a semaphore was wrong. The watcher is now started using `watchAsync`, which only returns the future once the watcher has successfully registered for the paths. Ideally, the authors of the library would make the registration bit public (3218d68a84/core/src/main/java/io/methvin/watcher/DirectoryWatcher.java (L229C7-L229C20)), but this is the best we can do so far.

Also adapted to the new API in `PathWatcher`, ensuring the right order of initialization.

Should fix #7695.

- Fixes an issue that sometimes occurred on CI, where the old `services` configuration was not cleaned and SPI definitions were leaking between PRs, causing random failures:

```
❌ should allow selecting table rows based on a boolean column
An unexpected panic was thrown: java.util.ServiceConfigurationError: org.enso.base.file_format.FileFormatSPI: Provider org.enso.database.EnsoConnectionSPI not found
```

- The issue is fixed by detecting unknown SPI classes before the build, and if such classes are detected, cleaning the config and forcing a rebuild of the given library to ensure consistency of the service config.

- Follow-up of #8055
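The detection step can be sketched as follows. The sketch parses a `META-INF/services` provider-configuration file, where `#` starts a comment and blank lines are ignored, and reports every listed class that the library does not define. `ServiceConfigCheck` is a hypothetical name. How the set of known classes is collected (for example, from the compiled class files) is left as an assumption, and the actual build logic may work differently.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** Hypothetical pre-build check for stale SPI provider entries. */
public final class ServiceConfigCheck {
    private ServiceConfigCheck() {}

    /**
     * Returns provider class names listed in the services file that are not
     * in `knownClasses`. A non-empty result means the config is stale and the
     * library should be cleaned and rebuilt.
     */
    public static List<String> unknownProviders(Reader servicesFile, Set<String> knownClasses) {
        List<String> unknown = new ArrayList<>();
        try (BufferedReader reader = new BufferedReader(servicesFile)) {
            String line;
            while ((line = reader.readLine()) != null) {
                // ServiceLoader file format: everything after '#' is a comment,
                // surrounding whitespace and blank lines are ignored.
                int hash = line.indexOf('#');
                String name = (hash >= 0 ? line.substring(0, hash) : line).trim();
                if (!name.isEmpty() && !knownClasses.contains(name)) {
                    unknown.add(name);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return unknown;
    }
}
```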

- Adds a benchmark comparing performance of Enso Map and Java HashMap in two scenarios - _only incremental_ updates (like `Vector.distinct`) and _replacing_ updates (like keeping a counter for each key). These benchmarks can be used as a metric for #8090

Having modest-size files in a project would lead to a timeout when the project was first initialized. This became apparent when testing delivered `.enso-project` files with some data files. After some digging, I found a bug in JGit (https://bugs.eclipse.org/bugs/show_bug.cgi?id=494323) which made adding such files really slow. The implemented fix is not on by default, but even with `--renormalization` turned off I did not see an improvement.

In the end, it didn't make sense to add the `data` directory, or any files other than those in `src` and some meta files in `.enso`, to our version control. Not including such files eliminates the first-use initialization problems.
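The resulting rule can be expressed as a path predicate: track only `src` plus a few metadata files under `.enso`. The Java sketch below is hypothetical. `VcsPathFilter` is an illustrative name, and the metadata allow-list is passed in rather than hard-coded, since the exact files are not listed here. The sketch does not use the JGit API.

```java
import java.nio.file.Path;
import java.util.Set;
import java.util.function.Predicate;

/** Hypothetical filter deciding which project files go into version control. */
public final class VcsPathFilter implements Predicate<Path> {
    private final Set<Path> metaFiles;

    /** @param metaFiles allow-listed metadata files, relative to the project root */
    public VcsPathFilter(Set<Path> metaFiles) {
        this.metaFiles = Set.copyOf(metaFiles);
    }

    /** @param relative a path relative to the project root */
    @Override
    public boolean test(Path relative) {
        Path p = relative.normalize();
        // Everything under the top-level `src` directory is tracked.
        if (p.getNameCount() > 0 && p.getName(0).toString().equals("src")) {
            return true;
        }
        // Otherwise only explicitly allow-listed metadata files; `data` and
        // other large files are never staged.
        return metaFiles.contains(p);
    }
}
```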

# Important Notes

To test, pick an existing Enso project with some data files in it (> 100MB) and remove the `.enso/.vcs` directory. Previously, it would time out on the first try (and work in successive runs). Now it works even on the first try.

The crash:

```
[org.enso.languageserver.requesthandler.vcs.InitVcsHandler] Initialize project request [Number(2)] for [f9a7cd0d-529c-4e1d-a4fa-9dfe2ed79008] failed with: null.
java.util.concurrent.TimeoutException: null
at org.enso.languageserver.effect.ZioExec$.<clinit>(Exec.scala:134)
at org.enso.languageserver.effect.ZioExec.$anonfun$exec$3(Exec.scala:60)
at org.enso.languageserver.effect.ZioExec.$anonfun$exec$3$adapted(Exec.scala:60)
at zio.ZIO.$anonfun$foldCause$4(ZIO.scala:683)
at zio.internal.FiberRuntime.runLoop(FiberRuntime.scala:904)
at zio.internal.FiberRuntime.evaluateEffect(FiberRuntime.scala:381)
at zio.internal.FiberRuntime.evaluateMessageWhileSuspended(FiberRuntime.scala:504)
at zio.internal.FiberRuntime.drainQueueOnCurrentThread(FiberRuntime.scala:220)
at zio.internal.FiberRuntime.run(FiberRuntime.scala:139)
at akka.dispatch.TaskInvocation.run(AbstractDispatcher.scala:49)
at java.base/java.util.concurrent.ForkJoinTask$RunnableExecuteAction.exec(ForkJoinTask.java:1395)
at java.base/java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:373)
at java.base/java.util.concurrent.ForkJoinPool$WorkQueue.topLevelExec(ForkJoinPool.java:1182)
```

* Reduce extra output in compilation and tests

I couldn't stand the amount of extra output that we got when compiling a clean project and when executing regular tests. We should strive to keep the output clean and not print anything additional to stdout/stderr.

* Getting rid of explicit setup by service loading

For SLF4J to use service loading correctly, I had to upgrade to the latest slf4j. Unfortunately, `TestLogProvider`, which essentially delegates to the `logback` provider, leads to spurious warnings about multiple ambiguous providers. To dictate which one to use, and therefore eliminate the warnings, we can use the `slf4j.provider` system property, which is only available in slf4j 2.x.

Now there is no need to explicitly call `LoggerSetup.get().setup()`, as it is called during service setup.

* legal review

* linter

* Ensure ConsoleHandler uses the default level

ConsoleHandler's constructor uses `Level.INFO`, which is unnecessary for tests.

* report warnings

* Add support for https and wss

Preliminary support for https and wss. During language server startup, we read the application config and search for the `https` config with the necessary env vars set.

The configuration supports two modes of creating the SSL context: via the PKCS12 format and via a certificate + private key.

Fixes #7839.

* Added tests, improved documentation

Generic improvements along with actual tests.

* lint

* more docs + wss support

* changelog

* Apply suggestions from code review

Co-authored-by: Dmitry Bushev <bushevdv@gmail.com>

* PR comment

* typo

* lint

* make windows line endings happy

---------

Co-authored-by: Dmitry Bushev <bushevdv@gmail.com>
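The PKCS12 mode mentioned above can be sketched with standard JDK APIs alone. The steps are to load the keystore, initialize a `KeyManagerFactory` with it, and initialize an `SSLContext` from the resulting key managers. `Pkcs12SslContext` is a hypothetical name. Where the keystore path and password come from (config keys, env vars) is deliberately left to the caller, and the certificate + private key mode is not covered.

```java
import java.io.IOException;
import java.io.InputStream;
import java.security.GeneralSecurityException;
import java.security.KeyStore;
import javax.net.ssl.KeyManagerFactory;
import javax.net.ssl.SSLContext;

/** Hypothetical helper creating a server SSLContext from a PKCS12 keystore. */
public final class Pkcs12SslContext {
    private Pkcs12SslContext() {}

    /**
     * @param keystore PKCS12 data; null yields an empty keystore (KeyStore.load semantics)
     * @param password keystore and key password
     * @param protocol e.g. "TLS"
     */
    public static SSLContext fromPkcs12(InputStream keystore, char[] password, String protocol) {
        try {
            KeyStore ks = KeyStore.getInstance("PKCS12");
            ks.load(keystore, password);

            KeyManagerFactory kmf =
                KeyManagerFactory.getInstance(KeyManagerFactory.getDefaultAlgorithm());
            kmf.init(ks, password);

            SSLContext ctx = SSLContext.getInstance(protocol);
            // null trust managers and SecureRandom: use the JDK defaults.
            ctx.init(kmf.getKeyManagers(), null, null);
            return ctx;
        } catch (IOException | GeneralSecurityException e) {
            // Wrapped so that a misconfigured server fails fast at startup.
            throw new IllegalStateException("Cannot create SSLContext from PKCS12 keystore", e);
        }
    }
}
```

The resulting `SSLContext` can then be handed to whichever HTTP/WebSocket server binding serves https and wss.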

close #7871, close #7698

Changelog:

- fix: the `run` script logic to place the GraalVM runtime in the expected directory when building the bundle

- fix: the `makeBundles` SBT logic to place the GraalVM runtime in the expected directory

* Always log verbose to a file

The change adds an option, enabled by default, to always log to a file with the verbose log level.

The implementation is a bit tricky because in the most common use-case we have to always log in verbose mode to a socket and only later apply the desired log levels. Previously, the socket appender would apply the desired log level before forwarding the log.

If by default we log to a file, verbose mode is simply ignored and does not override user settings.

To test, run `project-manager` with the `ENSO_LOGSERVER_APPENDER=console` env variable. That will output to the console with the default `INFO` level, and to the file with the `TRACE` log level.

* add docs

* changelog

* Address some PR requests

1. Log INFO level to CONSOLE by default

2. Change runner's default log level from ERROR to WARN

It took a while to figure out why the correct log level wasn't being passed to the language server, which caused the (desired) verbose logs to be missing from the log file.

* linter

* 3rd party uses log4j for logging

Getting rid of the warning by adding a log4j over slf4j bridge:

```
ERROR StatusLogger Log4j2 could not find a logging implementation. Please add log4j-core to the classpath. Using SimpleLogger to log to the console...
```

* legal review update

* Make sure tests use test resources

Having `application.conf` in both `src/main/resources` and `test/resources` does not guarantee that tests will pick up the latter. Instead, by default it seems to do some kind of merge of the different configurations, which is far from desired.

* Ensure native launcher tests log to console only

Logging to the console and to (temporary) files is problematic on Windows.

The CI also revealed a problem with the native configuration: it was not possible to modify the launcher via env variables, as everything was initialized at build time.

* Adapt to method changes

* Potentially deal with Windows failures
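The "log everything, filter late" arrangement described in the commits above can be illustrated with a small Java sketch. Every event is forwarded unfiltered, and each sink applies its own threshold, so the console can stay at `INFO` while a file receives `TRACE`. The `Level` enum and `Sink` record are hypothetical simplifications, not the slf4j or logback API.

```java
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical router: events arrive unfiltered, each sink filters by its own level. */
public final class LevelRouting {
    public enum Level { TRACE, DEBUG, INFO, WARN, ERROR }

    /** A destination (console, file, ...) with its own minimum level. */
    public record Sink(Level threshold, Consumer<String> out) {
        void offer(Level level, String message) {
            if (level.compareTo(threshold) >= 0) {
                out.accept(level + " " + message);
            }
        }
    }

    private final List<Sink> sinks;

    public LevelRouting(List<Sink> sinks) {
        this.sinks = List.copyOf(sinks);
    }

    /** Receives every event (as if forwarded in verbose mode); filtering happens per sink. */
    public void log(Level level, String message) {
        for (Sink sink : sinks) {
            sink.offer(level, message);
        }
    }
}
```

Because the producer never drops events, changing the console level cannot accidentally starve the verbose log file, which was the failure mode described above.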

This change replaces Enso's custom logger with an existing, mostly off-the-shelf logging implementation. The change attempts to provide a 1:1 replacement for the existing solution while requiring only minimal initialization logic.

Loggers are configured completely via the `logging-server` section in the `application.conf` HOCON file; all initial logback configuration has been removed. This opens up a lot of interesting opportunities, because we can benefit from all the well-maintained slf4j implementations without being tied to them in terms of functionality.

Most important differences have been outlined in `docs/infrastructure/logging.md`.

# Important Notes

Addresses:

- #7253

- #6739

# Important Notes

#### The Plot

- there used to be two kinds of benchmarks: in Java and in Enso

- those in Java got quite a good treatment

- there even are results updated daily: https://enso-org.github.io/engine-benchmark-results/

- the benchmarks written in Enso used to be second-class citizens

#### The Revelation

This PR has the potential to fix it all!

- It designs a new [Bench API](88fd6fb988) ready for non-batch execution

- It allows for _single benchmark in a dedicated JVM_ execution

- It provides a simple way to wrap such an Enso benchmark as a Java benchmark

- thus the results of Enso and Java benchmarks are [now unified](https://github.com/enso-org/enso/pull/7101#discussion_r1257504440)

Long live _single benchmarking infrastructure for Java and Enso_!

Follow-up to the recent GraalVM update #7176 that fixes downloading GraalVM for Mac: instead of "darwin", the releases are now named "macos".

# Important Notes

Also re-enables the JDK/GraalVM version check as an `onLoad` hook of the `sbt` process. We used to have that check a long time ago. It produces errors like this one if `sbt` is run with a different JVM version:

```
[error] GraalVM version mismatch - you are running Oracle GraalVM 20.0.1+9.1 but GraalVM 17.0.7 is expected.
[error] GraalVM version check failed.
```

The added benchmark is a basis for a performance investigation.

We compare the performance of the same operation run in Java and in Enso to see what the overhead is, and try to get the Enso operations closer to pure-Java performance.

- Previous GraalVM update: https://github.com/enso-org/enso/pull/6750

Removed warnings:

- Remove deprecated `ConditionProfile.createCountingProfile()`.

- Add `@Shared` to some `@Cached` parameters (Truffle now emits warnings about potential `@Shared` usage).

- Specialization method names should not start with `execute`.

- Add the `limit` attribute to some specialization methods.

- Add `@NeverDefault` for some cached initializer expressions.

- Add `@Idempotent` or `@NonIdempotent` where appropriate.

BigInteger and potential Node inlining are tracked in follow-up issues.

# Important Notes

For `SDKMan` users:

```
sdk install java 17.0.7-graalce
sdk use java 17.0.7-graalce
```

For other users - download link can be found at https://github.com/graalvm/graalvm-ce-builds/releases/tag/jdk-17.0.7

Release notes: https://www.graalvm.org/release-notes/JDK_17/

The R component was dropped in release 23.0.0; only `python` is available to install via `gu install python`.

The current instructions to _build, use and debug_ `project-manager` and its engine/ls process are complicated and require a lot of symlinks to properly point to each other. This pull request simplifies all of that by introducing the `ENSO_ENGINE_PATH` and `ENSO_JVM_PATH` environment variables. It then hides all the complexity behind a simple _sbt command_: `runProjectManagerDistribution --debug`.

# Important Notes

I decided to tackle this problem as I have three repositories with different branches of Enso and switching between them requires me to mangle the symlinks. I hope I will not need to do that anymore with the introduction of the `runProjectManagerDistribution` command.

This PR modifies the builtin method processor so that it forbids arrays of non-primitive and non-guest objects in builtin methods. It also provides a proper implementation of the builtin methods in `EnsoFile`.

- Remove last `to_array` calls from `File.enso`

close #7194

Changelog:

- add: `/projects/{project_id}/enso_project` HTTP endpoint returning an `.enso-project` archive structure

- update: archive enso project to a `.enso-project` `.tar.gz` archive

- update: make project `path` a required field

- Add type detection for `Mixed` columns when calling column functions.

- Excel uses column name for missing headers.

- Add aliases for parse functions on text.

- Adjust `Date`, `Time_Of_Day` and `Date_Time` parse functions to not take `Nothing` anymore and provide dropdowns.

- Removed built-in parses.

- All support Locale.

- Add support for missing day or year for parsing a Date.

- All will trim values automatically.

- Added ability to list AWS profiles.

- Added ability to list S3 buckets.

- Added a workaround to `Table.aggregate` so that the default added item works.
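The parse options listed above (locale-aware parsing, automatic trimming, and defaults for a missing day or year) can be approximated with `java.time`. This is a sketch under that assumption, not the library's implementation. `LenientDateParser` and its parameters are hypothetical:

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeFormatterBuilder;
import java.time.temporal.ChronoField;
import java.util.Locale;

// Hypothetical helper built on java.time; not the standard library's actual code.
class LenientDateParser {
    /**
     * Parses {@code text} after trimming it. Day of month and year are only used as
     * defaults when the pattern does not contain them.
     */
    static LocalDate parse(String text, String pattern, Locale locale, int defaultDay, int defaultYear) {
        DateTimeFormatter formatter = new DateTimeFormatterBuilder()
                .parseCaseInsensitive()
                .appendPattern(pattern)
                .parseDefaulting(ChronoField.DAY_OF_MONTH, defaultDay)
                .parseDefaulting(ChronoField.YEAR, defaultYear)
                .toFormatter(locale);
        return LocalDate.parse(text.trim(), formatter);
    }

    public static void main(String[] args) {
        System.out.println(parse("  March 2023 ", "MMMM uuuu", Locale.ENGLISH, 1, 2000)); // 2023-03-01
        System.out.println(parse("14 mars", "d MMMM", Locale.FRENCH, 1, 2024));          // 2024-03-14
    }
}
```

Patterns should use `uuuu` for the year: `yyyy` parses the year-of-era field, which would conflict with the defaulted `YEAR`.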

Remove the magical code generation of `enso_project` method from codegen phase and reimplement it as a proper builtin method.

The old behavior of `enso_project` was special, and violated the language semantics (regarding the `self` argument):

- It was implicitly declared in every module, so it could be called without a self argument.

- It could be called with an explicit module as the self argument, e.g. `Base.enso_project` or `Visualizations.enso_project`.

Let's avoid implicit methods on modules and let's be explicit. Let's reimplement the `enso_project` as a builtin method. To comply with the language semantics, we will have to change the signature a bit:

- `enso_project` is a static method in the `Standard.Base.Meta.Enso_Project` module.

- It takes an optional `project` argument (instead of taking it as an explicit self argument).

Having the `enso_project` defined as a (shadowed) builtin method, we will automatically have suggestions created for it.

# Important Notes

- Truffle nodes are no longer generated in codegen phase for the `enso_project` method. It is a standard builtin now.

- The minimal import to use `enso_project` is now `from Standard.Base.Meta.Enso_Project import enso_project`.

- Tested implicitly by `org.enso.compiler.ExecCompilerTest#testInvalidEnsoProjectRef`.

Fixes #6416 by introducing `InlineableNode`. It runs fast even on GraalVM CE, fixes ([forever broken](https://github.com/enso-org/enso/pull/6442#discussion_r1178782635)) `Debug.eval` with `<|` and [removes discouraged subclassing](https://github.com/enso-org/enso/pull/6442#discussion_r1178778968) of `DirectCallNode`. It also introduces `@BuiltinMethod.needsFrame`, something that was requested by #6293. In this PR the attribute is still optional: its implicit value continues to be derived from the presence or absence of `VirtualFrame` in the builtin method argument list. Many methods had to be modified to pass the `VirtualFrame` parameter along to where it is needed.

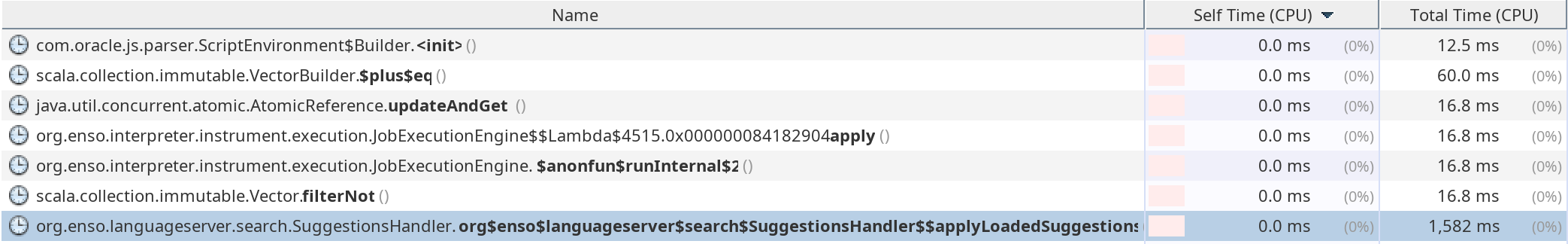

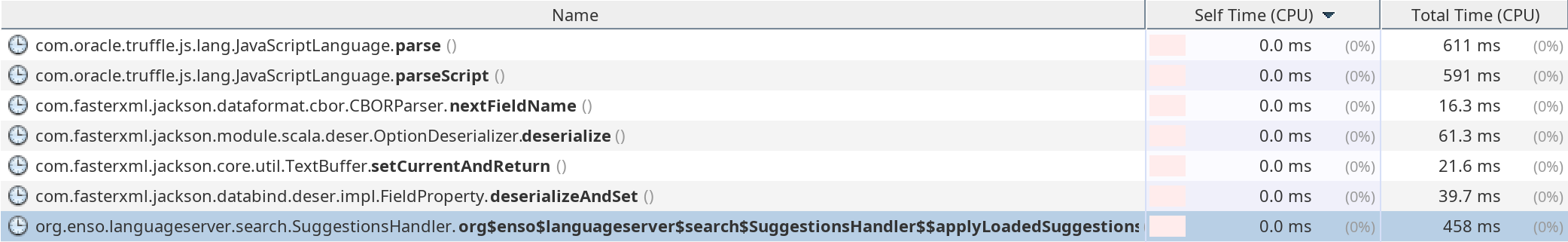

Closes #6080

Changelog:

- add: implement `SuggestionsRepo.insertAll` as a batch SQL insert

- update: `search/getSuggestionsDatabase` returns empty suggestions. Currently, the method is only used at startup and returns the empty response anyway because the libs are not loaded at that point.

- update: serialize only global (defined in the module scope) suggestions during the distribution building. There's no sense in storing the local library suggestions.

- update: sqlite dependency

- remove: unused methods from `SuggestionsRepo`

- remove: Arguments table

# Important Notes

Speeds up library loading by ~1 second.

This is the first part of the #5158 umbrella task. It closes #5158; follow-up tasks are listed as a comment in the issue.

- Updates all prototype methods dealing with `Value_Type` with a proper implementation.

- Adds a more precise mapping from in-memory storage to `Value_Type`.

- Adds a dialect-dependent mapping between `SQL_Type` and `Value_Type`.

- Removes obsolete methods and constants on `SQL_Type` that were not portable.

- Ensures that in the Database backend, operation results are computed based on what the Database intends to return (by asking the Database about the expected types of each operation).

- But also ensures that the result types are sane.

- While SQLite does not officially support a BOOLEAN affinity, we add a set of type overrides to our operations to ensure that Boolean operations return Boolean values and are not changed to integers as SQLite would suggest.

- Some methods in SQLite fall back to a NUMERIC affinity unnecessarily; we override these so that, for example, `max(text, text)` keeps the `text` type instead of falling back to numeric as SQLite would suggest.

- Adds ability to use custom fetch / builder logic for various types, so that we can support vendor specific types (for example, Postgres dates).
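The SQLite type-override idea can be sketched as a small lookup. It trusts the type reported by the database, except for operations known to be Boolean and for `MIN`/`MAX` over arguments that all share one type. The enum, the operation names and the rules below are hypothetical, chosen only to mirror the two SQLite bullets above:

```java
import java.util.List;
import java.util.Set;

// Hypothetical model of per-operation result-type overrides for an SQLite-like dialect.
class SqliteTypeOverrides {
    enum ValueType { BOOLEAN, INTEGER, FLOAT, CHAR }

    // Operations assumed to be logically Boolean even though SQLite reports an integer.
    static final Set<String> BOOLEAN_OPS =
            Set.of("==", "!=", "<", "<=", ">", ">=", "AND", "OR", "NOT", "IS_NULL");
    // Operations whose result should keep the argument type when all arguments agree.
    static final Set<String> SAME_TYPE_OPS = Set.of("MIN", "MAX");

    static ValueType resultType(String operation, ValueType reported, List<ValueType> argumentTypes) {
        if (BOOLEAN_OPS.contains(operation)) {
            return ValueType.BOOLEAN;
        }
        if (SAME_TYPE_OPS.contains(operation)
                && !argumentTypes.isEmpty()
                && argumentTypes.stream().allMatch(argumentTypes.get(0)::equals)) {
            return argumentTypes.get(0);
        }
        return reported; // otherwise trust what the database reports
    }

    public static void main(String[] args) {
        System.out.println(resultType("<", ValueType.INTEGER, List.of(ValueType.FLOAT, ValueType.FLOAT)));   // BOOLEAN
        System.out.println(resultType("MAX", ValueType.FLOAT, List.of(ValueType.CHAR, ValueType.CHAR)));    // CHAR
        System.out.println(resultType("+", ValueType.FLOAT, List.of(ValueType.INTEGER, ValueType.FLOAT))); // FLOAT
    }
}
```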

# Important Notes

- There are some TODOs left in the code. I'm still aligning follow-up tasks - once done I will try to add references to relevant tasks in them.

The `--compile` command would run the compilation pipeline but silently ignore any encountered errors, thus skipping serialization. This may have been a good idea in the past, but it is problematic now that we generate indexes at build time.

This resulted in rather obscure errors (#6092) for modules that were missing their caches.

The change should significantly improve developers' experience when working on stdlib.

# Important Notes

Making compilation more resilient to sudden cache misses is a separate item to be worked on.

The change adds support for generating suggestions and bindings when using the convenience tasks for building individual stdlib components. By default, the commands do not generate the index, since that increases build time; `buildStdLibAllWithIndex` does generate it.

Closes #5999.

Implement new Enso documentation parser; remove old Scala Enso parser.

Performance: Total time parsing documentation is now ~2ms.

# Important Notes

- Doc parsing is now done only in the frontend.

- Some engine tests had never been switched to the new parser. We should investigate tests that don't pass after the switch: #5894.

- The option to run the old searcher has been removed, as it is obsolete and was already broken before this (see #5909).

- Some interfaces used only by the old searcher have been removed.

Adds a common project that allows sharing code between the `runtime` and `std-bits`.

Due to classpath separation and the way it is compiled, the classes will be duplicated - we will have one copy for the `runtime` classpath and another copy as a small JAR for `Standard.Base` library.

This is still much better than having the code duplicated - now at least we have a single source of truth for the shared implementations.

Due to the copying we should not expand this project too much, but I encourage putting here any methods that would otherwise require us to duplicate code.

This may be a good place to put parts of the hashing logic to then allow sharing the logic between the `runtime` and the `MultiValueKey` in the `Table` library (cc: @Akirathan).

This change adds serialization and deserialization of library bindings.

In order to be functional, one first needs to generate IR and serialize bindings using the `--compile <path-to-library>` command. The bindings will be stored under the library with a `.bindings` suffix.

Bindings are generated during the `buildEngineDistribution` task, so no extra steps are required.

When resolving imports/exports, the compiler first tries to load the module's bindings from the cache. If successful, it does not schedule its imports/exports for immediate compilation, as we always did, but uses the bindings info to infer the dependent modules.

The current change does not make any optimizations when it comes to compiling the modules, yet. It only delays the actual compilation/loading of IR from cache so that it can be done in bulk.

Further optimizations will come from this opportunity, such as parallel loading of caches or lazily inferring only the necessary modules.
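Here is a minimal sketch of the cache-first resolution described above. It assumes bindings are just a list of dependent module names per module. `BindingsResolver`, its records and the `parseImports` fallback are hypothetical. The point is that a cache hit never triggers compilation, and every cache miss is only collected, so compilation can happen in bulk afterwards:

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Function;

// Hypothetical model of cache-first import resolution; not the compiler's actual code.
class BindingsResolver {
    /** Serialized bindings of a module: the modules it imports or exports. */
    record Bindings(List<String> dependencies) {}

    /** Modules resolved from cache, and modules whose compilation/IR loading is deferred. */
    record Result(List<String> fromCache, List<String> needsCompilation) {}

    static Result resolve(String root, Map<String, Bindings> cache,
                          Function<String, List<String>> parseImports) {
        Set<String> seen = new HashSet<>();
        List<String> fromCache = new ArrayList<>();
        List<String> needsCompilation = new ArrayList<>();
        Deque<String> queue = new ArrayDeque<>(List.of(root));
        while (!queue.isEmpty()) {
            String module = queue.poll();
            if (!seen.add(module)) {
                continue; // already visited
            }
            Bindings cached = cache.get(module);
            List<String> dependencies;
            if (cached != null) {
                // Cache hit: infer dependencies without compiling the module now.
                fromCache.add(module);
                dependencies = cached.dependencies();
            } else {
                // Cache miss: parse imports; compilation is only recorded, not run.
                needsCompilation.add(module);
                dependencies = parseImports.apply(module);
            }
            queue.addAll(dependencies);
        }
        // The caller can now compile or load IR for all collected modules in bulk.
        return new Result(fromCache, needsCompilation);
    }

    public static void main(String[] args) {
        Map<String, Bindings> cache = Map.of(
                "Main", new Bindings(List.of("A", "B")),
                "A", new Bindings(List.of("B")));
        Result result = resolve("Main", cache, module -> module.equals("B") ? List.of("C") : List.of());
        System.out.println(result);
    }
}
```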

Part of https://github.com/enso-org/enso/issues/5568 work.

Creates two `findExceptionMessage` methods, in `HostEnsoUtils` and in `VisualizationResult`. Why two? Because one of them uses the `org.graalvm.polyglot` SDK, as it runs in _"normal Java"_ mode. The other one uses the Truffle API, as it runs inside a partially evaluated instrument.

There is a `FindExceptionMessageTest` to guarantee consistency between the two methods. It simulates some exceptions in Enso code and checks that both methods extract the same _"message"_ from the exception. The test verifies host as well as Enso exceptions. However, testing other polyglot languages is only possible in other modules, so I created `PolyglotFindExceptionMessageTest`. That one doesn't have access to the Truffle API, i.e. it doesn't really check the consistency, just that a reasonable message is extracted from a JavaScript exception.

# Important Notes

This is not a full fix of #5260. Something needs to be done on the IDE side, as the IDE seems to ignore the delivered JSON message even if it contains a properly extracted exception message.

Automating the assembly of the engine and its execution into a single task. If you are modifying standard libraries, engine sources or Enso tests, you can launch `sbt` and then just:

```
sbt:enso> runEngineDistribution --run test/Tests/src/Data/Maybe_Spec.enso
[info] Engine package created at built-distribution/enso-engine-0.0.0-dev-linux-amd64/enso-0.0.0-dev
[info] Executing built-distribution/enso-engine-...-dev/bin/enso --run test/Tests/src/Data/Maybe_Spec.enso
Maybe: [5/5, 30ms]
- should have a None variant [14ms]
- should have a Some variant [5ms]
- should provide the `maybe` function [4ms]
- should provide `is_some` [2ms]
- should provide `is_none` [3ms]
5 tests succeeded.
0 tests failed.
0 tests skipped.
```

The [`runEngineDistribution`](3a581f29ee/docs/CONTRIBUTING.md#running-enso) `sbt` input task makes sure all your sources are properly compiled and only then executes your Enso source. Everything is ready with a single press of Enter.

# Important Notes

To debug in Chrome DevTools, just add `--inspect`:

```
sbt:enso> runEngineDistribution --inspect --run test/Tests/src/Data/Maybe_Spec.enso
E.g. in Chrome open: devtools://devtools/bundled/js_app.html?ws=127.0.0.1:9229/7JsgjXlntK8
```

Everything gets built, and one can then attach the Enso debugger.

- Updated `Widget.Vector_Editor` so it is ready for use by the IDE team.

- Added `get` to `Row` to make API more aligned.

- Added `first_column`, `second_column` and `last_column` to `Table` APIs.

- Adjusted `Column_Selector` and associated methods to have simpler API.

- Removed `Column` from `Aggregate_Column` constructors.

- Added new `Excel_Workbook` type and added to `Excel_Section`.

- Added new `SQLiteFormatSPI` and `SQLite_Format`.

- Added new `ImageFormatSPI` and `Image_Format`.

Before, any failures of the Rust-side of the parser build would be swallowed by sbt, for example if I add gibberish to the Rust code I will get:

<img width="474" alt="image" src="https://user-images.githubusercontent.com/1436948/217374050-fd9ddaca-136c-459e-932e-c4b9e630d610.png">

This is problematic, because when users are compiling in SBT they may get confusing errors about Java files not being found whereas the true cause is hard to track down because it is somewhere deep in the logs. We've run into this silent failure when setting up SBT builds together with @GregoryTravis today.

I suggest changing it so that once cargo fails, the build fails with a helpful message. This way it will be easier to track down the issues.

With these changes we get:

<img width="802" alt="image" src="https://user-images.githubusercontent.com/1436948/217374531-707ae348-4c55-4d62-9a86-93850ad8086b.png">

In order to investigate `engine/language-server` project, I need to be able to open its sources in IGV and NetBeans.

# Important Notes

By adding a Java source (this time `package-info.java`) and compiling it with our Frgaal compiler, the necessary `.enso-sources*` files are generated for `engine/language-server`. The `enso4igv` plugin can then open them and properly understand their compile settings.

In addition to that this PR enhances the _"logical view"_ presentation of the project by including all source roots found under `src/*/*`.

Enso unit tests were running without the `-ea` flag, so various invariant checks in Truffle code were not executed. Let's turn the `-ea` flag on and fix all the code that misbehaves.

- add: `GeneralAnnotation` IR node for `@name expression` annotations

- update: compilation pipeline to process the annotation expressions

- update: rewrite `OverloadsResolution` compiler pass so that it keeps the order of module definitions

- add: `Meta.get_annotation` builtin function that returns the result of annotation expression

- misc: improvements (private methods, lazy arguments, build.sbt cleanup)

Introduces unboxed (and arity-specialized) storage schemes for Atoms. It results in improvements both in memory consumption and runtime.

Memory wise: instead of using an array, we now use object fields. We also enable unboxing. This cuts a good few pointers in an unboxed object. E.g. a quadruple of integers is now 64 bytes (4x8 bytes for long fields + 16 bytes for layout and constructor pointers + 16 bytes for a class header). It used to be 168 bytes (4x24 bytes for boxed Longs + 16 bytes for array header + 32 bytes for array contents + 8 bytes for constructor ptr + 16 bytes for class header), so we're saving 104 bytes a piece. In the least impressive scenarios (all-boxed fields) we're saving 8 bytes per object (saving 16 bytes for array header, using 8 bytes for the new layout field). In the most-benchmarked case (list of longs), we save 32 bytes per cons-cell.

Time wise:

All list-summing benchmarks observe a ~2x speedup. List generation benchmarks get ~25x speedups, probably both due to less GC activity and better allocation characteristics (only allocating one object per Cons, rather than Cons + Object[] for fields). The "map-reverse" family gets a neat 10x speedup (part of the work is reading, which is 2x faster, the other is allocating, which is now 25x faster, we end up with 10x when combined).
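To make the layout difference concrete, here is a plain-Java analogy of the two storage schemes for a quadruple of longs. It is not Enso's actual `Atom` implementation. The class names are hypothetical, and the byte counts only re-add the per-component figures quoted above; they do not measure anything:

```java
// Sizes below are the per-component figures quoted in the description, not measurements.
final class AtomLayoutDemo {
    static int unboxedQuadBytes() {
        return 4 * 8   // four long fields
             + 16      // layout + constructor pointers
             + 16;     // object header
    }

    static int boxedQuadBytes() {
        return 4 * 24  // four boxed Longs
             + 16      // array header
             + 32      // array contents
             + 8       // constructor pointer
             + 16;     // object header
    }

    public static void main(String[] args) {
        QuadOfLongs boxed = new BoxedQuad("Quad", 1, 2, 3, 4);
        QuadOfLongs unboxed = new UnboxedQuad("Quad", 1, 2, 3, 4);
        long sumBoxed = 0;
        long sumUnboxed = 0;
        for (int i = 0; i < 4; i++) {
            sumBoxed += boxed.get(i);
            sumUnboxed += unboxed.get(i);
        }
        System.out.println(sumBoxed + " " + sumUnboxed);                           // 10 10
        System.out.println(boxedQuadBytes() - unboxedQuadBytes() + " bytes saved"); // 104 bytes saved
    }
}

/** Common read interface so both layouts can be used interchangeably. */
interface QuadOfLongs {
    long get(int index);
}

/** Generic layout: constructor pointer plus an Object[]; every long is boxed. */
final class BoxedQuad implements QuadOfLongs {
    private final Object constructor;
    private final Object[] fields;

    BoxedQuad(Object constructor, long a, long b, long c, long d) {
        this.constructor = constructor;
        this.fields = new Object[] {a, b, c, d}; // each long is autoboxed into a Long
    }

    @Override
    public long get(int index) {
        return (Long) fields[index];
    }
}

/** Arity-specialized layout: one object with four unboxed long fields. */
final class UnboxedQuad implements QuadOfLongs {
    private final Object constructor;
    private final Object layout = "4 x long"; // hypothetical layout descriptor
    private final long f0, f1, f2, f3;

    UnboxedQuad(Object constructor, long f0, long f1, long f2, long f3) {
        this.constructor = constructor;
        this.f0 = f0;
        this.f1 = f1;
        this.f2 = f2;
        this.f3 = f3;
    }

    @Override
    public long get(int index) {
        return switch (index) {
            case 0 -> f0;
            case 1 -> f1;
            case 2 -> f2;
            case 3 -> f3;
            default -> throw new IndexOutOfBoundsException(index);
        };
    }
}
```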

Use `InteropLibrary.isString` and `asString` to convert any string value to `byte[]`

# Important Notes

Also contains support for `Metadata.assertInCode` to help locate the right place in the code snippets.

The `runtime-with-instruments` project sets `-Dgraalvm.locatorDisabled=true`, which disables the discovery of available polyglot languages (installed with `gu`). On the other hand, enabling the locator makes polyglot languages available, but also causes the program classes and the test classes to be loaded by different classloaders. As a result, we're unable to use `EnsoContext` in tests to observe internal context state (an exception is thrown when you try to cast to `EnsoContext`).

The solution is to move tests that need polyglot support, but not `EnsoContext` introspection, to a separate project.

Disabling `musl`, as it isn't capable of loading dynamic libraries.

# Important Notes

With this change it is possible to:

```
$ sbt bootstrap
$ sbt engine-runner/buildNativeImage
$ ./runner --run ./engine/runner/src/test/resources/Factorial.enso 3
6
$ ./runner --run ./engine/runner/src/test/resources/Factorial.enso 4
24
$ ./runner --run ./engine/runner/src/test/resources/Factorial.enso 100
93326215443944152681699238856266700490715968264381621468592963895217599993229915608941463976156518286253697920827223758251185210916864000000000000000000000000
```

Is it OK, @radeusgd, to disable `musl`? If not, we would have to find a way to link the parser statically rather than dynamically.

Upgrading to GraalVM 22.3.0.

# Important Notes

- Removed all deprecated `FrameSlot`s and replaced them with frame indexes (integers).

- Add more information to `AliasAnalysis` so that it also gathers these indexes.

- Add quick build mode option to `native-image` as default for non-release builds

- `graaljs` and `native-image` should now be downloaded via `gu` automatically, as dependencies.

- Remove the `engine-runner-native` project: the native image is now built straight from `engine-runner`.

- We used to have `engine-runner-native` without `sqldf` in classpath as a workaround for an internal native image bug.

- Fixed chrome inspector integration, such that it shows values of local variables both for current stack frame and caller stack frames.

- There are still many issues with the debugging in general, for example, when there is a polyglot value among local variables, a `NullPointerException` is thrown and no values are displayed.

- Removed some deprecated `native-image` options

- Remove some deprecated Truffle API method calls.

Previously, when exporting the same module multiple times only the first statement would count and the rest would be discarded by the compiler.

This change allows for multiple exports of the same module e.g.,

```
export project.F1
from project.F1 export foo
```

Multiple exports may however lead to conflicts when combined with hiding names. Added logic in `ImportResolver` to detect such scenarios.
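Here is a sketch of one kind of conflict the resolver could look for: a module that is exported once with an explicit name and again with a `hiding` clause that names it. The `ExportConflicts` model and this specific rule are hypothetical; the actual checks in `ImportResolver` may differ:

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical model of one conflict rule; not the actual ImportResolver logic.
class ExportConflicts {
    /**
     * One export statement of {@code module}: {@code exposed} are names listed explicitly
     * (as in `from project.F1 export foo`), {@code hidden} are names in a `hiding` clause.
     */
    record Export(String module, Set<String> exposed, Set<String> hidden) {}

    /** Returns "module.name" for every name that one export exposes and another hides. */
    static Set<String> conflicts(List<Export> exports) {
        Map<String, Set<String>> exposed = new HashMap<>();
        Map<String, Set<String>> hidden = new HashMap<>();
        for (Export export : exports) {
            exposed.computeIfAbsent(export.module(), m -> new TreeSet<>()).addAll(export.exposed());
            hidden.computeIfAbsent(export.module(), m -> new TreeSet<>()).addAll(export.hidden());
        }
        Set<String> result = new TreeSet<>();
        exposed.forEach((module, names) -> {
            for (String name : names) {
                // Both maps have an entry for every exported module.
                if (hidden.get(module).contains(name)) {
                    result.add(module + "." + name);
                }
            }
        });
        return result;
    }

    public static void main(String[] args) {
        List<Export> exports = List.of(
                new Export("project.F1", Set.of("foo"), Set.of()),
                new Export("project.F1", Set.of(), Set.of("foo")));
        System.out.println(conflicts(exports)); // [project.F1.foo]
    }
}
```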

This fixes https://www.pivotaltracker.com/n/projects/2539304/stories/183092447

# Important Notes

Added a number of scenarios to simulate positive and negative results.

A 1-to-1 translation of the HTTPBin expected by our test suite, using Java's `HttpServer`.

It can be started from sbt via:

```
sbt:enso> simple-httpbin/run <hostname> <port>
```
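For reference, the JDK's `com.sun.net.httpserver.HttpServer` keeps such a server short. This sketch implements only a single `/get` endpoint. The class name, the JSON shape and the fallback host/port are assumptions and do not reproduce HTTPBin's real responses:

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

// Hypothetical single-endpoint sketch; not the simple-httpbin project's code.
class MiniHttpBin {
    /** Starts a server whose GET /get endpoint echoes the raw query string as JSON. */
    static HttpServer start(String host, int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(host, port), 0);
        server.createContext("/get", exchange -> {
            if (!"GET".equals(exchange.getRequestMethod())) {
                exchange.sendResponseHeaders(405, -1); // -1: no response body
                exchange.close();
                return;
            }
            String query = exchange.getRequestURI().getRawQuery();
            // No JSON escaping here; fine for a sketch, not for arbitrary input.
            String body = "{\"method\": \"GET\", \"query\": \"" + (query == null ? "" : query) + "\"}";
            byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().add("Content-Type", "application/json");
            exchange.sendResponseHeaders(200, bytes.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(bytes);
            }
        });
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        String host = args.length > 0 ? args[0] : "localhost";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 8080;
        start(host, port); // runs until the process is stopped
        System.out.println("Listening on " + host + ":" + port);
    }
}
```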

# Important Notes

@mwu-tow this means we can ditch the Go dependency completely and replace it with the above call.

This change adds support for Version Controlled projects in language server.

Version Control supports operations:

- `init` - initialize VCS for a project

- `save` - commit all changes to the project in VCS

- `restore` - ability to restore project to some past `save`

- `status` - show the status of the project from VCS' perspective

- `list` - show a list of requested saves

# Important Notes

Behind the scenes, Enso's VCS uses git (or rather [jGit](https://www.eclipse.org/jgit/)), but nothing stops us from using a different implementation as long as it conforms to the established API.

- Added expression ANTLR4 grammar and sbt based build.

- Added expression support to `set` and `filter` on the Database and InMemory `Table`.

- Added expression support to `aggregate` on the Database and InMemory `Table`.

- Removed old aggregate functions (`sum`, `max`, `min` and `mean`) from `Column` types.

- Adjusted the database `Column` `+` operator to do concatenation (`||`) for text types.

- Added power operator `^` to both `Column` types.

- Adjust `iif` to allow for columns to be passed for `when_true` and `when_false` parameters.

- Added `is_present` to database `Column` type.

- Added `coalesce`, `min` and `max` functions to both `Column` types performing row based operation.

- Added support for `Date`, `Time_Of_Day` and `Date_Time` constants in database.

- Added `read` method to InMemory `Column` returning `self` (or a slice).

# Important Notes

- Moved approximate type computation to `SQL_Type`.

- Fixed issue in `LongNumericOp` where it was always casting to a double.

- Removed `head` from InMemory Table (still has `first` method).

Makes sure `libenso_parser.so`, `.dll` or `.dylib` is packaged and included by `sbt buildEngineDistribution`.

# Important Notes

There was [a discussion](https://discord.com/channels/401396655599124480/1036562819644141598) about proper location of the library. It was concluded that _"there's no functional difference between a dylib and a jar."_ and as such the library is placed in `component` folder.

Currently the old parser is still used for parsing. This PR just integrates the build system changes and makes us ready for smooth flipping of the parser in the future as part of #3611.

We've had an old attempt at integrating a Rust parser with our Scala/Java projects. It seems to have been abandoned and is not used anywhere - it is also superseded by the new integration of the Rust parser. I think it was used as an experiment to see how to approach such an integration.

Since it is not used anymore, it makes sense to remove it, because it only adds some (slight, but non-zero) maintenance effort. We can always bring it back from git history if necessary.

- Reimplement the `Duration` type as a built-in type.

- `Duration` is an interop type.

- Allow Enso method dispatch on `Duration` interop coming from different languages.

# Important Notes

- The older `Duration` type should now be split into new `Duration` builtin type and a `Period` type.

- This PR does not implement `Period` type, so all the `Period`-related functionality is currently not working, e.g., `Date - Period`.

- This PR removes `Integer.milliseconds`, `Integer.seconds`, ..., `Integer.years` extension methods.
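The split mirrors the distinction that `java.time` makes between `Duration` (an exact amount of time) and `Period` (a calendar amount of years, months and days). Here is a plain-Java illustration, not Enso code. The class and method names are hypothetical:

```java
import java.time.Duration;
import java.time.LocalDate;
import java.time.Period;
import java.time.temporal.UnsupportedTemporalTypeException;

// Plain java.time illustration of the Duration/Period split; not Enso's builtins.
class DurationVsPeriod {
    /** Calendar arithmetic: subtracting one month respects month lengths. */
    static LocalDate minusPeriod(LocalDate date, Period period) {
        return date.minus(period);
    }

    /** A Duration is an exact number of seconds, so a date without a time component rejects it. */
    static boolean canSubtractDuration(LocalDate date, Duration duration) {
        try {
            date.minus(duration);
            return true;
        } catch (UnsupportedTemporalTypeException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        LocalDate endOfMarch = LocalDate.of(2022, 3, 31);
        System.out.println(minusPeriod(endOfMarch, Period.ofMonths(1)));          // 2022-02-28
        System.out.println(canSubtractDuration(endOfMarch, Duration.ofDays(1)));   // false
        // A date-time does accept a Duration: 36 hours is exactly 1 day and 12 hours.
        System.out.println(endOfMarch.atStartOfDay().minus(Duration.ofHours(36))); // 2022-03-29T12:00
    }
}
```

This is why operations such as `Date - Period` need the separate `Period` type rather than `Duration`.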

This PR adds the possibility to generate a native image for engine-runner.

Note that due to on-demand loading of stdlib, programs that make use of it are not yet supported (that will be resolved at a later point).

The purpose of this PR is only to make sure that we can generate a bare-minimum runner, because missing TruffleBoundaries or misconfiguration in the reflection config can break it very easily.

To generate a native image simply execute:

```
sbt> engine-runner-native/buildNativeImage
... (wait a few minutes)
```

The executable is called `runner` and can be tried out with a simple test program from the resources. To illustrate the benefits, see the timing difference between the non-native and the native one:

```
> time built-distribution/enso-engine-0.0.0-dev-linux-amd64/enso-0.0.0-dev/bin/enso --no-ir-caches --in-project test/Tests/ --run engine/runner-native/src/test/resources/Factorial.enso 6
720
real 0m4.503s
user 0m9.248s
sys 0m1.494s

> time ./runner --run engine/runner-native/src/test/resources/Factorial.enso 6
720
real 0m0.176s
user 0m0.042s
sys 0m0.038s
```

# Important Notes

Notice that due to a [bug in GraalVM](https://github.com/oracle/graal/issues/4200), which is already fixed in 22.x while we are still on 21.x for the time being, I had to add a workaround to our sbt build that builds a different fat jar for the native image, excluding the sqlite jar. Hence the native image task is on `engine-runner-native` and not on `engine-runner`.

We will need to add the above command to CI.

Implements https://www.pivotaltracker.com/story/show/182307143

# Important Notes

- Modified the dependencies of the standard library Java helpers so that the `std-table` module depends on `std-base` as a provided dependency. This is allowed because `std-table` is used by the `Standard.Table` Enso module, which depends on `Standard.Base`, which in turn ensures that `std-base` is loaded onto the classpath; whenever `std-table` is loaded by `Standard.Table`, so is `std-base`. We can therefore rely on classes from `std-base` and its dependencies being _provided_ on the classpath. Thanks to that, we can also use utilities like `Text_Utils` in `std-table`, avoiding code duplication. An additional advantage is that we don't need to specify ICU4J as a separate dependency for `std-table`, since it is 'taken' from `std-base` already, so we avoid including it in our build packages twice.

This change adds support for matching on constants by:

1) extending the parser to allow literals in patterns

2) generating a branch node for literals
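Here is a minimal runtime model of what matching on constants amounts to: a literal branch compares the scrutinee to its constant and falls through otherwise. `LiteralMatch` and its branch types are hypothetical and unrelated to the actual Truffle nodes:

```java
import java.util.List;
import java.util.Objects;
import java.util.Optional;
import java.util.function.Function;

// Hypothetical runtime model of case branches; not Enso's actual Truffle nodes.
class LiteralMatch {
    interface Branch {
        /** Returns the branch result if it matches {@code scrutinee}, otherwise empty. */
        Optional<Object> tryMatch(Object scrutinee);
    }

    /** Matches when the scrutinee equals the literal from the pattern. Bodies must not return null. */
    record LiteralBranch(Object literal, Function<Object, Object> body) implements Branch {
        @Override
        public Optional<Object> tryMatch(Object scrutinee) {
            return Objects.equals(literal, scrutinee)
                    ? Optional.of(body.apply(scrutinee))
                    : Optional.empty();
        }
    }

    /** Fallback branch (`_`) that matches anything. */
    record CatchAllBranch(Function<Object, Object> body) implements Branch {
        @Override
        public Optional<Object> tryMatch(Object scrutinee) {
            return Optional.of(body.apply(scrutinee));
        }
    }

    /** Tries branches in order and returns the result of the first match. */
    static Object evalCase(Object scrutinee, List<Branch> branches) {
        for (Branch branch : branches) {
            Optional<Object> result = branch.tryMatch(scrutinee);
            if (result.isPresent()) {
                return result.get();
            }
        }
        throw new IllegalStateException("No branch matches " + scrutinee);
    }

    public static void main(String[] args) {
        List<Branch> branches = List.of(
                new LiteralBranch(1L, x -> "one"),
                new LiteralBranch("foo", x -> "the text foo"),
                new CatchAllBranch(x -> "something else"));
        System.out.println(evalCase(1L, branches));    // one
        System.out.println(evalCase("foo", branches)); // the text foo
        System.out.println(evalCase(2L, branches));    // something else
    }
}
```

`Objects.equals` distinguishes `1L` from `1`, so a real implementation would have to normalize numeric types before comparing.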

Related to https://www.pivotaltracker.com/story/show/182743559

Execution of `sbt runtime/bench` doesn't seem to be part of the gate. As such, a change to the Enso language syntax, standard libraries, runtime & co. can break the benchmark suite without being noticed. Integrating such a PR causes unnecessary disruption to others using the benchmarks.

Let's make sure verification of the benchmarks (i.e. that they compile and can execute without error) is part of the CI.

# Important Notes

Currently the gate will fail. The fix is being prepared in a parallel PR, #3639. When the two PRs are combined, the gate should succeed again.

The option asks sbt to print a final test report for each project at the end of the run.

That way, when running the task in aggregate mode, we have a summary at the end rather than somewhere in the large output of the individual subprojects.

Updated the SQLite, PostgreSQL and Redshift drivers.

# Important Notes

Updated the API for Redshift and confirmed that it can connect without the ini file workaround.

This PR merges the existing variants of `LiteralNode` (`Integer`, `BigInteger`, `Decimal`, `Text`) into a single `LiteralNode`. It adds a `PatchableLiteralNode` variant (with a non-`final` `value` field) and uses `Node.replace` to make the AST patchable. With this change, the `UnwindHelper` workaround can be removed, as `IdExecutionInstrument` now sees _patched_ return values without any tricks.

This change makes sure that the Runtime configuration of `runtime` is listed as a dependency of `runtime-with-instruments`.

That way `buildEngineDistribution`, which indirectly depends on `runtime-with-instruments`/assembly, triggers compilation of std-bits if necessary.

# Important Notes

Minor adjustments for a problem introduced in https://github.com/enso-org/enso/pull/3509

New plan to [fix the `sbt` build](https://www.pivotaltracker.com/n/projects/2539304/stories/182209126) and its annoying:

```
log.error(
  "Truffle Instrumentation is not up to date, " +
  "which will lead to runtime errors\n" +
  "Fixes have been applied to ensure consistent Instrumentation state, " +
  "but compilation has to be triggered again.\n" +
  "Please re-run the previous command.\n" +
  "(If this for some reason fails, " +
  s"please do a clean build of the $projectName project)"
)
```

Since it is hard to fix `sbt` incremental compilation, let's restructure our project sources so that each `@TruffleInstrument` and `@TruffleLanguage` registration is in an individual compilation unit. Each such unit is either compiled or not compiled as a batch. That eliminates the `sbt` incremental compilation issues without addressing them in `sbt` itself.

fa2cf6a33ec4a5b2e3370e1b22c2b5f712286a75 is the first step - it introduces `IdExecutionService` and moves all the `IdExecutionInstrument` API up to that interface. The rest of the `runtime` project then depends only on `IdExecutionService`. Such refactoring allows us to move the `IdExecutionInstrument` out of `runtime` project into independent compilation unit.

Auto-generate all builtin methods for builtin `File` type from method signatures.

Similarly, for `ManagedResource` and `Warning`.

Additionally, support for specializations for overloaded and non-overloaded methods is added.

Coverage can be tracked by the number of hard-coded builtin classes that are now deleted.

## Important notes

Notice how `type File` now lacks the `prim_file` field, and we were able to get rid of all those propagating method calls without writing a single builtin node class.

Similarly `ManagedResource` and `Warning` are now builtins and `Prim_Warnings` stub is now gone.

Drop the `Core` implementation (a replacement for IR), as it (sadly) looks increasingly unlikely that this effort will be continued. Also, it relies heavily on implicits, which increases compilation time (~1 sec from `clean`).

Related to https://www.pivotaltracker.com/story/show/182359029

This change introduces a custom LogManager for the console that allows excluding certain log messages. The primary reason for introducing such a LogManager/Appender is to stop issuing hundreds of pointless warnings coming from the analyzing compiler (a wrapper around javac) for classes that are generated by annotation processors.

The output looks like this:

```
[info] Cannot install GraalVM MBean due to Failed to load org.graalvm.nativebridge.jni.JNIExceptionWrapperEntryPoints
[info] compiling 129 Scala sources and 395 Java sources to /home/hubert/work/repos/enso/enso/engine/runtime/target/scala-2.13/classes ...
[warn] Unexpected javac output: warning: File for type 'org.enso.interpreter.runtime.type.ConstantsGen' created in the last round will not be subject to annotation processing.
[warn] 1 warning.
[info] [Use -Dgraal.LogFile=<path> to redirect Graal log output to a file.]
[info] Cannot install GraalVM MBean due to Failed to load org.graalvm.nativebridge.jni.JNIExceptionWrapperEntryPoints
[info] foojavac Filer
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.number.decimal.CeilMethodGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.resource.TakeNodeGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.error.ThrowErrorMethodGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.number.smallInteger.MultiplyMethodGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.warning.GetWarningsNodeGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.number.smallInteger.BitAndMethodGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.error.ErrorToTextNodeGen
[warn] Could not determine source for class org.enso.interpreter.node.expression.builtin.warning.GetValueMethodGen
[warn] Could not determine source for class org.enso.interpreter.runtime.callable.atom.AtomGen$MethodDispatchLibraryExports$Cached
....
```

The output now has over 500 of those, and there will be many more (generated by our own and Truffle's processors).

There is no way to tell sbt that those are OK. One could potentially think of splitting compilation into 3 stages (Java processors, Java and Scala), but that would further complicate the already non-trivial build definition, and we may still end up with the initial problem.

This is a fix to make it possible to get reasonable feedback from compilation without scrolling through multiple screens *every single time*.

Also fixed a spurious warning in the javac processor complaining about creating files in the last round.
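The same idea can be expressed with `java.util.logging`, as a hypothetical analogue of the sbt console appender (the class name and the regular expression are assumptions). Warnings about classes produced by annotation processors are dropped; all other messages, and anything more severe than a warning, still get through:

```java
import java.util.List;
import java.util.logging.Filter;
import java.util.logging.Level;
import java.util.logging.LogRecord;
import java.util.logging.Logger;
import java.util.regex.Pattern;

// Hypothetical java.util.logging analogue of the sbt console filter.
class NoisyWarningFilter implements Filter {
    private final List<Pattern> excluded;

    NoisyWarningFilter(List<Pattern> excluded) {
        this.excluded = List.copyOf(excluded);
    }

    /** Drops "Could not determine source" warnings for generated classes (names ending in Gen or Gen$...). */
    static NoisyWarningFilter forGeneratedClasses() {
        return new NoisyWarningFilter(List.of(
                Pattern.compile("^Could not determine source for class \\S*Gen(\\$\\S*)?$")));
    }

    @Override
    public boolean isLoggable(LogRecord record) {
        // Never hide anything more severe than a warning.
        if (record.getLevel().intValue() > Level.WARNING.intValue()) {
            return true;
        }
        String message = record.getMessage();
        return message == null || excluded.stream().noneMatch(p -> p.matcher(message).find());
    }

    public static void main(String[] args) {
        Logger logger = Logger.getLogger("build");
        logger.setFilter(forGeneratedClasses());
        logger.warning("Could not determine source for class org.enso.interpreter.node.expression.builtin.resource.TakeNodeGen"); // suppressed
        logger.warning("compiling 129 Scala sources"); // printed
    }
}
```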

Related to https://www.pivotaltracker.com/story/show/182138198

`interpreter-dsl` should only attempt to run explicitly specified processors. That way, even if the generated `META-INF/services/javax.annotation.processing.Processor` is present, it does not attempt to apply those processors to itself.

This change makes errors related to

```
[warn] Unexpected javac output: error: Bad service configuration file, or
exception thrown while constructing Processor object:
javax.annotation.processing.Processor: Provider org.enso.interpreter.dsl....
```

a thing of the past. This was super annoying when switching branches and required either cleaning the project or removing the file by hand.

Related to https://www.pivotaltracker.com/story/show/182297597

@radeusgd discovered that no formatting was being applied to std-bits projects.

This was caused by the fact that the `enso` project didn't aggregate them. Compilation and packaging still worked because we relied on the output of some tasks, but

```
sbt> javafmtAll
```

didn't apply it to `std-bits`.

# Important Notes

Apart from `build.sbt` no manual changes were made.

This is the 2nd part of DSL improvements that allow us to generate a lot of builtins-related boilerplate code.

- [x] generate multiple method nodes for methods/constructors with varargs

- [x] expanded processing to allow @Builtin to be added to classes and generate @BuiltinType classes

- [x] generate code that wraps exceptions to panic via the `wrapException` annotation element (see `@Builtin.WrapException`)

Also rewrote @Builtin annotations to be more structured and introduced some nesting, such as @Builtin.Method or @Builtin.WrapException.

This is part of incremental work and a follow up on https://github.com/enso-org/enso/pull/3444.

# Important Notes

Notice the number of boilerplate classes removed to see the impact.

For now only applied to `Array` but should be applicable to other types.

Before, when running Enso with `-ea`, some assertions were broken and the interpreter would not start.

This PR fixes two very minor bugs that were the cause of this - now we can successfully run Enso with `-ea`, to test that any assertions in Truffle or in our own libraries are indeed satisfied.

Additionally, this PR adds a setting to SBT that ensures that IntelliJ uses the right language level (Java 17) for our projects.

A low-hanging fruit where we can automate the generation of many @BuiltinMethod nodes simply from the runtime's method signatures.

This change introduces another annotation, @Builtin, to distinguish it from @BuiltinType and @BuiltinMethod processing. @Builtin processing will always be the first stage of processing, and its output will be fed to the latter.

Note that the return type of Array.length() is changed from `int` to `long` because we probably don't want to add a ton of specializations for the former (see comparator nodes for details), and it is fine to cast it in a small number of places.

Progress is visible in the number of deleted hardcoded classes.

This is an incremental step towards #181499077.

# Important Notes

This process does not attempt to cover all cases. Not yet, at least.

We only handle simple methods and constructors (see removed `Array` boilerplate methods).

Auxiliary sbt commands for building individual stdlib packages.

The commands check if the engine distribution was built at least once, and then copy only the necessary package files.

So far added:

- `buildStdLibBase`

- `buildStdLibDatabase`

- `buildStdLibTable`

- `buildStdLibImage`

- `buildStdLibGoogle_Api`

Related to [#182014385](https://www.pivotaltracker.com/story/show/182014385)