* Rename makeUnique overloads to avoid issue when Nothing is passed.

Suspend warnings when building the output table to avoid mass warning duplication.

* Add test for mixed invalid names.

Adjust so that a single warning is attached.

* PR comments.

related #6323

Fixes the following scenario:

- preprocessor function catches `ThreadInterruptedException` and returns it as JSON:

```json
{"error":"org.enso.interpreter.runtime.control.ThreadInterruptedException"}
```

- engine thinks that the visualization was computed successfully and just returns it to the user (without doing any fallback logic like retrying the computation)

- IDE displays blank visualization

- Added missing tests for number parsing.

- Fix type signature on some warning methods.

- Fix warnings on `Standard.Database.Data.Table.parse_values`.

- Added test for `Nothing` and empty string on `use_first_row_as_names`.

- New API for `Number.format` taking a simple format string and `Locale`.

- Add ellipsis to truncated `Text.to_display_text`.

- Adjusted built-in `to_display_text` for numbers to no longer include the type (and to display BigInteger as its value).

- Remove `Noise.Generator` interface type.

- Json: Added `to_display_text` to `JS_Object`.

- Time: Added `to_display_text` for `Date`, `Time_Of_Day`, `Date_Time`, `Duration` and `Period`.

- Text: Added `to_display_text` to `Locale`, `Case_Sensitivity`, `Encoding`, `Text_Sub_Range`, `Span`, `Utf_16_Span`.

- System: Added `to_display_text` to `File`, `File_Permissions`, `Process_Result` and `Exit_Code`.

- Network: Added `to_display_text` to `URI`, `HTTP_Status_Code` and `Header`.

- Added `to_display_text` to `Maybe`, `Regression`, `Pair`, `Range`, `Filter_Condition`.

- Added support for `to_js_object` and `to_display_text` to `Random_Number_Generator`.

- Verified all error types have `to_display_text`.

- Removed the JS-based rendering of `BigInt`, `Date`, `Date_Time` and `Time_Of_Day`, as they now use `to_display_text`.

- Added support for rendering nested structures in the table viz.

Follow-up to #6298, split out because that PR grew too large. Adds the needed type checks to aggregate operations. Ensures that the DB operations report the `Floating_Point_Equality` warning consistently with the in-memory backend.

- Add `replace` with same syntax as on `Text` to an in-memory `Column`.

- Add `trim` with same syntax as on `Text` to an in-memory `Column`.

- Add `trim` to in-database `Column`.

- Added `is_supported` to dialects and exposed the dialect consistently on the `Connection`.

- Add `write_table` support to `JSON_File` allowing `Table.write` to write JSON.

- Updated the parsing for integers and decimals:
  - Support for currency symbols.
  - Support for brackets for negative numbers.
  - Automatic detection of decimal points and thousand separators.
  - Tighter rules for scientific and thousand separated numbers.

- Remove `replace_text` from `Table`.

- Remove `write_json` from `Table`.
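The number-parsing rules listed above can be illustrated with a simplified Python sketch. The function `parse_number`, the `CURRENCY_SYMBOLS` set and the heuristic for telling decimal points from thousands separators are assumptions for illustration. This is not the actual Enso parser, and it does not cover scientific notation.

```python
import re

# Hypothetical set of currency symbols; the real parser's list may differ.
CURRENCY_SYMBOLS = "$€£¥"
DIGITS = re.compile(r"[0-9]+")


def parse_number(text):
    """Parse text such as "(1,234.50)", "$1,000" or "1.234,5"; return None if invalid."""
    s = text.strip()
    negative = False
    # Accounting style: brackets denote a negative number, e.g. "(12.50)".
    if s.startswith("(") and s.endswith(")"):
        negative = True
        s = s[1:-1].strip()
    # Allow a currency symbol on either side of the sign, e.g. "-$5" or "$-5".
    s = s.strip(CURRENCY_SYMBOLS).strip()
    if s[:1] in ("+", "-"):
        if s[0] == "-":
            negative = not negative
        s = s[1:].strip(CURRENCY_SYMBOLS).strip()

    # Detect which character is the decimal point.
    if "." in s and "," in s:
        # Both present: the one appearing last is the decimal point.
        decimal = "." if s.rfind(".") > s.rfind(",") else ","
        thousands = "," if decimal == "." else "."
    elif "." in s or "," in s:
        sep = "." if "." in s else ","
        parts = s.split(sep)
        # Heuristic: a repeated separator, or exactly three trailing digits,
        # is read as a thousands separator; otherwise as the decimal point.
        if len(parts) > 2 or len(parts[1]) == 3:
            decimal, thousands = None, sep
        else:
            decimal, thousands = sep, None
    else:
        decimal = thousands = None

    int_part, frac_part = s, ""
    if decimal:
        int_part, _, frac_part = s.partition(decimal)
    if thousands:
        groups = int_part.split(thousands)
        # Tighter rule: the first group has 1-3 digits, every later group exactly 3.
        if not 1 <= len(groups[0]) <= 3 or any(len(g) != 3 for g in groups[1:]):
            return None
        int_part = "".join(groups)
    if not DIGITS.fullmatch(int_part) or (decimal and not DIGITS.fullmatch(frac_part)):
        return None
    value = float(f"{int_part}.{frac_part}") if decimal else int(int_part)
    return -value if negative else value
```

Some inputs have a single separator followed by exactly three digits, such as `1,000` or `1.234`. These are inherently ambiguous, and this sketch reads them as thousands-separated integers.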

This PR implements support for [JavaScript's Same Value Zero Equality](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Equality_comparisons_and_sameness#same-value-zero_equality) so that `Number.nan` can be used as a key in `Map`.

# Important Notes

- For NaN, `Meta.is_same_object Number.nan Number.nan` holds while `Number.nan != Number.nan`, following the JS spec.

- `Meta.is_same_object x y` implies `Any.== x y`, except for `Number.nan`.
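Here is a minimal Python sketch of SameValueZero keys. It assumes a map that normalizes every NaN to one canonical key. The names `same_value_zero`, `SameValueZeroMap` and `_NAN_KEY` are hypothetical and do not come from Enso's implementation. As in the notes above, plain `==` still treats NaN as unequal to itself.

```python
import math


class _NaNKey:
    """Single canonical stand-in for every NaN key (compared by identity)."""
    __slots__ = ()

    def __repr__(self):
        return "NaN"


_NAN_KEY = _NaNKey()


def same_value_zero(a, b):
    """JavaScript SameValueZero: like ==, except NaN equals NaN (and 0.0 equals -0.0)."""
    if isinstance(a, float) and isinstance(b, float) and math.isnan(a) and math.isnan(b):
        return True
    return a == b


class SameValueZeroMap:
    """A dict wrapper whose keys are compared with SameValueZero."""

    def __init__(self):
        self._data = {}

    @staticmethod
    def _normalize(key):
        # All NaNs collapse to one key; 0.0 and -0.0 already compare and hash equal.
        if isinstance(key, float) and math.isnan(key):
            return _NAN_KEY
        return key

    def insert(self, key, value):
        self._data[self._normalize(key)] = value
        return self

    def get(self, key, default=None):
        return self._data.get(self._normalize(key), default)

    def __len__(self):
        return len(self._data)
```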

`Vector.sort` performs custom method dispatch that always expected a function as the `by` and `on` arguments. At the same time, `UnresolvedSymbol` is treated like a (to be resolved) `Function`, and under normal circumstances there would be no difference between `_.foo` and `.foo` provided as arguments.

Rather than adding an additional phase that performs some form of eta-expansion to accommodate this custom dispatch, this change fixes the problem locally. We accept both `Function` and `UnresolvedSymbol` and perform the resolution on the fly. Ideally, we would have a specialization for the latter but, again, it would depend on the contents of the `Vector`, so it is unclear whether that would be better.

Closes #6276.

# Important Notes

There was a suggestion to modify our codegen to accommodate this scenario, but I went against it. A lot of name literals do have the `isMethod` flag, and that information is used in the passes, but it should not control how (late) codegen is done. If we were to make this more generic, I would suggest adding a separate eta-expansion pass. However, that could affect other things and would potentially be a significant change with limited initial benefit, so it remains a possible future work item.
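The local fix can be sketched in Python. The `on` argument is either a function or an unresolved symbol, and a symbol is resolved against each element at sort time. `UnresolvedSymbol`, `apply_key` and `sort_on` are hypothetical stand-ins for the Enso builtins.

```python
from dataclasses import dataclass
from typing import Any, Callable, Union


@dataclass(frozen=True)
class UnresolvedSymbol:
    """Stand-in for Enso's `.foo`: a method name resolved against each value."""
    name: str


Key = Union[Callable[[Any], Any], UnresolvedSymbol]


def apply_key(key: Key, value: Any) -> Any:
    # Resolve the symbol on the fly against the concrete value, so `.foo`
    # behaves like `_.foo`; plain functions are simply called.
    if isinstance(key, UnresolvedSymbol):
        attr = getattr(value, key.name)
        return attr() if callable(attr) else attr
    return key(value)


def sort_on(items, on: Key):
    return sorted(items, key=lambda v: apply_key(on, v))
```

The symbol is resolved per element, so the target method can differ between elements of different types. That is why a specialization would depend on the contents of the `Vector`.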

https://github.com/orgs/enso-org/discussions/6344 requested to change the order of arguments when controlling context permissions.

# Important Notes

The change brings it closer to the design doc but IMHO also makes it a bit more cumbersome to use (see the changed tests): applications involving default arguments don't play well when the last argument is not the default 🤷.

* Don't propagate warnings on suspended arguments

In the current implementation, application of arguments with warnings first extracts the warnings, performs the application and then appends the warnings to the result.

This process was, however, too eager if the suspended argument was a literal (we don't know whether it will be executed at all).

The change modifies the method processor to take the `@Suspend` annotation into account and not gather warnings before the application takes place.

* PR review
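Here is a minimal Python sketch of the rule. It assumes that warnings are modelled as a `WithWarnings` wrapper and that suspended parameters are flagged explicitly; both are hypothetical. Warnings are extracted only from eagerly evaluated arguments. Suspended arguments are passed through untouched.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class WithWarnings:
    value: Any
    warnings: List[str] = field(default_factory=list)


def apply_with_warnings(fn: Callable, args: list, suspended: list):
    """Call fn, propagating warnings only from eagerly evaluated arguments.

    suspended[i] is True for parameters marked as suspended (like `@Suspend`);
    those arguments are passed through untouched, because we cannot know
    whether fn will ever use them.
    """
    gathered = []
    plain_args = []
    for arg, is_suspended in zip(args, suspended):
        if not is_suspended and isinstance(arg, WithWarnings):
            gathered.extend(arg.warnings)
            plain_args.append(arg.value)
        else:
            plain_args.append(arg)
    result = fn(*plain_args)
    return WithWarnings(result, gathered) if gathered else result


def choose(cond, when_true, when_false):
    """Example function whose two branches are suspended parameters."""
    return when_true if cond else when_false
```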

* Update type ascriptions in some operators in Any

* Add @GenerateUncached to AnyToTextNode.

Will be used in another node with @GenerateUncached.

* Add tests for "sort handles incomparable types"

* Vector.sort handles incomparable types

* Implement sort handling for different comparators

* Comparison operators in Any do not throw Type_Error

* Fix some issues in Ordering_Spec

* Remove the remaining comparison operator overrides for numbers.

* Consolidate all sorting functionality into a single builtin node.

* Fix warnings attachment in sort

* PrimitiveValuesComparator handles other types than primitives

* Fix byFunc calling

* on function can be called from the builtin

* Fix build of native image

* Update changelog

* Add VectorSortTest

* Builtin method should not throw DataflowError.

If it does, the message is discarded (a bug?)

* TypeOfNode may return something other than a Type

* UnresolvedSymbol is not supported as `on` argument to Vector.sort_builtin

* Fix docs

* Fix bigint spec in LessThanNode

* Small fixes

* Small fixes

* Nothings and NaNs are sorted at the end of the default comparator group.

But not at the very end of the resulting vector.

* Fix checking of `by` parameter - now accepts functions with default arguments.

* Fix changelog formatting

* Fix imports in DebuggingEnsoTest

* Remove Array.sort_builtin

* Add comparison operators to micro-distribution

* Remove Array.sort_builtin

* Replace Incomparable_Values by Type_Error in some tests

* Add on_incomparable argument to Vector.sort_builtin

* Fix after merge - Array.sort delegates to Vector.sort

* Add more tests for problem_behavior on Vector.sort

* SortVectorNode throws only Incomparable_Values.

* Delete Collections helper class

* Add test for expected failure for custom incomparable values

* Cosmetics.

* Fix test expecting different comparators warning

* isNothing is checked via interop

* Remove TruffleLogger from SortVectorNode

* Small review refactorings

* Revert "Remove the remaining comparison operator overrides for numbers."

This reverts commit 0df66b1080.

* Improve bench_download.py tool's `--compare` functionality.

- Output table is sorted by benchmark labels.

- Do not fail when there are different benchmark labels in both runs.

* Wrap potential interop values with `HostValueToEnsoNode`

* Use alter function in Vector_Spec

* Update docs

* Invalid comparison throws Incomparable_Values rather than Type_Error

* Number comparison builtin methods return Nothing in case of incomparables
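The commits above describe a grouping behaviour: Nothing and NaN go to the end of the default comparator group, not to the end of the whole vector. That behaviour can be sketched in Python. The `group_of` function is an assumption for illustration, and so is the order in which groups are emitted (first appearance).

```python
import math


def _is_nothing_or_nan(v):
    return v is None or (isinstance(v, float) and math.isnan(v))


def sort_default_group(values):
    """Sort the default comparator group, keeping None/NaN at the group's end.

    None and NaN cannot be ordered against other values, so they are kept
    in their original relative order after the sorted regular values.
    """
    regular = [v for v in values if not _is_nothing_or_nan(v)]
    missing = [v for v in values if _is_nothing_or_nan(v)]
    return sorted(regular) + missing


def sort_with_groups(values, group_of):
    """Sort each comparator group separately and concatenate the results.

    group_of(v) names the comparator group of v (hypothetical); groups are
    emitted in order of first appearance, which is an assumption.
    """
    groups = {}
    for v in values:
        groups.setdefault(group_of(v), []).append(v)
    result = []
    for name, members in groups.items():
        if name == "default":
            result.extend(sort_default_group(members))
        else:
            result.extend(sorted(members))
    return result
```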

The primary motivation for this change was https://github.com/enso-org/enso/issues/6248, which requested the possibility of defining `to_display_text` methods of common errors via regular method definitions. Until now, one could only define them via builtins.

To be able to support that, polyglot invocation had to report `to_display_text` in the list of (invokable) members, which it didn't. Until now, it only considered fields of constructors and builtin methods. That is now fixed as indicated by the change in `Atom`.

Closes #6248.

# Important Notes

Once most of the builtins had been translated to regular Enso code, it became apparent that the use of `.` at the end of messages is inconsistent and inflexible. A pure message should never end with a dot, or it becomes impossible to pretty-print it consistently for the purpose of error reporting; otherwise we regularly end up with errors ending in `..` or worse. So I went medieval on the reasons for failures and removed all the dots.

The overall result is mostly the same except now we are much more consistent.

Finally, there was one good reason for using builtins: it simplified our testing.

Take for example `No_Such_Method.Error`. If we do not import the `Errors.Common` module, we only rely on builtin error types. The type obviously has the constructor but it **does not have** `to_display_text` in scope; the latter is no longer a builtin method but a regular method. This is not really a problem for users, who will always import the stdlib, but our tests often don't. Hence the number of changes in the tests, and the occasional lack of human-readable errors there.

Closes #5254

In #6189 the SQLite version was bumped to a newer release which has built-in support for Full and Right joins, so the workaround is no longer needed.

As per design, IOContexts controlled via type signatures are going away. They are replaced by explicit `Context.if_enabled` runtime checks that will be added to particular method implementations.

`production`/`development` `IOPermissions` are replaced with the `live` and `design` execution environments. Currently, the `live` environment has a hardcoded list of allowed contexts, i.e. `Input` and `Output`.

# Important Notes

As per design PR-55. Closes #6129. Closes #6131.
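Here is a Python sketch of an explicit runtime check in the spirit of `Context.if_enabled`. The names `ALLOWED`, `if_enabled`, `ForbiddenOperation` and `write_file` are hypothetical. Only the `live` entry follows the description above. The empty `design` entry is an assumption made for illustration.

```python
from enum import Enum


class Context(Enum):
    INPUT = "Input"
    OUTPUT = "Output"


# `live` follows the hardcoded allow-list described above; the empty
# `design` entry is an assumption for this sketch only.
ALLOWED = {
    "live": {Context.INPUT, Context.OUTPUT},
    "design": set(),
}


class ForbiddenOperation(Exception):
    pass


def if_enabled(context, environment, action):
    """Run action only if context is enabled in the given execution environment."""
    if context not in ALLOWED[environment]:
        raise ForbiddenOperation(
            f"The {context.value} context is not enabled in the {environment} environment"
        )
    return action()


def write_file(path, contents, environment):
    # The method implementation guards its side effect with an explicit
    # runtime check instead of relying on a type signature.
    return if_enabled(
        Context.OUTPUT, environment, lambda: f"wrote {len(contents)} characters to {path}"
    )
```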

Closes #6080

Changelog

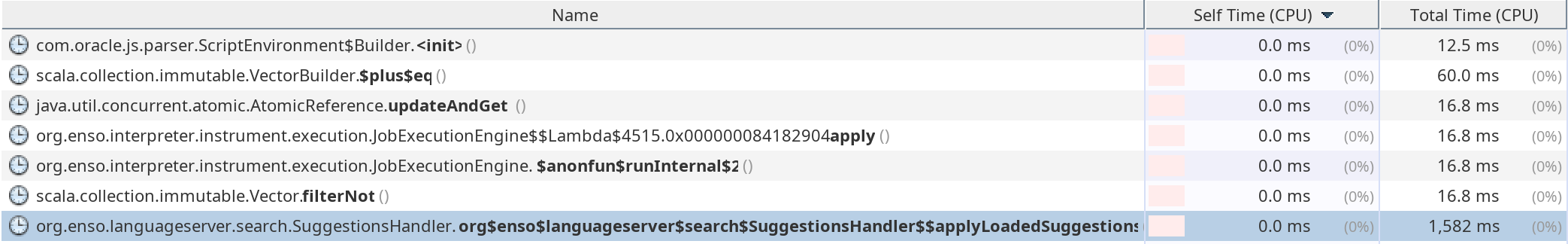

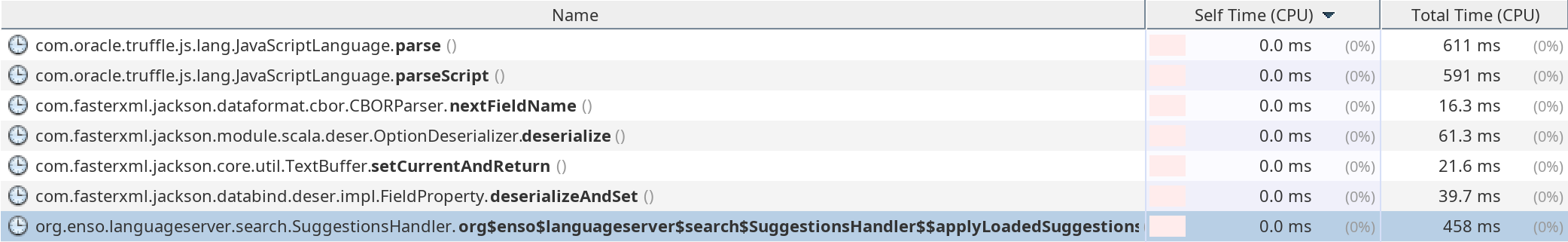

- add: implement `SuggestionsRepo.insertAll` as a batch SQL insert

- update: `search/getSuggestionsDatabase` returns empty suggestions. Currently, the method is only used at startup and returns the empty response anyway because the libs are not loaded at that point.

- update: serialize only global (defined in the module scope) suggestions during the distribution building. There's no sense in storing the local library suggestions.

- update: sqlite dependency

- remove: unused methods from `SuggestionsRepo`

- remove: Arguments table

# Important Notes

Speeds up library loading by ~1 second.
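A batch insert of this kind can be sketched with Python's built-in `sqlite3` module. `executemany` reuses one prepared statement for all rows, and the connection context manager commits once at the end. The table layout and function names are hypothetical, and the actual `SuggestionsRepo` is not written in Python.

```python
import sqlite3


def create_schema(conn):
    # Hypothetical, heavily simplified table; the real suggestions schema differs.
    conn.execute(
        "CREATE TABLE suggestions (id INTEGER PRIMARY KEY, module TEXT, name TEXT, kind TEXT)"
    )


def insert_all(conn, rows):
    """Insert all rows with one prepared statement inside a single transaction."""
    with conn:  # one COMMIT at the end, or ROLLBACK if any row fails
        conn.executemany(
            "INSERT INTO suggestions (module, name, kind) VALUES (?, ?, ?)", rows
        )
```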

Implements #6134.

# Important Notes

One can define lazy atom fields as:

```haskell
type Lazy
    Value ~x ~y
```

The evaluation of the `x` and `y` fields is then delayed until they are needed. The evaluation happens only once; the computed value is then kept in the atom for further use.
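The semantics of `~` fields can be mirrored in Python with a memoizing thunk: evaluation is deferred, happens at most once, and the result is then cached. `Thunk` and `Lazy` are illustrative names only.

```python
class Thunk:
    """Deferred computation that runs at most once and caches its result."""

    _UNSET = object()

    def __init__(self, compute):
        self._compute = compute
        self._value = Thunk._UNSET

    def force(self):
        if self._value is Thunk._UNSET:
            self._value = self._compute()
            self._compute = None  # drop the closure once evaluated
        return self._value


class Lazy:
    """Analogue of `type Lazy` with the constructor `Value ~x ~y`."""

    def __init__(self, x, y):
        # x and y are zero-argument callables; nothing is evaluated yet.
        self._x = Thunk(x)
        self._y = Thunk(y)

    @property
    def x(self):
        return self._x.force()

    @property
    def y(self):
        return self._y.force()
```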

Fixes #5898 by removing `Catch.panic`, speeding up the `sieve.enso` benchmark from 1058 ms to 514 ms. Should there be no dedicated conversion, the one defined on the `Any` type is used, e.g. defining a conversion `from(Any)` makes such a conversion always available.

- `Process.run` now returns a `Process_Result` allowing the easy capture of stdout and stderr.

- Joining a column with a column name does not warn if adding just the prefix.

- Stop the table viz from changing case and adding spaces to the headers.

This is the first part of the #5158 umbrella task. It closes #5158; follow-up tasks are listed in a comment on the issue.

- Updates all prototype methods dealing with `Value_Type` with a proper implementation.

- Adds a more precise mapping from in-memory storage to `Value_Type`.

- Adds a dialect-dependent mapping between `SQL_Type` and `Value_Type`.

- Removes obsolete methods and constants on `SQL_Type` that were not portable.

- Ensures that in the Database backend, operation result types are computed based on what the Database intends to return (by asking the Database about the expected type of each operation).

- But also ensures that the result types are sane.

- While SQLite does not officially support a BOOLEAN affinity, we add a set of type overrides to our operations to ensure that Boolean operations will return Boolean values and will not be changed to integers as SQLite would suggest.

- Some methods in SQLite fall back to a NUMERIC affinity unnecessarily, so operations like `max(text, text)` will keep the `text` type instead of falling back to numeric as SQLite would suggest.

- Adds ability to use custom fetch / builder logic for various types, so that we can support vendor specific types (for example, Postgres dates).

# Important Notes

- There are some TODOs left in the code. I'm still aligning follow-up tasks - once done I will try to add references to relevant tasks in them.
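Python's `sqlite3` module shows why the Boolean override is needed: SQLite reports comparisons as `integer`. This sketch uses `typeof` as a stand-in for asking the database about result types. The `BOOLEAN_OPERATIONS` set, the type names and the mapping are hypothetical and are not the actual dialect code.

```python
import sqlite3


def sqlite_storage_class(conn, expr):
    """Ask SQLite for the storage class of an expression (expr is trusted here)."""
    return conn.execute(f"SELECT typeof({expr})").fetchone()[0]


# Hypothetical override set: operations whose result should be reported as
# Boolean even though SQLite produces an integer 0/1.
BOOLEAN_OPERATIONS = {"==", "<", ">", "AND", "OR", "NOT"}

# Hypothetical mapping from SQLite storage classes to value type names.
STORAGE_TO_VALUE_TYPE = {"integer": "Integer", "real": "Float", "text": "Text", "blob": "Binary"}


def result_value_type(operation, storage_class):
    if operation in BOOLEAN_OPERATIONS:
        return "Boolean"  # override: don't let comparisons degrade to integers
    return STORAGE_TO_VALUE_TYPE.get(storage_class, "Mixed")
```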

Enables `distinct`, `aggregate` and `cross_tab` to use the Enso hashing and equality operations.

Also, I rewired the way the ObjectComparators are obtained in polyglot code to be more consistent.

Add comparators for `Day_Of_Week`, `Header`, `SQL_Type`, `Image` and `Matrix`.

Also removed the custom `==` from these types as needed. (Closes #5626)

- Fixes InvokeCallableNode to support warnings.

- Strips warnings from annotations in `get_widget_json`.

- Remove `get_full_annotations_json`.

- Fix warnings on Dialect.

Exporting types named the same as the module where they are defined in `Main` modules of library components may lead to accidental name conflicts. This became apparent when trying to access `Problem_Behavior` module via a fully qualified name and the compiler rejected it. This is due to the fact that `Main` module exported `Error` type defined in `Standard.Base.Error` module, thus making it impossible to access any other submodules of `Standard.Base.Error` via a fully qualified name.

This change adds a warning to FullyQualifiedNames pass that detects any such future problems.

While only `Error` module was affected, it was widely used in the stdlib, hence the number of changes.

Closes #5902.

# Important Notes

I left out the potential conflict in micro-distribution, thus ensuring we actually detect and report the warning.

Fixing #5768 and #5765 and co. Introducing `Meta.Type` and giving it the desired methods.

# Important Notes

`Type` is no longer a `Meta.Atom`, but it has a dedicated `Meta.Type` representation.

Fixes #5805 by returning `[]` as list of fields of `Type`.

# Important Notes

`Type` is recognized as `Meta.is_atom` since #3671. However `Type` isn't an `Atom` internally. We have to provide special handling for it where needed.

Closes #5151 and adds some additional tests for `cross_tab` that verify duplicated and invalid names.

I decided that for empty or `Nothing` names, instead of replacing them with `Column` and implicitly losing connection with the value that was in the column, we should just error on such values.

To make handling of these easier, `fill_empty` was added allowing to easily replace the empty values with something else.

Also, `{is,fill}_missing` was renamed to `{is,fill}_nothing` to align with `Filter_Condition.Is_Nothing`.

Merge _ordered_ and _unordered_ comparators into a single one.

# Important Notes

Comparator is now required to have only the `compare` and `hash` methods:

```
type Comparator
    compare : T -> T -> (Ordering|Nothing)
    hash : T -> Integer
```
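Here is a Python sketch of a comparator in this merged style. It assumes that -1, 0 and 1 stand in for the `Ordering` values and that `None` stands in for `Nothing`. `CaseInsensitiveComparator` is a hypothetical example, not a stdlib type.

```python
from typing import Optional


class CaseInsensitiveComparator:
    """Hypothetical comparator with just `compare` and `hash`.

    compare returns -1, 0 or 1 (standing in for the Ordering values),
    or None (standing in for Nothing) when the values cannot be ordered.
    """

    def compare(self, x, y) -> Optional[int]:
        if not (isinstance(x, str) and isinstance(y, str)):
            return None
        a, b = x.casefold(), y.casefold()
        return (a > b) - (a < b)

    def hash(self, x) -> int:
        # Must agree with compare: compare(x, y) == 0 implies hash(x) == hash(y).
        return hash(x.casefold())
```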

Follow-up to #5113: I added some more edge case tests, as discussed with @jdunkerley.

While debugging some quoting issues, I also realised that the current `Mismatched_Quote` error did not provide enough information. So I amended it to at least include some context indicating which cell was the 'offending' one.

Removing special handling of `AtomConstructor` in `Meta.is_a` check.

# Important Notes

A lot of tests are about to fail. Many of them indirectly call `Meta.is_a` with a constructor rather than type.

- Fix issue with Geo Map viz.

- Handle invalid format strings better in `Data_Formatter`.

- New constants for the ISO format strings (and a special ENSO_ZONED_DATE_TIME)

- Consistent Date Time format for parsing in all places.

- Avoid throwing exception in datetime parsing.

- Support for milliseconds (well nanoseconds) in Date_Time and Time_Of_Day.

- `Column.map` stays within Enso.

- Allow `Aggregate_Column.Group_By` in `cross_tab` group_by parameter.

Coerce values obtained from polyglot calls to fix #5177.

# Important Notes

Adds `IntHolder` class into the `test/Tests` project to simulate access to a class with integer field.

Closes #5113

Fixes a bug where read-only files would be overwritten if `File.write` was used in backup mode, and adds tests to prevent such a regression. To implement it, an `is_writable` property was introduced on `File`.

- Adjust Excel Workbook write behaviour.

- Support Nothing / Null constants.

- Deduce the type of arithmetic operations and `iif`.

- Allow Date_Time constants, treating as local timezone.

- Removed the `to_column_name` and `ensure_sane_name` code.

- Updates the `rename_columns` API.

- Add `first_row`, `second_row` and `last_row` to the Table types.

- New option for reading only last row of ResultSet.

Rename is_match + match to match + find (respectively), and remove all non-regexp functionality.

Regexp flags and Match_Mode are also no longer supported by these methods.

Remove regex support from .locate and .locate_all; regex functionality is moved to .match and .match_all where appropriate. This is in preparation for simplifying regex support across the board.

Also change Matching_Mode types to a single type with two variants.

Note: the matcher parameter to .locate and .locate_all has been replaced by a case_sensitivity parameter, of type Case_Sensitivity, which differs in that it also has a Default option. Default is treated as Sensitive.
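The `Case_Sensitivity` semantics described in the note can be sketched in Python. `CaseSensitivity` and `locate` are hypothetical stand-ins, and `Default` is treated as `Sensitive` as stated above.

```python
from enum import Enum


class CaseSensitivity(Enum):
    DEFAULT = "Default"
    SENSITIVE = "Sensitive"
    INSENSITIVE = "Insensitive"


def locate(text, term, case_sensitivity=CaseSensitivity.DEFAULT):
    """Return the (start, end) span of the first occurrence of term, or None."""
    if case_sensitivity is CaseSensitivity.INSENSITIVE:
        haystack, needle = text.casefold(), term.casefold()
    else:  # SENSITIVE, and DEFAULT which is treated as SENSITIVE
        haystack, needle = text, term
    start = haystack.find(needle)
    return None if start < 0 else (start, start + len(needle))
```

`casefold` can change the length of some strings (e.g. `ß` becomes `ss`). A real implementation must therefore map indices back to the original text, which this sketch does not do.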

- Updated `Widget.Vector_Editor` ready for use by IDE team.

- Added `get` to `Row` to make API more aligned.

- Added `first_column`, `second_column` and `last_column` to `Table` APIs.

- Adjusted `Column_Selector` and associated methods to have simpler API.

- Removed `Column` from `Aggregate_Column` constructors.

- Added new `Excel_Workbook` type and added to `Excel_Section`.

- Added new `SQLiteFormatSPI` and `SQLite_Format`.

- Added new `ImageFormatSPI` and `Image_Format`.

Closes #5109

# Important Notes

- Currently the tests pass for the in-memory parts of Common_Table_Operations, but some things are still not working on the DB backends; this is in progress.

Add `Comparator` type class emulation for all types. Migrate all the types in stdlib to this new `Comparator` API. The main documentation is in `Ordering.enso`.

Fixes these pivotals:

- https://www.pivotaltracker.com/story/show/183945328

- https://www.pivotaltracker.com/story/show/183958734

- https://www.pivotaltracker.com/story/show/184380208

# Important Notes

- The new Comparator API forces users to specify both `equals` and `hash` methods on their custom comparators.

- All the `compare_to` overrides were replaced by definition of a custom _ordered_ comparator.

- All the call sites of `x.compare_to y` method were replaced with `Ordering.compare x y`.

- `Ordering.compare` is essentially a shortcut for `Comparable.from x . compare x y`.

- The default comparator for `Any` is `Default_Unordered_Comparator`, which just forwards to the builtin `EqualsNode` and `HashCodeNode` nodes.

- For `x`, one can get its hash with `Comparable.from x . hash x`.

- This makes `hash` as _hidden_ as possible. There are no other public methods to get a hash code of an object.

- Comparing `x` and `y` can be done either by `Ordering.compare x y` or `Comparable.from x . compare x y` instead of `x.compare_to y`.

- Fixes the display of Date, Time_Of_Day and Date_Time so that it doesn't wrap.

- Adjust serialization of large integer values for JS and display within table.

- Workaround for issue with using `.lines` in the Table (new bug filed).

- Disabled warning on no specified `separator` on `Concatenate`.

Does not include fix for aggregation on integer values outside of `long` range.

- New `set` function design - takes a `Column` and works with that more easily and supports control of `Set_Mode`.

- New simple `parse` API on `Column`.

- Separated expression support for `filter` to new `filter_by_expression` on `Table`.

- New `compute` function allowing creation of a column from an expression.

- Added a case sensitivity argument to the `Column`-based `starts_with`, `ends_with` and `contains`.

- Added case sensitivity argument to `Filter_Condition` for `Starts_With`, `Ends_With`, `Contains` and `Not_Contains`.

- Fixed the issue in JS Table visualisation where JavaScript date was incorrectly set.

- Some dynamic dropdown expressions - experimenting with ways to use them.

- Fixed issue with `.pretty` that wasn't escaping `\`.

- Changed default Postgres DB to `postgres`.

- Fixed SQLite support for starts_with, ends_with and contains to be consistent (using GLOB not LIKE).

When a large long was passed to a host call expecting a double, it would crash with:

```
Cannot convert '<some long>'(language: Java, type: java.lang.Long) to Java type 'double': Invalid or lossy primitive coercion
```

That is unlikely to be expected by users. It also came up in the Statistics examples during Sum. One could work around it by forcing the conversion manually with `.to_decimal`, but that is not a permanent solution.

Instead this change adds a custom type mapping from Long to Double that will do it behind the scenes with no user interaction. The mapping kicks in only for really large longs.

# Important Notes

Note that the _safe_ range is hardcoded in Truffle and is not accessible from Enso packages. Therefore the max safe long value had to be copied over.
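The coercion rule can be sketched in Python. The sketch assumes the hardcoded safe bound is `2**53 - 1`; every integer within that range is exactly representable as a double. `long_to_double` and its `allow_lossy` flag are hypothetical: `False` models the old failure and `True` models the new custom mapping.

```python
# Assumption: the hardcoded safe bound is 2**53 - 1 (the same value as
# JavaScript's Number.MAX_SAFE_INTEGER).
MAX_SAFE_LONG = 2**53 - 1
LONG_MIN, LONG_MAX = -(2**63), 2**63 - 1


class LossyCoercionError(ValueError):
    pass


def long_to_double(value, allow_lossy):
    """Convert a 64-bit integer for a host call that expects a double.

    allow_lossy=False models the old behaviour (fail outside the safe range);
    allow_lossy=True models the custom mapping that converts large longs anyway.
    """
    if not LONG_MIN <= value <= LONG_MAX:
        raise OverflowError(f"{value} does not fit in a 64-bit long")
    if -MAX_SAFE_LONG <= value <= MAX_SAFE_LONG:
        return float(value)  # always exact in this range
    if not allow_lossy:
        raise LossyCoercionError(f"Invalid or lossy primitive coercion for {value}")
    return float(value)  # rounds to the nearest representable double
```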

https://github.com/enso-org/enso/pull/3764 introduced static wrappers for instance methods. However, they were only allowed for types with at least one constructor.

That also excluded builtin types, which by default don't have any constructors. This limitation is problematic for the Array/Vector consolidation and makes builtin types second-class citizens.

This change lifts the limitation for builtin types only. Note that we do want to share the implementation of the generated builtin methods. At the same time due to the additional argument we have to adjust the starting index of the arguments.

This change avoids messing with the existing dispatch logic, to avoid unnecessary complexity.

As a result it is now possible to call builtin types' instance methods, statically:

```
arr = Array.new_1 42
Array.length arr
```

That would previously lead to missing method exception in runtime.

# Important Notes

The only exception is `Nothing`, primarily because it would require `Nothing` to have a proper eigentype (`Nothing.type`), which would mess up a lot of existing logic for no obvious benefit (no more calling of `foo=Nothing` in parameters being one example).
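Here is a toy Python model of the idea, with hypothetical names. Each method has one shared implementation. It is invoked either with an implicit receiver (instance call) or with the receiver shifted into the first explicit argument (static wrapper).

```python
class BuiltinType:
    """Toy model: instance methods of a builtin type plus static wrappers."""

    def __init__(self, name, methods):
        self.name = name
        # Shared implementations, each taking the receiver as its first argument.
        self._methods = methods

    def call_instance(self, receiver, method, *args):
        # `arr.length`: the receiver is supplied implicitly.
        return self._methods[method](receiver, *args)

    def call_static(self, method, *args):
        # `Array.length arr`: the receiver arrives as the first explicit
        # argument, so the remaining argument indices shift by one before
        # reusing the same implementation as the instance call.
        if not args:
            raise TypeError(f"{self.name}.{method} expects the receiver as its first argument")
        receiver, *rest = args
        return self._methods[method](receiver, *rest)


Array = BuiltinType("Array", {"length": len, "at": lambda arr, i: arr[i]})
arr = [42]  # stands in for `Array.new_1 42`
```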

Enso unit tests were running without the `-ea` check enabled, so various invariant checks in Truffle code were not executed. Let's turn the `-ea` flag on and fix all the code that misbehaves.

- Updated `Text.starts_with`, `Text.ends_with` and `Text.contains` to new simpler API.

- Added a `Case_Sensitivity.Default` and adjusted `Table.distinct` to use it by default.

- Fixed a bug with `Data.fetch` on an HTTP error.

- Improved SQLite Case Sensitivity control in distinct to use collations.

Implements [#183453466](https://www.pivotaltracker.com/story/show/183453466).

https://user-images.githubusercontent.com/1428930/203870063-dd9c3941-ce79-4ce9-a772-a2014e900b20.mp4

# Important Notes

* The best laziness is achieved for the `Text` type, which makes use of its internal representation to send data.

* Any other type will first compute its default string representation and then send the content of that lazily to the IDE.

* Special handling of files and their content will be implemented in the future.

* The size of the displayed text can be updated dynamically based on best-effort information: if the backend does not yet know the full width/height of the text, it can update the IDE at any time, and this will be handled gracefully by updating the scrollbar position and sizes.

- add: `GeneralAnnotation` IR node for `@name expression` annotations

- update: compilation pipeline to process the annotation expressions

- update: rewrite `OverloadsResolution` compiler pass so that it keeps the order of module definitions

- add: `Meta.get_annotation` builtin function that returns the result of annotation expression

- misc: improvements (private methods, lazy arguments, build.sbt cleanup)

* Hash codes prototype

* Remove Any.hash_code

* Improve caching of hashcode in atoms

* [WIP] Add Hash_Map type

* Implement Any.hash_code builtin for primitives and vectors

* Add some values to ValuesGenerator

* Fix example docs on Time_Zone.new

* [WIP] QuickFix for HashCodeTest before PR #3956 is merged

* Fix hash code contract in HashCodeTest

* Add times and dates values to HashCodeTest

* Fix docs

* Remove hashCodeForMetaInterop specialization

* Introduce snapshotting of HashMapBuilder

* Add unit tests for EnsoHashMap

* Remove duplicate test in Map_Spec.enso

* Hash_Map.to_vector caches result

* Hash_Map_Spec is a copy of Map_Spec

* Implement some methods in Hash_Map

* Add equalsHashMaps specialization to EqualsAnyNode

* get and insert operations are able to work with polyglot values

* Implement rest of Hash_Map API

* Add test that inserts elements with keys with same hash code

* EnsoHashMap.toDisplayString use builder storage directly

* Add separate specialization for host objects in EqualsAnyNode

* Fix specialization for host objects in EqualsAnyNode

* Add polyglot hash map tests

* EconomicMap keeps reference to EqualsNode and HashCodeNode.

Rather than passing these nodes to `get` and `insert` methods.

* HashMapTest run in polyglot context

* Fix containsKey index handling in snapshots

* Remove snapshots field from EnsoHashMapBuilder

* Prepare polyglot hash map handling.

- Hash_Map builtin methods are separate nodes

* Some bug fixes

* Remove ForeignMapWrapper.

We would have to wrap foreign maps in assignments for this to be efficient.

* Improve performance of Hash_Map.get_builtin

Also, if_nothing parameter is suspended

* Remove to_flat_vector.

Interop API requires nested vector (our previous to_vector implementation). Seems that I have misunderstood the docs the first time I read it.

- to_vector does not sort the vector by keys by default

* Fix polyglot hash maps method dispatch

* Add tests that effectively test hash code implementation.

Via hash map that behaves like a hash set.

* Remove Hashcode_Spec

* Add some polyglot tests

* Add Text.== tests for NFD normalization

* Fix NFD normalization bug in Text.java

* Improve performance of EqualsAnyNode.equalsTexts specialization

* Properly compute hash code for Atom and cache it

* Fix Text specialization in HashCodeAnyNode

* Add Hash_Map_Spec as part of all tests

* Remove HashMapTest.java

Providing all the infrastructure for all the needed Truffle nodes is no longer manageable.

* Remove rest of identityHashCode message implementations

* Replace old Map with Hash_Map

* Add some docs

* Add TruffleBoundaries

* Formatting

* Fix some tests to accept unsorted vector from Map.to_vector

* Delete Map.first and Map.last methods

* Add specialization for big integer hash

* Introduce proper HashCodeTest and EqualsTest.

- Use jUnit theories.

- Call nodes directly

* Fix some specializations for primitives in HashCodeAnyNode

* Fix host object specialization

* Remove Any.hash_code

* Fix import in Map.enso

* Update changelog

* Reformat

* Add truffle boundary to BigInteger.hashCode

* Fix performance of HashCodeTest - initialize DataPoints just once

* Fix MetaIsATest

* Fix ValuesGenerator.textual - Java's char is not Text

* Fix indent in Map_Spec.enso

* Add maps to datapoints in HashCodeTest

* Add specialization for maps in HashCodeAnyNode

* Add multiLevelAtoms to ValuesGenerator

* Provide a workaround for non-linear key inserts

* Fix specializations for double and BigInteger

* Cosmetics

* Add truffle boundaries

* Add allowInlining=true to some truffle boundaries.

Increases performance a lot.

* Increase the size of vectors, and warmup time for Vector.Distinct benchmark

* Various small performance fixes.

* Fix Geo_Spec tests to accept unsorted Map.to_vector

* Implement Map.remove

* Fix Visualization tests to accept unsorted Map.to_vector

* Treat java.util.Properties as Map

* Add truffle boundaries

* Invoke polyglot methods on java.util.Properties

* Ignore python tests if python lang is missing
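Python's `unicodedata` can illustrate two ideas from the commits above: the hash-code contract exercised by `HashCodeTest` (equal values must have equal hashes) and NFD-based `Text` equality. The function names are hypothetical, and this is not the Truffle implementation.

```python
import unicodedata
from itertools import product


def text_equals(a, b):
    """Equality up to canonical equivalence: compare the NFD-normalized forms."""
    return unicodedata.normalize("NFD", a) == unicodedata.normalize("NFD", b)


def text_hash(a):
    """Hash of the NFD form, so canonically equivalent texts hash alike."""
    return hash(unicodedata.normalize("NFD", a))


def respects_hash_contract(values, equals, hash_code):
    """True if every pair of values that compares equal also has equal hash codes."""
    return all(
        hash_code(a) == hash_code(b)
        for a, b in product(values, repeat=2)
        if equals(a, b)
    )
```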

Added a separate pass, `FullyQualifiedNames`, that partially resolves fully qualified names. The pass only resolves the library part of the name and replaces it with a reference to the `Main` module.

There are two potential scenarios:

1) the code uses a fully qualified name of a component that has been parsed/compiled

2) the code uses a fully qualified name of a component that has **not** been imported

For the former case, it is sufficient to just check `PackageRepository` for the presence of the library name.

In the latter case, we have to ensure that the library has already been parsed and all its imports are resolved. That would require a reference to `Compiler` in the `FullyQualifiedNames` pass, which could then trigger a full compilation of a missing library. Since that has some undesired consequences (tracking of dependencies becomes rather complex), we decided to exclude that scenario until it is really needed.

# Important Notes

With this change, one can use a fully qualified name directly.

e.g.

```
import Standard.Base

main =
    Standard.Base.IO.println "Hello world!"
```

- Add `get` to Table.

- Correct `Count Nothing` examples.

- Add `join` to File.

- Add `File_Format.all` listing all installed formats.

- Add some more ALIAS entries.

Fixes https://www.pivotaltracker.com/story/show/184073099

# Important Notes

- Since now the only operator on columns for division, `/`, returns floats, it may be worth creating an additional `div` operator exposing integer division. But that will be done as a separate task aligning column operator APIs.

**Vector**

- Adjusted `Vector.sort` to be `Vector.sort order on by`.

- Adjusted other sort to use `order` for direction argument.

- Added `insert`, `remove`, `index_of` and `last_index_of` to `Vector`.

- Added `start` and `if_missing` arguments to `find` on `Vector`, and changed the default to a `Not_Found` error.

- Added type checking to `+` on `Vector`.

- Altered `first`, `second` and `last` to error with `Index_Out_Of_Bounds` on `Vector`.

- Removed `sum`, `exists`, `head`, `init`, `tail`, `rest`, `append`, `prepend` from `Vector`.

**Pair**

- Added `last`, `any`, `all`, `contains`, `find`, `index_of`, `last_index_of`, `reverse`, `each`, `fold` and `reduce` to `Pair`.

- Added `get` to `Pair`.

**Range**

- Added `first`, `second`, `index_of`, `last_index_of`, `reverse` and `reduce` to `Range`.

- Added `at` and `get` to `Range`.

- Added `start` and `if_missing` arguments to `find` on `Range`.

- Simplified `last` and `length` of `Range`.

- Removed `exists` from `Range`.

**List**

- Added `second`, `find`, `index_of`, `last_index_of`, `reverse` and `reduce` to `List`.

- Added `at` and `get` to `List`.

- Removed `exists` from `List`.

- Made `all` short-circuit if any fail on `List`.

- Altered `is_empty` to not compute the length of `List`.

- Altered `first`, `tail`, `head`, `init` and `last` to error with `Index_Out_Of_Bounds` on `List`.

**Others**

- Added `first`, `second`, `last`, `get` to `Text`.

- Added wrapper methods to the Random_Number_Generator so you can get random values more easily.

- Adjusted `Aggregate_Column` to operate on the first column by default.

- Added `contains_key` to `Map`.

- Added ALIAS to `row_count` and `order_by`.

Most of the problems with accessing `ArrayOverBuffer` have been resolved by using `CoerceArrayNode` (https://github.com/enso-org/enso/pull/3817). However, `Array.sort` still specialized on `Array`, which was not compatible with `ArrayOverBuffer`. Similarly, sorting JS or Python arrays would not work.

Added a specialization to `Array.sort` to deal with that case. A generic specialization (with `hasArrayElements`) handles not only `ArrayOverBuffer` but also polyglot arrays coming from JS or Python. We could add a further specialization for `ArrayOverBuffer` only (removed in the last commit) that returns an `ArrayOverBuffer` rather than an `Array`, but so far that extra complexity is unnecessary.

Also fixed an example in `Array.enso` by providing a default argument.

The compiler performed name resolution of literals in type signatures but silently failed to report any problems.

This meant that wrong names or forgotten imports could sneak into the stdlib.

This PR makes two main changes:

1) failed name resolutions are appended in the `TypeNames` pass;

2) the `GatherDiagnostics` pass also collects and reports failures from the type signatures' IR.

Updated the stdlib so that it passes with the correct gatekeepers in place.

Implements https://www.pivotaltracker.com/story/show/184032869

# Important Notes

- Full joins on Postgres currently fail, which reveals a more serious problem: amending equality so that `[NULL = NULL] == True` breaks hash- and merge-based indexing, so such joins will be extremely inefficient. All our joins currently rely on this notion of equality, which means all of our DB joins will be extremely inefficient.

- We need a solution that supports nulls and still works well with indices. However, after exploring a few approaches (`COALESCE(a = b, a IS NULL AND b is NULL)`, `a IS NOT DISTINCT FROM b`, `(a = b) OR (a IS NULL AND b is NULL)`), none of which worked (they all result in `ERROR: FULL JOIN is only supported with merge-joinable or hash-joinable join conditions`), I'm less certain that it is possible. Alternatively, we may need to change the NULL semantics to align with SQL. This seems likely to be the simpler solution, allowing us to generate simple, reliable SQL, whereas the NULL=NULL approach will corner us into nasty workarounds that depend heavily on the particular backend.
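The difference between SQL NULL semantics and the `NULL = NULL` notion of equality can be seen in a few lines. The sketch below uses Python's standard `sqlite3` module with SQLite rather than Postgres, and the tables `l` and `r` are hypothetical. It does not reproduce the Postgres FULL JOIN planner error; it only shows which rows each kind of join condition matches.

```python
import sqlite3

# Hypothetical in-memory tables; SQLite stands in for Postgres purely for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE l (k INTEGER, lv TEXT);
    CREATE TABLE r (k INTEGER, rv TEXT);
    INSERT INTO l VALUES (1, 'a'), (NULL, 'b');
    INSERT INTO r VALUES (1, 'x'), (NULL, 'y');
""")


def join_rows(condition):
    """Inner-join l and r on the given SQL condition, returning (lv, rv) pairs ordered by lv."""
    query = f"SELECT l.lv, r.rv FROM l JOIN r ON {condition} ORDER BY l.lv"
    return conn.execute(query).fetchall()


# SQL semantics: NULL = NULL evaluates to NULL (not true), so NULL keys never match.
sql_semantics = join_rows("l.k = r.k")
# Null-safe equality: SQLite's IS operator treats two NULLs as equal.
null_safe = join_rows("l.k IS r.k")

print(conn.execute("SELECT NULL = NULL, NULL IS NULL").fetchone())  # (None, 1)
print(sql_semantics)  # [('a', 'x')]
print(null_safe)      # [('a', 'x'), ('b', 'y')]
```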

`Any.==` is now a builtin method. The semantics are the same as before, except that we no longer assume `x == y` iff `Meta.is_same_object x y`; that assumption used to hold and caused failures in table tests.

# Important Notes

Measurements from `EqualsBenchmarks` show that the performance of `Any.==` for recursive atoms increased by roughly 20%, and the performance for primitive types stays roughly the same.

First part of fixing `Text.to_text`.

- add: `pretty` method for pretty printing.

- update: make `Text.to_text` an identity conversion for `Text`.

In subsequent iterations, `to_text` will gradually be replaced with the `to Text` conversion once the related issues with conversions are fixed.

Implements `getMetaObject` and related messages from Truffle interop for Enso values and types. Turns `Meta.is_a` into a builtin that reuses the same functionality.

# Important Notes

Adds `ValueGenerator` testing infrastructure to provide unified access to special Enso values and builtin types that can be reused by other tests, not just `MetaIsATest` and `MetaObjectTest`.

Uses JavaScript to parse and serialise JSON. Parsing produces native Enso objects.

- `.to_json` now returns a `Text` of the JSON.

- `Json` methods are now `parse`, `stringify` and `from_pairs`.

- New `JS_Object` type representing a JavaScript Object.

- `.to_js_object` allows types to customise their serialisation, returning a `JS_Object`.

- The default JSON format for an Atom now has `type` and `constructor` properties (or a method to call, as needed, to deserialise).

- Removed `.into` support for now.

- Added JSON File Format and SPI to allow `Data.read` to work.

- Added `Data.fetch` API for easy Web download.

- The default visualization for `JS_Object` now truncates, and the default `Vector` visualization truncates children too.

Fixes a defect where types with no constructor crashed on `to_json` (e.g. `Matching_Mode.Last.to_json`).

Adjusted default visualisation for Vector, so it doesn't serialise an array of arrays forever.

Likewise, JS_Object default visualisation is truncated to a small subset.

New convention:

- `.get` returns `Nothing` if a key or index is not present. It takes an `other` argument to control the default.

- `.at` errors if a key or index is not present.

- `Nothing` gains a `get` method allowing for easy propagation.
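A minimal Python sketch of the `.get` / `.at` convention, assuming a hypothetical list-backed `Container` class, with Python's `None` standing in for `Nothing` and `IndexError` standing in for the Enso error. It does not model `Nothing.get`.

```python
class Container:
    """Hypothetical list-backed container illustrating the `.get` / `.at` convention."""

    def __init__(self, items):
        self._items = list(items)

    def at(self, index):
        # `.at` fails loudly when the index is not present.
        if not 0 <= index < len(self._items):
            raise IndexError(f"index {index} out of bounds for length {len(self._items)}")
        return self._items[index]

    def get(self, index, other=None):
        # `.get` returns `other` (None stands in for `Nothing`) when the index is missing.
        try:
            return self.at(index)
        except IndexError:
            return other


c = Container([10, 20, 30])
print(c.at(1))       # 20
print(c.get(5))      # None
print(c.get(5, -1))  # -1
```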

This removes the special handling of polyglot exceptions and allows matching on Java exceptions in the same way as for any other types.

`Polyglot_Error`, `Panic.catch_java` and `Panic.catch_primitive` are gone.

The change mostly deals with the fallout of removing `Polyglot_Error` and the two `Panic` methods.

`Panic.catch` is now implemented as a builtin instead of delegating to the `Panic.catch_primitive` builtin, which is now gone.

This fixes https://www.pivotaltracker.com/story/show/182844611

When integrated with CI, this will guard against any compilation failures. It can be enabled via the environment variable `ENSO_BENCHMARK_TEST_DRY_RUN="True"`:

```
ENSO_BENCHMARK_TEST_DRY_RUN="True" built-distribution/enso-engine-0.0.0-dev-linux-amd64/enso-0.0.0-dev/bin/enso --run test/Benchmarks
```

# Important Notes

/cc @mwu-tow this could be run only on Linux PRs in CI.

- Implemented https://www.pivotaltracker.com/story/show/183913276

- Refactored MultiValueIndex and MultiValueKeys to be more type-safe and more direct about using ordered or unordered maps.

- Added performance tests ensuring we use an efficient algorithm for the joins (the tests will fail for a full O(N*M) scan).

- Removed some duplicate code in the Table library.

- Added optional coloring of test results in terminal to make failures easier to spot.
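The performance tests check that a join does not compare every pair of rows. One standard way to achieve that for equality conditions is a hash join, sketched below in Python. It illustrates the general technique, not the `MultiValueIndex` code; the row-as-dict representation and the `hash_join` name are assumptions.

```python
from collections import defaultdict


def hash_join(left, right, left_key, right_key):
    """Hypothetical equi-join of two lists of dict rows, returning (left_index, right_index) pairs.

    Builds an index from key value to right-hand row positions once, then probes it for
    each left-hand row: expected O(N + M + output) instead of comparing every pair (O(N*M)).
    """
    index = defaultdict(list)  # key value -> positions of right rows with that key
    for j, row in enumerate(right):
        index[row[right_key]].append(j)
    result = []
    for i, row in enumerate(left):
        # .get does not insert missing keys into the defaultdict
        for j in index.get(row[left_key], []):
            result.append((i, j))
    return result


left = [{"id": 1}, {"id": 2}, {"id": 2}]
right = [{"ref": 2}, {"ref": 3}, {"ref": 2}]
pairs = hash_join(left, right, "id", "ref")
print(pairs)  # [(1, 0), (1, 2), (2, 0), (2, 2)]
```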

- Aligned `compare_to` on `Text`, `Ordering` and `Duration` so that it returns a `Type_Error` if `that` is of the wrong type.

- Add `empty_object`, `empty_array`, `get_or_else`, `at`, `field_names` and `length` to `Json`.

- Fix `Json` serialisation of NaN and Infinity (to "null").

- Added `length`, `at` and `to_vector` to Pair (allowing it to be treated as a Vector).

- Added `running_fold` to the `Vector` and `Range`.

- Added `first` and `last` to the `Vector.Builder`.

- Allow `order_by` to take a single `Sort_Column` or have a mix of `Text` and `Sort_Column.Name` in a `Vector`.

- Allow `select_columns_helper` to take a `Text` value. This allows a single field in `group_by` for `cross_tab`.

- Added `Patch` and `Custom` to HTTP_Method.

- Added running `Statistic` calculation and moved more of the logic from Java to Enso. Performance now appears similar to the pure Java version.
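A running statistic can be computed in a single pass with an online accumulator. This Python sketch uses Welford's algorithm for the mean and sample variance; whether the Enso implementation uses the same update formulas is an assumption, and the `RunningStats` name is hypothetical.

```python
import math


class RunningStats:
    """Hypothetical single-pass accumulator for count, sum, min, max, mean and sample variance.

    Mean and variance use Welford's online update, which avoids the numerical
    cancellation of the naive sum-of-squares formula.
    """

    def __init__(self):
        self.count = 0
        self.total = 0.0
        self.minimum = math.inf
        self.maximum = -math.inf
        self._mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the current mean

    def add(self, x):
        self.count += 1
        self.total += x
        self.minimum = min(self.minimum, x)
        self.maximum = max(self.maximum, x)
        delta = x - self._mean
        self._mean += delta / self.count
        self._m2 += delta * (x - self._mean)

    @property
    def mean(self):
        return self._mean if self.count > 0 else math.nan

    @property
    def variance(self):
        # Sample variance (n - 1 denominator); undefined for fewer than two values.
        return self._m2 / (self.count - 1) if self.count > 1 else math.nan


stats = RunningStats()
for value in [2, 4, 4, 4, 5, 5, 7, 9]:
    stats.add(value)
print(stats.count, stats.total, stats.mean, stats.variance)
```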

Add a `Test.with_clue` function similar to ScalaTest's [`withClue`](https://www.scalatest.org/user_guide/using_assertions).

This is useful for tests where the assertion depends on context that is not clear from its operands.

### Important Notes

Using `with_clue` changes the `State Clue`, which is extracted by the `fail` function.

The State is introduced by `Test.specify` when the test is not pending.

There should be no changes to the public API apart from the addition of `Test.with_clue`.

* Sequence literal (Vector) should preserve warnings

When a Vector was created via a sequence literal, we simply dropped any warnings associated with it.

This change propagates warnings during the creation of the Vector.

Ideally, it would be sufficient to propagate warnings from the individual elements to the underlying storage, but that doesn't go well with `Vector.fromArray`.

* update changelog

* Array-like structures preserve warnings

Added a `WarningsLibrary` that exposes `hasWarnings` and `getWarnings` messages. That way we can have a single storage that defines how to extract warnings from an Array, and the others just delegate to it.

This simplifies the logic added to sequence literals to handle warnings.

* Ensure polyglot method calls are warning-free

Since warnings are no longer automatically extracted from Array-like structures, we delay the operation until an actual polyglot method call is performed.

Discovered a bug in `Warning.detach_selected_warnings`, which was missing any usage or tests.

* nits

* Support multi-dimensional Vectors with warnings

* Propagate warnings from case branches

* nit

* Propagate all vector warnings when reading element

Previously, accessing an element of an Array-like structure would only return warnings of that element or of the structure itself.

Now, accessing an element also returns the warnings of all the structure's elements.
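The propagation rules above can be modelled in a few lines of Python. This is a toy model under stated assumptions: `Warned`, `sequence_literal` and `at` are hypothetical names, and the engine actually implements this through the `WarningsLibrary` messages in the interpreter, not through wrapper objects like these.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Warned:
    """Hypothetical value annotated with warnings (a stand-in for Enso's warning-carrying values)."""
    value: object
    warnings: tuple = field(default=())


def unwrap(x):
    """Split a possibly-warned value into (plain value, warnings)."""
    if isinstance(x, Warned):
        return x.value, x.warnings
    return x, ()


def sequence_literal(*elements):
    """Build a vector, lifting the warnings of all elements onto the vector itself."""
    values, warnings = [], []
    for e in elements:
        v, ws = unwrap(e)
        values.append(v)
        for w in ws:
            if w not in warnings:
                warnings.append(w)
    return Warned(values, tuple(warnings))


def at(vector, index):
    """Read an element; the result carries all of the vector's warnings."""
    values, vector_warnings = unwrap(vector)
    return Warned(values[index], vector_warnings)


v = sequence_literal(1, Warned(2, ("w1",)), Warned(3, ("w2",)))
print(v.warnings)  # ('w1', 'w2')
print(at(v, 0))    # Warned(value=1, warnings=('w1', 'w2'))
```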

- Moved `to_default_visualization_data` to `Standard.Visualization`.

- Remove the use of `is_a` in favour of case statements.

- Stop exporting Standard.Base.Error.Common.

- Separate errors into their own files.

- Change constructors to be called `Error`.

- Rename `Caught_Panic.Caught_Panic_Data` -> `Caught_Panic.Panic`.

- Rename `Project_Description.Project_Description_Data` -> `Project_Description.Value`.

- Rename `Regex_Matcher.Regex_Matcher_Data` -> `Regex_Matcher.Value` (can't come up with anything better!).

- Rename `Range.Value` -> `Range.Between`.

- Rename `Interval.Value` -> `Interval.Between`.

- Rename `Column.Column_Data` -> `Column.Value`.

- Rename `Table.Table_Data` -> `Table.Value`.

- Align all the Error types in Table.

- Removed GEO Json bits from Table.

- `Json.to_table` doesn't have the GEO bits anymore.

- Added `Json.geo_json_to_table` to add the functions back in.

# Important Notes

No more exports from anywhere but Main!

No more `_Data` constructors!

- Moved `Any`, `Error` and `Panic` to `Standard.Base`.

- Separated `Json` and `Range` extensions into their own modules.

- Tidied `Case`, `Case_Sensitivity`, `Encoding`, `Matching`, `Regex_Matcher`, `Span`, `Text_Matcher`, `Text_Ordering` and `Text_Sub_Range` in `Standard.Base.Data.Text`.

- Tidied `Standard.Base.Data.Text.Extensions` and stopped it re-exporting anything.

- Tidied `Regex_Mode`. Renamed `Option` to `Regex_Option` and added type to export.

- Tidied up `Regex` space.

- Tidied up `Meta` space.

- Remove `Matching` from export.

- Moved `Standard.Base.Data.Boolean` to `Standard.Base.Boolean`.

# Important Notes

- Moved `to_json` and `to_default_visualization_data` from base types to extension methods.

Converts `Integer.parse` into a builtin and makes sure it can parse big values like `100!`. Adds a `locale` parameter to `Locale.parse` and makes sure it parses `32,5` as the double `32.5` in the Czech locale.

# Important Notes

Note that one cannot write

```
import Standard.Table as Table_Module
```

because of the two-component name restriction (such a name gets desugared to `Standard.Table.Main`), so we have to write

```
import Standard.Table.Main as Table_Module
```

in a few places. Once we move the `Json.to_table` extension, this can be improved.

Implements https://www.pivotaltracker.com/story/show/183915527

Adds a common entry point to the `Benchmarks` test suite, so that all benchmarks can be run at once.

Usually we are running only one set of benchmarks that we want to measure, but for the purpose of checking if they are still compiling after a language change, it can be useful to run all of them (currently this takes a pretty long time though, but may work when going for lunch break :)).

Related to https://www.pivotaltracker.com/story/show/183369386/comments/234258671

- Adds transpose and cross_tab to the In-Memory table.

- Cross Tab is built on top of aggregate and hence allows for expressions and has the same error trapping as aggregate.

# Important Notes

Only basic tests have been implemented. Error and warning tests will be added as a follow up task.

Implements https://www.pivotaltracker.com/story/show/183854123

It features a naive full scan join and only allows equality conditions. More advanced conditions and better optimized algorithms will be implemented in a subsequent PR.

Manual implementation of a vector builder that avoids any copying (if the initial `capacity` is exact). Moreover, the builder optimizes for storage of `double` and `long` values: if the array consists homogeneously of these values, then no boxing happens and only primitive types are stored.
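The storage strategy can be sketched in Python with the standard `array` module, which keeps 64-bit integers or doubles in a packed primitive buffer. This is an illustration only: capacity handling is omitted, the `VectorBuilder` class here is hypothetical, and whether the real builder widens a long/double mix or boxes it is not stated above, so this sketch simply falls back to object storage.

```python
from array import array


def _typecode_for(item):
    """Return the array typecode that stores `item` unboxed, or None if there is none."""
    if type(item) is int and -2**63 <= item < 2**63:
        return "q"  # signed 64-bit integer, analogous to Java's long
    if type(item) is float:
        return "d"  # IEEE 754 double
    return None


class VectorBuilder:
    """Hypothetical builder keeping homogeneous long/double data in a primitive typed array.

    The first element picks the storage; an element that does not fit it switches
    the builder to a generic list of boxed objects (a one-off copy).
    """

    def __init__(self):
        self._storage = None  # undecided until the first append

    def append(self, item):
        code = _typecode_for(item)
        if self._storage is None:
            self._storage = array(code) if code else []
        elif isinstance(self._storage, array) and code != self._storage.typecode:
            self._storage = list(self._storage)
        self._storage.append(item)

    def storage_kind(self):
        if self._storage is None:
            return "empty"
        if isinstance(self._storage, array):
            return {"q": "long", "d": "double"}[self._storage.typecode]
        return "object"

    def to_list(self):
        return list(self._storage) if self._storage is not None else []


b = VectorBuilder()
for x in [1, 2, 3]:
    b.append(x)
print(b.storage_kind())  # long
b.append("four")
print(b.storage_kind())  # object
```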

# Important Notes

Added a few tests to [Vector_Spec.enso](76d2f38247).

- Export all for `Problem_Behavior` (allowing for Report_Warning, Report_Error and Ignore to be trivially used).

- Renamed `Range.Range_Data` to `Range.Value` and moved to using `up_to` wherever possible.

- Reviewed `Function`, `IO`, `Polyglot`, `Random`, `Runtime`, `System`.

- `File` is now published as a type. Some static methods moved to `Data`, others into the type. Removed the static `read_bytes`.

- New `Data` module for reading input data in one place (e.g. `Data.read_file`); `Data.connect` will be added later.

- Added `Random` module to the exports.

- Move static methods into the `Warning` type, exporting the type rather than the module.

# Important Notes

- Sorted a few imports into order (ordering by direct import in project, then by from import in project then polyglot and finally self imports).

Upgrading to GraalVM 22.3.0.

# Important Notes

- Removed all deprecated `FrameSlot`, and replaced them with frame indexes - integers.

- Add more information to `AliasAnalysis` so that it also gathers these indexes.

- Add quick build mode option to `native-image` as default for non-release builds

- `graaljs` and `native-image` should now be downloaded via `gu` automatically, as dependencies.

- Remove the `engine-runner-native` project; the native image is now built straight from `engine-runner`.

- We used to have `engine-runner-native` without `sqldf` in classpath as a workaround for an internal native image bug.

- Fixed Chrome inspector integration, such that it shows values of local variables both for the current stack frame and for caller stack frames.

- There are still many issues with the debugging in general, for example, when there is a polyglot value among local variables, a `NullPointerException` is thrown and no values are displayed.

- Removed some deprecated `native-image` options

- Remove some deprecated Truffle API method calls.

Here we go again...

- Tidied up `Pair` and stopped exporting `Pair_Data`. Adjusted so that the type is exported.

- Tidy imports for `Json`, `Json.Internal`, `Locale`.

- Tidy imports Ordering.*. Export `Sort_Direction` and `Case_Sensitivity` as types.

- Move methods of `Statistics` into `Statistic`. Publishing the types not the module.

- Added a `compute` to a `Rank_Method`.

- Tidied the `Regression` module.

- Move methods of `Date`, `Date_Time`, `Duration`, `Time_Of_Day` and `Time_Zone` into type. Publishing types not modules.

- Added exporting `Period`, `Date_Period` and `Time_Period` as types. Static methods moved into types.

# Important Notes

- Move `compare_to_ignore_case`, `equals_ignore_case` and `to_case_insensitive_key` from Extensions into `Text`.

- Hiding polyglot java imports from export all in `Main.enso`.

- Moved static methods into `Locale` type. Publishing type not module.

- Stop publishing `Nil` and `Cons` from `List`.

- Tidied up `Json` and merged statics into the type. Sorted out various type signatures which used a `Constructor`. Now exporting the type and extensions.

- Tidied up `Noise` and merge `Generator` into file. Export type not module.

- Moved static method of `Map` into type. Publishing type not module.

# Important Notes

- Move `Text.compare_to` into `Text`.

- Move `Text.to_json` into `Json`.

1-to-1 translation of the HTTPBin expected by our testsuite using Java's HttpServer.

Can be started from SBT via

```
sbt:enso> simple-httpbin/run <hostname> <port>
```

# Important Notes

@mwu-tow this will mean we can ditch Go dependency completely and replace it with the above call.

* Tidy Bound and Interval.

* Fix Interval tests.

* Restructure Index_Sub_Range to new Type/Statics.

* Adjust for Vector exported as a type and static methods on it.

* Tidy Maybe.

* Fix issue with Line_Ending_Style.

* Revert Filter_Condition change.

Fix benchmark test issue.

Tidy imports on Index_Sub_Range.

* Can't export constructors unless exported from type in module.

* Fix failing tests.

- Allow `Map` to store a `Nothing` key (fixes `Vector.distinct` with a `Nothing`).

- Add `column_names` method to `Table` as a shorthand.

- Return data flow error when comparing with Nothing (not a Panic or a Polyglot exception).

- Allow milliseconds and microseconds for `Date_Time` and `Time_Of_Day`.

# Important Notes

- Added a load of tests for the various comparison operators to Numbers_Spec.

It appears that we were always adding builtin methods to the scope of the module and the builtin type that shared the same name.

This resulted in some methods being accidentally available even though they shouldn't.

This change treats builtins of types and builtins of modules differently and introduces an auto-registration feature for builtins.

By default all builtin methods are registered with a type, unless explicitly defined in the annotation property.

Builtin methods that are auto-registered do not have to be explicitly defined and are registered with the underlying type.

Registration correctly infers the right type, depending on whether we deal with static or instance methods.

Builtin methods that are not auto-registered have to be explicitly defined **always**. Modules' builtin methods are the prime example.

# Important Notes

Builtins now carry information whether they are static or not (inferred from the lack of `self` parameter).

They also carry an `autoRegister` property to determine whether a builtin method should be automatically registered with the type.

- Added expression ANTLR4 grammar and sbt based build.

- Added expression support to `set` and `filter` on the Database and InMemory `Table`.

- Added expression support to `aggregate` on the Database and InMemory `Table`.

- Removed old aggregate functions (`sum`, `max`, `min` and `mean`) from `Column` types.

- Adjusted the database `Column` `+` operator to do concatenation (`||`) for text types.

- Added power operator `^` to both `Column` types.

- Adjust `iif` to allow for columns to be passed for `when_true` and `when_false` parameters.

- Added `is_present` to database `Column` type.

- Added `coalesce`, `min` and `max` functions to both `Column` types, performing row-based operations.

- Added support for `Date`, `Time_Of_Day` and `Date_Time` constants in database.

- Added `read` method to InMemory `Column` returning `self` (or a slice).

# Important Notes

- Moved approximate type computation to `SQL_Type`.

- Fixed issue in `LongNumericOp` where it was always casting to a double.

- Removed `head` from InMemory Table (still has `first` method).

Fix bugs in `TreeToIr` (rewrite) and parser. Implement more undocumented features in parser. Emulate some old parser bugs and quirks for compatibility.

Changes in libs:

- Fix some bugs.

- Clean up some odd syntaxes that the old parser translates idiosyncratically.

- Constructors are now required to precede methods.

# Important Notes

Out of 221 files:

- 215 match the old parser

- 6 contain complex types the old parser is known not to handle correctly

So, compared to the old parser, the new parser parses 103% of files correctly.

This PR adds a `Period` type, which is a date-only complement to the `Duration` builtin type.

# Important Notes

- `Period` replaces `Date_Period`, and `Time_Period`.

- Added shorthand constructors for `Duration` and `Period`. For example: `Period.days 10` instead of `Period.new days=10`.

- `Period` can be compared to other `Period` in some cases, other cases throw an error.

Define start of Enso epoch as 15th of October 1582 - start of the Gregorian calendar.

# Important Notes

- Some (Gregorian) calendar-related functionality within `Date` and `Date_Time`, e.g., `week_of_year`, `is_leap_year`, etc., now produces a warning if the receiving `Date`/`Date_Time` is before the epoch start.
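A minimal Python sketch of this behaviour for `is_leap_year`: the result is still computed with the Gregorian rule, and a warning is returned alongside it when the date precedes 15 October 1582. The function shape, the `(result, warnings)` return convention and the warning text are assumptions for illustration. Python's `datetime.date` uses the proleptic Gregorian calendar, so it accepts such earlier dates.

```python
from datetime import date

ENSO_EPOCH_START = date(1582, 10, 15)  # first day of the Gregorian calendar


def is_leap_year(d):
    """Hypothetical Gregorian leap-year check returning (result, warnings).

    The proleptic Gregorian rule is still applied to earlier dates, but a warning is
    attached because the result may not match the calendar in use at the time.
    """
    y = d.year
    result = y % 4 == 0 and (y % 100 != 0 or y % 400 == 0)
    warnings = []
    if d < ENSO_EPOCH_START:
        warnings.append(
            f"{d.isoformat()} is before the start of the Gregorian calendar "
            f"({ENSO_EPOCH_START.isoformat()})"
        )
    return result, warnings


print(is_leap_year(date(2000, 1, 1)))  # (True, [])
print(is_leap_year(date(1500, 1, 1)))  # (False, ['1500-01-01 is before ...'])
```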

1. Changes how we do monadic state: rather than a Haskell-style solution, we now have an implicit env with mutable data inside, which is better for the JVM. It also opens up the possibility of retaining state on exceptions (previously not possible); both behaviours can now be implemented.

2. Introduces permission check system for IO actions.

Most of the time, rather than defining the type of the parameter of the builtin, we want to accept every Array-like object, i.e. `Vector`, `Array`, polyglot arrays, etc.

Rather than writing out all possible combinations (which, as we have already seen, is likely to introduce bugs), one should use `CoerceArrayNode` to convert to Java's `Object[]`.

Added various test cases to illustrate the problem.

The main culprit of the Vector slowdown (compared to Array) was the normalization of the index when accessing elements. It turns out that Graal was very persistent in **not** inlining that particular fragment, and that was degrading the benchmark results.

Being unable to force it to do so (it looks like a combination of thunk execution and another layer of indirection), we resorted to simply moving the normalization into the builtin method. That makes Array and Vector perform roughly the same.

Moved all handling of invalid index into the builtin as well, simplifying the Enso implementation. This also meant that `Vector.unsafe_at` is now obsolete.

Additionally, added support for negative indices in Array, to behave in the same way as for Vector.
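The index normalization that was moved into the builtin amounts to a small piece of arithmetic. Below is a Python sketch of it; `normalize_index` is a hypothetical name, and the real code lives in the engine's Java builtin, which raises the appropriate Enso error rather than returning `None`.

```python
def normalize_index(index, length):
    """Hypothetical helper mapping a possibly negative index onto [0, length).

    Negative indices count from the end (-1 is the last element). Returns None when
    the index is out of bounds, leaving the caller to raise the appropriate error.
    """
    normalized = index + length if index < 0 else index
    if 0 <= normalized < length:
        return normalized
    return None


print(normalize_index(-1, 3))  # 2
print(normalize_index(3, 3))   # None
```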

# Important Notes

Note that this workaround only addresses this particular perf issue. I'm pretty sure we will have more of such scenarios.

Before the change, the `averageOverVector` benchmark averaged around `0.033 ms/op`; now it consistently achieves `0.016 ms/op`, similar to `averageOverArray`.

Improve `Unsupported_Argument_Types` error so that it includes the message from the original exception. `arguments` field is retained, but not included in `to_display_text` method.

- Removed `Dubious constructor export` from Examples, Geo, Google_Api, Image and Test.

- Updated Google_Api project to meet newer code standards.

- Restructured `Standard.Test`:

  - `Main.enso` now exports `Bench`, `Faker`, `Problems`, `Test`, `Test_Suite`.

  - `Test.Suite` methods moved into a `Test_Suite` type.

  - Moved `Bench.measure` into `Bench` type.

  - Separated the reporting to a `Test_Reporter` module.

  - Moved `Faker` methods into `Faker` type.

  - Removed `Verbs` and `.should` method.

  - Added `should_start_with` and `should_contain` extensions to `Any`.

- Restructured `Standard.Image`:

  - Merged Codecs methods into `Image`.

  - Export `Image`, `Read_Flag`, `Write_Flag` and `Matrix` as types from `Main.enso`.

  - Merged the internal methods into `Matrix` and `Image`.

- Fixed `Day_Of_Week` to be exported as a type and sort the `from` method.

- Reimplement the `Duration` type as a built-in type.

- `Duration` is an interop type.

- Allow Enso method dispatch on `Duration` interop coming from different languages.

# Important Notes

- The older `Duration` type should now be split into new `Duration` builtin type and a `Period` type.

- This PR does not implement `Period` type, so all the `Period`-related functionality is currently not working, e.g., `Date - Period`.

- This PR removes `Integer.milliseconds`, `Integer.seconds`, ..., `Integer.years` extension methods.

When trying to resolve an invalid method of a polyglot array we were reaching a state where no specialization applied.

Turns out we can now simplify the logic of inferring polyglot call type for arrays and avoid the crash.

- Moved `Standard.Database.connect` into `Standard.Database.Database.connect`, so one can now just write `from Standard.Database import ...`.

- Removed all `Dubious constructor export`s.

- Switched to using `project` for internal imports.

- Moved to using `Value` for private constructors and not re-exporting.

- Export types not modules from `Standard.Database`.

- Broke up `IR` into separate files (Context, Expression, From_Spec, Internal_Column, Join_Kind, Query).

- No longer use `IR.`; access goes via the specific types instead.

- Broke up `SQL` into separate files (SQL_Type and SQL_Statement).

Additionally:

- Standard.Table: Moved `storage_types` into `Storage`.

- Standard.Table: Switched to using `project` for internal imports.

- Standard.Table.Excel: Renamed modules `Range` to `Excel_Range` and `Section` to `Excel_Section`.

- `Standard.Visualisation`: Switched to using `project` for internal imports.

- `Standard.Visualisation`: Moved to using `Value` for private constructors and not re-exporting.

# Important Notes

- Have not cleared up the `Errors` yet.

- Have not switched to type pattern matching.

- Generally export types not modules from the `Standard.Table` import.

- Moved `new` and `from_rows` from the `Standard.Table` library into the `Table` type.

- Renames `Standard.Table.Data.Storage.Type` to `Standard.Table.Data.Storage.Storage`

- Removed the internal `from_columns` method.

- Removed `join` and `concat` and merged into instance methods.

- Removed `Table` and `Column` from the `Standard.Database` exports.

- Removed `Standard.Table.Data.Column.Aggregate_Column` as not used any more.

Changelog

- fix reporting of runtime type for values annotated with warning

- fix visualizations of values annotated with warnings

- fix `Runtime.get_stack_trace` failure in interactive mode

Allows using `Vector ColumnName` as a shorthand for the various table functions.

- `select_columns`, `remove_columns`,`reorder_columns`, `distinct` all map to an exact By_Name match.

- `rename_columns` does a positional rename on the Vector passed.

- `order_by` sorts ascending on each column passed in order.

# Important Notes

This may be reversed once widgets are available and working but this makes the APIs much more usable in current UI.

This change brings by-type pattern matching to Enso.

One can pattern match on Enso types as well as on polyglot types.

For example,

```
case x of
    _ : Integer -> ...
    _ : Text -> ...
    _ -> ...
```

as well as on Java types:

```
case y of
    _ : ArrayList -> ...
    _ : List -> ...
    _ : AbstractList -> ...
    _ -> ...
```

It is no longer possible to match a value with a corresponding type constructor.

For example,

```
case Date.now of
    Date -> ...
```

will no longer match, and one should match on the type (`_ : Date`) instead.

```
case Date of
    Date -> ...
```

is fine though, as requested in the ticket.

The change required further changes to `type_of` logic which wasn't dealing well with polyglot values.

Implements https://www.pivotaltracker.com/story/show/183188846

# Important Notes

~I discovered late in the game that nested patterns involving type patterns, such as `Const (f : Foo) tail -> ...` are not possible due to the old parser logic.

I would prefer to add it in a separate PR because this one is already getting quite large.~ This is now supported!

Implements https://www.pivotaltracker.com/story/show/183402892

# Important Notes

- Fixes inconsistent `compare_to` vs `==` behaviour in date/time types and adds test for that.

- Adds test for `Table.order_by` on dates and custom types.

- Fixes an issue with `Table.order_by` for custom types.

- Unifies how incomparable objects are reported by `Table.order_by` and `Vector.sort`.

- Adds benchmarks comparing `Table.order_by` and `Vector.sort` performance.

Makes statics static. A type and its instances have different methods defined on them, as it should be. Constructors are now scoped in types, and can be imported/exported.

# Important Notes

The method of fixing stdlib chosen here is to just not. All the conses are exported to make all old code work. All such instances are marked with `TODO Dubious constructor export` so that it can be found and fixed.

This change implements a simple `type_of` method that returns a type of a given value, including for polyglot objects.

The change also allows for pattern matching on various time-related instances. It is a nice-to-have on its own, but it was primarily needed here to write some tests. For equality checks on types we currently can't use `==` due to a known _feature_ which essentially does wrong dispatching. This will be improved in the upcoming statics PR so we agreed that there is no point in duplicating that work and we can replace it later.

Also, note that this PR changes `Meta.is_same_object`. Comparing types revealed that it was wrong when comparing polyglot wrappers over the same value.

Use an `ArraySlice` to slice `Vector`.

Avoids memory copying for the slice function.
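The idea behind slicing via a view can be sketched in Python: the slice records an offset and length over the original storage instead of copying elements. `ArraySlice` here is a hypothetical Python class modelled on the description, not the engine's Java implementation.

```python
class ArraySlice:
    """Hypothetical read-only view over a contiguous range of another sequence, without copying.

    Assumes 0 <= start <= end <= len(storage). Slicing a slice composes offsets, so
    nested slices still point directly at the original storage.
    """

    def __init__(self, storage, start, end):
        if isinstance(storage, ArraySlice):
            # Re-base onto the underlying storage instead of wrapping a wrapper.
            start, end = storage._start + start, storage._start + end
            storage = storage._storage
        self._storage = storage
        self._start = start
        self._end = end

    def __len__(self):
        return self._end - self._start

    def __getitem__(self, index):
        if not 0 <= index < len(self):
            raise IndexError(index)
        return self._storage[self._start + index]


data = list(range(10))
s = ArraySlice(data, 2, 8)   # view of data[2:8]
t = ArraySlice(s, 1, 3)      # view of data[3:5], still backed by `data`
print(list(t))               # [3, 4]
data[3] = 99                 # no copy was made, so the view sees the change
print(t[0])                  # 99
```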

# Important Notes

| Test | Ref | New |
| --- | --- | --- |
| New Vector | 71.9 | 71.0 |
| Append Single | 26.0 | 27.7 |
| Append Large | 15.1 | 14.9 |
| Sum | 156.4 | 165.8 |
| Drop First 20 and Sum | 171.2 | 165.3 |
| Drop Last 20 and Sum | 170.7 | 163.0 |
| Filter | 76.9 | 76.9 |
| Filter With Index | 166.3 | 168.3 |
| Partition | 278.5 | 273.8 |
| Partition With Index | 392.0 | 393.7 |
| Each | 101.9 | 102.7 |

- Note: New and Append have become slower compared with previous test runs.

Implements https://www.pivotaltracker.com/story/show/183082087

# Important Notes

- Removed unnecessary invocations of `Error.throw` improving performance of `Vector.distinct`. The time of the `add_work_days and work_days_until should be consistent with each other` test suite came down from 15s to 3s after the changes.

Repairing the constructor names following the types work. Some general tidying up as well.

- Remove `Standard.Database.Data.Column.Aggregate_Column_Builder`.

- Remove `Standard.Database.Data.Dialect.Dialect.Dialect_Data`.

- Remove unused imports and update some type definitions.

- Rename `Postgres.Postgres_Data` => `Postgres_Options.Postgres`.

- Rename `Redshift.Redshift_Data` => `Redshift_Options.Redshift`.

- Rename `SQLite.SQLite_Data` => `SQLite_Options.SQLite`.

- Rename `Credentials.Credentials_Data` => `Credentials.Username_And_Password`.

- Rename `Sql` to `SQL` across the board.

- Merge `Standard.Database.Data.Internal` into `Standard.Database.Internal`.

- Move dialects into `Internal` and merge the function in `Helpers` into `Base_Generator`.

It turns out that for a two-part import we had special code that would a) add the `Main` submodule and b) add an explicit rename.

b) is problematic because sometimes we only want to import specific names.

For example,

```
from Bar.Foo import Bar, Baz
```

would be translated to

```
from Bar.Foo.Main as Foo import Bar, Baz
```

whereas it should only be translated to

```
from Bar.Foo.Main import Bar, Baz
```

This change detects this scenario and does not add renames in that case.
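The rule can be sketched as a string rewrite in Python. This is a simplified, hypothetical model: the compiler works on IR rather than text, only the two simple statement shapes shown are handled, and the rename target (the last name component) is inferred from the example above.

```python
def desugar_import(statement):
    """Hypothetical rewrite of two-part imports to the package's `Main` module.

    import A.B              ->  import A.B.Main as B       (rename keeps `B` usable)
    from A.B import X, Y    ->  from A.B.Main import X, Y   (no rename: only X, Y wanted)
    Any other statement is returned unchanged.
    """
    if statement.startswith("from "):
        module, sep, names = statement[len("from "):].partition(" import ")
        if sep and " " not in module and len(module.split(".")) == 2:
            return f"from {module}.Main import {names}"
        return statement
    if statement.startswith("import "):
        module = statement[len("import "):]
        parts = module.split(".")
        if " " not in module and len(parts) == 2:
            return f"import {module}.Main as {parts[1]}"
    return statement


print(desugar_import("from Bar.Foo import Bar, Baz"))  # from Bar.Foo.Main import Bar, Baz
print(desugar_import("import Standard.Base"))          # import Standard.Base.Main as Base
```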

Fixes [183276486](https://www.pivotaltracker.com/story/show/183276486).

Resolves https://www.pivotaltracker.com/story/show/183285801

@JaroslavTulach suggested the current implementation may not handle these correctly, which suggests that the logic is not completely trivial - so I added a test to ensure that it works as we'd expect. Fortunately, it did work - but it's good to keep the tests to avoid regressions.

Changes following Marcin's work. This should bring the public API back to something very similar to before.

- Add an "interface" type: `Standard.Base.System.File_Format.File_Format`.

- All `File_Format` types now have a `can_read` method to decide if they can read a file.

- Move `Standard.Table.IO.File_Format.Text.Text_Data` to `Standard.Base.System.File_Format.Plain_Text_Format.Plain_Text`.

- Move `Standard.Table.IO.File_Format.Bytes` to `Standard.Base.System.File_Format.Bytes`.

- Move `Standard.Table.IO.File_Format.Infer` to `Standard.Base.System.File_Format.Infer`. **(doesn't belong here...)**

- Move `Standard.Table.IO.File_Format.Unsupported_File_Type` to `Standard.Base.Error.Common.Unsupported_File_Type`.

- Add `Infer`, `File_Format`, `Bytes`, `Plain_Text`, `Plain_Text_Format` to `Standard.Base` exports.

- Fold extension methods of `Standard.Base.Meta.Unresolved_Symbol` into type.

- Move `Standard.Table.IO.File_Format.Auto` to `Standard.Table.IO.Auto_Detect.Auto_Detect`.

- Added a `types` Vector of all the built-in formats.

- `Auto_Detect` asks each type whether it `can_read` a file.

- Broke up and moved `Standard.Table.IO.Excel` into `Standard.Table.Excel`:
  - Moved `Standard.Table.IO.File_Format.Excel.Excel_Data` to `Standard.Table.Excel.Excel_Format.Excel_Format.Excel`.
  - Renamed `Sheet` to `Worksheet`.
  - Internal `Reader` and `Writer` types provide the actual read and write methods.

- Created `Standard.Table.Delimited` with a structure similar to `Standard.Table.Excel`:
  - Moved `Standard.Table.IO.File_Format.Delimited.Delimited_Data` to `Standard.Table.Delimited.Delimited_Format.Delimited_Format.Delimited`.
  - Moved `Standard.Table.IO.Quote_Style` to `Standard.Table.Delimited.Quote_Style`.
  - Moved the internal `Reader` and `Writer` types here and renamed their methods to have unique names.

- Add `Aggregate_Column`, `Auto_Detect`, `Delimited`, `Delimited_Format`, `Excel`, `Excel_Format`, `Sheet_Names`, `Range_Names`, `Worksheet` and `Cell_Range` to `Standard.Table` exports.
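A minimal Python sketch of `can_read`-based detection, assuming a first-match-wins scan over a list playing the role of the `types` Vector. The class names and the extension checks are hypothetical stand-ins; the real formats may inspect more than the file extension.

```python
from abc import ABC, abstractmethod
from pathlib import Path
from typing import List, Sequence


class UnsupportedFileType(Exception):
    """Analogue of `Unsupported_File_Type`: no format could read the file."""


class FileFormat(ABC):
    """Analogue of the `File_Format` "interface" type."""

    @abstractmethod
    def can_read(self, path: Path) -> bool:
        """Return True if this format thinks it can read `path`."""


class ExcelFormat(FileFormat):
    def can_read(self, path: Path) -> bool:
        return path.suffix.lower() in {".xls", ".xlsx"}


class DelimitedFormat(FileFormat):
    def can_read(self, path: Path) -> bool:
        return path.suffix.lower() in {".csv", ".tsv"}


class PlainTextFormat(FileFormat):
    def can_read(self, path: Path) -> bool:
        return path.suffix.lower() in {".txt", ".log"}


# Plays the role of the `types` Vector (order matters: the first match wins).
TYPES: List[FileFormat] = [ExcelFormat(), DelimitedFormat(), PlainTextFormat()]


def auto_detect(path: Path, types: Sequence[FileFormat] = TYPES) -> FileFormat:
    """Ask each format in turn whether it `can_read` the file."""
    for fmt in types:
        if fmt.can_read(path):
            return fmt
    raise UnsupportedFileType(path.name)
```

Keeping the decision inside each format means adding a new format only requires implementing `can_read` and registering it, with no changes to the detection loop.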

The `Vector` type is now a builtin type. This requires several additional builtin methods for creating it:

- Use `Vector.from_array` to convert any array-like structure into a `Vector` [by copy](f628b28f5f)

- Use (already existing) `Vector.from_polyglot_array` to convert any array-like structure into a `Vector` **without** copying

- Use (already existing) `Vector.fill 1 item` to create a singleton `Vector`
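The practical difference between the first two conversions is copy versus wrap. The toy Python class below only mirrors the method names to illustrate those semantics; it is not Enso's builtin `Vector`.

```python
from typing import Any, Iterable, Sequence


class Vector:
    """Toy read-only vector illustrating copy vs. no-copy construction."""

    def __init__(self, storage: Sequence[Any]):
        self._storage = storage

    @classmethod
    def from_array(cls, array: Iterable[Any]) -> "Vector":
        # Copy: later changes to `array` are not visible through the Vector.
        return cls(list(array))

    @classmethod
    def from_polyglot_array(cls, array: Sequence[Any]) -> "Vector":
        # No copy: the Vector is a view over the original storage.
        return cls(array)

    @classmethod
    def fill(cls, length: int, item: Any) -> "Vector":
        return cls([item] * length)

    def __len__(self) -> int:
        return len(self._storage)

    def __getitem__(self, index: int) -> Any:
        return self._storage[index]


source = [1, 2, 3]
copied = Vector.from_array(source)
wrapped = Vector.from_polyglot_array(source)
source[0] = 99
print(copied[0], wrapped[0])  # 1 99
```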

Additionally, for pattern-matching purposes, we had to implement a `VectorBranchNode`. Use the following to match on `x` being an instance of the `Vector` type:

```
import Standard.Base.Data.Vector

size = case x of
    Vector.Vector -> x.length
    _ -> 0
```

Finally, the `VectorLiterals` pass, which transforms `[1,2,3]` into (roughly)

```
a1 = 1
a2 = 2
a3 = 3
Vector (Array (a1, a2, a3))
```

had to be modified to generate

```
a1 = 1
a2 = 2
a3 = 3
Vector.from_array (Array (a1, a2, a3))
```

instead, to accommodate the API changes. As of 025acaa676, all known CI checks pass. Let's start the review.
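The shape of the rewritten code can be reproduced with a small generator. This Python sketch only builds the text of the `Vector.from_array` form; it is not the actual `VectorLiterals` pass, and the function name is hypothetical.

```python
from typing import Sequence


def desugar_vector_literal(elements: Sequence[str]) -> str:
    """Build the `Vector.from_array` form for a literal such as `[1,2,3]`."""
    names = [f"a{i}" for i in range(1, len(elements) + 1)]
    # One binding per element, in source order.
    bindings = [f"{name} = {expr}" for name, expr in zip(names, elements)]
    # Then wrap the bound names in an Array and hand it to `Vector.from_array`.
    return "\n".join(bindings + [f"Vector.from_array (Array ({', '.join(names)}))"])


print(desugar_vector_literal(["1", "2", "3"]))
```

For `["1", "2", "3"]` this prints the four lines `a1 = 1`, `a2 = 2`, `a3 = 3` and `Vector.from_array (Array (a1, a2, a3))`.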

# Important Notes

Matching in a `case` statement is currently done via `Vector_Data`. Use:

```
case x of
    Vector.Vector_Data -> True
```

until a better alternative is found.

Small clean-up PR.

- Aligns a few type signatures with their functions.

- Some formatting fixes.

- Remove a few unused types.

- Make error extension functions into standard methods.

- Added `databases`, `database`, `set_database`.

- Added `schemas`, `schema`, `set_schema`.

- Added `table_types`.

- Added `tables`.

- Moved the vast majority of the connection work into a lower level `JDBC_Connection` object.

- `Connection` represents the standard API for database connections and provides a base JDBC implementation.

- `SQLite_Connection` has the `Connection` API but with custom `databases` and `schemas` methods for SQLite.

- `Postgres_Connection` has the `Connection` API but with custom `set_database`, `databases`, `set_schema` and `schemas` methods for Postgres.

- Updated `Redshift` (no public API change).
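The layering above (a low-level connection object, a standard `Connection` API with a generic implementation, and backend subclasses overriding only what differs) can be sketched in Python with the standard `sqlite3` module. All class names here are hypothetical analogues, and the generic `information_schema` query is an assumption standing in for the real JDBC-based implementation.

```python
import sqlite3
from typing import Any, List, Sequence, Tuple


class LowLevelConnection:
    """Stand-in for `JDBC_Connection`: owns the raw connection and runs SQL."""

    def __init__(self, raw: sqlite3.Connection):
        self.raw = raw

    def query(self, sql: str, params: Sequence[Any] = ()) -> List[Tuple[Any, ...]]:
        return self.raw.execute(sql, params).fetchall()


class Connection:
    """Standard API; the generic implementation delegates to the low-level object."""

    def __init__(self, low: LowLevelConnection):
        self.low = low

    def databases(self) -> List[str]:
        # Generic version, assuming an engine that exposes `information_schema`.
        rows = self.low.query("SELECT DISTINCT catalog_name FROM information_schema.schemata")
        return [row[0] for row in rows]

    def execute(self, sql: str) -> List[Tuple[Any, ...]]:
        return self.low.query(sql)


class SQLiteConnection(Connection):
    """Same API, but SQLite has no `information_schema`, so `databases` is overridden."""

    def databases(self) -> List[str]:
        # PRAGMA database_list returns rows of (seq, name, file).
        return [row[1] for row in self.low.query("PRAGMA database_list")]
```

Only `databases` differs per backend; everything else (here, `execute`) is inherited unchanged, which is the point of pushing the shared work down into the low-level object.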

Implements https://www.pivotaltracker.com/story/show/182307143

# Important Notes

- Modified the dependencies of the standard library Java helpers so that the `std-table` module depends on `std-base` as a _provided_ dependency. This is allowed because `std-table` is used by the `Standard.Table` Enso module, which depends on `Standard.Base`; that dependency ensures `std-base` is already on the classpath whenever `Standard.Table` loads `std-table`. We can therefore rely on classes from `std-base` and its dependencies being _provided_ on the classpath, which lets us reuse utilities like `Text_Utils` in `std-table` instead of duplicating code. An additional advantage is that we no longer need to declare ICU4J as a separate dependency of `std-table`, since it comes from `std-base`, so we avoid including it in our build packages twice.

Many aggregation types fell back to the general `Any` type where they could have used the type of the input column. For example, `First` of a column of integers is guaranteed to fit the `Integer` storage type, so it doesn't need to fall back to `Any`. This PR fixes that and adds a test that checks it.
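The idea can be sketched as a result-type function in Python. The aggregation names are modelled on `Aggregate_Column` constructors, but which ones are value-preserving in the actual code is an assumption here, as are the enum and function names.

```python
from enum import Enum


class StorageType(Enum):
    INTEGER = "Integer"
    DECIMAL = "Decimal"
    TEXT = "Text"
    ANY = "Any"


# Aggregations whose result is always one of the input values (assumed set).
VALUE_PRESERVING = {"Group_By", "First", "Last", "Minimum", "Maximum", "Mode"}
# Aggregations that always produce a count.
COUNTING = {"Count", "Count_Distinct", "Count_Nothing", "Count_Not_Nothing"}


def result_storage(aggregation: str, input_type: StorageType) -> StorageType:
    """Pick the narrowest storage type the aggregation result is guaranteed to fit."""
    if aggregation in VALUE_PRESERVING:
        # e.g. `First` of an Integer column can only ever return an Integer.
        return input_type
    if aggregation in COUNTING:
        return StorageType.INTEGER
    # Anything not modelled here keeps the old, general fallback.
    return StorageType.ANY
```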

This is a step towards the new language spec. The `type` keyword now means something. So we now have

```
type Maybe a
    Some (from_some : a)
    None
```

as a thing one may write. Also, `Some` and `None` are no longer standalone types; only `Maybe` is.

This is halfway to static methods: we still allow things like `Number + Number` for backwards compatibility. This will disappear in the next PR.

The concept of a type is now used for method dispatch, which has a large impact on interpreter code density.

Some APIs in the stdlib may require rethinking. I take it this is going to be up to the libraries team, since some choices are not as good with a semantically different language. I've tried to update the stdlib with minimal changes, to make sure it still works as it did.

It is worth mentioning the conflicting constructor name convention I've used: if `Foo` only has one constructor, previously named `Foo`, we now have:

```
type Foo
    Foo_Data f1 f2 f3
```

This is now necessary, because we still don't have proper statics. When they arrive, this can be changed (quite easily, with SED) to use them, and figure out the actual convention then.

I have also reworked large parts of the builtins system, because it did not work at all with the new concepts.

It also exposes the type variants in SuggestionBuilder, which was the original tiny PR this one was based on.

PS I'm so sorry for the size of this. No idea how this could have been smaller. It's a breaking language change after all.

- Added `Zone`, `Date_Time` and `Time_Of_Day` to `Standard.Base`.

- Renamed `Zone` to `Time_Zone`.

- Added `century`.

- Added `is_leap_year`.

- Added `length_of_year`.

- Added `length_of_month`.

- Added `quarter`.

- Added `day_of_year`.

- Added `Day_Of_Week` type and `day_of_week` function.

- Updated `week_of_year` to support ISO.
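For reference, the calendar arithmetic behind several of these functions can be sketched with Python's standard library. These are illustrative equivalents, not Enso's implementations, and the function names are hypothetical; `century` is left out because its exact convention isn't stated here.

```python
import calendar
import datetime as dt


def is_leap_year(d: dt.date) -> bool:
    return calendar.isleap(d.year)


def length_of_year(d: dt.date) -> int:
    return 366 if is_leap_year(d) else 365


def length_of_month(d: dt.date) -> int:
    # monthrange returns (weekday of the 1st, number of days in the month).
    return calendar.monthrange(d.year, d.month)[1]


def quarter(d: dt.date) -> int:
    return (d.month - 1) // 3 + 1


def day_of_year(d: dt.date) -> int:
    return d.timetuple().tm_yday


def iso_week_of_year(d: dt.date) -> int:
    # ISO 8601: weeks start on Monday; week 1 contains the year's first Thursday.
    return d.isocalendar()[1]


def iso_day_of_week(d: dt.date) -> int:
    # Monday = 1 ... Sunday = 7.
    return d.isoweekday()


d = dt.date(2024, 2, 29)
print(is_leap_year(d), length_of_year(d), length_of_month(d), quarter(d), day_of_year(d))
# True 366 29 1 60
```

The ISO default matters near year boundaries: 1 January 2021 falls in ISO week 53 of 2020, not in week 1.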

# Important Notes

- Had to pass locale to formatter for date/time tests to work on my PC.

- Changed default of `week_of_year` to use ISO.

Implements https://www.pivotaltracker.com/story/show/182879865

# Important Notes

Note that removing `set_at` still does not make our arrays fully immutable - `Array.copy` can still be used to mutate them.

* Builtin Date_Time, Time_Of_Day, Zone

Improved polyglot support for Date_Time (formerly Time), Time_Of_Day and Zone. This follows the pattern introduced for Enso Date.

Minor caveat: in the tests for Date, I had to make quite a few accommodations for JS Date to pass. This is because JS Date is not really only a Date, but also a Time and a Timezone; previously we just didn't consider the latter.

Also, JS Date does not deal well with setting timezones, so the trick I used is to first call a foreign function returning a polyglot JS Date, which is converted to ZonedDateTime, and only then set the correct timezone. That way none of the existing tests had to be changed or special-cased.

Additionally, JS deals with milliseconds rather than nanoseconds, so there is a loss in precision, as noted in Time_Spec.
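To make the precision loss concrete: a round trip through a millisecond-based JS Date keeps only the first three digits of the fraction of a second. The value below is an arbitrary example.

```python
NANOS_PER_MILLI = 1_000_000

nanos = 123_456_789                        # nanosecond-of-second held by an Enso/Java time value
millis = nanos // NANOS_PER_MILLI          # what a millisecond-based JS Date can store
restored = millis * NANOS_PER_MILLI        # value seen after converting back
print(millis, restored, nanos - restored)  # 123 123000000 456789
```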

* Add tests for Java's LocalTime

* changelog

* Make date formatters in table happy

* PR review, add more tests for zone

* More tests and fixed a bug in column reader

Column reader didn't take the timezone into account, which was a mistake, since then it wouldn't map to Enso's Date_Time. Added tests that check it now.

* remove redundant conversion

* Update distribution/lib/Standard/Base/0.0.0-dev/src/Data/Time.enso

Co-authored-by: Radosław Waśko <radoslaw.wasko@enso.org>

* First round of addressing PR review

* don't leak java exceptions in Zone

* Move Date_Time to top-level module

* PR review

Co-authored-by: Radosław Waśko <radoslaw.wasko@enso.org>

Co-authored-by: Jaroslav Tulach <jaroslav.tulach@enso.org>

Use Proxy_Polyglot_Array as a proxy for polyglot arrays, thus unifying the way the underlying array is accessed in Vector.

Used the opportunity to clean up builtin lookup, which now actually respects what is defined in the body of the @Builtin_Method annotation.

Also discovered that polyglot null values (in JS, Python and R) were leaking to Enso.

Fixed that by doing explicit translation to `Nothing`.

https://www.pivotaltracker.com/story/show/181123986

First of all, this PR demonstrates how to implement _lazy visualization_:

- one needs to write or enhance Enso visualization libraries: this PR adds two optional parameters (`bounds` and `limit`) to the `process_to_json_text` function.

- `process_to_json_text` can be tested with the standard Enso test harness, which this PR also does

- then one has to modify JavaScript on the IDE side to construct `setPreprocessor` expression using the optional parameters

The idea of _scatter plot lazy visualization_ is to limit the number of points the IDE requests. Initially the limit is set to `limit=1024`. `Scatter_Plot.enso` then processes the data and selects or generates a subset of `limit` points. Right now it includes the `min` and `max` points on both the `x` and `y` axes, plus randomly chosen points up to the `limit`.

The D3 visualization widget is capable of _zooming in_. When that happens, the JavaScript widget composes a new expression with `bounds` set to the newly visible area. Once `setPreprocessor` is called, the engine recomputes the visualization data, filters out any data outside the `bounds`, and selects another `limit` points from the new data. The IDE visualization then updates itself to display this more detailed data. Users can zoom in down to the smallest detail, where the number of points drops below `limit`, or select _Fit all_ to see all the data without any `bounds`.
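The filtering and sampling step can be sketched in Python. This is a re-expression for illustration, not the code in `Scatter_Plot.enso`; the function name, the `bounds` tuple layout `(min_x, min_y, max_x, max_y)` and the `rng` parameter are assumptions, and only the default `limit=1024` comes from the text.

```python
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
# Hypothetical layout for the visible area: (min_x, min_y, max_x, max_y).
Bounds = Tuple[float, float, float, float]


def sample_points(
    points: Sequence[Point],
    limit: int = 1024,
    bounds: Optional[Bounds] = None,
    rng: Optional[random.Random] = None,
) -> List[Point]:
    """Keep points inside `bounds`, then reduce them to about `limit` points."""
    rng = rng or random.Random()
    if bounds is not None:
        min_x, min_y, max_x, max_y = bounds
        points = [p for p in points if min_x <= p[0] <= max_x and min_y <= p[1] <= max_y]
    if len(points) <= limit:
        return list(points)

    indices = range(len(points))
    # Always keep the extreme points on both axes (by index, so duplicates are safe).
    keep = {
        min(indices, key=lambda i: points[i][0]),
        max(indices, key=lambda i: points[i][0]),
        min(indices, key=lambda i: points[i][1]),
        max(indices, key=lambda i: points[i][1]),
    }
    # Fill the remaining budget with randomly chosen points.
    rest = [i for i in indices if i not in keep]
    keep.update(rng.sample(rest, max(0, limit - len(keep))))
    return [points[i] for i in sorted(keep)]
```

With a very small `limit` (below 4), the extremes alone can exceed it; the sketch keeps them anyway so the axis ranges stay correct.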

# Important Notes

Randomly selecting `limit` samples from the dataset may be misleading. Implementing _k-means clustering_ (with `k=limit`) would probably produce a more representative approximation.

- Removed various unnecessary `Standard.Base` imports still left behind.

- Added `Regex` to default `Standard.Base`.

- Removed aliasing from the examples as it is no longer needed (case coercion no longer occurs).